Revolutionizing Specialized AI Training: Meet LMFlow, a Promising Toolkit to Efficiently Fine-Tune and Personalize Large Foundation Models for Superior Performance

Large language models (LLMs) built on top of large foundation models have shown a general ability to perform tasks that were previously impossible. However, further finetuning of such LLMs is needed to improve performance on specialized domains or tasks. Common procedures for finetuning such large models include:

- Continued pretraining on niche domains, allowing a broad base model to pick up expertise in those domains.

- Instruction tuning, which trains a large, general-purpose base model to understand and carry out certain types of natural-language instructions.

- Training a large foundation model to acquire the necessary conversational abilities using RLHF (reinforcement learning with human feedback).
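
To make the difference between the first two procedures concrete, here is a minimal sketch of how training examples might be prepared. Continued pretraining treats every token of raw domain text as a prediction target, while instruction tuning is commonly set up so that only the response tokens contribute to the loss. The prompt template, the whitespace tokenizer, and the names `Example`, `pretraining_example`, and `instruction_example` are illustrative assumptions, not LMFlow's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    tokens: List[str]     # toy whitespace tokens; real systems use subword tokenizers
    loss_mask: List[int]  # 1 = token contributes to the loss, 0 = ignored


def pretraining_example(text: str) -> Example:
    """Continued pretraining: every token of the domain text is a target."""
    tokens = text.split()
    return Example(tokens, [1] * len(tokens))


def instruction_example(instruction: str, response: str) -> Example:
    """Instruction tuning: the prompt is context only, the response is the target."""
    # Hypothetical template; each project defines its own.
    prompt = f"Instruction: {instruction} Response:".split()
    answer = response.split()
    return Example(prompt + answer, [0] * len(prompt) + [1] * len(answer))


if __name__ == "__main__":
    print(pretraining_example("Aspirin inhibits platelet aggregation."))
    print(instruction_example("Translate to French: cat", "chat"))
```

Masking the prompt is a design choice rather than a requirement: it keeps the model from being trained to reproduce the instructions themselves.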

While several large models have already been pretrained and released to the public (GPT-J, Bloom, LLaMA, etc.), no publicly available toolbox can efficiently carry out finetuning operations across all of these models.

To help developers and researchers finetune and run inference on large models efficiently with constrained resources, a team of academics from Hong Kong University and Princeton University has created an easy-to-use, lightweight toolkit.

One Nvidia 3090 GPU and five hours are all it takes to train a custom model based on a 7-billion-parameter LLaMA model. Using this framework, the team has finetuned versions of LLaMA with 7, 13, 33, and 65 billion parameters on a single machine and has released the model weights for academic research.
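
As a rough sanity check on why model size matters for single-GPU work, the sketch below estimates the memory needed just to hold the weights at each LLaMA size. The 2 bytes per parameter assumes 16-bit weights, and 24 GB is the memory of an RTX 3090. The article does not state which precision or memory-saving techniques LMFlow uses, so treat this as back-of-the-envelope arithmetic only.

```python
GPU_MEMORY_GB = 24  # memory of one Nvidia RTX 3090


def weight_memory_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    Excludes gradients, optimizer state, and activations, which
    add substantially to the total during training.
    """
    return params_billions * bytes_per_param


if __name__ == "__main__":
    for size in (7, 13, 33, 65):
        gb = weight_memory_gb(size)
        verdict = "fits" if gb <= GPU_MEMORY_GB else "does not fit"
        print(f"{size}B parameters: {gb:.0f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")
```

Under these assumptions only the 7B weights fit on a single 24 GB card, which is consistent with the 7B model being the one quoted for a single 3090.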

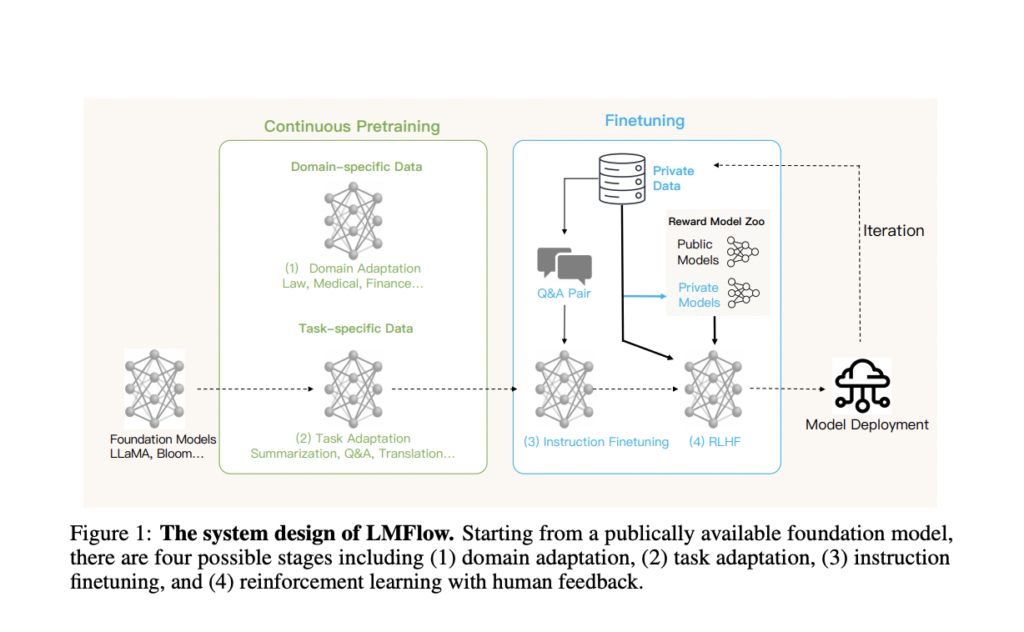

There are four steps to optimizing the output of a freely available large language model:

- The first step, "domain adaptation," involves training the model on a particular domain so that it handles that domain better.

- The second step, task adaptation, involves training the model to accomplish a specific goal, such as summarization, question answering, or translation.

- The third step, instruction finetuning, adjusts the model's parameters based on instructional question-answer pairs.

- The last step is reinforcement learning from human feedback, which refines the model based on people's preferences.
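
The last step turns human opinions into a training signal. One common formulation, shown here as a minimal sketch, trains a reward model on pairs of responses where a person preferred one over the other, using a pairwise logistic (Bradley-Terry) loss. The article does not say which RLHF method LMFlow implements, so the function below (with the hypothetical name `preference_loss`) illustrates the general idea and is not LMFlow's code.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response higher than the rejected one, and large otherwise.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) = log(1 + exp(-m)); branch to avoid overflow in exp.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    print(preference_loss(2.0, 0.0))  # reward model agrees with the human
    print(preference_loss(0.0, 0.0))  # reward model is undecided
    print(preference_loss(0.0, 2.0))  # reward model disagrees with the human
```

A reward model trained this way can then score new outputs, and those scores guide further updates to the language model.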

LMFlow offers a complete finetuning procedure for these four steps, allowing personalized training of large language models despite constrained computational resources.

LMFlow offers a thorough finetuning approach for large models, with features such as continuous pretraining, instruction tuning, and RLHF, as well as simple and flexible APIs. With LMFlow, personalized model training is accessible to everyone. For activities such as question answering, companionship, writing, translation, and expert consultation in various fields, each person can pick a suitable model based on their available resources. Users with a large enough model and dataset will get better results by training for longer. The team reports that it has recently trained a 33B model that outperforms ChatGPT.


Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone's life easier in today's evolving world.