How Gentle & Surprise constructed a predictive upkeep answer for gaming machines on AWS

This publish is co-written with Aruna Abeyakoon and Denisse Colin from Gentle and Surprise (L&W).

Headquartered in Las Vegas, Light & Wonder, Inc. is the main cross-platform world recreation firm that gives playing services and products. Working with AWS, Gentle & Surprise not too long ago developed an industry-first safe answer, Gentle & Surprise Join (LnW Join), to stream telemetry and machine well being knowledge from roughly half one million digital gaming machines distributed throughout its on line casino buyer base globally when LnW Join reaches its full potential. Over 500 machine occasions are monitored in near-real time to provide a full image of machine circumstances and their working environments. Using knowledge streamed via LnW Join, L&W goals to create higher gaming expertise for his or her end-users in addition to convey extra worth to their on line casino clients.

Gentle & Surprise teamed up with the Amazon ML Solutions Lab to make use of occasions knowledge streamed from LnW Hook up with allow machine studying (ML)-powered predictive upkeep for slot machines. Predictive upkeep is a typical ML use case for companies with bodily tools or equipment property. With predictive upkeep, L&W can get superior warning of machine breakdowns and proactively dispatch a service crew to examine the difficulty. It will scale back machine downtime and keep away from important income loss for casinos. With no distant diagnostic system in place, subject decision by the Gentle & Surprise service crew on the on line casino ground may be expensive and inefficient, whereas severely degrading the shopper gaming expertise.

The character of the undertaking is extremely exploratory—that is the primary try at predictive upkeep within the gaming {industry}. The Amazon ML Options Lab and L&W crew launched into an end-to-end journey from formulating the ML drawback and defining the analysis metrics, to delivering a high-quality answer. The ultimate ML mannequin combines CNN and Transformer, that are the state-of-the-art neural community architectures for modeling sequential machine log knowledge. The publish presents an in depth description of this journey, and we hope you’ll take pleasure in it as a lot as we do!

On this publish, we talk about the next:

- How we formulated the predictive upkeep drawback as an ML drawback with a set of acceptable metrics for analysis

- How we ready knowledge for coaching and testing

- Information preprocessing and have engineering methods we employed to acquire performant fashions

- Performing a hyperparameter tuning step with Amazon SageMaker Automatic Model Tuning

- Comparisons between the baseline mannequin and the ultimate CNN+Transformer mannequin

- Further methods we used to enhance mannequin efficiency, comparable to ensembling

Background

On this part, we talk about the problems that necessitated this answer.

Dataset

Slot machine environments are extremely regulated and are deployed in an air-gapped setting. In LnW Join, an encryption course of was designed to supply a safe and dependable mechanism for the information to be introduced into an AWS knowledge lake for predictive modeling. The aggregated information are encrypted and the decryption key’s solely out there in AWS Key Management Service (AWS KMS). A cellular-based non-public community into AWS is about up via which the information have been uploaded into Amazon Simple Storage Service (Amazon S3).

LnW Join streams a variety of machine occasions, comparable to begin of recreation, finish of recreation, and extra. The system collects over 500 several types of occasions. As proven within the following

, every occasion is recorded together with a timestamp of when it occurred and the ID of the machine recording the occasion. LnW Join additionally information when a machine enters a non-playable state, and it will likely be marked as a machine failure or breakdown if it doesn’t get well to a playable state inside a sufficiently quick time span.

| Machine ID | Occasion Kind ID | Timestamp |

|---|---|---|

| 0 | E1 | 2022-01-01 00:17:24 |

| 0 | E3 | 2022-01-01 00:17:29 |

| 1000 | E4 | 2022-01-01 00:17:33 |

| 114 | E234 | 2022-01-01 00:17:34 |

| 222 | E100 | 2022-01-01 00:17:37 |

Along with dynamic machine occasions, static metadata about every machine can also be out there. This consists of info comparable to machine distinctive identifier, cupboard sort, location, working system, software program model, recreation theme, and extra, as proven within the following desk. (All of the names within the desk are anonymized to guard buyer info.)

| Machine ID | Cupboard Kind | OS | Location | Sport Theme |

|---|---|---|---|---|

| 276 | A | OS_Ver0 | AA Resort & On line casino | StormMaiden |

| 167 | B | OS_Ver1 | BB On line casino, Resort & Spa | UHMLIndia |

| 13 | C | OS_Ver0 | CC On line casino & Resort | TerrificTiger |

| 307 | D | OS_Ver0 | DD On line casino Resort | NeptunesRealm |

| 70 | E | OS_Ver0 | EE Resort & On line casino | RLPMealTicket |

Drawback definition

We deal with the predictive upkeep drawback for slot machines as a binary classification drawback. The ML mannequin takes within the historic sequence of machine occasions and different metadata and predicts whether or not a machine will encounter a failure in a 6-hour future time window. If a machine will break down inside 6 hours, it’s deemed a high-priority machine for upkeep. In any other case, it’s low precedence. The next determine provides examples of low-priority (prime) and high-priority (backside) samples. We use a fixed-length look-back time window to gather historic machine occasion knowledge for prediction. Experiments present that longer look-back time home windows enhance mannequin efficiency considerably (extra particulars later on this publish).

Modeling challenges

We confronted a few challenges fixing this drawback:

- We’ve got an enormous quantity occasion logs that comprise round 50 million occasions a month (from roughly 1,000 recreation samples). Cautious optimization is required within the knowledge extraction and preprocessing stage.

- Occasion sequence modeling was difficult as a result of extraordinarily uneven distribution of occasions over time. A 3-hour window can comprise anyplace from tens to hundreds of occasions.

- Machines are in a very good state more often than not and the high-priority upkeep is a uncommon class, which launched a category imbalance subject.

- New machines are added repeatedly to the system, so we had to ensure our mannequin can deal with prediction on new machines which have by no means been seen in coaching.

Information preprocessing and have engineering

On this part, we talk about our strategies for knowledge preparation and have engineering.

Characteristic engineering

Slot machine feeds are streams of unequally spaced time sequence occasions; for instance, the variety of occasions in a 3-hour window can vary from tens to hundreds. To deal with this imbalance, we used occasion frequencies as a substitute of the uncooked sequence knowledge. An easy strategy is aggregating the occasion frequency for your entire look-back window and feeding it into the mannequin. Nonetheless, when utilizing this illustration, the temporal info is misplaced, and the order of occasions will not be preserved. We as a substitute used temporal binning by dividing the time window into N equal sub-windows and calculating the occasion frequencies in every. The ultimate options of a time window are the concatenation of all its sub-window options. Growing the variety of bins preserves extra temporal info. The next determine illustrates temporal binning on a pattern window.

First, the pattern time window is break up into two equal sub-windows (bins); we used solely two bins right here for simplicity for illustration. Then, the counts of the occasions E1, E2, E3, and E4 are calculated in every bin. Lastly, they’re concatenated and used as options.

Together with the occasion frequency-based options, we used machine-specific options like software program model, cupboard sort, recreation theme, and recreation model. Moreover, we added options associated to the timestamps to seize the seasonality, comparable to hour of the day and day of the week.

Information preparation

To extract knowledge effectively for coaching and testing, we make the most of Amazon Athena and the AWS Glue Information Catalog. The occasions knowledge is saved in Amazon S3 in Parquet format and partitioned in accordance with day/month/hour. This facilitates environment friendly extraction of knowledge samples inside a specified time window. We use knowledge from all machines within the newest month for testing and the remainder of the information for coaching, which helps keep away from potential knowledge leakage.

ML methodology and mannequin coaching

On this part, we talk about our baseline mannequin with AutoGluon and the way we constructed a custom-made neural community with SageMaker automated mannequin tuning.

Constructing a baseline mannequin with AutoGluon

With any ML use case, it’s vital to ascertain a baseline mannequin for use for comparability and iteration. We used AutoGluon to discover a number of traditional ML algorithms. AutoGluon is easy-to-use AutoML device that makes use of automated knowledge processing, hyperparameter tuning, and mannequin ensemble. The very best baseline was achieved with a weighted ensemble of gradient boosted determination tree fashions. The convenience of use of AutoGluon helped us within the discovery stage to navigate shortly and effectively via a variety of potential knowledge and ML modeling instructions.

Constructing and tuning a custom-made neural community mannequin with SageMaker automated mannequin tuning

After experimenting with completely different neural networks architectures, we constructed a custom-made deep studying mannequin for predictive upkeep. Our mannequin surpassed the AutoGluon baseline mannequin by 121% in recall at 80% precision. The ultimate mannequin ingests historic machine occasion sequence knowledge, time options comparable to hour of the day, and static machine metadata. We make the most of SageMaker automatic model tuning jobs to seek for the most effective hyperparameters and mannequin architectures.

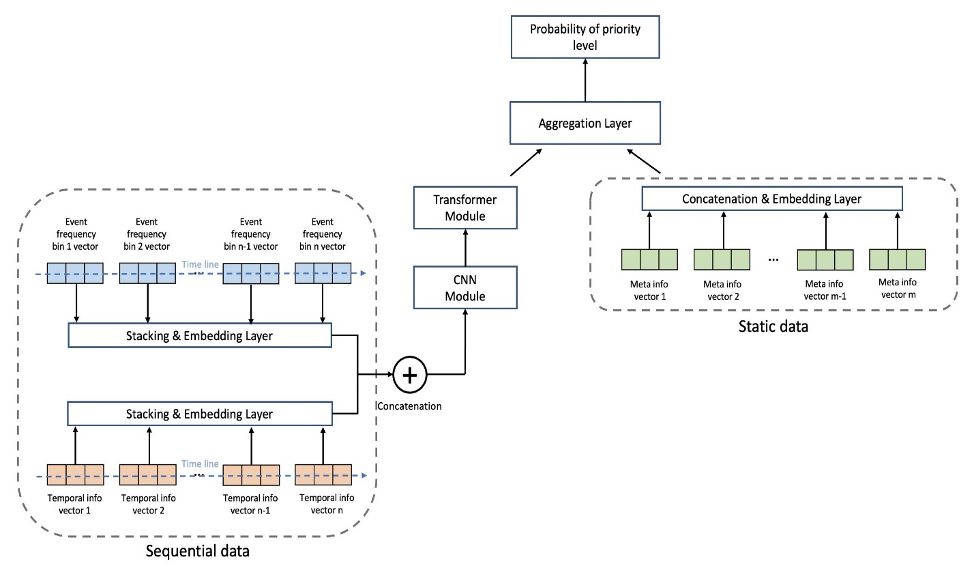

The next determine exhibits the mannequin structure. We first normalize the binned occasion sequence knowledge by common frequencies of every occasion within the coaching set to take away the overwhelming impact of high-frequency occasions (begin of recreation, finish of recreation, and so forth). The embeddings for particular person occasions are learnable, whereas the temporal characteristic embeddings (day of the week, hour of the day) are extracted utilizing the package deal GluonTS. Then we concatenate the occasion sequence knowledge with the temporal characteristic embeddings because the enter to the mannequin. The mannequin consists of the next layers:

- Convolutional layers (CNN) – Every CNN layer consists of two 1-dimensional convolutional operations with residual connections. The output of every CNN layer has the identical sequence size because the enter to permit for straightforward stacking with different modules. The whole variety of CNN layers is a tunable hyperparameter.

- Transformer encoder layers (TRANS) – The output of the CNN layers is fed along with the positional encoding to a multi-head self-attention construction. We use TRANS to instantly seize temporal dependencies as a substitute of utilizing recurrent neural networks. Right here, binning of the uncooked sequence knowledge (lowering size from hundreds to a whole lot) helps alleviate the GPU reminiscence bottlenecks, whereas holding the chronological info to a tunable extent (the variety of the bins is a tunable hyperparameter).

- Aggregation layers (AGG) – The ultimate layer combines the metadata info (recreation theme sort, cupboard sort, places) to supply the precedence stage chance prediction. It consists of a number of pooling layers and totally linked layers for incremental dimension discount. The multi-hot embeddings of metadata are additionally learnable, and don’t undergo CNN and TRANS layers as a result of they don’t comprise sequential info.

We use the cross-entropy loss with class weights as tunable hyperparameters to regulate for the category imbalance subject. As well as, the numbers of CNN and TRANS layers are essential hyperparameters with the potential values of 0, which implies particular layers might not at all times exist within the mannequin structure. This manner, we’ve a unified framework the place the mannequin architectures are searched together with different ordinary hyperparameters.

We make the most of SageMaker automated mannequin tuning, also referred to as hyperparameter optimization (HPO), to effectively discover mannequin variations and the massive search area of all hyperparameters. Computerized mannequin tuning receives the custom-made algorithm, coaching knowledge, and hyperparameter search area configurations, and searches for finest hyperparameters utilizing completely different methods comparable to Bayesian, Hyperband, and extra with a number of GPU cases in parallel. After evaluating on a hold-out validation set, we obtained the most effective mannequin structure with two layers of CNN, one layer of TRANS with 4 heads, and an AGG layer.

We used the next hyperparameter ranges to seek for the most effective mannequin structure:

To additional enhance mannequin accuracy and scale back mannequin variance, we educated the mannequin with a number of impartial random weight initializations, and aggregated the consequence with imply values as the ultimate chance prediction. There’s a trade-off between extra computing assets and higher mannequin efficiency, and we noticed that 5–10 ought to be an affordable quantity within the present use case (outcomes proven later on this publish).

Mannequin efficiency outcomes

On this part, we current the mannequin efficiency analysis metrics and outcomes.

Analysis metrics

Precision is essential for this predictive upkeep use case. Low precision means reporting extra false upkeep calls, which drives prices up via pointless upkeep. As a result of common precision (AP) doesn’t totally align with the excessive precision goal, we launched a brand new metric named common recall at excessive precisions (ARHP). ARHP is the same as the common of remembers at 60%, 70%, and 80% precision factors. We additionally used precision at prime Ok% (Ok=1, 10), AUPR, and AUROC as further metrics.

Outcomes

The next desk summarizes the outcomes utilizing the baseline and the custom-made neural community fashions, with 7/1/2022 because the prepare/take a look at break up level. Experiments present that growing the window size and pattern knowledge dimension each enhance the mannequin efficiency, as a result of they comprise extra historic info to assist with the prediction. Whatever the knowledge settings, the neural community mannequin outperforms AutoGluon in all metrics. For instance, recall on the fastened 80% precision is elevated by 121%, which lets you shortly determine extra malfunctioned machines if utilizing the neural community mannequin.

| Mannequin | Window size/Information dimension | AUROC | AUPR | ARHP | Recall@Prec0.6 | Recall@Prec0.7 | Recall@Prec0.8 | Prec@top1% | Prec@top10% |

|---|---|---|---|---|---|---|---|---|---|

| AutoGluon baseline | 12H/500k | 66.5 | 36.1 | 9.5 | 12.7 | 9.3 | 6.5 | 85 | 42 |

| Neural Community | 12H/500k | 74.7 | 46.5 | 18.5 | 25 | 18.1 | 12.3 | 89 | 55 |

| AutoGluon baseline | 48H/1mm | 70.2 | 44.9 | 18.8 | 26.5 | 18.4 | 11.5 | 92 | 55 |

| Neural Community | 48H/1mm | 75.2 | 53.1 | 32.4 | 39.3 | 32.6 | 25.4 | 94 | 65 |

The next figures illustrate the impact of utilizing ensembles to spice up the neural community mannequin efficiency. All of the analysis metrics proven on the x-axis are improved, with increased imply (extra correct) and decrease variance (extra steady). Every box-plot is from 12 repeated experiments, from no ensembles to 10 fashions in ensembles (x-axis). Comparable tendencies persist in all metrics apart from the Prec@top1% and Recall@Prec80% proven.

After factoring within the computational value, we observe that utilizing 5–10 fashions in ensembles is appropriate for Gentle & Surprise datasets.

Conclusion

Our collaboration has resulted within the creation of a groundbreaking predictive upkeep answer for the gaming {industry}, in addition to a reusable framework that may very well be utilized in a wide range of predictive upkeep situations. The adoption of AWS applied sciences comparable to SageMaker automated mannequin tuning facilitates Gentle & Surprise to navigate new alternatives utilizing near-real-time knowledge streams. Gentle & Surprise is beginning the deployment on AWS.

If you want assist accelerating using ML in your services and products, please contact the Amazon ML Solutions Lab program.

In regards to the authors

Aruna Abeyakoon is the Senior Director of Information Science & Analytics at Gentle & Surprise Land-based Gaming Division. Aruna leads the industry-first Gentle & Surprise Join initiative and helps each on line casino companions and inside stakeholders with client habits and product insights to make higher video games, optimize product choices, handle property, and well being monitoring & predictive upkeep.

Aruna Abeyakoon is the Senior Director of Information Science & Analytics at Gentle & Surprise Land-based Gaming Division. Aruna leads the industry-first Gentle & Surprise Join initiative and helps each on line casino companions and inside stakeholders with client habits and product insights to make higher video games, optimize product choices, handle property, and well being monitoring & predictive upkeep.

Denisse Colin is a Senior Information Science Supervisor at Gentle & Surprise, a number one cross-platform world recreation firm. She is a member of the Gaming Information & Analytics crew serving to develop modern options to enhance product efficiency and clients’ experiences via Gentle & Surprise Join.

Denisse Colin is a Senior Information Science Supervisor at Gentle & Surprise, a number one cross-platform world recreation firm. She is a member of the Gaming Information & Analytics crew serving to develop modern options to enhance product efficiency and clients’ experiences via Gentle & Surprise Join.

Tesfagabir Meharizghi is a Information Scientist on the Amazon ML Options Lab the place he helps AWS clients throughout numerous industries comparable to gaming, healthcare and life sciences, manufacturing, automotive, and sports activities and media, speed up their use of machine studying and AWS cloud companies to unravel their enterprise challenges.

Tesfagabir Meharizghi is a Information Scientist on the Amazon ML Options Lab the place he helps AWS clients throughout numerous industries comparable to gaming, healthcare and life sciences, manufacturing, automotive, and sports activities and media, speed up their use of machine studying and AWS cloud companies to unravel their enterprise challenges.

Mohamad Aljazaery is an utilized scientist at Amazon ML Options Lab. He helps AWS clients determine and construct ML options to handle their enterprise challenges in areas comparable to logistics, personalization and suggestions, laptop imaginative and prescient, fraud prevention, forecasting and provide chain optimization.

Mohamad Aljazaery is an utilized scientist at Amazon ML Options Lab. He helps AWS clients determine and construct ML options to handle their enterprise challenges in areas comparable to logistics, personalization and suggestions, laptop imaginative and prescient, fraud prevention, forecasting and provide chain optimization.

Yawei Wang is an Utilized Scientist on the Amazon ML Answer Lab. He helps AWS enterprise companions determine and construct ML options to handle their group’s enterprise challenges in a real-world state of affairs.

Yawei Wang is an Utilized Scientist on the Amazon ML Answer Lab. He helps AWS enterprise companions determine and construct ML options to handle their group’s enterprise challenges in a real-world state of affairs.

Yun Zhou is an Utilized Scientist on the Amazon ML Options Lab, the place he helps with analysis and improvement to make sure the success of AWS clients. He works on pioneering options for numerous industries utilizing statistical modeling and machine studying methods. His curiosity consists of generative fashions and sequential knowledge modeling.

Yun Zhou is an Utilized Scientist on the Amazon ML Options Lab, the place he helps with analysis and improvement to make sure the success of AWS clients. He works on pioneering options for numerous industries utilizing statistical modeling and machine studying methods. His curiosity consists of generative fashions and sequential knowledge modeling.

Panpan Xu is a Utilized Science Supervisor with the Amazon ML Options Lab at AWS. She is engaged on analysis and improvement of Machine Studying algorithms for high-impact buyer functions in a wide range of industrial verticals to speed up their AI and cloud adoption. Her analysis curiosity consists of mannequin interpretability, causal evaluation, human-in-the-loop AI and interactive knowledge visualization.

Panpan Xu is a Utilized Science Supervisor with the Amazon ML Options Lab at AWS. She is engaged on analysis and improvement of Machine Studying algorithms for high-impact buyer functions in a wide range of industrial verticals to speed up their AI and cloud adoption. Her analysis curiosity consists of mannequin interpretability, causal evaluation, human-in-the-loop AI and interactive knowledge visualization.

Raj Salvaji leads Options Structure within the Hospitality section at AWS. He works with hospitality clients by offering strategic steerage, technical experience to create options to advanced enterprise challenges. He attracts on 25 years of expertise in a number of engineering roles throughout Hospitality, Finance and Automotive industries.

Raj Salvaji leads Options Structure within the Hospitality section at AWS. He works with hospitality clients by offering strategic steerage, technical experience to create options to advanced enterprise challenges. He attracts on 25 years of expertise in a number of engineering roles throughout Hospitality, Finance and Automotive industries.

Shane Rai is a Principal ML Strategist with the Amazon ML Options Lab at AWS. He works with clients throughout a various spectrum of industries to unravel their most urgent and modern enterprise wants utilizing AWS’s breadth of cloud-based AI/ML companies.

Shane Rai is a Principal ML Strategist with the Amazon ML Options Lab at AWS. He works with clients throughout a various spectrum of industries to unravel their most urgent and modern enterprise wants utilizing AWS’s breadth of cloud-based AI/ML companies.