Scale back power consumption of your machine studying workloads by as much as 90% with AWS purpose-built accelerators

Machine studying (ML) engineers have historically centered on hanging a steadiness between mannequin coaching and deployment price vs. efficiency. More and more, sustainability (power effectivity) is turning into a further goal for patrons. That is vital as a result of coaching ML fashions after which utilizing the skilled fashions to make predictions (inference) could be extremely energy-intensive duties. As well as, an increasing number of functions round us have turn out to be infused with ML, and new ML-powered functions are conceived day-after-day. A well-liked instance is OpenAI’s ChatGPT, which is powered by a state-of-the-art giant language mannequin (LMM). For reference, GPT-3, an earlier generation LLM has 175 billion parameters and requires months of continuous coaching on a cluster of hundreds of accelerated processors. The Carbontracker study estimates that coaching GPT-3 from scratch might emit as much as 85 metric tons of CO2 equal, utilizing clusters of specialised {hardware} accelerators.

There are a number of methods AWS is enabling ML practitioners to decrease the environmental affect of their workloads. A technique is thru offering prescriptive guidance around architecting your AI/ML workloads for sustainability. One other approach is by providing managed ML coaching and orchestration providers equivalent to Amazon SageMaker Studio, which mechanically tears down and scales up ML sources when not in use, and supplies a bunch of out-of-the-box tooling that saves price and sources. One other main enabler is the event of energy efficient, high-performance, purpose-built accelerators for coaching and deploying ML fashions.

The main target of this put up is on {hardware} as a lever for sustainable ML. We current the outcomes of current efficiency and energy draw experiments carried out by AWS that quantify the power effectivity advantages you possibly can count on when migrating your deep studying workloads from different inference- and training-optimized accelerated Amazon Elastic Compute Cloud (Amazon EC2) situations to AWS Inferentia and AWS Trainium. Inferentia and Trainium are AWS’s recent addition to its portfolio of purpose-built accelerators particularly designed by Amazon’s Annapurna Labs for ML inference and coaching workloads.

AWS Inferentia and AWS Trainium for sustainable ML

To offer you reasonable numbers of the power financial savings potential of AWS Inferentia and AWS Trainium in a real-world software, we’ve got carried out a number of energy draw benchmark experiments. We’ve designed these benchmarks with the next key standards in thoughts:

- First, we wished to make it possible for we captured direct power consumption attributable to the take a look at workload, together with not simply the ML accelerator but in addition the compute, reminiscence, and community. Due to this fact, in our take a look at setup, we measured energy draw at that degree.

- Second, when working the coaching and inference workloads, we ensured that each one situations had been working at their respective bodily {hardware} limits and took measurements solely after that restrict was reached to make sure comparability.

- Lastly, we wished to make sure that the power financial savings reported on this put up could possibly be achieved in a sensible real-world software. Due to this fact, we used widespread customer-inspired ML use instances for benchmarking and testing.

The outcomes are reported within the following sections.

Inference experiment: Actual-time doc understanding with LayoutLM

Inference, versus coaching, is a steady, unbounded workload that doesn’t have an outlined completion level. It due to this fact makes up a big portion of the lifetime useful resource consumption of an ML workload. Getting inference proper is vital to reaching excessive efficiency, low price, and sustainability (higher power effectivity) alongside the total ML lifecycle. With inference duties, clients are normally enthusiastic about reaching a sure inference charge to maintain up with the ingest demand.

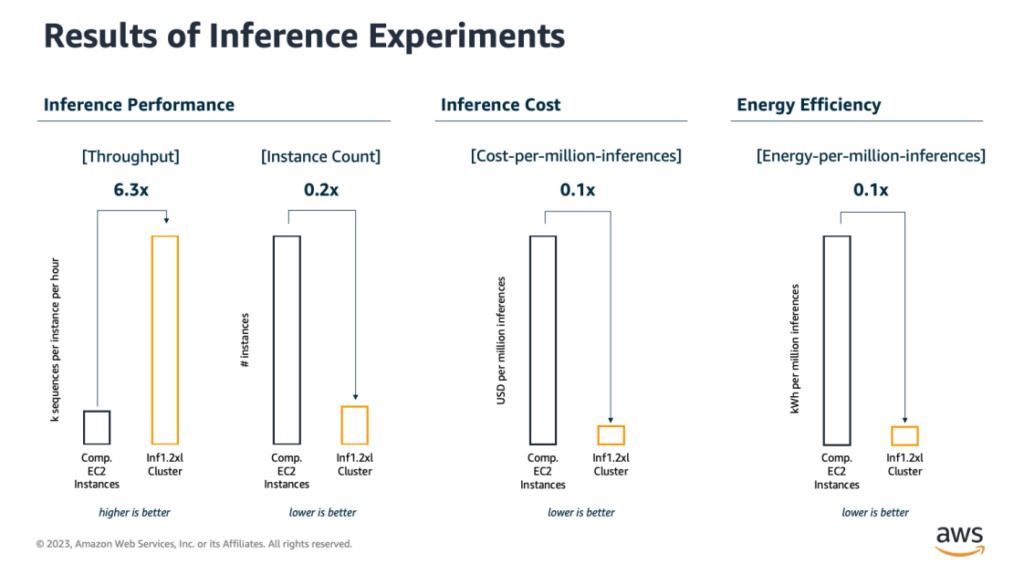

The experiment offered on this put up is impressed by a real-time doc understanding use case, which is a typical software in industries like banking or insurance coverage (for instance, for claims or software kind processing). Particularly, we choose LayoutLM, a pre-trained transformer mannequin used for doc picture processing and data extraction. We set a goal SLA of 1,000,000 inferences per hour, a price typically thought of as actual time, after which specify two {hardware} configurations able to assembly this requirement: one utilizing Amazon EC2 Inf1 instances, that includes AWS Inferentia, and one utilizing comparable accelerated EC2 situations optimized for inference duties. All through the experiment, we observe a number of indicators to measure inference efficiency, price, and power effectivity of each {hardware} configurations. The outcomes are offered within the following determine.

Efficiency, Price and Power Effectivity Outcomes of Inference Benchmarks

AWS Inferentia delivers 6.3 occasions greater inference throughput. In consequence, with Inferentia, you possibly can run the identical real-time LayoutLM-based doc understanding workload on fewer situations (6 AWS Inferentia situations vs. 33 different inference-optimized accelerated EC2 situations, equal to an 82% discount), use lower than a tenth (-92%) of the power within the course of, all whereas reaching considerably decrease price per inference (USD 2 vs. USD 25 per million inferences, equal to a 91% price discount).

Coaching experiment: Coaching BERT Massive from scratch

Coaching, versus inference, is a finite course of that’s repeated a lot much less often. ML engineers are usually enthusiastic about excessive cluster efficiency to scale back coaching time whereas conserving price below management. Power effectivity is a secondary (but rising) concern. With AWS Trainium, there isn’t a trade-off choice: ML engineers can profit from excessive coaching efficiency whereas additionally optimizing for price and lowering environmental affect.

For instance this, we choose BERT Large, a preferred language mannequin used for pure language understanding use instances equivalent to chatbot-based query answering and conversational response prediction. Coaching a well-performing BERT Massive mannequin from scratch usually requires 450 million sequences to be processed. We evaluate two cluster configurations, every with a set measurement of 16 situations and able to coaching BERT Massive from scratch (450 million sequences processed) in lower than a day. The primary makes use of conventional accelerated EC2 situations. The second setup makes use of Amazon EC2 Trn1 instances that includes AWS Trainium. Once more, we benchmark each configurations by way of coaching efficiency, price, and environmental affect (power effectivity). The outcomes are proven within the following determine.

Efficiency, Price and Power Effectivity Outcomes of Coaching Benchmarks

Within the experiments, AWS Trainium-based situations outperformed the comparable training-optimized accelerated EC2 situations by an element of 1.7 by way of sequences processed per hour, chopping the full coaching time by 43% (2.3h versus 4h on comparable accelerated EC2 situations). In consequence, when utilizing a Trainium-based occasion cluster, the full power consumption for coaching BERT Massive from scratch is roughly 29% decrease in comparison with a same-sized cluster of comparable accelerated EC2 situations. Once more, these efficiency and power effectivity advantages additionally include important price enhancements: price to coach for the BERT ML workload is roughly 62% decrease on Trainium situations (USD 787 versus USD 2091 per full coaching run).

Getting began with AWS purpose-built accelerators for ML

Though the experiments carried out right here all use commonplace fashions from the pure language processing (NLP) area, AWS Inferentia and AWS Trainium excel with many different advanced mannequin architectures together with LLMs and probably the most difficult generative AI architectures that customers are constructing (equivalent to GPT-3). These accelerators do notably effectively with fashions with over 10 billion parameters, or laptop imaginative and prescient fashions like secure diffusion (see Model Architecture Fit Guidelines for extra particulars). Certainly, lots of our clients are already utilizing Inferentia and Trainium for all kinds of ML use cases.

To run your end-to-end deep studying workloads on AWS Inferentia- and AWS Trainium-based situations, you should use AWS Neuron. Neuron is an end-to-end software program growth equipment (SDK) that features a deep studying compiler, runtime, and instruments which are natively built-in into the most well-liked ML frameworks like TensorFlow and PyTorch. You should use the Neuron SDK to simply port your current TensorFlow or PyTorch deep studying ML workloads to Inferentia and Trainium and begin constructing new fashions utilizing the identical well-known ML frameworks. For simpler setup, use one in every of our Amazon Machine Images (AMIs) for deep learning, which include lots of the required packages and dependencies. Even easier: you should use Amazon SageMaker Studio, which natively helps TensorFlow and PyTorch on Inferentia and Trainium (see the aws-samples GitHub repo for an instance).

One ultimate word: whereas Inferentia and Trainium are goal constructed for deep studying workloads, many much less advanced ML algorithms can carry out effectively on CPU-based situations (for instance, XGBoost and LightGBM and even some CNNs). In these instances, a migration to AWS Graviton3 might considerably scale back the environmental affect of your ML workloads. AWS Graviton-based situations use as much as 60% much less power for a similar efficiency than comparable accelerated EC2 situations.

Conclusion

There’s a widespread false impression that working ML workloads in a sustainable and energy-efficient vogue means sacrificing on efficiency or price. With AWS purpose-built accelerators for machine studying, ML engineers don’t must make that trade-off. As an alternative, they’ll run their deep studying workloads on extremely specialised purpose-built deep studying {hardware}, equivalent to AWS Inferentia and AWS Trainium, that considerably outperforms comparable accelerated EC2 occasion sorts, delivering decrease price, greater efficiency, and higher power effectivity—as much as 90%—all on the identical time. To start out working your ML workloads on Inferentia and Trainium, take a look at the AWS Neuron documentation or spin up one of many sample notebooks. You can too watch the AWS re:Invent 2022 discuss on Sustainability and AWS silicon (SUS206), which covers lots of the subjects mentioned on this put up.

Concerning the Authors

Karsten Schroer is a Options Architect at AWS. He helps clients in leveraging information and expertise to drive sustainability of their IT infrastructure and construct data-driven options that allow sustainable operations of their respective verticals. Karsten joined AWS following his PhD research in utilized machine studying & operations administration. He’s really obsessed with technology-enabled options to societal challenges and likes to dive deep into the strategies and software architectures that underlie these options.

Karsten Schroer is a Options Architect at AWS. He helps clients in leveraging information and expertise to drive sustainability of their IT infrastructure and construct data-driven options that allow sustainable operations of their respective verticals. Karsten joined AWS following his PhD research in utilized machine studying & operations administration. He’s really obsessed with technology-enabled options to societal challenges and likes to dive deep into the strategies and software architectures that underlie these options.

Kamran Khan is a Sr. Technical Product Supervisor at AWS Annapurna Labs. He works intently with AI/ML clients to form the roadmap for AWS purpose-built silicon improvements popping out of Amazon’s Annapurna Labs. His particular focus is on accelerated deep-learning chips together with AWS Trainium and AWS Inferentia. Kamran has 18 years of expertise within the semiconductor trade. Kamran has over a decade of expertise serving to builders obtain their ML objectives.

Kamran Khan is a Sr. Technical Product Supervisor at AWS Annapurna Labs. He works intently with AI/ML clients to form the roadmap for AWS purpose-built silicon improvements popping out of Amazon’s Annapurna Labs. His particular focus is on accelerated deep-learning chips together with AWS Trainium and AWS Inferentia. Kamran has 18 years of expertise within the semiconductor trade. Kamran has over a decade of expertise serving to builders obtain their ML objectives.