Meta’s Newest AI Breakthrough: Testing the Massively Multilingual Speech

Explore the cutting-edge multilingual features of Meta’s latest automatic speech recognition (ASR) model

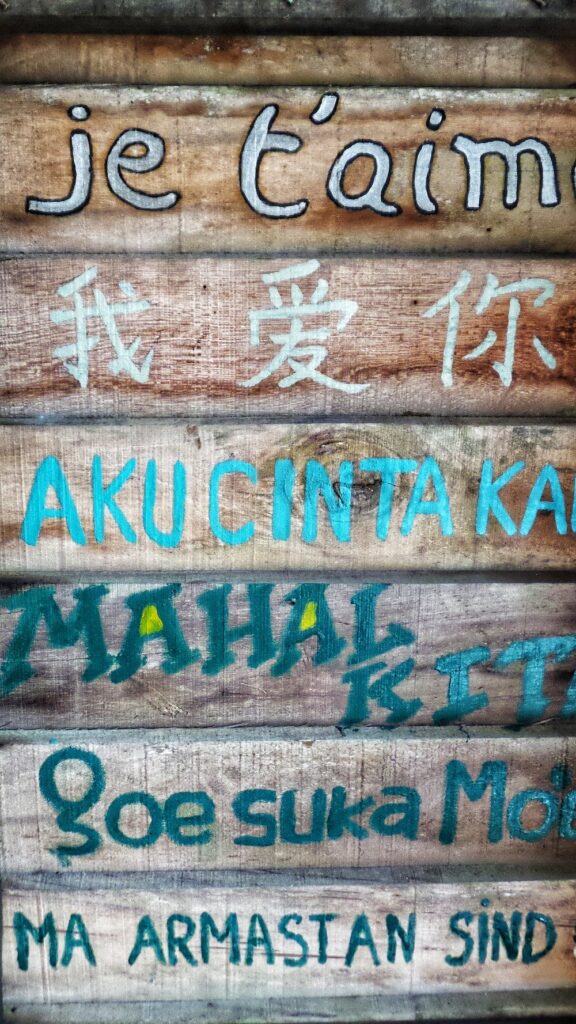

Massively Multilingual Speech (MMS)¹ is the latest release by Meta AI (just a few days ago). It pushes the boundaries of speech technology by expanding its reach from about 100 languages to over 1,000. This was achieved by building a single multilingual speech recognition model. The model can also identify over 4,000 languages, representing a 40-fold increase over previous capabilities.

The MMS project aims to make it easier for people to access information and use devices in their preferred language. It expands text-to-speech and speech-to-text technology to underserved languages, continuing to reduce language barriers in our global world. Existing applications, such as virtual assistants or voice-activated devices, can now include a greater variety of languages. At the same time, new use cases emerge in cross-cultural communication, for example, in messaging services or virtual and augmented reality.

In this article, we will walk through the use of MMS for ASR in English and Portuguese and provide a step-by-step guide on setting up the environment to run the model.

This article belongs to “Large Language Models Chronicles: Navigating the NLP Frontier”, a new weekly series of articles that will explore how to leverage the power of large models for various NLP tasks. By diving into these cutting-edge technologies, we aim to empower developers, researchers, and enthusiasts to harness the potential of NLP and unlock new possibilities.

Articles published so far:

- Summarizing the latest Spotify releases with ChatGPT

- Master Semantic Search at Scale: Index Millions of Documents with Lightning-Fast Inference Times using FAISS and Sentence Transformers

- Unlock the Power of Audio Data: Advanced Transcription and Diarization with Whisper, WhisperX, and PyAnnotate

- Whisper JAX vs PyTorch: Uncovering the Truth about ASR Performance on GPUs

- Vosk for Efficient Enterprise-Grade Speech Recognition: An Evaluation and Implementation Guide

As always, the code is available on my GitHub.

Meta used religious texts, such as the Bible, to build a model covering this wide range of languages. These texts have several interesting properties: first, they are translated into many languages, and second, there are publicly available audio recordings of people reading them in different languages. Thus, the main dataset on which this model was trained was the New Testament, which the research team was able to collect for over 1,100 languages and which provided more than 32 hours of data per language. They went further to make it recognize 4,000 languages. This was done by using unlabeled recordings of various other Christian religious readings. According to the experimental results, even though the data comes from a specific domain, the model generalizes well.

These are not the only contributions of the work. They created a new preprocessing and alignment model that can handle long recordings. This was used to process the audio, and misaligned data was removed using a final cross-validation filtering step. Recall from one of our previous articles that one of the challenges of Whisper was its inability to align the transcription properly. Another important step of the approach was the use of wav2vec 2.0, a self-supervised learning model, to train their system on a massive amount of speech data (about 500,000 hours) in over 1,400 languages. The labeled dataset we discussed previously is not enough to train a model of the size of MMS, so wav2vec 2.0 was used to reduce the need for labeled data. Finally, the resulting models were fine-tuned for a specific speech task, such as multilingual speech recognition or language identification.

The MMS models were open-sourced by Meta a few days ago and made available in the Fairseq repository. In the next section, we cover what Fairseq is and how we can test these new models from Meta.

Fairseq is an open-source sequence-to-sequence toolkit developed by Facebook AI Research, also known as FAIR. It provides reference implementations of various sequence modeling algorithms, including convolutional and recurrent neural networks, transformers, and other architectures.

The Fairseq repository is based on PyTorch, another open-source project originally developed by Meta and now under the umbrella of the Linux Foundation. It is a very powerful machine learning framework that offers high flexibility and speed, particularly when it comes to deep learning.

The Fairseq implementations are designed for researchers and developers to train custom models, and they support tasks such as translation, summarization, language modeling, and other text generation tasks. One of the key features of Fairseq is that it supports distributed training, meaning it can efficiently utilize multiple GPUs either on a single machine or across multiple machines. This makes it well-suited for large-scale machine learning tasks.

Fairseq provides two pre-trained models for download: MMS-300M and MMS-1B. You also have access to fine-tuned models available for different languages and datasets. For our purpose, we test the MMS-1B model fine-tuned for 102 languages on the FLEURS dataset (MMS-1B-FL102) and also MMS-1B-all, which is fine-tuned on several different datasets to handle 1,162 languages (!).

Remember that these models are still in the research phase, making testing a bit more challenging. There are extra steps that you would not find with production-ready software.

First, you need to set up a .env file in your project root to configure your environment variables. It should look something like this:

    CURRENT_DIR=/path/to/current/dir
    AUDIO_SAMPLES_DIR=/path/to/audio_samples
    FAIRSEQ_DIR=/path/to/fairseq
    VIDEO_FILE=/path/to/video/file
    AUDIO_FILE=/path/to/audio/file
    RESAMPLED_AUDIO_FILE=/path/to/resampled/audio/file
    TMPDIR=/path/to/tmp
    PYTHONPATH=.
    PREFIX=INFER
    HYDRA_FULL_ERROR=1
    USER=micro
    MODEL=/path/to/fairseq/models_new/mms1b_all.pt
    MODELS_NEW=/path/to/fairseq/models_new
    LANG=eng

Next, you need to configure the YAML file located at fairseq/examples/mms/asr/config/infer_common.yaml. This file contains important settings and parameters used by the script.

In the YAML file, use a full path for the checkpoint field like this (unless you are using a containerized application to run the script):

    checkpoint: /path/to/checkpoint/${env:USER}/${env:PREFIX}/${common_eval.results_path}

This full path is necessary to avoid potential permission issues unless you are running the application in a container.

If you plan on using a CPU for computation instead of a GPU, you will need to add the following directive to the top level of the YAML file:

    common:
      cpu: true

This setting directs the script to use the CPU for computations.

We use the python-dotenv library to load these environment variables in our Python script. Since we are overwriting some system variables (such as USER, LANG, and TMPDIR), we need a trick to make sure that we get the right values. We use the dotenv_values function and store its output in a variable. This ensures that we get the values stored in our .env file and not system variables that happen to have the same name.

    from dotenv import dotenv_values

    # Read the values straight from the .env file rather than from os.environ
    config = dotenv_values(".env")

    current_dir = config['CURRENT_DIR']
    tmp_dir = config['TMPDIR']
    fairseq_dir = config['FAIRSEQ_DIR']
    video_file = config['VIDEO_FILE']
    audio_file = config['AUDIO_FILE']
    audio_file_resampled = config['RESAMPLED_AUDIO_FILE']
    model_path = config['MODEL']
    model_new_dir = config['MODELS_NEW']
    lang = config['LANG']
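Because every value is read with a plain dictionary lookup, a key that is missing from the .env file only surfaces as a KeyError on the line that uses it. One way to catch this earlier is a small upfront check. The sketch below is an assumption on our side and not part of the original script: the helper name missing_keys and the REQUIRED_KEYS tuple are hypothetical, and the example dictionary stands in for what dotenv_values(".env") would return.

```python
# Hypothetical helper (not part of the original script): list the keys the
# script reads that are absent or empty in the loaded configuration.
REQUIRED_KEYS = (
    "CURRENT_DIR", "TMPDIR", "FAIRSEQ_DIR", "VIDEO_FILE", "AUDIO_FILE",
    "RESAMPLED_AUDIO_FILE", "MODEL", "MODELS_NEW", "LANG",
)


def missing_keys(config, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty in config."""
    return [key for key in required if not config.get(key)]


if __name__ == "__main__":
    # Stand-in for dotenv_values(".env"); the values are placeholders.
    example = {"CURRENT_DIR": "/path/to/current/dir", "LANG": "eng", "MODEL": ""}
    print("Missing or empty keys:", missing_keys(example))
```

Running the check right after loading the file makes a forgotten entry visible before any download or inference step starts.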

Then, we can clone the fairseq GitHub repository and install it on our machine.

    import os
    import subprocess

    from git import Repo  # provided by the GitPython package


    def git_clone(url, path):
        """
        Clones a git repository

        Parameters:
        url (str): The URL of the git repository
        path (str): The local path where the git repository will be cloned
        """
        # Skip the clone if the target directory already exists
        if not os.path.exists(path):
            Repo.clone_from(url, path)


    def install_requirements(requirements):
        """
        Installs pip packages

        Parameters:
        requirements (list): List of packages to install
        """
        subprocess.check_call(["pip", "install"] + requirements)


    git_clone('https://github.com/facebookresearch/fairseq', 'fairseq')
    # './' refers to the current working directory, so this call is expected
    # to run from inside the cloned fairseq folder
    install_requirements(['--editable', './'])

We already discussed the models that we use in this article, so let’s download them to our local environment.

    import subprocess


    def download_file(url, path):
        """
        Downloads a file

        Parameters:
        url (str): URL of the file to be downloaded
        path (str): The path where the file will be saved
        """
        # wget -P saves the file under the given directory prefix
        subprocess.check_call(["wget", "-P", path, url])


    download_file('https://dl.fbaipublicfiles.com/mms/asr/mms1b_fl102.pt', model_new_dir)

There is one additional restriction related to the input of the MMS model: the sampling rate of the audio files needs to be 16,000 Hz. In our case, we defined two ways to generate these files: one that converts video to audio and another that resamples audio files to the correct sampling rate.

    import subprocess

    from pydub import AudioSegment


    def convert_video_to_audio(video_path, audio_path):
        """
        Converts a video file to an audio file

        Parameters:
        video_path (str): Path to the video file
        audio_path (str): Path to the output audio file
        """
        # -ar 16000 makes ffmpeg write the audio at the 16 kHz rate MMS expects
        subprocess.check_call(["ffmpeg", "-i", video_path, "-ar", "16000", audio_path])


    def resample_audio(audio_path, new_audio_path, new_sample_rate):
        """
        Resamples an audio file

        Parameters:
        audio_path (str): Path to the current audio file
        new_audio_path (str): Path to the output audio file
        new_sample_rate (int): New sample rate in Hz
        """
        audio = AudioSegment.from_file(audio_path)
        audio = audio.set_frame_rate(new_sample_rate)
        audio.export(new_audio_path, format='wav')
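Before passing a file to the model, it can be useful to confirm that it really has the expected sampling rate. The following is a minimal sketch using only Python’s standard wave module, so it applies to uncompressed PCM WAV files only. The function names wav_sample_rate and is_ready_for_mms are hypothetical and do not come from the original script or from Fairseq.

```python
import wave


def wav_sample_rate(path):
    """Return the sampling rate (in Hz) stored in the header of a WAV file."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def is_ready_for_mms(path, expected_rate=16000):
    """Return True when the WAV file already has the expected sampling rate."""
    return wav_sample_rate(path) == expected_rate


if __name__ == "__main__":
    import sys

    # Usage: python check_rate.py some_file.wav (the script name is a placeholder)
    for wav_path in sys.argv[1:]:
        print(wav_path, wav_sample_rate(wav_path), is_ready_for_mms(wav_path))
```

A file that fails this check is a candidate for the resample_audio function shown above.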

We are now ready to run the inference process using our MMS-1B-all model, which supports 1,162 languages.

    import subprocess


    def run_inference(model, lang, audio):
        """
        Runs the MMS ASR inference

        Parameters:
        model (str): Path to the model file
        lang (str): Language of the audio file
        audio (str): Path to the audio file
        """
        # The script path is relative to the root of the fairseq repository
        subprocess.check_call(
            [
                "python",
                "examples/mms/asr/infer/mms_infer.py",
                "--model",
                model,
                "--lang",
                lang,
                "--audio",
                audio,
            ]
        )


    run_inference(model_path, lang, audio_file_resampled)
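Since we test more than one language, the same call can be prepared for several audio files at once. The sketch below only builds the argument lists, using the same script path and flags as run_inference above. The helper names build_infer_command and build_batch, the file paths, and the choice of sys.executable instead of the plain "python" string are our own assumptions.

```python
import sys


def build_infer_command(model, lang, audio,
                        script="examples/mms/asr/infer/mms_infer.py"):
    """Build the argument list for one MMS inference run."""
    return [
        sys.executable,  # run the script with the current Python interpreter
        script,
        "--model", model,
        "--lang", lang,
        "--audio", audio,
    ]


def build_batch(model, samples):
    """Build one command per (language code, audio path) pair."""
    return [build_infer_command(model, lang, audio) for lang, audio in samples]


if __name__ == "__main__":
    # Placeholder paths; replace them with your own model and audio files.
    samples = [("eng", "/path/to/english.wav"), ("por", "/path/to/portuguese.wav")]
    for command in build_batch("/path/to/fairseq/models_new/mms1b_all.pt", samples):
        print(" ".join(command))
```

Each resulting list can be passed to subprocess.check_call, ideally with the cwd argument pointing at the fairseq checkout, because the script path is relative to the repository root.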

In this section, we describe our experimentation setup and discuss the results. We performed ASR using two different models from Fairseq, MMS-1B-all and MMS-1B-FL102, in both English and Portuguese. You can find the audio files in my GitHub repo. These are files that I generated myself just for testing purposes.

Let’s start with the MMS-1B-all model. Here are the outputs for the English and Portuguese audio samples:

Eng: just requiring a small clip to understand if the new facebook research model really performs on

Por: ora bem só agravar aqui um exemplo pa tentar perceber se de facto om novo modelo da facebook research realmente funciona ou não vamos estar

With the MMS-1B-FL102, the generated transcription was significantly worse. Let’s see the same example for English:

Eng: just recarding a small ho clip to understand if the new facebuok research model really performs on speed recognition tasks lets see
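The comparison above is qualitative. When a reference transcript of the recording is available, a common way to put a number on the difference is the word error rate (WER): the word-level edit distance between reference and hypothesis, divided by the number of reference words. The sketch below is our own illustration and is not taken from the MMS code; the function name word_error_rate is hypothetical and the two sentences are made up, not actual model output.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("The reference transcript must not be empty")

    # previous[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            insertion = current[j - 1] + 1
            deletion = previous[j] + 1
            current.append(min(substitution, insertion, deletion))
        previous = current
    return previous[-1] / len(ref_words)


if __name__ == "__main__":
    # Made-up sentences for illustration only.
    reference = "the quick brown fox"
    hypothesis = "the quik brown fox"
    print(f"WER: {word_error_rate(reference, hypothesis):.2f}")
```

Lower values are better, and a value above 1.0 is possible when the hypothesis contains many extra words.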

While the transcriptions generated are not super impressive by the standard of the models we have today, we need to look at these results from the perspective that these models open up ASR to a much wider range of the global population.

The Massively Multilingual Speech model, developed by Meta, represents one more step to foster global communication and broaden the reach of language technology using AI. Its ability to identify over 4,000 languages and function effectively across 1,162 of them increases accessibility for numerous languages that have been traditionally underserved.

Our testing of the MMS models showcased the possibilities and limitations of the technology at its current stage. Although the transcription generated by the MMS-1B-FL102 model was not as impressive as expected, the MMS-1B-all model provided promising results, demonstrating its capacity to transcribe speech in both English and Portuguese. Portuguese has been one of those underserved languages, especially when we consider Portuguese from Portugal.

Feel free to try it out in your preferred language and to share the transcription and feedback in the comment section.

Keep in touch: LinkedIn