How OCX Cognition reduced ML model development time from weeks to days and model update time from days to real time using AWS Step Functions and Amazon SageMaker

This post was co-authored by Brian Curry (Founder and Head of Products at OCX Cognition) and Sandhya MN (Data Science Lead at Infogain).

OCX Cognition is a San Francisco Bay Area-based startup, offering a commercial B2B software as a service (SaaS) product called Spectrum AI. Spectrum AI is a predictive (generative) CX analytics platform for enterprises. OCX’s solutions are developed in collaboration with Infogain, an AWS Advanced Tier Partner. Infogain works with OCX Cognition as an integrated product team, providing human-centered software engineering services and expertise in software development, microservices, automation, Internet of Things (IoT), and artificial intelligence.

The Spectrum AI platform combines customer attitudes with customers’ operational data and uses machine learning (ML) to generate continuous insight on CX. OCX built Spectrum AI on AWS because AWS offered a wide range of tools, elastic computing, and an ML environment that would keep pace with evolving needs.

In this post, we discuss how OCX Cognition, with the support of Infogain and OCX’s AWS account team, improved their end customer experience and reduced time to value by automating and orchestrating the ML functions that support Spectrum AI’s CX analytics. Using AWS Step Functions, the AWS Step Functions Data Science SDK for Python, and Amazon SageMaker Experiments, OCX Cognition reduced ML model development time from 6 weeks to 2 weeks and reduced ML model update time from 4 days to near-real time.

Background

The Spectrum AI platform has to produce models tuned for hundreds of different generative CX scores for each customer, and these scores need to be uniquely computed for tens of thousands of active accounts. As time passes and new experiences accumulate, the platform has to update these scores based on new data inputs. After new scores are produced, OCX and Infogain compute the relative impact of each underlying operational metric in the prediction. Amazon SageMaker is a fully managed service that allows you to build, train, and deploy ML models for any use case with managed infrastructure, tools, and workflows, and Amazon SageMaker Studio is its web-based integrated development environment (IDE). With SageMaker, the OCX-Infogain team developed their solution using shared code libraries across individually maintained Jupyter notebooks in Amazon SageMaker Studio.

The problem: Scaling the solution for multiple customers

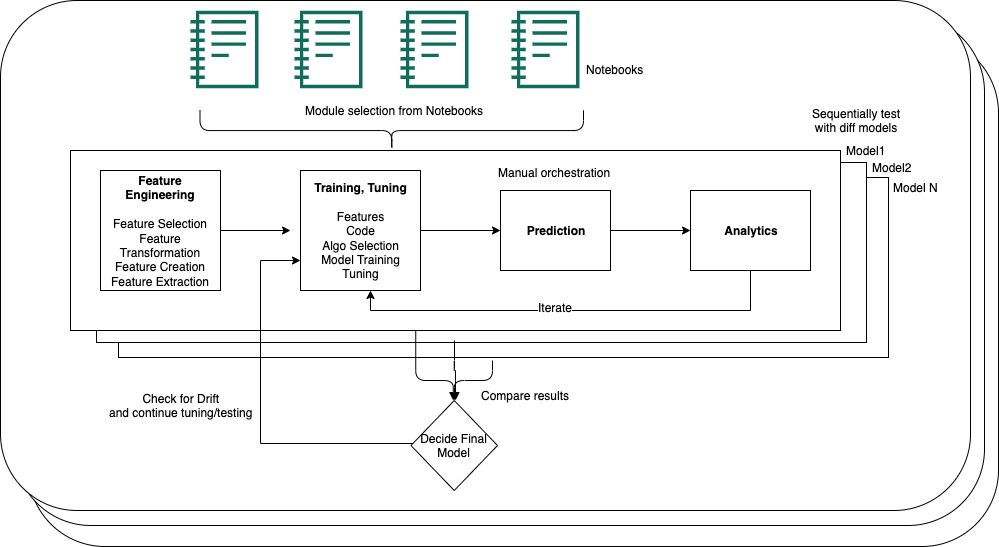

While the initial R&D proved successful, scaling posed a challenge. OCX and Infogain’s ML development involved multiple steps: feature engineering, model training, prediction, and the generation of analytics. The code for these modules resided in multiple notebooks, and running the notebooks was manual, with no orchestration tool in place. The OCX-Infogain team spent 6 weeks on model development for every new customer because libraries couldn’t be reused. Given that cost, the team needed an automated and scalable solution that operated as a single platform using unique configurations for each of their customers.

The following architecture diagram depicts OCX’s initial ML model development and update processes.

Solution overview

To simplify the ML process, the OCX-Infogain team worked with the AWS account team to develop a custom declarative ML framework to replace all repetitive code. This reduced the need to develop new low-level ML code. New libraries could be reused for multiple customers by configuring the data appropriately for each customer through YAML files.
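To make the declarative idea concrete, here is a minimal sketch of shared library code driven by per-customer settings. It is not OCX's framework: the customer names, keys, and the `PipelineConfig`, `load_config`, and `describe_pipeline` helpers are all hypothetical, and the settings are written as a Python dictionary standing in for what a parsed YAML file might contain.

```python
from dataclasses import dataclass

# Hypothetical per-customer settings, shaped as they might look after a
# YAML file has been parsed into plain dictionaries and lists.
CUSTOMER_CONFIGS = {
    "customer_a": {
        "target": "nps_score",
        "features": ["tickets_opened", "days_to_resolve"],
        "algorithms": ["xgboost", "linear"],
    },
    "customer_b": {
        "target": "renewal_score",
        "features": ["logins_per_week"],
        "algorithms": ["xgboost"],
    },
}

REQUIRED_KEYS = {"target", "features", "algorithms"}


@dataclass
class PipelineConfig:
    customer: str
    target: str
    features: list
    algorithms: list


def load_config(customer: str, raw: dict) -> PipelineConfig:
    """Validate one customer's settings and wrap them in a typed object."""
    missing = REQUIRED_KEYS - raw.keys()
    if missing:
        raise ValueError(f"{customer}: missing keys {sorted(missing)}")
    return PipelineConfig(
        customer=customer,
        target=raw["target"],
        features=list(raw["features"]),
        algorithms=list(raw["algorithms"]),
    )


def describe_pipeline(cfg: PipelineConfig) -> list:
    """Shared code path: the same steps for every customer, driven by config."""
    steps = [f"engineer:{name}" for name in cfg.features]
    steps += [f"train:{algo}:{cfg.target}" for algo in cfg.algorithms]
    return steps


for name, raw in CUSTOMER_CONFIGS.items():
    print(name, describe_pipeline(load_config(name, raw)))
```

Onboarding another customer in this sketch means adding one more configuration entry; the library functions stay unchanged.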

While this high-level code continues to be developed initially in Studio using Jupyter notebooks, it’s then converted to Python (.py files), and the SageMaker platform is used to build a Docker image with BYO (bring your own) containers. The Docker images are then pushed to Amazon Elastic Container Registry (Amazon ECR) as a preparatory step. Finally, the code is run using Step Functions.

The AWS account team recommended the Step Functions Data Science SDK and SageMaker Experiments to automate feature engineering, model training, and model deployment. The Step Functions Data Science SDK was used to generate the step functions programmatically. The OCX-Infogain team learned how to use features like Parallel and Map within Step Functions to orchestrate a large number of training and processing jobs in parallel, which reduces the runtime. This was combined with Experiments, which functions as an analytics tool, tracking multiple ML candidates and hyperparameter tuning variations. These built-in analytics allowed the OCX-Infogain team to compare multiple metrics at runtime and identify best-performing models on the fly.
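As an illustration of generating a state machine programmatically, the sketch below builds an Amazon States Language definition with a Map state that fans out one training task per candidate. It deliberately does not use the Step Functions Data Science SDK; it writes the definition as a plain dictionary so it runs anywhere. The state names, the `candidates` and `job_name` input fields, and the `build_training_state_machine` helper are assumptions, and the Task parameters are incomplete on purpose.

```python
import json


def build_training_state_machine(max_concurrency: int = 10) -> dict:
    """Return an Amazon States Language definition as a plain dict."""
    train_one_candidate = {
        "Type": "Task",
        # Service integration that starts a SageMaker training job and
        # waits for it to finish before the state completes.
        "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
        "Parameters": {
            # Hypothetical input field. A real job also needs the other
            # CreateTrainingJob parameters (algorithm, role, output, and
            # resource settings), which are omitted from this sketch.
            "TrainingJobName.$": "$.job_name",
        },
        "End": True,
    }
    return {
        "Comment": "Sketch: train every candidate model in parallel",
        "StartAt": "TrainAllCandidates",
        "States": {
            "TrainAllCandidates": {
                "Type": "Map",
                # Hypothetical input: a list with one entry per candidate.
                "ItemsPath": "$.candidates",
                "MaxConcurrency": max_concurrency,
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    "StartAt": "TrainOneCandidate",
                    "States": {"TrainOneCandidate": train_one_candidate},
                },
                "End": True,
            }
        },
    }


definition = build_training_state_machine(max_concurrency=5)
definition_json = json.dumps(definition, indent=2)
print(definition_json)
```

A Map state fits when the number of candidates is only known at run time, while a Parallel state fits a fixed set of independent branches, such as feature engineering running alongside another task.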

The following architecture diagram shows the MLOps pipeline developed for the model creation cycle.

The Step Functions Data Science SDK is used to analyze and compare multiple model training algorithms. The state machine runs multiple models in parallel, and each model output is logged into Experiments. When model training is complete, the results of multiple experiments are retrieved and compared using the SDK. The following screenshots show how the best-performing model is selected for each stage.

The following are the high-level steps of the ML lifecycle:

- ML developers push their code into libraries on the GitLab repository when development in Studio is complete.

- AWS CodePipeline is used to check out the appropriate code from the GitLab repository.

- A Docker image is prepared using this code and pushed to Amazon ECR for serverless computing.

- Step Functions is used to run steps using Amazon SageMaker Processing jobs. Here, multiple independent tasks are run in parallel:

  - Feature engineering is performed, and the features are stored in the feature store.

  - Model training is run, with multiple algorithms and several combinations of hyperparameters, using the YAML configuration file.

  - The training step function is designed to have heavy parallelism. The models for each journey stage are run in parallel. This is depicted in the following diagram.

- Model results are then logged in Experiments. The best-performing model is selected and pushed to the model registry.

- Predictions are made using the best-performing models for each CX analytic we generate.

- Hundreds of analytics are generated and then handed off for publication in a data warehouse hosted on AWS.
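Two of the steps above, training several algorithms with several hyperparameter combinations and then selecting the best-performing model per journey stage, can be sketched in plain Python. This is an illustration under assumptions, not the team's code: the grid layout, the `auc` metric name, the stage names, and the `expand_candidates` and `select_best` helpers are hypothetical, and the scores are made-up placeholders rather than results from the post.

```python
from itertools import product


def expand_candidates(algorithms: dict) -> list:
    """Turn {algorithm: {param: [values]}} into one dict per training run."""
    candidates = []
    for algo, grid in algorithms.items():
        names = sorted(grid)
        # Cartesian product of every value list for this algorithm.
        for values in product(*(grid[name] for name in names)):
            candidates.append({"algorithm": algo, "params": dict(zip(names, values))})
    return candidates


def select_best(results: list, metric: str = "auc") -> dict:
    """Keep the highest-scoring run for each journey stage."""
    best = {}
    for run in results:
        stage = run["stage"]
        if stage not in best or run[metric] > best[stage][metric]:
            best[stage] = run
    return best


# Hypothetical grid, as it might be read from a configuration file.
grid = {
    "xgboost": {"max_depth": [3, 5], "eta": [0.1, 0.3]},
    "linear": {"l2": [0.0, 1.0]},
}
candidates = expand_candidates(grid)
print(len(candidates))  # 4 xgboost runs + 2 linear runs = 6

# Placeholder scores standing in for metrics logged during training.
results = [
    {"stage": "onboarding", "algorithm": "xgboost", "auc": 0.81},
    {"stage": "onboarding", "algorithm": "linear", "auc": 0.77},
    {"stage": "support", "algorithm": "linear", "auc": 0.74},
    {"stage": "support", "algorithm": "xgboost", "auc": 0.79},
]
for stage, run in select_best(results).items():
    print(stage, run["algorithm"], run["auc"])
```

Because each candidate is an independent dictionary, a list like `candidates` is the kind of input that can be handed to parallel training jobs, one item per job.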

Outcomes

With this approach, OCX Cognition has automated and accelerated their ML processing. Replacing labor-intensive manual processes and highly repetitive development work has reduced the cost per customer by over 60%. It also allows OCX to scale their software business by tripling overall capacity and doubling capacity for simultaneous onboarding of customers. Automating their ML processing unlocks new potential for OCX to grow through customer acquisition. Using SageMaker Experiments to track model training is critical to identifying the best set of models to use and take to production. For their customers, this new solution provides not only an 8% improvement in ML performance, but also a 63% improvement in time to value. New customer onboarding and the initial model generation have improved from 6 weeks to 2 weeks. Once the models are built and in place, OCX continuously regenerates the CX analytics as new input data arrives from the customer. These update cycles have improved from 4 days to near-real time.

Conclusion

In this post, we showed how OCX Cognition and Infogain used Step Functions, the Step Functions Data Science SDK for Python, and SageMaker Experiments together with SageMaker Studio to reduce time to value for the OCX-Infogain team in developing and updating CX analytics models for their customers.

To get started with these services, refer to Amazon SageMaker, AWS Step Functions Data Science Python SDK, AWS Step Functions, and Manage Machine Learning with Amazon SageMaker Experiments.

About the Authors

Brian Curry is currently a founder and Head of Products at OCX Cognition, where we are building a machine learning platform for customer analytics. Brian has more than a decade of experience leading cloud solutions and design-centered product organizations.


Sandhya M N is part of Infogain and leads the Data Science team for OCX. She is a seasoned software development leader with extensive experience across multiple technologies and industry domains. She is passionate about staying up to date with technology and using it to deliver business value to customers.


Prashanth Ganapathy is a Senior Solutions Architect in the Small Medium Business (SMB) segment at AWS. He enjoys learning about AWS AI/ML services and helping customers meet their business outcomes by building solutions for them. Outside of work, Prashanth enjoys photography, travel, and trying out different cuisines.


Sabha Parameswaran is a Senior Solutions Architect at AWS with over 20 years of deep experience in enterprise application integration, microservices, containers and distributed systems performance tuning, prototyping, and more. He is based out of the San Francisco Bay Area. At AWS, he is focused on helping customers in their cloud journey and is also actively involved in microservices and serverless-based architecture and frameworks.


Vaishnavi Ganesan is a Solutions Architect at AWS based in the San Francisco Bay Area. She is focused on helping Commercial Segment customers on their cloud journey and is passionate about security in the cloud. Outside of work, Vaishnavi enjoys traveling, hiking, and trying out various coffee roasters.


Ajay Swaminathan is an Account Manager II at AWS. He is an advocate for Commercial Segment customers, providing the right financial, business innovation, and technical resources in accordance with his customers’ goals. Outside of work, Ajay is passionate about skiing, dubstep and drum and bass music, and basketball.
