Get insights in your person’s search conduct from Amazon Kendra utilizing an ML-powered serverless stack

Amazon Kendra is a extremely correct and clever search service that permits customers to look unstructured and structured knowledge utilizing pure language processing (NLP) and superior search algorithms. With Amazon Kendra, you will discover related solutions to your questions rapidly, with out sifting by paperwork. Nonetheless, simply enabling end-users to get the solutions to their queries will not be sufficient in in the present day’s world. We have to consistently perceive the end-user’s search conduct, reminiscent of what are the highest queries for the month, have any new question that queries appeared lately, what share of queries obtained instantaneous reply, and extra.

Though the Amazon Kendra console comes outfitted with an analytics dashboard, lots of our clients choose to construct a customized dashboard. This lets you create distinctive views and filters, and grants administration groups entry to a streamlined, one-click dashboard without having to log in to the AWS Management Console and seek for the suitable dashboard. As well as, you possibly can improve your dashboard’s performance by including preprocessing logic, reminiscent of grouping related high queries. For instance, you might need to group related queries reminiscent of “What’s Amazon Kendra” and “What’s the objective of Amazon Kendra” collectively so that you could successfully analyze the metrics and achieve a deeper understanding of the information. Such grouping of comparable queries could be accomplished utilizing the idea of semantic similarity.

This submit discusses an end-to-end answer to implement this use case, which incorporates utilizing AWS Lambda to extract the summarized metrics from Amazon Kendra, calculating the semantic similarity rating utilizing a Hugging Face mannequin hosted on an Amazon SageMaker Serverless Inference endpoint to group related queries, and creating an Amazon QuickSight dashboard to show the person insights successfully.

Answer overview

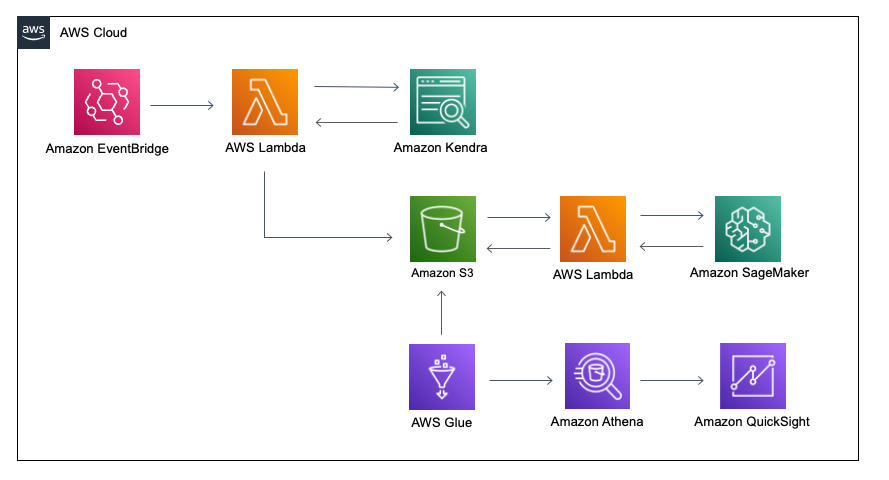

The next diagram illustrates our answer structure.

The high-level workflow is as follows:

- An Amazon EventBridge scheduler triggers Lambda features as soon as a month to extract final month’s search metrics from Amazon Kendra.

- The Lambda features add the search metrics to an Amazon Simple Storage Service (Amazon S3) bucket.

- The Lambda features group related queries within the uploaded file based mostly on the semantic similarity rating by Hugging Face mannequin hosted on a SageMaker inference endpoint.

- An AWS Glue crawler creates or updates the AWS Glue Information Catalog from the uploaded file within the S3 bucket for an Amazon Athena desk.

- QuickSight makes use of the Athena desk dataset to create analyses and dashboards.

For this answer, we deploy the infrastructure assets to create the QuickSight evaluation and dashboard utilizing an AWS CloudFormation template.

Conditions

Full the next prerequisite steps:

- When you’re a first-time person of QuickSight in your AWS account, sign up for QuickSight.

- Get the Amazon Kendra index ID that you really want visualize your search metrics from Amazon Kendra. You’ll have to use the search engine for some time (for instance, just a few weeks) to have the ability to extract a enough quantity of knowledge to make use of to extract some insights.

- Clone the GitHub repo to create the container picture:

- Create an Amazon Elastic Container Registry (Amazon ECR) repository in us-east-1 and push the container picture created by the downloaded Dockerfile. For directions, consult with Creating a private repository.

- Run the next instructions within the listing of your native setting to create and push the container picture to the ECR repository you created:

Deploy the CloudFormation template

Full the next steps to deploy the CloudFormation template:

- Obtain the CloudFormation template kendrablog-sam-template.yml.

- On the AWS CloudFormation console, create a brand new stack.

Use the us-east-1 Area for this deployment.

- Add the template instantly or by your most well-liked S3 bucket.

- For KendraIndex, enter the Amazon Kendra index ID from the stipulations.

- For LambdaECRRepository, enter the ECR repository from the stipulations.

- For QSIdentityRegion, enter the identification Area of QuickSight. The identification Area aligns along with your Area choice once you signed up your QuickSight subscription.

- For QSUserDefaultPassward, enter the default password to make use of in your QuickSight person.

You’ll be prompted to vary this password once you first register to the QuickSight console.

- For QSUserEmail, enter the e-mail handle to make use of for the QuickSight person.

- Select Subsequent.

- Go away different settings as default and select Subsequent.

- Choose the acknowledgement test bins and select Create stack.

When the deployment is full, you possibly can affirm all of the generated assets on the stack’s Assets tab on the AWS CloudFormation console.

We stroll by a few of the key parts of this answer within the following sections.

Get insights from Amazon Kendra search metrics

We are able to get the metrics knowledge from Amazon Kendra utilizing the GetSnapshots API. There are 10 metrics for analyzing what info the customers are looking for: 5 metrics embody traits knowledge for us to search for patterns over time, and 5 metrics use only a snapshot or aggregated knowledge. The metrics with the day by day pattern knowledge are clickthrough fee, zero click on fee, zero search outcomes fee, instantaneous reply fee, and whole queries. The metrics with aggregated knowledge are high queries, high queries with zero clicks, high queries with zero search outcomes, high clicked on paperwork, and whole paperwork.

We use Lambda features to get the search metrics knowledge from Amazon Kendra. The features extract the metrics from Amazon Kendra and retailer them in Amazon S3. You’ll find the features within the GitHub repo.

Create a SageMaker serverless endpoint and host a Hugging Face mannequin to calculate semantic similarity

After the metrics are extracted, the following step is to finish the preprocessing for the aggregated metrics. The preprocessing step checks the semantic similarity between the question texts and teams them collectively to point out the entire counts for the same queries. For instance, if there are three queries of “What’s S3” and two queries of “What’s the objective of S3,” it should group them collectively and present that there are 5 queries of “What’s S3” or “What’s the objective of S3.”

To calculate semantic similarity, we use a mannequin from the Hugging Face mannequin library. Hugging Face is a well-liked open-source platform that gives a variety of NLP fashions, together with transformers, which have been skilled on quite a lot of NLP duties. These fashions could be simply built-in with SageMaker and benefit from its wealthy coaching and deployment choices. The Hugging Face Deep Studying Containers (DLCs), which comes pre-packaged with the required libraries, make it straightforward to deploy the mannequin in SageMaker with simply few strains of code. In our use case, we first get the vector embedding utilizing the Hugging Face pre-trained mannequin flax-sentence-embeddings/all_datasets_v4_MiniLM-L6, after which use cosine similarity to calculate the similarity rating between the vector embeddings.

To get the vector embedding from the Hugging Face mannequin, we create a serverless endpoint in SageMaker. Serverless endpoints assist save price since you solely pay for the period of time the inference runs. To create a serverless endpoint, you first outline the max concurrent invocations for a single endpoint, often called MaxConcurrency, and the reminiscence measurement. The reminiscence sizes you possibly can select are 1024 MB, 2048 MB, 3072 MB, 4096 MB, 5120 MB, or 6144 MB. SageMaker Serverless Inference auto-assigns compute assets proportional to the reminiscence you choose.

We additionally have to pad one of many vectors with zeros in order that the scale of the 2 vectors matches with one another and we will calculate the cosine similarity as a dot product of the 2 vectors. We are able to set a threshold for cosine similarity (for instance, 0.6) and if the similarity rating is greater than the brink, we will group the queries collectively. After the queries are grouped, we will perceive the highest queries higher. We put all this logic in a Lambda operate and deploy the operate utilizing a container picture. The container picture comprises codes to invoke the SageMaker Serverless Inference endpoints, and vital Python libraries to run the Lambda operate reminiscent of NumPy, pandas, and scikit-learn. The next file is an instance of the output from the Lambda operate: HF_QUERIES_BY_COUNT.csv.

Create a dashboard utilizing QuickSight

After you may have collected the metrics and preprocessed the aggregated metrics, you possibly can visualize the information to get the enterprise insights. For this answer, we use QuickSight for the enterprise intelligence (BI) dashboard and Athena as the information supply for QuickSight.

QuickSight is a completely managed enterprise-grade BI service that you need to use to create analyses and dashboards to ship easy-to-understand insights. You possibly can select numerous sorts of charts and graphs to ship the enterprise insights successfully by a QuickSight dashboard. QuickSight connects to your knowledge and combines knowledge from many alternative sources, reminiscent of Amazon S3 and Athena. For our answer, we use Athena as the information supply.

Athena is an interactive question service that makes it straightforward to research knowledge instantly in Amazon S3 utilizing commonplace SQL. You should utilize Athena queries to create your customized views from knowledge saved in an S3 bucket earlier than visualizing it with QuickSight. This answer makes use of an AWS Glue crawler to create the AWS Glue Information Catalog for the Athena desk from the recordsdata within the S3 bucket.

The CloudFormation template runs the primary crawler throughout useful resource creation. The next screenshot exhibits the Information Catalog schema.

The next screenshot exhibits the Athena desk pattern you will note after the deployment.

Entry permission to the AWS Glue databases and tables are managed by AWS Lake Formation. The CloudFormation template already hooked up the required Lake Formation permissions to the generated AWS Identity and Access Management (IAM) person for QuickSight. When you see permission points along with your IAM principal, grant at the least the SELECT permission to the AWS Glue tables to your IAM principal in Lake Formation. You’ll find the AWS Glue database identify on the Outputs tab of the CloudFormation stack. For extra info, consult with Granting Data Catalog permissions using the named resource method.

Now we have accomplished the information preparation step. The final step is to create an evaluation and dashboard utilizing QuickSight.

- Sign in to the QuickSight console with the QuickSight person that the CloudFormation template generated.

- Within the navigation pane, select Datasets.

- Select Dataset.

- Select Athena as the information supply.

- Enter a reputation for Information Supply identify and select

kendrablogfor Athena workgroup. - Select Create knowledge supply.

- Select

AWSDataCatalogfor Catalog andkendra-search-analytics-databasefor Database, and choose one of many tables you need to use for evaluation. - Select Choose.

- Choose Import to SPICE for faster analytics and select Edit/Preview knowledge.

- Optionally, select Add knowledge to hitch extra knowledge.

- You can too modify the information schema, reminiscent of column identify or knowledge kind, and be a part of a number of datasets, if wanted.

- Select Publish & Visualize to maneuver on to creating visuals.

- Select your visible kind and set dimensions to create your visible.

- You possibly can optionally configure extra options for the chart utilizing the navigation pane, reminiscent of filters, actions, and themes.

The next screenshots present a pattern QuickSight dashboard in your reference. “Search Queries group by related queries” within the screenshot exhibits how the search queries been consolidated utilizing semantic similarity.

Clear up

Delete the QuickSight assets (dashboard, evaluation, and dataset) that you simply created and infrastructure assets that AWS CloudFormation generated to keep away from undesirable costs. You possibly can delete the infrastructure useful resource and QuickSight person that was created by the stack by way of the AWS CloudFormation console.

Conclusion

This submit confirmed an end-to-end answer to get enterprise insights from Amazon Kendra. The answer offered the serverless stack to deploy a customized dashboard for Amazon Kendra search analytics metrics utilizing Lambda and QuickSight. We additionally solved widespread challenges regarding analyzing related queries utilizing a SageMaker Hugging Face mannequin. You could possibly additional improve the dashboard by including extra insights reminiscent of the important thing phrases or the named entities within the queries utilizing Amazon Comprehend and displaying these within the dashboard. Please check out the answer and tell us your suggestions.

Concerning the Authors

Genta Watanabe is a Senior Technical Account Supervisor at Amazon Internet Providers. He spends his time working with strategic automotive clients to assist them obtain operational excellence. His areas of curiosity are machine studying and synthetic intelligence. In his spare time, Genta enjoys spending time along with his household and touring.

Genta Watanabe is a Senior Technical Account Supervisor at Amazon Internet Providers. He spends his time working with strategic automotive clients to assist them obtain operational excellence. His areas of curiosity are machine studying and synthetic intelligence. In his spare time, Genta enjoys spending time along with his household and touring.

Abhijit Kalita is a Senior AI/ML Evangelist at Amazon Internet Providers. He spends his time working with public sector companions in Asia Pacific, enabling them on their AI/ML workloads. He has a few years of expertise in knowledge analytics, AI, and machine studying throughout totally different verticals reminiscent of automotive, semiconductor manufacturing, and monetary companies. His areas of curiosity are machine studying and synthetic intelligence, particularly NLP and laptop imaginative and prescient. In his spare time, Abhijit enjoys spending time along with his household, biking, and enjoying along with his little hamster.

Abhijit Kalita is a Senior AI/ML Evangelist at Amazon Internet Providers. He spends his time working with public sector companions in Asia Pacific, enabling them on their AI/ML workloads. He has a few years of expertise in knowledge analytics, AI, and machine studying throughout totally different verticals reminiscent of automotive, semiconductor manufacturing, and monetary companies. His areas of curiosity are machine studying and synthetic intelligence, particularly NLP and laptop imaginative and prescient. In his spare time, Abhijit enjoys spending time along with his household, biking, and enjoying along with his little hamster.