Differentially private clustering for large-scale datasets – Google AI Blog

Clustering is a central problem in unsupervised machine learning (ML) with many applications across domains in both industry and academic research more broadly. At its core, clustering consists of the following problem: given a set of data elements, the goal is to partition the data elements into groups such that similar objects are in the same group, while dissimilar objects are in different groups. This problem has been studied in math, computer science, operations research and statistics for more than 60 years in its myriad variants. Two common forms of clustering are metric clustering, in which the elements are points in a metric space, like in the k-means problem, and graph clustering, where the elements are nodes of a graph whose edges represent similarity among them.

In the k-means clustering problem, we are given a set of points in a metric space with the objective to identify k representative points, called centers (here depicted as triangles), so as to minimize the sum of the squared distances from each point to its closest center. Source, rights: CC-BY-SA-4.0
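To make the objective concrete, here is a minimal sketch of how the k-means cost of a candidate set of centers could be computed. The function names and the toy data are illustrative assumptions and do not come from the released code.

```python
from typing import Sequence

Point = Sequence[float]


def squared_distance(p: Point, q: Point) -> float:
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_cost(points: Sequence[Point], centers: Sequence[Point]) -> float:
    """Sum over all points of the squared distance to the closest center."""
    return sum(min(squared_distance(p, c) for c in centers) for p in points)


# Toy example (hypothetical data): two well-separated groups, k = 2.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
centers = [(0.0, 1.0), (10.0, 1.0)]
print(kmeans_cost(points, centers))  # 4.0
```

Each point lies at distance 1 from its closest center, so the four squared distances sum to 4. A k-means algorithm searches for the k centers that make this quantity as small as possible.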

Despite the extensive literature on algorithm design for clustering, few practical works have focused on rigorously protecting the user’s privacy during clustering. When clustering is applied to personal data (e.g., the queries a user has made), it is necessary to consider the privacy implications of using a clustering solution in a real system and how much information the output solution reveals about the input data.

To ensure privacy in a rigorous sense, one solution is to develop differentially private (DP) clustering algorithms. These algorithms ensure that the output of the clustering does not reveal private information about a specific data element (e.g., whether a user has made a given query) or sensitive data about the input graph (e.g., a relationship in a social network). Given the importance of privacy protections in unsupervised machine learning, in recent years Google has invested in research on the theory and practice of differentially private metric or graph clustering, and on differential privacy in a variety of contexts, e.g., heatmaps or tools to design DP algorithms.
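For readers who want the formal statement, the standard textbook definition of differential privacy can be written as follows. The symbols are notation introduced here for illustration, not taken from the post: a randomized algorithm $\mathcal{A}$ is $(\varepsilon, \delta)$-differentially private if, for every pair of neighboring inputs $D$ and $D'$ and every set of outputs $S$,

```latex
\Pr[\mathcal{A}(D) \in S] \;\le\; e^{\varepsilon} \cdot \Pr[\mathcal{A}(D') \in S] + \delta
```

In metric clustering, neighboring inputs typically differ in a single data element. Under edge privacy for graphs, they differ in a single edge. Smaller values of $\varepsilon$ and $\delta$ correspond to stronger privacy.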

Today we are excited to announce two important updates: 1) a new differentially private algorithm for hierarchical graph clustering, which we will be presenting at ICML 2023, and 2) the open-source release of the code of a scalable differentially private k-means algorithm. This code brings differentially private k-means clustering to large-scale datasets using distributed computing. Here, we will also discuss our work on clustering technology for a recent launch in the health domain for informing public health authorities.

Differentially private hierarchical clustering

Hierarchical clustering is a popular clustering approach that consists of recursively partitioning a dataset into clusters at an increasingly finer granularity. A well-known example of hierarchical clustering is the phylogenetic tree in biology, in which all life on Earth is partitioned into finer and finer groups (e.g., kingdom, phylum, class, order, etc.). A hierarchical clustering algorithm receives as input a graph representing the similarity of entities and learns such recursive partitions in an unsupervised way. Yet at the time of our research no algorithm was known to compute hierarchical clustering of a graph with edge privacy, i.e., preserving the privacy of the vertex interactions.

In “Differentially-Private Hierarchical Clustering with Provable Approximation Guarantees”, we consider how well the problem can be approximated in a DP context and establish firm upper and lower bounds on the privacy guarantee. We design an approximation algorithm (the first of its kind) with a polynomial running time that achieves both an additive error that scales with the number of nodes n (of order n^2.5) and a multiplicative approximation of O(log^½ n), with the multiplicative error identical to the non-private setting. We further provide a new lower bound on the additive error (of order n^2) for any private algorithm (irrespective of its running time) and provide an exponential-time algorithm that matches this lower bound. Moreover, our paper includes a beyond-worst-case analysis focusing on the hierarchical stochastic block model, a standard random graph model that exhibits a natural hierarchical clustering structure, and introduces a private algorithm that returns a solution with an additive cost over the optimum that is negligible for larger and larger graphs, again matching the non-private state-of-the-art approaches. We believe this work expands the understanding of privacy-preserving algorithms on graph data and will enable new applications in such settings.

Large-scale differentially private clustering

We now switch gears and discuss our work on metric space clustering. Most prior work in DP metric clustering has focused on improving the approximation guarantees of the algorithms on the k-means objective, leaving scalability questions out of the picture. Indeed, it is not clear how efficient non-private algorithms such as k-means++ or k-means// can be made differentially private without drastically sacrificing either the approximation guarantees or the scalability. On the other hand, both scalability and privacy are of primary importance at Google. For this reason, we recently published multiple papers that address the problem of designing efficient differentially private algorithms for clustering that can scale to massive datasets. Our goal is, moreover, to provide scalability to large-scale input datasets, even when the target number of centers, k, is large.

We work in the massively parallel computation (MPC) model, which is a computation model representative of modern distributed computation architectures. The model consists of several machines, each holding only part of the input data, that work together with the goal of solving a global problem while minimizing the amount of communication between machines. We present a differentially private constant factor approximation algorithm for k-means that only requires a constant number of rounds of synchronization. Our algorithm builds upon our previous work on the problem (with code available here), which was the first differentially private clustering algorithm with provable approximation guarantees that can work in the MPC model.
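To give a feel for the MPC setting, the sketch below simulates a single synchronization round in plain Python. Each "machine" summarizes its own shard of points, and a coordinator merges the summaries, adds Gaussian noise, and updates the centers. This is a generic noisy Lloyd-style step shown for illustration only. It is not the constant-factor algorithm from the paper, and the function names, the noise scale `sigma`, and the assumption that points lie in a bounded region (needed to bound sensitivity) are all hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]
Summary = List[Tuple[int, List[float]]]  # per center: (count, coordinate sums)


def local_summary(shard: Sequence[Point], centers: Sequence[Point]) -> Summary:
    """Runs on one machine: assign local points to their nearest center."""
    dim = len(centers[0])
    counts = [0] * len(centers)
    sums = [[0.0] * dim for _ in centers]
    for p in shard:
        j = min(range(len(centers)),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centers[i])))
        counts[j] += 1
        for d in range(dim):
            sums[j][d] += p[d]
    return list(zip(counts, sums))


def merge_and_update(summaries: Sequence[Summary], centers: Sequence[Point],
                     sigma: float, rng: random.Random) -> List[Point]:
    """Runs on the coordinator: merge summaries, add noise, recompute centers."""
    new_centers: List[Point] = []
    for j, old in enumerate(centers):
        count = sum(s[j][0] for s in summaries) + rng.gauss(0.0, sigma)
        if count < 1.0:  # too few (noisy) points: keep the old center
            new_centers.append(tuple(old))
            continue
        totals = [sum(s[j][1][d] for s in summaries) + rng.gauss(0.0, sigma)
                  for d in range(len(old))]
        new_centers.append(tuple(t / count for t in totals))
    return new_centers


# Two simulated machines, each holding part of the input (toy data).
shards = [[(0.0, 0.0), (0.0, 2.0)], [(10.0, 0.0), (10.0, 2.0)]]
centers = [(1.0, 1.0), (9.0, 1.0)]
summaries = [local_summary(shard, centers) for shard in shards]
print(merge_and_update(summaries, centers, sigma=0.1, rng=random.Random(0)))
```

Only the small per-center summaries cross machine boundaries, never the raw points, which is what keeps communication low. A real DP algorithm would also need to calibrate `sigma` to the privacy budget and the data bounds.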

The DP constant factor approximation algorithm drastically improves on the previous work using a two-phase approach. In an initial phase it computes a crude approximation to “seed” the second phase, which consists of a more sophisticated distributed algorithm. Equipped with the first-step approximation, the second phase relies on results from the coreset literature to subsample a relevant set of input points and find a good differentially private clustering solution for the input points. We then prove that this solution generalizes with approximately the same guarantee to the entire input.

Vaccination search insights via DP clustering

We then apply these advances in differentially private clustering to real-world applications. One example is our application of our differentially private clustering solution for publishing COVID vaccine-related queries, while providing strong privacy protections for the users.

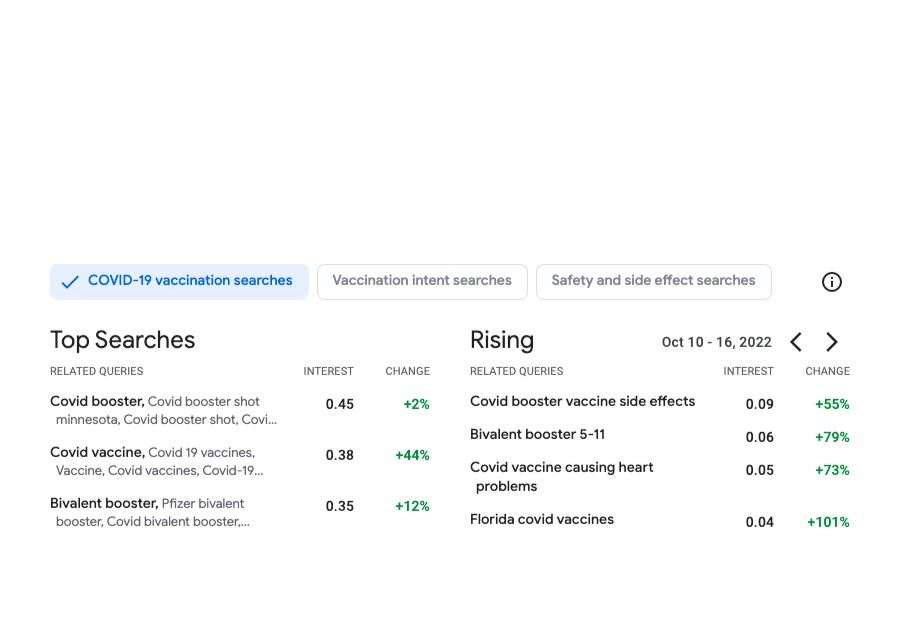

The goal of Vaccination Search Insights (VSI) is to help public health decision makers (health authorities, government agencies and nonprofits) identify and respond to communities’ information needs regarding COVID vaccines. In order to achieve this, the tool allows users to explore at different geolocation granularities (zip-code, county and state level in the U.S.) the top themes searched by users regarding COVID queries. In particular, the tool visualizes statistics on trending queries rising in interest in a given locale and time.

To better help identify the themes of the trending searches, the tool clusters the search queries based on their semantic similarity. This is done by applying a custom-designed k-means-based algorithm run over search data that has been anonymized using the DP Gaussian mechanism to add noise and remove low-count queries (thus resulting in a differentially private clustering). The approach ensures strong differential privacy guarantees for the protection of the user data.
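The noise-and-threshold step can be illustrated with a short sketch. It uses the classical Gaussian-mechanism calibration (valid for 0 < ε < 1) and assumes each user contributes to at most one query count, so the L2 sensitivity is 1. The function names, the example counts, and the threshold value are hypothetical; they are not the parameters used in VSI, and the threshold here is not calibrated for a formal guarantee.

```python
import math
import random
from typing import Dict, Optional


def gaussian_sigma(epsilon: float, delta: float, l2_sensitivity: float) -> float:
    """Classical Gaussian-mechanism noise scale (assumes 0 < epsilon < 1)."""
    return l2_sensitivity * math.sqrt(2.0 * math.log(1.25 / delta)) / epsilon


def noisy_thresholded_counts(counts: Dict[str, int], sigma: float,
                             threshold: float,
                             rng: Optional[random.Random] = None) -> Dict[str, float]:
    """Add Gaussian noise to every count and drop queries below the threshold."""
    rng = rng or random.Random()
    released: Dict[str, float] = {}
    for query, count in counts.items():
        noisy = count + rng.gauss(0.0, sigma)
        if noisy >= threshold:
            released[query] = noisy
    return released


# Hypothetical query counts for one locale and time window.
counts = {"vaccine near me": 1200, "vaccine side effects": 450, "rare query": 3}
sigma = gaussian_sigma(epsilon=0.5, delta=1e-6, l2_sensitivity=1.0)
released = noisy_thresholded_counts(counts, sigma, threshold=50.0,
                                    rng=random.Random(0))
print(sorted(released))
```

Only the queries that survive thresholding would then be passed on to the clustering step, so rare queries that could identify an individual never reach the output.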

This tool provided fine-grained data on COVID vaccine perception in the population at unprecedented scales of granularity, something that is especially relevant to understand the needs of the marginalized communities disproportionately affected by COVID. This project highlights the impact of our investment in research in differential privacy and unsupervised ML methods. We are looking to other important areas where we can apply these clustering techniques to help guide decision making around global health challenges, like search queries on climate change-related challenges such as air quality or extreme heat.

Acknowledgements

We thank our co-authors Silvio Lattanzi, Vahab Mirrokni, Andres Munoz Medina, Shyam Narayanan, David Saulpic, Chris Schwiegelshohn, Sergei Vassilvitskii, Peilin Zhong and our colleagues from the Health AI team that made the VSI launch possible: Shailesh Bavadekar, Adam Boulanger, Tague Griffith, Mansi Kansal, Chaitanya Kamath, Akim Kumok, Yael Mayer, Tomer Shekel, Megan Shum, Charlotte Stanton, Mimi Sun, Swapnil Vispute, and Mark Young.

For more information on the Graph Mining team (part of Algorithm and Optimization), visit our pages.