🤗Hugging Face Transformers Agent | by Sophia Yang | May, 2023

Just two days ago, 🤗Hugging Face released Transformers Agent, an agent that leverages natural language to choose a tool from a curated collection of tools and accomplish various tasks. Does it sound familiar? Yes, it does, because it's a lot like 🦜🔗LangChain Tools and Agents. In this blog post, I'll cover what Transformers Agent is and how it compares with 🦜🔗LangChain Agent.

You can try out the code in this colab (provided by Hugging Face).

In short, it provides a natural language API on top of transformers: we define a set of curated tools and design an agent to interpret natural language and to use these tools.

I can imagine engineers at HuggingFace being like: We have so many amazing models hosted on HuggingFace. Can we integrate those with LLMs? Can we use LLMs to decide which model to use, write code, run code, and generate results? Essentially, nobody needs to learn all the complicated task-specific models anymore. Just give it a task, and LLMs (agents) will do everything for us.

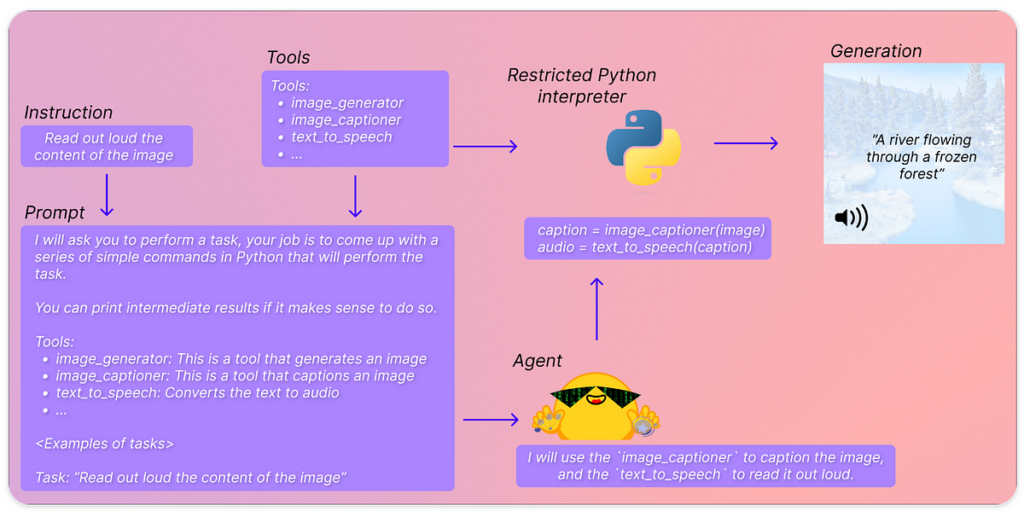

Here are the steps:

- Instruction: the prompt users provide.

- Prompt: a prompt template with the specific instruction added, which lists multiple tools to use.

- Tools: a curated list of transformers models, e.g., Flan-T5 for question answering.

- Agent: an LLM that interprets the question, decides which tools to use, and generates code to perform the task with the tools.

- Restricted Python interpreter: executes the generated Python code.
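To make the five steps concrete, here is a toy, dependency-free sketch of the flow. Everything in it is hypothetical: `TOOLS`, `build_prompt`, `fake_llm`, and `run_agent` are illustrative names, the "LLM" returns canned code, and the "tools" are plain Python functions rather than transformer models. It only shows how instruction, prompt, tools, agent, and interpreter fit together, not how the library implements them.

```python
# Toy sketch of the Transformers Agent flow (all names are hypothetical).

def translator(text: str, tgt_lang: str) -> str:
    """Stand-in tool: pretends to translate by tagging the text."""
    return f"[{tgt_lang}] {text}"

def summarizer(text: str) -> str:
    """Stand-in tool: keeps only the first sentence."""
    return text.split(".")[0] + "."

# Tools: name -> (description shown to the LLM, callable)
TOOLS = {
    "translator": ("Translates `text` into `tgt_lang`.", translator),
    "summarizer": ("Summarizes `text`.", summarizer),
}

PROMPT_TEMPLATE = """You have access to these tools:
{tool_list}

Task: "{instruction}"
Answer with Python code that only calls the tools above."""

def build_prompt(instruction: str) -> str:
    # Prompt: the template with the tool list and the user's instruction filled in
    tool_list = "\n".join(f"- {name}: {desc}" for name, (desc, _) in TOOLS.items())
    return PROMPT_TEMPLATE.format(tool_list=tool_list, instruction=instruction)

def fake_llm(prompt: str) -> str:
    # Agent: a real agent would send `prompt` to an LLM; this stub returns canned code
    return 'result = summarizer(text="Agents pick tools. They also write code.")'

def run_agent(instruction: str) -> str:
    code = fake_llm(build_prompt(instruction))
    # Interpreter: only the tools are visible and builtins are emptied.
    # This is NOT a real sandbox; it just illustrates restricting the namespace.
    namespace = {name: fn for name, (_, fn) in TOOLS.items()}
    exec(code, {"__builtins__": {}}, namespace)
    return namespace["result"]

print(run_agent("Summarize this text"))  # Agents pick tools.
```

In the real library, the agent step is a call to a hosted LLM (OpenAI, StarCoder, or OpenAssistant) and each tool wraps a model from the Hub.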

Step 1: Instantiate an agent.

Step 1 is to instantiate an agent. An agent is just an LLM, which can be an OpenAI model, a StarCoder model, or an OpenAssistant model.

The OpenAI model needs an OpenAI API key, and its usage is not free. We load the StarCoder model and the OpenAssistant model from the HuggingFace Hub, which requires a HuggingFace Hub API token and is free to use.

```python
from huggingface_hub import login
from transformers import HfAgent, OpenAiAgent

# OpenAI (requires an OpenAI API key; usage is billed)
agent = OpenAiAgent(model="text-davinci-003", api_key="<your_api_key>")

# Log in to the Hugging Face Hub with your access token
login("<YOUR_TOKEN>")

# StarCoder
agent = HfAgent("https://api-inference.huggingface.co/models/bigcode/starcoder")

# OpenAssistant
agent = HfAgent(url_endpoint="https://api-inference.huggingface.co/models/OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5")
```

Step 2: Run the agent.

`agent.run` is a single-execution method that selects the tool for the task automatically, e.g., it selects the image generator tool to create an image.

`agent.chat` keeps the chat history. For example, here it knows we generated a picture earlier, and it can transform that image.
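The difference between `run` and `chat` comes down to whether variables survive between calls. The toy below illustrates that under loud assumptions: `ToyAgent`, `CANNED_CODE`, and `TOOLS` are hypothetical, the "LLM" is a lookup table of canned code, and the image tools are string stand-ins. It does not call the transformers library.

```python
# Hypothetical toy agent contrasting a stateless run() with a stateful chat().
# The "LLM" is a lookup table of canned code; the tools are string stand-ins
# for image models. Nothing here calls the transformers library.

CANNED_CODE = {
    "Draw rivers and lakes": 'image = image_generator(prompt="rivers and lakes")\nresult = image',
    "Add a rock to the picture": 'image = image_transformer(image=image, prompt="a rock")\nresult = image',
}

TOOLS = {
    "image_generator": lambda prompt: f"<image: {prompt}>",
    "image_transformer": lambda image, prompt: image.replace(">", f" + {prompt}>"),
}


class ToyAgent:
    def __init__(self):
        self.chat_state = {}  # variables remembered across chat() turns

    def _execute(self, instruction: str, state: dict):
        code = CANNED_CODE[instruction]  # stand-in for LLM code generation
        exec(code, {"__builtins__": {}, **TOOLS}, state)
        return state["result"]

    def run(self, instruction: str):
        # run(): a fresh namespace on every call, so earlier results are forgotten
        return self._execute(instruction, {})

    def chat(self, instruction: str):
        # chat(): reuses chat_state, so later turns can refer to earlier variables
        return self._execute(instruction, self.chat_state)


agent = ToyAgent()
print(agent.chat("Draw rivers and lakes"))      # <image: rivers and lakes>
print(agent.chat("Add a rock to the picture"))  # <image: rivers and lakes + a rock>
# ToyAgent().run("Add a rock to the picture") raises NameError: `image` is undefined
```

The second `chat` turn works only because `image` from the first turn is still in `chat_state`; the same instruction sent through `run` fails.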

Transformers Agent is still experimental. It is much smaller in scope and less flexible. The main focus of Transformers Agent right now is using Transformer models and executing Python code, whereas LangChain Agent does “almost” everything. Let me break it down to compare the different components of Transformers and LangChain Agents:

Tools

- 🤗Hugging Face Transformers Agent has an amazing list of tools, each powered by transformer models. These tools offer three significant advantages: 1) Even though Transformers Agent can only interact with a few tools currently, it has the potential to communicate with over 100,000 Hugging Face models. It possesses full multimodal capabilities, encompassing text, images, video, audio, and documents; 2) Since these models are purpose-built for specific tasks, using them can be more straightforward and yield more accurate results compared to relying solely on LLMs. For example, instead of designing prompts for the LLM to perform text classification, we can simply deploy BART, which is designed for text classification; 3) These tools unlock capabilities that LLMs alone can't accomplish. Take BLIP, for example, which enables us to generate captivating image captions, a task beyond the scope of text-only LLMs.

- 🦜🔗LangChain tools are all external APIs, such as Google Search and Python REPL. In fact, LangChain supports HuggingFace Tools via the `load_huggingface_tool` function. LangChain can potentially do a lot of the things Transformers Agent can do already. On the other hand, Transformers Agent can potentially incorporate all the LangChain tools as well.

- In both cases, each tool is just a Python file. You can find the files of 🤗Hugging Face Transformers Agent tools here and 🦜🔗LangChain tools here. As you can see, each Python file contains one class representing one tool.
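The "one class per tool" pattern is easy to picture. The sketch below is loosely modeled on the general shape of transformers' `Tool` and LangChain's `BaseTool` (a name, a description the agent reads, and a call method), but `BaseToolSketch` and the two tools are hypothetical stand-ins, not either library's actual classes.

```python
# Hypothetical "one class per tool" sketch. It mirrors the general shape of
# agent tools (name + description + call) but is not either library's base class.


class BaseToolSketch:
    name: str = ""
    description: str = ""  # the agent chooses tools by reading this text

    def __call__(self, *args, **kwargs):
        raise NotImplementedError


class WordCountTool(BaseToolSketch):
    name = "word_counter"
    description = "Counts the words in `text` and returns an integer."

    def __call__(self, text: str) -> int:
        return len(text.split())


class UppercaseTool(BaseToolSketch):
    name = "uppercaser"
    description = "Returns `text` converted to upper case."

    def __call__(self, text: str) -> str:
        return text.upper()


# A registry keyed by tool name, as an agent might assemble from its tool files
registry = {tool.name: tool for tool in (WordCountTool(), UppercaseTool())}

for name, tool in registry.items():
    print(f"{name}: {tool.description}")
print(registry["word_counter"]("one tool per class"))  # 4
```

Because each tool carries its own description, adding a tool means adding one class; the agent's prompt can be regenerated from the registry.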

Agent

- 🤗Hugging Face Transformers Agent uses this prompt template to determine which tool to use based on the tool's description. It asks the LLM to provide an explanation, and it includes a few few-shot learning examples in the prompt.

- 🦜🔗LangChain by default uses the ReAct framework to determine which tool to use based on the tool's description. The ReAct framework is described in this paper. It doesn't only act on a decision but also provides thoughts and reasoning, which is similar to the explanations Transformers Agent uses. In addition, 🦜🔗LangChain has four agent types.
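A few-shot, description-driven prompt can be assembled mechanically. The sketch below is in the spirit of that template but invents its own wording: `TOOL_DESCRIPTIONS`, `FEW_SHOT_EXAMPLES`, and `build_prompt` are hypothetical, and the text is not the library's actual template.

```python
# Hypothetical few-shot prompt builder. The wording is invented for
# illustration and is not the Transformers Agent template itself.

TOOL_DESCRIPTIONS = {
    "image_captioner": "Generates a caption describing `image`.",
    "text_classifier": "Classifies `text` into one of `labels`.",
}

FEW_SHOT_EXAMPLES = [
    {
        "task": 'Is the review "Great phone!" positive or negative?',
        "explanation": "I will use `text_classifier` to classify the review.",
        "code": 'answer = text_classifier(text="Great phone!", labels=["positive", "negative"])',
    },
]


def build_prompt(task: str) -> str:
    lines = ["Perform the task. First explain which tools you use, then write Python code.", "", "Tools:"]
    # Tool descriptions are what the LLM uses to decide which tool fits the task
    lines += [f"- {name}: {desc}" for name, desc in TOOL_DESCRIPTIONS.items()]
    # Few-shot examples show the expected explanation + code format
    for ex in FEW_SHOT_EXAMPLES:
        lines += ["", f"Task: {ex['task']}", f"Explanation: {ex['explanation']}", f"Code:\n{ex['code']}"]
    # The new task ends with an open "Explanation:" for the LLM to complete
    lines += ["", f"Task: {task}", "Explanation:"]
    return "\n".join(lines)


print(build_prompt("Caption the image `photo`."))
```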
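The ReAct pattern alternates Thought, Action, and Observation until the model emits a final answer. Below is a minimal sketch of that loop under stated assumptions: `fake_llm` is a scripted stand-in for a real model, `calculator` is a toy tool, and the regex parser only approximates the kind of text format ReAct agents use; it is not LangChain's own code.

```python
# Minimal ReAct-style loop: Thought -> Action -> Observation -> Final Answer.
# Assumptions: fake_llm is a scripted stand-in for a real model, and the
# text format and parser are illustrative, not LangChain's implementation.
import re


def calculator(expression: str) -> str:
    """Toy tool that only understands 'a + b'."""
    a, b = expression.split("+")
    return str(int(a) + int(b))


TOOLS = {"calculator": calculator}


def fake_llm(transcript: str) -> str:
    # Once an observation is available, "reason" to a final answer
    observations = re.findall(r"Observation: (.*)", transcript)
    if observations:
        return f"Thought: I now know the result.\nFinal Answer: {observations[-1]}"
    return "Thought: I should add the numbers.\nAction: calculator\nAction Input: 2 + 3"


def react(question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = fake_llm(transcript)
        transcript += reply + "\n"
        final = re.search(r"Final Answer:\s*(.*)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\s*\nAction Input:\s*(.*)", reply)
        if action is None:
            raise ValueError(f"could not parse reply: {reply!r}")
        # Act: call the chosen tool, then feed the observation back to the LLM
        observation = TOOLS[action.group(1)](action.group(2).strip())
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


print(react("What is 2 + 3?"))  # 5
```

The "Thought:" lines play the same role as the explanations in the Transformers Agent prompt: the model states its reasoning before committing to a tool.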

Custom Agent

Creating a custom agent is not too difficult in either case:

- See the HuggingFace Transformers Agent example towards the end of this colab.

- See the LangChain example here.

“Code-execution”

- 🤗Hugging Face Transformers Agent includes “code-execution” as one of the steps after the LLM selects the tools and generates the code. This restricts the Transformers Agent's goal to executing Python code.

- 🦜🔗LangChain includes “code-execution” as one of its tools, which means that executing code is not the last step of the whole process. This provides a lot more flexibility in what the task goal is: it could be executing Python code, or it could be something else like doing a Google Search and returning search results.
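What makes a Python interpreter "restricted"? One plausible approach, sketched below as an assumption rather than how either library works, is to inspect the generated code's AST before running it and reject anything other than assignments and calls to registered tools. `run_restricted` and `ALLOWED_NODES` are hypothetical names, and this is an illustration, not a security sandbox.

```python
# Hypothetical restricted interpreter: validate LLM-generated code's AST,
# allowing only simple assignments and calls to whitelisted tools, then run it.
# Illustrative only; not either library's implementation and not a sandbox.
import ast

ALLOWED_NODES = (ast.Module, ast.Assign, ast.Expr, ast.Call, ast.Name,
                 ast.Load, ast.Store, ast.Constant, ast.keyword)


def run_restricted(code: str, tools: dict) -> dict:
    tree = ast.parse(code)
    for node in ast.walk(tree):
        # Reject imports, attribute access, loops, etc.
        if not isinstance(node, ALLOWED_NODES):
            raise ValueError(f"disallowed syntax: {type(node).__name__}")
        # Only direct calls to registered tool names are allowed
        if isinstance(node, ast.Call) and not (
            isinstance(node.func, ast.Name) and node.func.id in tools
        ):
            raise ValueError("only calls to registered tools are allowed")
    state: dict = {}
    exec(compile(tree, "<agent>", "exec"), {"__builtins__": {}, **tools}, state)
    return state  # the variables the generated code assigned


tools = {"shout": lambda text: text.upper() + "!"}
print(run_restricted('reply = shout(text="hello")', tools))  # {'reply': 'HELLO!'}
```

In the Transformers Agent design this kind of step is the end of the pipeline; in a LangChain-style design the same function could instead be registered as one tool among many, so the agent may or may not call it.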

In this blog post, we explored the functionality of 🤗Hugging Face Transformers Agents and compared it to 🦜🔗LangChain Agents. I look forward to witnessing further developments and advancements in Transformers Agent.

. . .

By Sophia Yang on May 12, 2023

Sophia Yang is a Senior Data Scientist. Connect with me on LinkedIn, Twitter, and YouTube and join the DS/ML Book Club ❤️