Stanford and Mila Researchers Propose Hyena: An Attention-Free Drop-in Replacement for the Core Building Block of Many Large-Scale Language Models

As we all know, the race to develop mind-blowing generative models such as ChatGPT and Bard, along with their underlying technology such as GPT3 and GPT4, has taken the AI world by storm. Yet many challenges remain when it comes to the accessibility, training, and practical feasibility of these models in the use cases that matter to our daily problems.

Anyone who has played around with such sequence models has run into one sure-shot problem that may have ruined their excitement: the limit on the length of input they can send in to prompt the model.

For enthusiasts who want to dabble in the core of such technologies and train their own custom model, the whole optimization process makes it a nearly impossible task.

At the heart of these problems lies the quadratic cost of the attention mechanism that sequence models rely on. One of the biggest obstacles is the computational cost of such algorithms and the resources needed to address it. It can be an extremely expensive solution, especially for anyone who wants to scale it up, which leaves only a few concentrated organizations with a clear understanding and real control of such algorithms.

Simply put, attention exhibits quadratic cost in sequence length, which limits the amount of context accessible and makes scaling it a costly affair.
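To make the scaling concrete, here is a rough back-of-the-envelope sketch. The function names are hypothetical, and the counts are order-of-magnitude proxies rather than measured FLOPs: attention is represented by the number of entries in its score matrix, and an `n log n` curve is shown only as a reference point for what "subquadratic" growth looks like.

```python
import math


def attention_score_entries(seq_len):
    """Entries in the (seq_len x seq_len) attention score matrix."""
    return seq_len * seq_len


def n_log_n_steps(seq_len):
    """Reference subquadratic growth curve: n * log2(n)."""
    return seq_len * math.log2(seq_len)


# Compare how the two quantities grow as the sequence gets longer.
for n in (1024, 8192, 65536):
    ratio = attention_score_entries(n) / n_log_n_steps(n)
    print(f"n={n:6d}  n^2={attention_score_entries(n):12d}  "
          f"n*log2(n)={n_log_n_steps(n):10.0f}  ratio={ratio:7.1f}")
```

Doubling the sequence length quadruples the quadratic term, while the `n log n` term only slightly more than doubles, so the gap widens as contexts get longer.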

However, worry not; there is a new architecture called Hyena, which is now making waves in the NLP community, and people are hailing it as the savior we all need. It challenges the dominance of the existing attention mechanisms, and the research paper demonstrates its potential to topple the existing system.

Developed by a team of researchers from Stanford and Mila, Hyena is a subquadratic operator that boasts impressive performance on a range of NLP tasks. In this article, we will look closely at Hyena's claims.

The paper suggests that subquadratic operators can match the quality of attention models at scale without being as costly in terms of parameters and optimization. Based on targeted reasoning tasks, the authors distill the three most important properties contributing to its performance.

- Data control

- Sublinear parameter scaling

- Unrestricted context

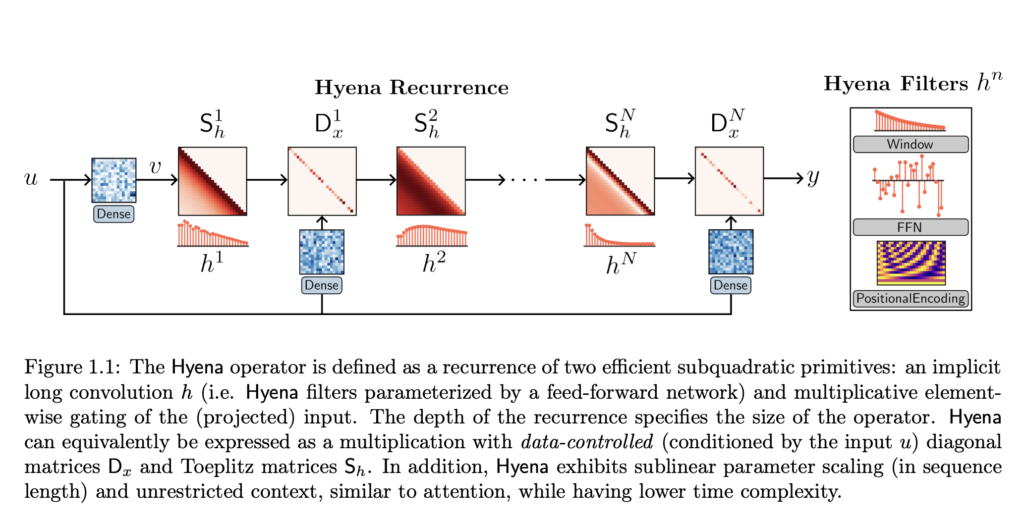

With these points in mind, they then introduce the Hyena hierarchy. This new operator combines long convolutions and element-wise multiplicative gating to match the quality of attention at scale while reducing the computational cost.
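The idea of alternating long convolutions with element-wise gating can be illustrated with a small pure-Python sketch. This is a simplified toy under stated assumptions, not the authors' implementation: the function names, the explicit exponentially decaying filters, and the constant gates are all hypothetical stand-ins (in a real model the gates would be learned projections of the input and the filters would be learned as well). The convolution here is computed directly in quadratic time for readability; a subquadratic version would evaluate the same convolution with an FFT.

```python
import math


def causal_conv(filt, signal):
    """Causal convolution: out[t] = sum over s <= t of filt[t - s] * signal[s]."""
    n = len(signal)
    return [sum(filt[t - s] * signal[s] for s in range(t + 1)) for t in range(n)]


def gated_long_conv(v, gates, filters):
    """Alternate a sequence-length convolution with element-wise gating.

    Each step convolves the running sequence with a filter as long as the
    sequence itself, then multiplies the result position-by-position by a gate.
    """
    z = list(v)
    for gate, filt in zip(gates, filters):
        conv = causal_conv(filt, z)
        z = [g * c for g, c in zip(gate, conv)]
    return z


# Toy inputs; every value below is made up for illustration.
L = 8
v = [float(t + 1) for t in range(L)]
gates = [[1.0] * L, [0.5] * L]                       # stand-ins for input projections
filters = [[math.exp(-0.5 * t) for t in range(L)]    # stand-ins for learned filters
           for _ in gates]

y = gated_long_conv(v, gates, filters)
print(y)
```

Because each filter spans the whole sequence, every output position can depend on all earlier positions, which is how such an operator can cover a long context without forming a pairwise score matrix.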

The experiments conducted reveal striking results.

- Language modeling.

Hyena's scaling was tested on autoregressive language modeling. Evaluated by perplexity on the benchmark datasets WikiText103 and The Pile, Hyena is the first attention-free convolutional architecture to match GPT quality, with a 20% reduction in total FLOPs.

Perplexity on WikiText103 (same tokenizer). ∗ are results from (Dao et al., 2022c). Deeper and thinner models (Hyena-slim) achieve lower perplexity.

Perplexity on The Pile for models trained until a total number of tokens, e.g., 5 billion (different runs for each token total). All models use the same tokenizer (GPT2). FLOP count is for the 15 billion token run.
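For readers unfamiliar with the metric used in these comparisons, perplexity is the exponential of the average per-token negative log-likelihood, so lower is better. The snippet below is a minimal sketch of that definition; the function name and the toy probabilities are illustrative and are not taken from the paper.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(mean negative natural-log probability per token)."""
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 1/4 to every correct token is as
# uncertain as a uniform choice among 4 options.
uniform_guess = [math.log(0.25)] * 10
print(perplexity(uniform_guess))  # approximately 4
```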

- Large-scale image classification

The paper demonstrates the potential of Hyena as a general deep-learning operator for image classification. The authors drop-in replace the attention layers in the Vision Transformer (ViT) with the Hyena operator and match the performance of ViT.

On CIFAR-2D, the authors test a 2D version of Hyena long convolution filters in a standard convolutional architecture, which improves on the 2D long convolutional model S4ND (Nguyen et al., 2022) in accuracy with an 8% speedup and 25% fewer parameters.

The promising results at the sub-billion parameter scale suggest that attention may not be all we need, and that simpler subquadratic designs such as Hyena, informed by simple guiding principles and evaluation on mechanistic interpretability benchmarks, may form the basis for efficient large models.

With the waves this architecture is creating in the community, it will be interesting to see whether Hyena has the last laugh.
