Securing MLflow in AWS: Fine-grained access control with AWS native services

With Amazon SageMaker, you can manage the whole end-to-end machine learning (ML) lifecycle. It offers many native capabilities to help manage aspects of ML workflows, such as experiment tracking, and model governance via the model registry. This post provides a solution tailored to customers that are already using MLflow, an open-source platform for managing ML workflows.

In a previous post, we discussed MLflow and how it can run on AWS and be integrated with SageMaker, in particular when tracking training jobs as experiments and deploying a model registered in MLflow to the SageMaker managed infrastructure. However, the open-source version of MLflow doesn’t provide native user access control mechanisms for multiple tenants on the tracking server. This means any user with access to the server has admin rights and can modify experiments, model versions, and stages. This can be a challenge for enterprises in regulated industries that need to keep strong model governance for audit purposes.

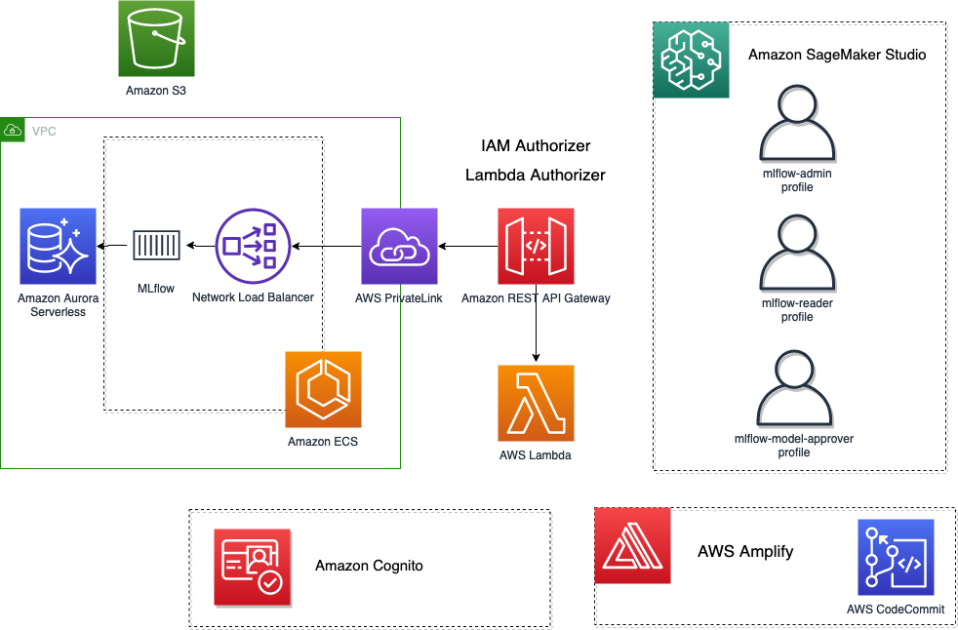

In this post, we address these limitations by implementing the access control outside of the MLflow server and offloading authentication and authorization tasks to Amazon API Gateway, where we implement fine-grained access control mechanisms at the resource level using AWS Identity and Access Management (IAM). By doing so, we can achieve robust and secure access to the MLflow server from both SageMaker managed infrastructure and Amazon SageMaker Studio, without having to worry about credentials and all the complexity behind credential management. The modular design proposed in this architecture makes modifying access control logic straightforward without impacting the MLflow server itself. Lastly, thanks to SageMaker Studio extensibility, we further improve the data scientist experience by making MLflow accessible within Studio, as shown in the following screenshot.

MLflow has integrated the feature that enables request signing using AWS credentials into the upstream repository for its Python SDK, improving the integration with SageMaker. The changes to the MLflow Python SDK are available for everyone since MLflow version 1.30.0.

At a high level, this post demonstrates the following:

- How to deploy an MLflow server on a serverless architecture running on a private subnet not accessible directly from the outside. For this task, we build on top of the following GitHub repo: Manage your machine learning lifecycle with MLflow and Amazon SageMaker.

- How to expose the MLflow server via private integrations to an API Gateway, and implement secure access control for programmatic access via the SDK and browser access via the MLflow UI.

- How to log experiments and runs, and register models to an MLflow server from SageMaker using the associated SageMaker execution roles to authenticate and authorize requests, and how to authenticate via Amazon Cognito to the MLflow UI. We provide examples demonstrating experiment tracking and using the model registry with MLflow from SageMaker training jobs and Studio, respectively, in the provided notebook.

- How to use MLflow as a centralized repository in a multi-account setup.

- How to extend Studio to enhance the user experience by rendering MLflow within Studio. For this task, we show how to take advantage of Studio extensibility by installing a JupyterLab extension.

Now let’s dive deeper into the details.

Solution overview

You can think about MLflow as three different core components working side by side:

- A REST API for the backend MLflow tracking server

- SDKs for you to programmatically interact with the MLflow tracking server APIs from your model training code

- A React front end for the MLflow UI to visualize your experiments, runs, and artifacts

At a high level, the architecture we’ve envisioned and implemented is shown in the following figure.

Prerequisites

Before deploying the solution, make sure you have access to an AWS account with admin permissions.

Deploy the solution infrastructure

To deploy the solution described in this post, follow the detailed instructions in the GitHub repository README. To automate the infrastructure deployment, we use the AWS Cloud Development Kit (AWS CDK). The AWS CDK is an open-source software development framework to create AWS CloudFormation stacks through automatic CloudFormation template generation. A stack is a collection of AWS resources that can be programmatically updated, moved, or deleted. AWS CDK constructs are the building blocks of AWS CDK applications, representing the blueprint to define cloud architectures.

We combine four stacks:

- The MLFlowVPCStack stack performs the following actions:

- The RestApiGatewayStack stack performs the following actions:

  - Exposes the MLflow server via AWS PrivateLink to a REST API Gateway.

  - Deploys an Amazon Cognito user pool to manage the users accessing the UI (still empty after the deployment).

  - Deploys an AWS Lambda authorizer to verify the JWT token against the keys of the Amazon Cognito user pool and return IAM policies to allow or deny a request. This authorization strategy is applied to <MLFlow-Tracking-Server-URI>/*.

  - Adds an IAM authorizer. This is applied to <MLFlow-Tracking-Server-URI>/api/*, which takes precedence over the previous one.

- The AmplifyMLFlowStack stack performs the following action:

  - Creates an app linked to the patched MLflow repository in AWS CodeCommit to build and deploy the MLflow UI.

- The SageMakerStudioUserStack stack performs the following actions:

  - Deploys a Studio domain (if one doesn’t exist yet).

  - Adds three users, each one with a different SageMaker execution role implementing a different access level:

    - mlflow-admin – Has admin-like permission to any MLflow resources.

    - mlflow-reader – Has read-only permissions to any MLflow resources.

    - mlflow-model-approver – Has the same permissions as mlflow-reader, plus can register new models from existing runs in MLflow and promote existing registered models to new stages.

Deploy the MLflow tracking server on a serverless architecture

Our aim is to have a reliable, highly available, cost-effective, and secure deployment of the MLflow tracking server. Serverless technologies are the perfect candidate to satisfy all these requirements with minimal operational overhead. To achieve that, we build a Docker container image for the MLflow experiment tracking server, and we run it on AWS Fargate on Amazon ECS in its dedicated VPC running on a private subnet. MLflow relies on two storage components: the backend store and the artifact store. For the backend store, we use Aurora Serverless, and for the artifact store, we use Amazon S3. For the high-level architecture, refer to Scenario 4: MLflow with remote Tracking Server, backend and artifact stores. Extensive details on how to do this task can be found in the following GitHub repo: Manage your machine learning lifecycle with MLflow and Amazon SageMaker.
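To make the two storage components concrete, the following is a minimal sketch of how a container entrypoint could assemble the mlflow server command. It is not taken from the sample repository: the helper name, the MySQL-compatible connection string (mysql+pymysql), the database port, and all example values are assumptions for illustration. Only the mlflow server flags themselves are standard MLflow CLI options.

```python
from urllib.parse import quote_plus


def build_mlflow_server_command(db_user, db_password, db_host, db_name,
                                artifact_bucket, port=5000):
    """Return the argument list for launching `mlflow server` (hypothetical helper)."""
    # Assumption: the Aurora Serverless cluster is MySQL-compatible and reached
    # through the PyMySQL driver on the default MySQL port.
    backend_store_uri = (
        f"mysql+pymysql://{quote_plus(db_user)}:{quote_plus(db_password)}"
        f"@{db_host}:3306/{db_name}"
    )
    return [
        "mlflow", "server",
        "--host", "0.0.0.0",                       # listen on all container interfaces
        "--port", str(port),
        "--backend-store-uri", backend_store_uri,  # experiments, runs, registry metadata
        "--default-artifact-root", f"s3://{artifact_bucket}/",  # model files and other artifacts
    ]


if __name__ == "__main__":
    # Placeholder values only; none of these names come from the sample repository.
    print(" ".join(build_mlflow_server_command(
        "mlflow", "p@ss/word", "db.example.internal", "mlflowdb",
        "example-artifact-bucket")))
```

Special characters in the database password are URL-encoded so that they can't break the connection string. In a real deployment, the credentials would typically be injected from a secrets store rather than written as literals.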

Secure MLflow via API Gateway

At this point, we still don’t have an access control mechanism in place. As a first step, we expose MLflow to the outside world using AWS PrivateLink, which establishes a private connection between the VPC and other AWS services, in our case API Gateway. Incoming requests to MLflow are then proxied via a REST API Gateway, giving us the possibility to implement several mechanisms to authorize incoming requests. For our purposes, we focus on only two:

- Using IAM authorizers – With IAM authorizers, the requester must have the right IAM policy assigned to access the API Gateway resources. Every request sent via HTTP must carry authentication information in the form of an AWS Signature Version 4 signature.

- Using Lambda authorizers – This offers the greatest flexibility because it leaves full control over how a request can be authorized. Eventually, the Lambda authorizer must return an IAM policy, which in turn is evaluated by API Gateway to decide whether the request should be allowed or denied.

For the full list of supported authentication and authorization mechanisms in API Gateway, refer to Controlling and managing access to a REST API in API Gateway.

MLflow Python SDK authentication (IAM authorizer)

The MLflow experiment tracking server implements a REST API to interact in a programmatic way with the resources and artifacts. The MLflow Python SDK provides a convenient way to log metrics, runs, and artifacts, and it interfaces with the API resources hosted under the namespace <MLflow-Tracking-Server-URI>/api/. We configure API Gateway to use the IAM authorizer for resource access control on this namespace, thereby requiring every request to be signed with AWS Signature Version 4.

Starting from MLflow 1.30.0, request signing can be enabled without code changes. Make sure that the requests_auth_aws_sigv4 library is installed in the system and set the MLFLOW_TRACKING_AWS_SIGV4 environment variable to True. More information can be found in the official MLflow documentation.

At this point, the MLflow SDK only needs AWS credentials. Because requests_auth_aws_sigv4 uses Boto3 to retrieve credentials, we know that it can load credentials from the instance metadata when an IAM role is associated with an Amazon Elastic Compute Cloud (Amazon EC2) instance (for other ways to supply credentials to Boto3, see Credentials). This means that it can also load AWS credentials when running from a SageMaker managed instance from the associated execution role, as discussed later in this post.
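The setup described above can be sketched in a few lines. The helper names, the example URI, and the Region below are hypothetical. The only names taken from MLflow and its signing plugin are the MLFLOW_TRACKING_URI and MLFLOW_TRACKING_AWS_SIGV4 environment variables and the requests_auth_aws_sigv4 module.

```python
import importlib.util
import os


def enable_mlflow_sigv4(tracking_uri, region):
    """Set the environment variables that switch on SigV4 signing (hypothetical helper)."""
    settings = {
        "MLFLOW_TRACKING_URI": tracking_uri,
        "MLFLOW_TRACKING_AWS_SIGV4": "True",
        # Assumption: the Region is provided through the standard Boto3 variable.
        "AWS_DEFAULT_REGION": region,
    }
    os.environ.update(settings)
    return settings


def sigv4_plugin_installed():
    """Return True if the requests_auth_aws_sigv4 module can be imported."""
    return importlib.util.find_spec("requests_auth_aws_sigv4") is not None


if __name__ == "__main__":
    # Placeholder API Gateway URL; replace with your own tracking server URI.
    enable_mlflow_sigv4(
        "https://example.execute-api.eu-west-1.amazonaws.com/prod", "eu-west-1")
    if not sigv4_plugin_installed():
        print("requests_auth_aws_sigv4 is missing; requests would not be signed")
```

Checking for the plugin up front gives a clearer message than a failed request later on.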

Configure IAM policies to access MLflow APIs via API Gateway

You can use IAM roles and policies to control who can invoke resources on API Gateway. For more details and IAM policy reference statements, refer to Control access for invoking an API.
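Resources in these policies are identified by execute-api ARNs of the form arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<API_ID>/<STAGE>/<METHOD>/<PATH>. As a minimal sketch, the following helpers build such ARNs and wrap them in a policy document. The function names and the example account, API ID, and stage are hypothetical and not part of the sample repository.

```python
import json


def invoke_arn(region, account_id, api_id, stage, method="*", path="*"):
    """Build the execute-api ARN for one HTTP method and resource path."""
    return (f"arn:aws:execute-api:{region}:{account_id}:{api_id}"
            f"/{stage}/{method}/{path.lstrip('/')}")


def invoke_policy(resources, effect="Allow"):
    """Wrap a list of execute-api ARNs in an IAM policy document."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": effect,
                "Action": "execute-api:Invoke",
                "Resource": list(resources),
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder identifiers for illustration only.
    common = ("eu-west-1", "123456789012", "abc123", "prod")
    policy = invoke_policy([
        invoke_arn(*common, method="GET"),  # every GET request
        invoke_arn(*common, method="POST", path="/api/2.0/mlflow/experiments/search"),
    ])
    print(json.dumps(policy, indent=2))
```

Generating the documents from code keeps the Region, account, API ID, and stage in one place when several roles need slightly different permissions.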

The following code shows an example IAM policy that grants the caller permissions to all methods on all resources on the API Gateway shielding MLflow, practically giving admin access to the MLflow server:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "execute-api:Invoke",
          "Resource": "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/*/*",
          "Effect": "Allow"
        }
      ]
    }

If we want a policy that allows a user read-only access to all resources, the IAM policy would look like the following code:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "execute-api:Invoke",
          "Resource": [
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/GET/*",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/runs/search/",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/experiments/search"
          ],
          "Effect": "Allow"
        }
      ]
    }

Another example might be a policy to give specific users permissions to register models to the model registry and promote them later to specific stages (staging, production, and so on):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "execute-api:Invoke",
          "Resource": [
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/GET/*",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/runs/search/",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/experiments/search",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/model-versions/*",
            "arn:aws:execute-api:<REGION>:<ACCOUNT_ID>:<MLFLOW_API_ID>/<STAGE>/POST/api/2.0/mlflow/registered-models/*"
          ],
          "Effect": "Allow"
        }
      ]
    }

MLflow UI authentication (Lambda authorizer)

Browser access to the MLflow server is handled by the MLflow UI implemented with React. The MLflow UI hasn’t been designed to support authenticated users. Implementing a robust login flow might seem a daunting task, but luckily we can rely on the Amplify UI React components for authentication, which greatly reduces the effort to create a login flow in a React application, using Amazon Cognito for the identity store.

Amazon Cognito allows us to manage our own user base and also support third-party identity federation, making it feasible to build, for example, ADFS federation (see Building ADFS Federation for your Web App using Amazon Cognito User Pools for more details). Tokens issued by Amazon Cognito must be verified on API Gateway. Simply verifying the token is not enough for fine-grained access control, so the Lambda authorizer gives us the flexibility to implement the logic we need. We can then build our own Lambda authorizer to verify the JWT token and generate the IAM policies to let the API Gateway deny or allow the request. The following diagram illustrates the MLflow login flow.

For more information about the actual code changes, refer to the patch file cognito.patch, applicable to MLflow version 2.3.1.

This patch introduces two capabilities:

- Add the Amplify UI components and configure the Amazon Cognito details via environment variables that implement the login flow

- Extract the JWT from the session and send it in an Authorization header as a bearer token

Although maintaining diverging code from the upstream always adds more complexity than relying on the upstream, it’s worth noting that the changes are minimal because we rely on the Amplify React UI components.

With the new login flow in place, let’s create the production build for our updated MLflow UI. AWS Amplify Hosting is an AWS service that provides a git-based workflow for CI/CD and hosting of web apps. The build step in the pipeline is defined by the buildspec.yaml, where we can inject as environment variables details about the Amazon Cognito user pool ID, the Amazon Cognito identity pool ID, and the user pool client ID needed by the Amplify UI React component to configure the authentication flow. The following code is an example of the buildspec.yaml file:

    version: "1.0"
    applications:
      - frontend:
          phases:
            preBuild:
              commands:
                - fallocate -l 4G /swapfile
                - chmod 600 /swapfile
                - mkswap /swapfile
                - swapon /swapfile
                - swapon -s
                - yarn install
            build:
              commands:
                - echo "REACT_APP_REGION=$REACT_APP_REGION" >> .env
                - echo "REACT_APP_COGNITO_USER_POOL_ID=$REACT_APP_COGNITO_USER_POOL_ID" >> .env
                - echo "REACT_APP_COGNITO_IDENTITY_POOL_ID=$REACT_APP_COGNITO_IDENTITY_POOL_ID" >> .env
                - echo "REACT_APP_COGNITO_USER_POOL_CLIENT_ID=$REACT_APP_COGNITO_USER_POOL_CLIENT_ID" >> .env
                - yarn run build
          artifacts:
            baseDirectory: build
            files:
              - "**/*"

Securely log experiments and runs using the SageMaker execution role

One of the key aspects of the solution discussed here is the secure integration with SageMaker. SageMaker is a managed service, and as such, it performs operations on your behalf. What SageMaker is allowed to do is defined by the IAM policies attached to the execution role that you associate to a SageMaker training job, or that you associate to a user profile working from Studio. For more information on the SageMaker execution role, refer to SageMaker Roles.

By configuring the API Gateway to use IAM authentication on the <MLFlow-Tracking-Server-URI>/api/* resources, we can define a set of IAM policies on the SageMaker execution role that will allow SageMaker to interact with MLflow according to the access level specified.

When setting the MLFLOW_TRACKING_AWS_SIGV4 environment variable to True while working in Studio or in a SageMaker training job, the MLflow Python SDK will automatically sign all requests, which will be validated by the API Gateway:

    import os
    import mlflow

    # tracking_uri and experiment_name are defined earlier in the notebook or script
    os.environ['MLFLOW_TRACKING_AWS_SIGV4'] = "True"
    mlflow.set_tracking_uri(tracking_uri)
    mlflow.set_experiment(experiment_name)

Test the SageMaker execution role with the MLflow SDK

If you access the Studio domain that was generated, you will find three users:

- mlflow-admin – Associated to an execution role with similar permissions as the user in the Amazon Cognito group admins

- mlflow-reader – Associated to an execution role with similar permissions as the user in the Amazon Cognito group readers

- mlflow-model-approver – Associated to an execution role with similar permissions as the user in the Amazon Cognito group model-approvers

To test the three different roles, refer to the labs provided as part of this sample on each user profile.

The following diagram illustrates the workflow for Studio user profiles and SageMaker job authentication with MLflow.

Similarly, when running SageMaker jobs on the SageMaker managed infrastructure, if you set the environment variable MLFLOW_TRACKING_AWS_SIGV4 to True, and the SageMaker execution role passed to the jobs has the correct IAM policy to access the API Gateway, you can securely interact with your MLflow tracking server without needing to manage the credentials yourself. When running SageMaker training jobs and initializing an estimator class, you can pass environment variables that SageMaker will inject and make available to the training script, as shown in the following code:

    from sagemaker.sklearn.estimator import SKLearn

    # Environment variables that SageMaker injects into the training container
    environment = {
        "AWS_DEFAULT_REGION": region,
        "MLFLOW_EXPERIMENT_NAME": experiment_name,
        "MLFLOW_TRACKING_URI": tracking_uri,
        "MLFLOW_AMPLIFY_UI_URI": mlflow_amplify_ui,
        "MLFLOW_TRACKING_AWS_SIGV4": "true",
        "MLFLOW_USER": user
    }

    estimator = SKLearn(
        entry_point="train.py",
        source_dir="source_dir",
        role=role,
        metric_definitions=metric_definitions,
        hyperparameters=hyperparameters,
        instance_count=1,
        instance_type="ml.m5.large",
        framework_version='1.0-1',
        base_job_name="mlflow",
        environment=environment
    )

Visualize runs and experiments from the MLflow UI

After the first deployment is complete, let’s populate the Amazon Cognito user pool with three users, each belonging to a different group, to test the permissions we’ve implemented. You can use this script add_users_and_groups.py to seed the user pool. After running the script, if you check the Amazon Cognito user pool on the Amazon Cognito console, you should see the three users created.

On the REST API Gateway side, the Lambda authorizer will first verify the signature of the token using the Amazon Cognito user pool key and verify the claims. Only after that will it extract the Amazon Cognito group the user belongs to from the claim in the JWT token (cognito:groups) and apply different permissions based on the group that we have programmed.
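To illustrate where the group information lives, the following sketch decodes the payload segment of a JWT and reads the cognito:groups claim. It is an illustration only and is not the authorizer from the sample repository: the function names are hypothetical, and the decoding step deliberately performs no signature or claim verification, which a real authorizer must do first against the user pool keys.

```python
import base64
import json


def unverified_claims(token):
    """Decode the payload of a JWT WITHOUT verifying its signature.

    For illustration only: call this only on a token whose signature and
    claims have already been verified.
    """
    _header, payload, _signature = token.split(".")
    # JWT segments are base64url-encoded with the padding stripped; restore it.
    padded = payload + "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def groups_from_claims(claims):
    """Return the Amazon Cognito groups listed in the token, or an empty list."""
    return list(claims.get("cognito:groups", []))
```

A token without the cognito:groups claim yields an empty list, which lets the caller treat a user with no group as having no permissions.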

For our specific case, we have three groups:

- admins – Can see and can edit everything

- readers – Can only see everything

- model-approvers – The same as readers, plus can register models, create versions, and promote model versions to the next stage

Depending on the group, the Lambda authorizer will generate different IAM policies. This is just an example of how authorization can be achieved; with a Lambda authorizer, you can implement any logic you need. We have opted to build the IAM policy at run time in the Lambda function itself; however, you can pregenerate appropriate IAM policies, store them in Amazon DynamoDB, and retrieve them at run time according to your own business logic. Keep in mind that if you want to restrict only a subset of actions, you need to be aware of the MLflow REST API definition.
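As a rough sketch of this idea, the following code maps groups to allowed method and path pairs and builds the response structure (principalId plus policyDocument) that API Gateway expects from a Lambda authorizer. The rule table and function names are hypothetical and simply mirror the example IAM policies shown earlier in this post. The actual authorizer in the GitHub repo may be organized differently.

```python
# Hypothetical rule table: group name -> (HTTP method, resource path) pairs to allow.
GROUP_RULES = {
    "admins": [("*", "*")],
    "readers": [
        ("GET", "*"),
        ("POST", "api/2.0/mlflow/runs/search/"),
        ("POST", "api/2.0/mlflow/experiments/search"),
    ],
}
GROUP_RULES["model-approvers"] = GROUP_RULES["readers"] + [
    ("POST", "api/2.0/mlflow/model-versions/*"),
    ("POST", "api/2.0/mlflow/registered-models/*"),
]


def api_prefix(method_arn):
    """Return 'arn:...:<api-id>/<stage>' from the methodArn of the incoming event."""
    api, stage = method_arn.split("/", 2)[:2]
    return f"{api}/{stage}"


def authorizer_response(principal_id, groups, prefix):
    """Build the authorizer output for a user belonging to the given groups."""
    allowed = sorted({
        f"{prefix}/{method}/{path}"
        for group in groups
        for method, path in GROUP_RULES.get(group, [])
    })
    if allowed:
        statement = {"Action": "execute-api:Invoke", "Effect": "Allow",
                     "Resource": allowed}
    else:
        # Unknown or missing groups get an explicit deny on every method and path.
        statement = {"Action": "execute-api:Invoke", "Effect": "Deny",
                     "Resource": [f"{prefix}/*/*"]}
    return {
        "principalId": principal_id,
        "policyDocument": {"Version": "2012-10-17", "Statement": [statement]},
    }
```

Keeping the rules in a plain table is one way to make the access logic easy to review; the same table could equally be loaded from a data store at run time.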

You can explore the code for the Lambda authorizer on the GitHub repo.

Multi-account considerations

Data science workflows have to pass multiple stages as they progress from experimentation to production. A common approach involves separate accounts dedicated to different phases of the AI/ML workflow (experimentation, development, and production). However, sometimes it’s desirable to have a dedicated account that acts as a central repository for models. Although our architecture and sample refer to a single account, it can be easily extended to implement this last scenario, thanks to the IAM capability to switch roles even across accounts.

The following diagram illustrates an architecture using MLflow as a central repository in an isolated AWS account.

For this use case, we have two accounts: one for the MLflow server, and one for the experimentation accessible by the data science team. To enable cross-account access from a SageMaker training job running in the data science account, we need the following elements:

- A SageMaker execution role in the data science AWS account with an IAM policy attached that allows assuming a different role in the MLflow account:

      {
        "Version": "2012-10-17",
        "Statement": {
          "Effect": "Allow",
          "Action": "sts:AssumeRole",
          "Resource": "<ARN-ROLE-IN-MLFLOW-ACCOUNT>"
        }
      }

- An IAM role in the MLflow account with the right IAM policy attached that grants access to the MLflow tracking server, and allows the SageMaker execution role in the data science account to assume it:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Effect": "Allow",
            "Principal": {
              "AWS": "<ARN-SAGEMAKER-EXECUTION-ROLE-IN-DATASCIENCE-ACCOUNT>"
            },
            "Action": "sts:AssumeRole"
          }
        ]
      }

Within the training script running in the data science account, you can use this example before initializing the MLflow client. You need to assume the role in the MLflow account and store the temporary credentials as environment variables, because this new set of credentials will be picked up by a new Boto3 session initialized within the MLflow client.

    import os

    import boto3
    import mlflow

    # Session using the SageMaker execution role in the data science account
    session = boto3.Session()
    sts = session.client("sts")

    response = sts.assume_role(
        RoleArn="<ARN-ROLE-IN-MLFLOW-ACCOUNT>",
        RoleSessionName="AssumedMLflowAdmin"
    )

    credentials = response['Credentials']
    os.environ['AWS_ACCESS_KEY_ID'] = credentials['AccessKeyId']
    os.environ['AWS_SECRET_ACCESS_KEY'] = credentials['SecretAccessKey']
    os.environ['AWS_SESSION_TOKEN'] = credentials['SessionToken']

    # Set the remote MLflow server and initialize a new Boto3 session in the context
    # of the assumed role
    mlflow.set_tracking_uri(tracking_uri)
    experiment = mlflow.set_experiment(experiment_name)

In this example, RoleArn is the ARN of the role you want to assume, and RoleSessionName is a name that you choose for the assumed session. The sts.assume_role method returns temporary security credentials that the MLflow client will use to create a new client for the assumed role. The MLflow client will then send signed requests to API Gateway in the context of the assumed role.
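Because the credentials returned by sts.assume_role are temporary (one hour by default unless DurationSeconds is set), a long-running training job may need to check how much time is left and assume the role again. The following sketch factors the credential handling into two small helpers. The function names are hypothetical, and the refresh strategy is left to the reader.

```python
import os
from datetime import datetime, timezone


def export_credentials(assume_role_response, environ=os.environ):
    """Copy the temporary credentials of an AssumeRole response into the environment."""
    creds = assume_role_response["Credentials"]
    environ["AWS_ACCESS_KEY_ID"] = creds["AccessKeyId"]
    environ["AWS_SECRET_ACCESS_KEY"] = creds["SecretAccessKey"]
    environ["AWS_SESSION_TOKEN"] = creds["SessionToken"]


def seconds_until_expiry(assume_role_response, now=None):
    """Return how many seconds remain before the temporary credentials expire."""
    # Boto3 returns Expiration as a timezone-aware datetime.
    expiration = assume_role_response["Credentials"]["Expiration"]
    now = now or datetime.now(timezone.utc)
    return (expiration - now).total_seconds()
```

For example, a training loop could call seconds_until_expiry periodically and repeat the assume_role call and export_credentials when the remaining time drops below a chosen threshold.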

Render MLflow within SageMaker Studio

SageMaker Studio is based on JupyterLab, and just as in JupyterLab, you can install extensions to boost your productivity. Thanks to this flexibility, data scientists working with MLflow and SageMaker can further improve their integration by accessing the MLflow UI from the Studio environment and immediately visualizing the experiments and runs logged. The following screenshot shows an example of MLflow rendered in Studio.

For information about installing JupyterLab extensions in Studio, refer to Amazon SageMaker Studio and SageMaker Notebook Instance now come with JupyterLab 3 notebooks to boost developer productivity. For details on adding automation via lifecycle configurations, refer to Customize Amazon SageMaker Studio using Lifecycle Configurations.

In the sample repository supporting this post, we provide instructions on how to install the jupyterlab-iframe extension. After the extension has been installed, you can access the MLflow UI without leaving Studio using the same set of credentials you have stored in the Amazon Cognito user pool.

Next steps

There are several options for expanding upon this work. One idea is to consolidate the identity store for both SageMaker Studio and the MLflow UI. Another option would be to use a third-party identity federation service with Amazon Cognito, and then use AWS IAM Identity Center (successor to AWS Single Sign-On) to grant access to Studio using the same third-party identity. Another one is to introduce full automation using Amazon SageMaker Pipelines for the CI/CD part of the model building, and using MLflow as a centralized experiment tracking server and model registry with strong governance capabilities, as well as automation to automatically deploy approved models to a SageMaker hosting endpoint.

Conclusion

The aim of this post was to provide enterprise-level access control for MLflow. To achieve this, we separated the authentication and authorization processes from the MLflow server and transferred them to API Gateway. We used two authorization methods offered by API Gateway, IAM authorizers and Lambda authorizers, to cater to the requirements of both the MLflow Python SDK and the MLflow UI. It’s important to understand that users are external to MLflow, so consistent governance requires maintaining the IAM policies, especially in case of very granular permissions. Finally, we demonstrated how to enhance the experience of data scientists by integrating MLflow into Studio through simple extensions.

Try out the solution on your own by accessing the GitHub repo and let us know if you have any questions in the comments!

About the Authors

Paolo Di Francesco is a Senior Solutions Architect at Amazon Web Services (AWS). He holds a PhD in Telecommunication Engineering and has experience in software engineering. He is passionate about machine learning and is currently focusing on using his experience to help customers reach their goals on AWS, in particular in discussions around MLOps. Outside of work, he enjoys playing soccer and reading.

Chris Fregly is a Principal Specialist Solution Architect for AI and machine learning at Amazon Web Services (AWS) based in San Francisco, California. He is co-author of the O’Reilly Book, “Data Science on AWS.” Chris is also the Founder of many global meetups focused on Apache Spark, TensorFlow, Ray, and KubeFlow. He regularly speaks at AI and machine learning conferences across the world including O’Reilly AI, Open Data Science Conference, and Big Data Spain.

Irshad Buchh is a Principal Solutions Architect at Amazon Web Services (AWS). Irshad works with large AWS Global ISV and SI partners and helps them build their cloud strategy and broad adoption of Amazon’s cloud computing platform. Irshad interacts with CIOs, CTOs and their Architects and helps them and their end customers implement their cloud vision. Irshad owns the strategic and technical engagements and ultimate success around specific implementation projects, developing deep expertise in the Amazon Web Services technologies as well as broad know-how around how applications and services are constructed using the Amazon Web Services platform.
