Learning to Navigate Outdoors without any Outdoor Experience – Google AI Blog

Teaching mobile robots to navigate in complex outdoor environments is critical to real-world applications, such as delivery or search and rescue. However, this is also a challenging problem, since the robot needs to perceive its surroundings and then explore to identify feasible paths towards the goal. Another common challenge is that the robot needs to overcome uneven terrain, such as stairs, curbs, or rockbed on a trail, while avoiding obstacles and pedestrians. In our prior work, we investigated the second challenge by teaching a quadruped robot to tackle challenging uneven obstacles and various outdoor terrains.

In “IndoorSim-to-OutdoorReal: Learning to Navigate Outdoors without any Outdoor Experience”, we present our recent work to tackle the robotic challenge of reasoning about the perceived surroundings to identify a viable navigation path in outdoor environments. We introduce a learning-based indoor-to-outdoor transfer algorithm that uses deep reinforcement learning to train a navigation policy in simulated indoor environments, and successfully transfers that same policy to real outdoor environments. We also introduce Context-Maps (maps with environment observations created by a user), which are applied to our algorithm to enable efficient long-range navigation. We demonstrate that with this policy, robots can successfully navigate hundreds of meters in novel outdoor environments, around previously unseen outdoor obstacles (trees, bushes, buildings, pedestrians, etc.), and in different weather conditions (sunny, overcast, sunset).

PointGoal navigation

User inputs can tell a robot where to go with commands like “go to the Android statue”, pictures showing a target location, or by simply picking a point on a map. In this work, we specify the navigation goal (a selected point on a map) as a coordinate relative to the robot’s current position (i.e., “go to ∆x, ∆y”); this is also known as the PointGoal Visual Navigation (PointNav) task. PointNav is a general formulation for navigation tasks and is one of the standard choices for indoor navigation tasks. However, due to the diverse visuals, uneven terrain and long-distance goals in outdoor environments, training PointNav policies for outdoor environments is a challenging task.
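To make the “go to ∆x, ∆y” formulation concrete, here is a minimal sketch that expresses a goal picked on a map in the robot’s own frame. It assumes a 2D world frame, a robot pose given as (x, y, yaw) with yaw in radians, and a robot frame with x pointing forward and y pointing left; the helper name `relative_goal` is hypothetical and not taken from the authors’ code.

```python
import math

def relative_goal(robot_x, robot_y, robot_yaw, goal_x, goal_y):
    """Return (dx, dy): the goal expressed in the robot frame (x forward, y left).

    Hypothetical helper; assumes a 2D world frame and yaw in radians.
    """
    # Offset from robot to goal, still in the world frame.
    wx, wy = goal_x - robot_x, goal_y - robot_y
    # Rotate the offset by -yaw so it is expressed in the robot frame.
    cos_y, sin_y = math.cos(robot_yaw), math.sin(robot_yaw)
    dx = cos_y * wx + sin_y * wy
    dy = -sin_y * wx + cos_y * wy
    return dx, dy

# Robot at the origin facing +y; a goal at (0, 5) is 5 m straight ahead.
dx, dy = relative_goal(0.0, 0.0, math.pi / 2, 0.0, 5.0)
```

Here `dx` is approximately 5.0 and `dy` approximately 0.0, which is the kind of relative target a PointNav policy receives instead of absolute map coordinates.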

Indoor-to-outdoor transfer

Recent successes in training wheeled and legged robotic agents to navigate in indoor environments have been enabled by the development of fast, scalable simulators and the availability of large-scale datasets of photorealistic 3D scans of indoor environments. To leverage these successes, we develop an indoor-to-outdoor transfer technique that enables our robots to learn from simulated indoor environments and to be deployed in real outdoor environments.

To overcome the differences between simulated indoor environments and real outdoor environments, we apply kinematic control and image augmentation techniques in our learning system. When using kinematic control, we assume the existence of a reliable low-level locomotion controller that can control the robot to precisely reach a new location. This assumption allows us to directly move the robot to the target location during simulation training through a forward Euler integration, and relieves us from having to explicitly model the underlying robot dynamics in simulation, which drastically improves the throughput of simulation data generation. Prior work has shown that kinematic control can lead to better sim-to-real transfer compared to a dynamic control approach, where full robot dynamics are modeled and a low-level locomotion controller is required for moving the robot.
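A minimal sketch of one kinematic-control step follows. The policy in this work outputs linear and angular velocities, so the sketch assumes a unicycle model driven by a linear velocity `v` (m/s) and an angular velocity `w` (rad/s); the control period `dt = 0.5` s and the names `Pose2D` and `kinematic_step` are hypothetical. Because the low-level controller is assumed to track the command exactly, the simulator can simply teleport the robot to the Euler-integrated pose instead of simulating its dynamics.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose2D:
    x: float    # meters
    y: float    # meters
    yaw: float  # radians

def kinematic_step(pose: Pose2D, v: float, w: float, dt: float = 0.5) -> Pose2D:
    """Advance the simulated robot one control step with forward Euler integration.

    Assumes a unicycle model and a perfect low-level tracking controller, so no
    robot dynamics are simulated. dt = 0.5 s is a hypothetical control period.
    """
    return Pose2D(
        x=pose.x + v * math.cos(pose.yaw) * dt,
        y=pose.y + v * math.sin(pose.yaw) * dt,
        yaw=pose.yaw + w * dt,
    )

# Four steps straight ahead at 1 m/s from the origin.
pose = Pose2D(0.0, 0.0, 0.0)
for _ in range(4):
    pose = kinematic_step(pose, v=1.0, w=0.0)
```

After the loop the robot sits 2 m ahead of where it started. The design choice is the trade described above: each step is a few arithmetic operations rather than a physics simulation, at the cost of relying on the real robot’s controller to make the commanded motion happen.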

| Left: Kinematic control; Right: Dynamic control |

We created an outdoor maze-like environment using objects found indoors for initial experiments, where we used Boston Dynamics’ Spot robot for test navigation. We found that the robot could navigate around novel obstacles in the new outdoor environment.

| The Spot robot successfully navigates around obstacles found in indoor environments, with a policy trained entirely in simulation. |

However, when faced with unfamiliar outdoor obstacles not seen during training, such as a large slope, the robot was unable to navigate the slope.

| The robot is unable to navigate up slopes, as slopes are rare in indoor environments and the robot was not trained to handle them. |

To enable the robot to walk up and down slopes, we apply an image augmentation technique during simulation training. Specifically, we randomly tilt the simulated camera on the robot during training; it can be pointed up or down within 30 degrees. This augmentation effectively makes the robot perceive slopes even though the floor is level. Training on these perceived slopes enables the robot to navigate slopes in the real world.
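The augmentation boils down to sampling a random pitch for the simulated camera. The sketch below assumes the pitch is drawn uniformly from [-30°, 30°] (the post gives the range but not the distribution or how often it is resampled) and represents the tilt as a rotation about the camera’s lateral x-axis. The names `sample_camera_pitch` and `pitch_rotation` are hypothetical, and the simulator call that would apply the rotation to the camera is not shown.

```python
import math
import random

MAX_TILT_DEG = 30.0  # tilt range stated in the post

def sample_camera_pitch(rng: random.Random) -> float:
    """Sample a camera pitch in radians, uniformly within +/- MAX_TILT_DEG (assumed uniform)."""
    return math.radians(rng.uniform(-MAX_TILT_DEG, MAX_TILT_DEG))

def pitch_rotation(pitch: float) -> list[list[float]]:
    """3x3 rotation matrix about the camera's lateral (x) axis by `pitch` radians."""
    c, s = math.cos(pitch), math.sin(pitch)
    return [
        [1.0, 0.0, 0.0],
        [0.0, c, -s],
        [0.0, s, c],
    ]

# Example: sample one tilt for a new training episode (seeded for reproducibility).
rng = random.Random(0)
pitch = sample_camera_pitch(rng)
rotation = pitch_rotation(pitch)
```

Tilting the camera down by some angle makes a level floor look, in the depth image, like ground sloping up towards the robot, which is why training on these tilted views can stand in for real slopes.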

| By randomly tilting the camera angle during training in simulation, the robot is now able to walk up and down slopes. |

Since the robots were only trained in simulated indoor environments, in which they typically need to walk to a goal just a few meters away, we find that the learned network failed to process longer-range inputs; for example, the policy failed to walk forward 100 meters in an empty space. To enable the policy network to handle the long-range inputs that are common in outdoor navigation, we normalize the goal vector by using the log of the goal distance.
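The post states only that the log of the goal distance is used. A minimal sketch, assuming the goal vector is encoded as (log(1 + d), cos θ, sin θ), where d is the distance to the goal in meters and θ its bearing in the robot frame: the +1 offset (which keeps the feature finite when d = 0) and the polar encoding are our assumptions, and `normalize_goal` is a hypothetical name.

```python
import math

def normalize_goal(dx: float, dy: float) -> tuple[float, float, float]:
    """Map a robot-frame goal offset (dx, dy) to (log(1 + distance), cos(bearing), sin(bearing)).

    The log1p compression and polar encoding are illustrative assumptions.
    """
    distance = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx)
    return math.log1p(distance), math.cos(bearing), math.sin(bearing)

near = normalize_goal(5.0, 0.0)    # a typical indoor-scale goal
far = normalize_goal(100.0, 0.0)   # an outdoor-scale goal
```

With this encoding, a goal 5 m away maps to a distance feature of about 1.79 and one 100 m away to about 4.62, so a goal 20 times farther shifts the input by only about 2.8 units and stays much closer to the range the network saw during indoor training.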

Context-Maps for complicated long-range navigation

Putting everything together, the robot can navigate outdoors towards the goal while walking on uneven terrain and avoiding trees, pedestrians and other outdoor obstacles. However, one key component is still missing: the robot’s ability to plan an efficient long-range path. At this scale of navigation, taking a wrong turn and backtracking can be costly. For example, we find that the local exploration strategy learned by standard PointNav policies is insufficient to find a long-range goal and usually leads to a dead end (shown below). This is because the robot is navigating without context of its environment, and the optimal path may not be visible to the robot from the start.

| Navigation policies without context of the environment do not handle complex long-range navigation goals. |

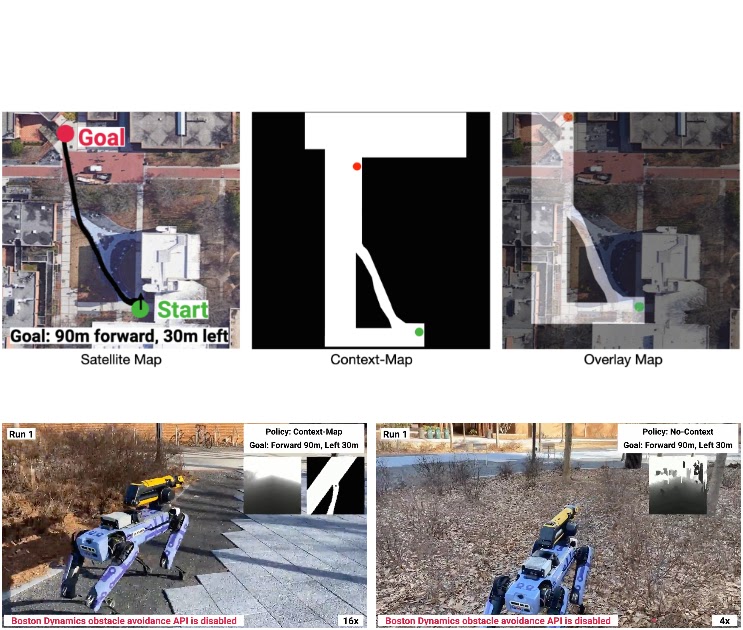

To enable the robot to take the context into consideration and purposefully plan an efficient path, we provide a Context-Map (a binary image that represents a top-down occupancy map of the region that the robot is within) as additional observations for the robot. An example Context-Map is given below, where the black region denotes areas occupied by obstacles and the white region is walkable by the robot. The green and red circles denote the start and goal locations of the navigation task. Through the Context-Map, we can provide hints to the robot (e.g., the narrow opening in the route below) to help it plan an efficient navigation route. In our experiments, we create the Context-Map for each route guided by Google Maps satellite images. We denote this variant of PointNav with environmental context as Context-Guided PointNav.
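To illustrate what a Context-Map encodes, the sketch below builds a toy binary occupancy grid with a single narrow opening and runs a classical breadth-first search to find the route through it. This is not the authors’ method: in the post, the learned policy consumes the Context-Map as an observation rather than running an explicit planner. The ASCII sketch and the helper names `to_binary_map` and `bfs_path` are hypothetical; `#` stands for black (occupied) pixels and `.` for white (walkable) pixels.

```python
from collections import deque

# Hypothetical hand-sketched Context-Map: '#' = occupied (black), '.' = walkable (white).
# Row 2 is a wall with one narrow opening at column 8.
SKETCH = [
    "..........",
    "..........",
    "########.#",
    "..........",
    "..........",
]

def to_binary_map(sketch: list[str]) -> list[list[int]]:
    """Convert the ASCII sketch into a binary occupancy grid (1 = occupied, 0 = free)."""
    return [[1 if ch == "#" else 0 for ch in row] for row in sketch]

def bfs_path(grid, start, goal):
    """Shortest 4-connected path of (row, col) cells from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Follow parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] == 0 and nxt not in parent):
                parent[nxt] = cell
                queue.append(nxt)
    return None

grid = to_binary_map(SKETCH)
path = bfs_path(grid, start=(0, 0), goal=(4, 0))
```

The goal lies directly “below” the start, but the straight line is blocked by the wall in row 2; the shortest route detours through the opening at (2, 8) and takes 20 moves. A robot without this map would only discover the opening by exploring, which is exactly the kind of hint the Context-Map provides.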


| Example of the Context-Map (right) for a navigation task (left). |

It is important to note that the Context-Map does not need to be accurate, because it only serves as a rough outline for planning. During navigation, the robot still needs to rely on its onboard cameras to identify and adapt its path to pedestrians, who are absent from the map. In our experiments, a human operator quickly sketches the Context-Map from the satellite image, masking out the regions to be avoided. This Context-Map, together with other onboard sensory inputs, including depth images and the relative position of the goal, is fed into a neural network with attention models (i.e., transformers), which is trained using DD-PPO, a distributed implementation of proximal policy optimization, in large-scale simulations.
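DD-PPO distributes proximal policy optimization across many simulation workers, but each worker still optimizes the standard PPO clipped surrogate objective, mean(min(r·A, clip(r, 1 − ε, 1 + ε)·A)) with r = π_new(a|s) / π_old(a|s). A minimal sketch of that objective for a batch of samples follows, assuming per-sample log-probabilities and precomputed advantage estimates. The clip value ε = 0.2 is a common default rather than a value reported in the post, `ppo_clipped_objective` is a hypothetical name, and the distributed gradient synchronization that distinguishes DD-PPO is omitted.

```python
import math

def ppo_clipped_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized) over a batch.

    new_logp / old_logp: log-probabilities of the taken actions under the current
    policy and the data-collecting policy; advantages: advantage estimates.
    clip_eps = 0.2 is a common default, not a value from the post.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(lp_new - lp_old)                          # pi_new / pi_old
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)  # clip(r, 1-eps, 1+eps)
        total += min(ratio * adv, clipped * adv)                   # pessimistic of the two
    return total / len(advantages)

# Sample 1: ratio 1.5 with positive advantage is capped at 1.2.
# Sample 2: ratio 0.5 with negative advantage; the clipped term (0.8 * -1) is smaller.
objective = ppo_clipped_objective(
    new_logp=[math.log(1.5), math.log(0.5)],
    old_logp=[0.0, 0.0],
    advantages=[1.0, -1.0],
)
```

The batch objective is (1.2 − 0.8) / 2 = 0.2. Taking the minimum of the clipped and unclipped terms removes any incentive to move the policy far from the one that collected the data in a single update.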


| The Context-Guided PointNav architecture consists of a 3-layer convolutional neural network (CNN) to process depth images from the robot’s camera, and a multilayer perceptron (MLP) to process the goal vector. The features are passed into a gated recurrent unit (GRU). We use an additional CNN encoder to process the Context-Map (top-down map). We compute the scaled dot-product attention between the map and the depth image, and use a second GRU to process the attended features (Context Attn., Depth Attn.). The outputs of the policy are linear and angular velocities for the Spot robot to follow. |
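The caption names scaled dot-product attention, which computes softmax(QKᵀ / √d)·V. The dependency-free sketch below shows that operation alone. Which modality supplies the queries and which the keys and values, the feature sizes, and the toy vectors are all assumptions (here map features attend over depth features); the learned query, key, and value projections of a full transformer layer are omitted.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d)) V for lists of equal-length feature vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Hypothetical toy features: one map token attending over two depth tokens.
map_tokens = [[1.0, 0.0]]
depth_tokens = [[1.0, 0.0], [0.0, 1.0]]
attended = scaled_dot_product_attention(map_tokens, depth_tokens, depth_tokens)
```

Because the map token is more similar to the first depth token, the output leans towards that token’s features. Dividing by √d keeps the softmax from saturating as the feature dimension grows.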

Results

We evaluate our system on three long-range outdoor navigation tasks. The provided Context-Maps are rough, incomplete environment outlines that omit obstacles such as cars, trees, or chairs.

With the proposed algorithm, our robot successfully reached the distant goal location 100% of the time, without a single collision or human intervention. The robot was able to navigate around pedestrians and real-world clutter that are not present on the Context-Map, and to walk on varied terrain, including dirt slopes and grass.

Route 1


Route 2


Route 3


Conclusion

This work opens up robotic navigation research to the less explored domain of diverse outdoor environments. Our indoor-to-outdoor transfer algorithm uses zero real-world experience and does not require the simulator to model predominantly outdoor phenomena (terrain, ditches, sidewalks, cars, etc.). The success of the approach comes from a combination of robust locomotion control, a low sim-to-real gap in the depth and map sensors, and large-scale training in simulation. We demonstrate that providing robots with approximate, high-level maps can enable long-range navigation in novel outdoor environments. Our results provide compelling evidence for challenging the (admittedly reasonable) hypothesis that a new simulator must be designed for every new scenario we wish to study. For more information, please see our project page.

Acknowledgements

We would like to thank Sonia Chernova, Tingnan Zhang, April Zitkovich, Dhruv Batra, and Jie Tan for advising and contributing to the project. We would also like to thank Naoki Yokoyama, Nubby Lee, Diego Reyes, Ben Jyenis, and Gus Kouretas for help with the robot experiment setup.