A New AI Research Paper From Stanford Presents an Alternative Explanation for Seemingly Sharp and Unpredictable Emergent Abilities of Large Language Models

Researchers have long explored the emergent properties of complex systems, from physics to biology to mathematics. Nobel Prize-winning physicist P.W. Anderson’s commentary “More Is Different” is one notable example. It makes the case that as a system’s complexity rises, new properties may manifest that cannot (easily or at all) be predicted, even from a precise quantitative understanding of the system’s microscopic details. Emergence has recently attracted a lot of interest in machine learning, owing to findings that large language models (LLMs) such as GPT, PaLM, and LaMDA may display so-called “emergent abilities” across a variety of tasks.

It was recently and succinctly stated that “emergent abilities of LLMs” refers to “abilities that are not present in smaller-scale models but are present in large-scale models; thus, they cannot be predicted by simply extrapolating the performance improvements on smaller-scale models.” The GPT-3 family may have been the first in which such emergent abilities were observed. Later works emphasized the finding, writing that “performance is predictable at a general level, performance on a specific task can sometimes emerge quite unpredictably and abruptly at scale”; in fact, these emergent abilities were so startling and noteworthy that it was argued that such “abrupt, specific capability scaling” should be considered one of the two main defining features of LLMs. The terms “sharp left turns” and “breakthrough capabilities” have also been used.

These quotations identify the two characteristics that distinguish emergent abilities in LLMs:

1. Sharpness, changing from absent to present seemingly instantaneously

2. Unpredictability, transitioning at model sizes that appear to be unforeseeable

These newly discovered abilities have attracted a lot of interest, leading to questions such as: What determines which abilities will emerge? What determines when abilities will manifest? How can researchers ensure that desirable abilities always emerge while preventing the emergence of undesirable ones? Emergent abilities make these questions relevant to AI safety and alignment, because they suggest that larger models may someday, without warning, acquire undesirable mastery over dangerous capabilities.

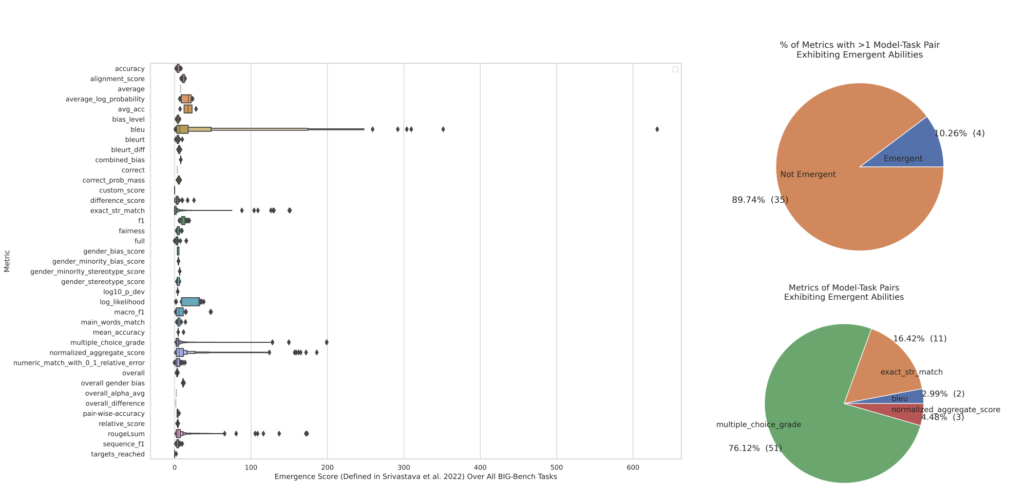

In this study, researchers from Stanford take a closer look at the idea that LLMs possess emergent abilities, or more precisely, abrupt and unanticipated changes in model outputs as a function of model scale on particular tasks. Their skepticism stems from the finding that emergent abilities appear limited to metrics that discontinuously or nonlinearly scale any model’s per-token error rate. For instance, they show that on BIG-Bench tasks, more than 92% of emergent abilities fall under one of two metrics. Multiple Choice Grade is defined as 1 if the option with the highest probability is the correct one, and 0 otherwise. Exact String Match is defined as 1 if the output string exactly matches the target string, and 0 otherwise.
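To make the all-or-nothing nature of these two metrics concrete, here is a minimal Python sketch of both definitions. The function names and the example inputs are hypothetical and are not taken from BIG-Bench or from the paper; the code only follows the definitions given in the paragraph above.

```python
from typing import Sequence


def multiple_choice_grade(option_probs: Sequence[float], correct_index: int) -> int:
    """Return 1 if the highest-probability option is the correct one, else 0."""
    best_index = max(range(len(option_probs)), key=lambda i: option_probs[i])
    return int(best_index == correct_index)


def exact_string_match(output: str, target: str) -> int:
    """Return 1 only if the output equals the target character for character."""
    return int(output == target)


# Hypothetical examples: a near miss scores the same as a completely wrong answer.
print(exact_string_match("12345", "12345"))
print(exact_string_match("12346", "12345"))
print(multiple_choice_grade([0.1, 0.6, 0.3], correct_index=1))
print(multiple_choice_grade([0.5, 0.4, 0.1], correct_index=1))
```

Both functions return only 0 or 1, so an answer that is wrong in a single character gets no more credit than one that is wrong everywhere.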

This raises the possibility of a different explanation for the emergent abilities of LLMs: changes that appear abrupt and unpredictable may have been brought on by the researcher’s choice of measurement, even though the model family’s per-token error rate changes smoothly, continuously, and predictably with increasing model scale.

Specifically, they claim that emergent abilities are a mirage caused by three factors: the researcher’s choice of a metric that nonlinearly or discontinuously deforms per-token error rates, the lack of enough test data to accurately estimate the performance of smaller models (which makes smaller models appear wholly incapable of performing the task), and the evaluation of too few large-scale models. They provide a simple mathematical model to express their alternative viewpoint and show how it reproduces the evidence offered for emergent LLM abilities.
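The first two factors can be illustrated with a toy calculation. The sketch below is not the authors’ model. It assumes, hypothetically, that per-token accuracy improves smoothly across a family of models, that tokens are scored independently, and that the target is 10 tokens long. All numeric values are invented for illustration.

```python
def exact_match_accuracy(per_token_accuracy: float, target_length: int) -> float:
    """Probability that every token is right, assuming independent tokens."""
    return per_token_accuracy ** target_length


def expected_token_errors(per_token_accuracy: float, target_length: int) -> float:
    """Expected number of wrong tokens: linear in the per-token error rate."""
    return target_length * (1.0 - per_token_accuracy)


def smallest_nonzero_accuracy(test_set_size: int) -> float:
    """The smallest nonzero accuracy a test set of this size can report."""
    return 1.0 / test_set_size


TARGET_LENGTH = 10  # hypothetical target length in tokens
# Hypothetical per-token accuracies for models of increasing size.
per_token_accuracies = [0.50, 0.60, 0.70, 0.80, 0.90, 0.95, 0.99]

for p in per_token_accuracies:
    em = exact_match_accuracy(p, TARGET_LENGTH)
    errors = expected_token_errors(p, TARGET_LENGTH)
    print(f"per-token acc={p:.2f}  exact match={em:.4f}  expected token errors={errors:.2f}")

print(f"resolution of a 100-example test set: {smallest_nonzero_accuracy(100):.2f}")
```

Under these assumptions, the exact-match accuracy of the smallest model falls below what a 100-example test set can resolve, so it would most likely be measured as zero. The expected number of wrong tokens, by contrast, falls steadily over the same range of models.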

Following that, they put their alternative theory to the test in three complementary ways:

1. Using the InstructGPT / GPT-3 model family, they formulate, test, and confirm three predictions based on their alternative hypothesis.

2. They conduct a meta-analysis of previously published data and show that, in the space of task-metric-model family triplets, emergent abilities appear only for certain metrics and not for particular model families on particular tasks. They further show that changing the metric applied to outputs from fixed models makes the emergence phenomenon vanish.

3. They illustrate how similar metric choices can produce what appear to be emergent abilities by intentionally inducing them in deep neural networks of various architectures on multiple vision tasks (something that, to the best of their knowledge, had never been demonstrated before).
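The idea behind the second test, re-scoring the same fixed outputs with a different metric, can be sketched as follows. The target and the model outputs are invented for illustration, and character-level edit distance is used here as an assumed stand-in for a continuous metric; it is not necessarily the metric used in the paper.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                      # delete char_a
                current[j - 1] + 1,                   # insert char_b
                previous[j - 1] + substitution_cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


TARGET = "24680"
# Hypothetical outputs from models of increasing size on a single prompt.
outputs_by_model = {
    "small": "13579",
    "medium": "24579",
    "large": "24681",
    "largest": "24680",
}

for name, output in outputs_by_model.items():
    exact = int(output == TARGET)
    distance = edit_distance(output, TARGET)
    print(f"{name:8s} exact match={exact}  edit distance={distance}")
```

With these made-up outputs, exact match stays at 0 for the first three models and jumps to 1 only for the last, while edit distance shrinks step by step across the same four outputs.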

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.