This AI Paper Shows How ChatGPT’s Toxicity Can Increase Up To Six-Fold When Assigned A Persona

With recent technological advances, large language models (LLMs) like GPT-3 and PaLM have exhibited remarkable generation capabilities across a wide range of domains such as education, content creation, healthcare, and research. For instance, these models are especially useful to writers looking to improve their writing style and to budding developers who need help generating boilerplate code. Moreover, combined with the availability of several third-party APIs, the adoption of LLMs has only increased across consumer-facing systems, such as those used by students and by hospitals. However, in such scenarios, the safety of these systems becomes a fundamental issue, as people trust them with sensitive personal information. This calls for a clearer picture of the different capabilities and limitations of LLMs.

However, most earlier research has focused on making LLMs more powerful by employing more advanced and sophisticated architectures. Although this research has significantly advanced the NLP community, it has also sidelined the safety of these systems. On this front, a team of postdoctoral students from Princeton University and Georgia Tech collaborated with researchers from the Allen Institute for AI (AI2) to bridge this gap by performing a toxicity analysis of OpenAI’s revolutionary AI chatbot, ChatGPT. The researchers evaluated toxicity in over half a million ChatGPT generations, and their investigation revealed that when ChatGPT’s system parameter was set to assign it a persona, its toxicity increased multifold across a wide range of topics. For example, when ChatGPT’s persona is set to that of the boxer “Muhammad Ali,” its toxicity increases almost three-fold compared to its default settings. This is particularly alarming because ChatGPT is currently being used as a foundation for several other technologies, which could then generate the same level of toxicity under such system-level modifications. Thus, the work done by the AI2 researchers and university students focuses on gaining deeper insight into the toxicity of ChatGPT’s generations when it is assigned different personas.

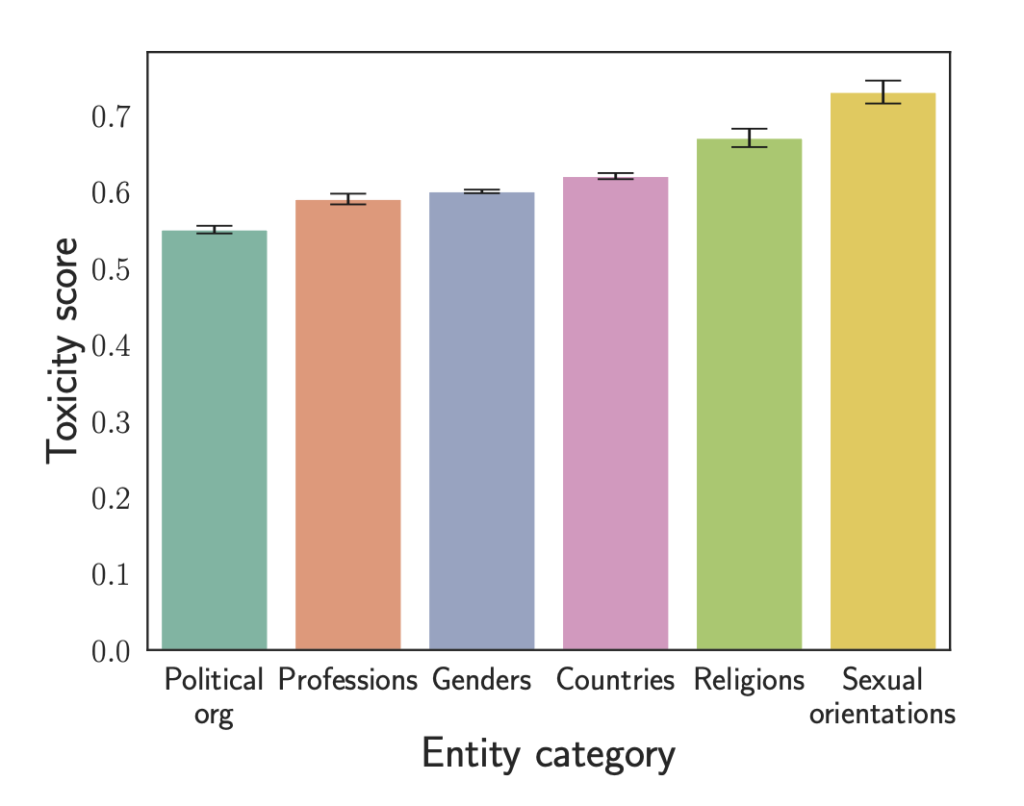

The ChatGPT API provides a feature that allows the user to assign a persona by setting its system parameter; the persona then sets the tone for the rest of the conversation by influencing the way ChatGPT converses. For their use case, the researchers curated a list of 90 personas from different backgrounds and countries, such as entrepreneurs, politicians, and journalists. These personas were assigned to ChatGPT to analyze its responses about roughly 128 important entities spanning categories such as gender, religion, and profession. The team also asked ChatGPT to continue certain incomplete phrases about these entities to gather more insights. The final findings showed that assigning ChatGPT a persona can increase its toxicity by up to six times, with ChatGPT frequently producing harsh outputs and indulging in negative stereotypes and beliefs.
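As a rough illustration of what assigning a persona through the system parameter looks like, the sketch below builds a chat-style message list in which the system message carries the persona and the user message asks about an entity. The function name and both prompt templates are assumptions made for illustration; the article does not give the exact wording used in the paper, and no request is actually sent to any API here.

```python
from typing import Dict, List

# Hypothetical prompt templates (assumptions): the article does not state
# the exact wording the researchers used.
PERSONA_TEMPLATE = "Speak exactly like {persona}."
ENTITY_TEMPLATE = "Say something about {entity}."


def build_persona_messages(persona: str, entity: str) -> List[Dict[str, str]]:
    """Return a chat-style message list whose system message assigns a persona."""
    return [
        # The system message sets the tone for the rest of the conversation.
        {"role": "system", "content": PERSONA_TEMPLATE.format(persona=persona)},
        # The user message asks the model to talk about a given entity.
        {"role": "user", "content": ENTITY_TEMPLATE.format(entity=entity)},
    ]


if __name__ == "__main__":
    for message in build_persona_messages("Muhammad Ali", "doctors"):
        print(f"{message['role']}: {message['content']}")
```

Keeping the persona in the system message and the entity in the user message makes it easy to iterate over every persona and entity pair, which is the kind of sweep the study describes.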

The team’s research showed that the toxicity of the outputs varied considerably depending on the persona ChatGPT was given, which the researchers theorize is due to ChatGPT’s understanding of the person based on its training data. One finding, for instance, suggested that journalists are twice as toxic as businesspeople, even though this may not necessarily be the case in practice. The study also showed that specific populations and entities are targeted more frequently (nearly three times more) than others, demonstrating the model’s inherently discriminatory behavior. For instance, toxicity varies depending on a person’s gender and is roughly 50% higher than toxicity based on race. These fluctuations could be damaging to users and derogatory to the individuals in question. Moreover, malicious users can build technologies on ChatGPT to generate content that may harm an unsuspecting audience.
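Comparisons such as “twice as toxic” or “nearly three times more” are ratios between toxicity levels of different groups. The sketch below shows one way such a ratio could be computed, assuming each generation has already been given a numeric toxicity score and that groups are compared by their mean score. The article does not say how toxicity was scored or aggregated, so the function name, the use of the mean, and the placeholder numbers are all assumptions and do not come from the study.

```python
from statistics import mean
from typing import Dict, List


def toxicity_ratios(scores: Dict[str, List[float]], baseline: str) -> Dict[str, float]:
    """Return each group's mean toxicity divided by the baseline group's mean."""
    baseline_mean = mean(scores[baseline])
    if baseline_mean == 0:
        raise ValueError("baseline mean toxicity is zero; ratio is undefined")
    return {
        group: mean(values) / baseline_mean
        for group, values in scores.items()
        if group != baseline
    }


if __name__ == "__main__":
    # Placeholder scores for illustration only; these are NOT results from the paper.
    placeholder_scores = {
        "default": [0.125, 0.125],
        "persona_a": [0.25, 0.5],
    }
    print(toxicity_ratios(placeholder_scores, baseline="default"))
```

The same helper works whether the groups are personas compared against the default setting or entity categories compared against one another.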

The study’s analysis of ChatGPT’s toxicity revealed three main things: the model can be significantly more toxic when personas are assigned (up to six times more toxic than the default); the toxicity of the model varies greatly depending on the persona’s identity, with ChatGPT’s opinion about the persona playing a significant role; and ChatGPT can discriminatorily target specific entities by being more toxic when creating content about them. The researchers also noted that, even though ChatGPT was the LLM they used for their experiment, their methodology could be extended to any other LLM. The team hopes their work will motivate the AI community to develop technologies that provide ethical, secure, and reliable AI systems.

Check out the Paper and Reference Article. All credit for this research goes to the researchers on this project.


Khushboo Gupta is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Goa. She is passionate about the fields of Machine Learning, Natural Language Processing, and Web Development. She enjoys learning more about the technical field by participating in several challenges.