Inpaint photos with Steady Diffusion utilizing Amazon SageMaker JumpStart

In November 2022, we announced that AWS clients can generate photos from textual content with Stable Diffusion fashions utilizing Amazon SageMaker JumpStart. Right now, we’re excited to introduce a brand new function that allows customers to inpaint photos with Steady Diffusion fashions. Inpainting refers back to the technique of changing a portion of a picture with one other picture based mostly on a textual immediate. By offering the unique picture, a masks picture that outlines the portion to get replaced, and a textual immediate, the Steady Diffusion mannequin can produce a brand new picture that replaces the masked space with the article, topic, or surroundings described within the textual immediate.

You need to use inpainting for restoring degraded photos or creating new photos with novel topics or kinds in sure sections. Inside the realm of architectural design, Steady Diffusion inpainting may be utilized to restore incomplete or broken areas of constructing blueprints, offering exact data for development crews. Within the case of medical MRI imaging, the affected person’s head should be restrained, which can result in subpar outcomes as a result of cropping artifact inflicting knowledge loss or decreased diagnostic accuracy. Picture inpainting can successfully assist mitigate these suboptimal outcomes.

On this put up, we current a complete information on deploying and working inference utilizing the Steady Diffusion inpainting mannequin in two strategies: by means of JumpStart’s person interface (UI) in Amazon SageMaker Studio, and programmatically by means of JumpStart APIs out there within the SageMaker Python SDK.

Resolution overview

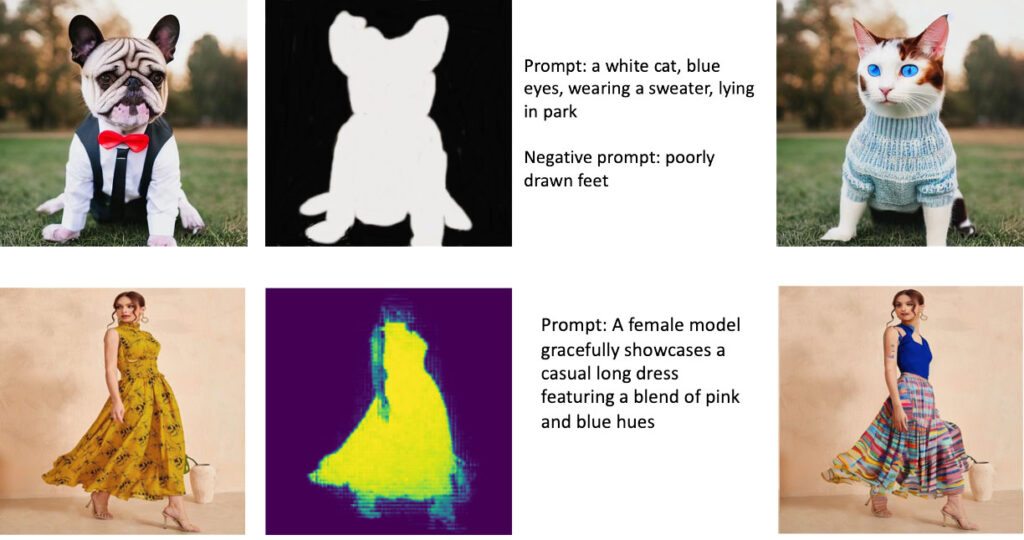

The next photos are examples of inpainting. The unique photos are on the left, the masks picture is within the middle, and the inpainted picture generated by the mannequin is on the fitting. For the primary instance, the mannequin was supplied with the unique picture, a masks picture, and the textual immediate “a white cat, blue eyes, carrying a sweater, mendacity in park,” in addition to the detrimental immediate “poorly drawn ft.” For the second instance, the textual immediate was “A feminine mannequin gracefully showcases an off-the-cuff lengthy gown that includes a mix of pink and blue hues,”

Working giant fashions like Steady Diffusion requires customized inference scripts. You must run end-to-end assessments to ensure that the script, the mannequin, and the specified occasion work collectively effectively. JumpStart simplifies this course of by offering ready-to-use scripts which have been robustly examined. You possibly can entry these scripts with one click on by means of the Studio UI or with only a few strains of code by means of the JumpStart APIs.

The next sections information you thru deploying the mannequin and working inference utilizing both the Studio UI or the JumpStart APIs.

Be aware that by utilizing this mannequin, you conform to the CreativeML Open RAIL++-M License.

Entry JumpStart by means of the Studio UI

On this part, we illustrate the deployment of JumpStart fashions utilizing the Studio UI. The accompanying video demonstrates finding the pre-trained Steady Diffusion inpainting mannequin on JumpStart and deploying it. The mannequin web page provides important particulars in regards to the mannequin and its utilization. To carry out inference, we make use of the ml.p3.2xlarge occasion sort, which delivers the required GPU acceleration for low-latency inference at an inexpensive value. After the SageMaker internet hosting occasion is configured, select Deploy. The endpoint will probably be operational and ready to deal with inference requests inside roughly 10 minutes.

JumpStart gives a pattern pocket book that may assist speed up the time it takes to run inference on the newly created endpoint. To entry the pocket book in Studio, select Open Pocket book within the Use Endpoint from Studio part of the mannequin endpoint web page.

Use JumpStart programmatically with the SageMaker SDK

Using the JumpStart UI lets you deploy a pre-trained mannequin interactively with just a few clicks. Alternatively, you possibly can make use of JumpStart fashions programmatically by utilizing APIs built-in inside the SageMaker Python SDK.

On this part, we select an acceptable pre-trained mannequin in JumpStart, deploy this mannequin to a SageMaker endpoint, and carry out inference on the deployed endpoint, all utilizing the SageMaker Python SDK. The next examples comprise code snippets. To entry the whole code with all of the steps included on this demonstration, consult with the Introduction to JumpStart Image editing – Stable Diffusion Inpainting instance pocket book.

Deploy the pre-trained mannequin

SageMaker makes use of Docker containers for numerous construct and runtime duties. JumpStart makes use of the SageMaker Deep Learning Containers (DLCs) which might be framework-specific. We first fetch any further packages, in addition to scripts to deal with coaching and inference for the chosen activity. Then the pre-trained mannequin artifacts are individually fetched with model_uris, which gives flexibility to the platform. This enables a number of pre-trained fashions for use with a single inference script. The next code illustrates this course of:

Subsequent, we offer these sources to a SageMaker model occasion and deploy an endpoint:

After the mannequin is deployed, we will get hold of real-time predictions from it!

Enter

The enter is the bottom picture, a masks picture, and the immediate describing the topic, object, or surroundings to be substituted within the masked-out portion. Creating the right masks picture for in-painting results includes a number of finest practices. Begin with a particular immediate, and don’t hesitate to experiment with numerous Steady Diffusion settings to attain desired outcomes. Make the most of a masks picture that intently resembles the picture you purpose to inpaint. This strategy aids the inpainting algorithm in finishing the lacking sections of the picture, leading to a extra pure look. Excessive-quality photos typically yield higher outcomes, so be sure that your base and masks photos are of excellent high quality and resemble one another. Moreover, go for a big and easy masks picture to protect element and reduce artifacts.

The endpoint accepts the bottom picture and masks as uncooked RGB values or a base64 encoded picture. The inference handler decodes the picture based mostly on content_type:

- For

content_type = “software/json”, the enter payload should be a JSON dictionary with the uncooked RGB values, textual immediate, and different non-compulsory parameters - For

content_type = “software/json;jpeg”, the enter payload should be a JSON dictionary with the base64 encoded picture, a textual immediate, and different non-compulsory parameters

Output

The endpoint can generate two sorts of output: a Base64-encoded RGB picture or a JSON dictionary of the generated photos. You possibly can specify which output format you need by setting the settle for header to "software/json" or "software/json;jpeg" for a JPEG picture or base64, respectively.

- For

settle for = “software/json”, the endpoint returns the a JSON dictionary with RGB values for the picture - For

settle for = “software/json;jpeg”, the endpoint returns a JSON dictionary with the JPEG picture as bytes encoded with base64.b64 encoding

Be aware that sending or receiving the payload with the uncooked RGB values might hit default limits for the enter payload and the response dimension. Due to this fact, we suggest utilizing the base64 encoded picture by setting content_type = “software/json;jpeg” and settle for = “software/json;jpeg”.

The next code is an instance inference request:

Supported parameters

Steady Diffusion inpainting fashions assist many parameters for picture technology:

- picture – The unique picture.

- masks – A picture the place the blacked-out portion stays unchanged throughout picture technology and the white portion is changed.

- immediate – A immediate to information the picture technology. It may be a string or an inventory of strings.

- num_inference_steps (non-compulsory) – The variety of denoising steps throughout picture technology. Extra steps result in increased high quality picture. If specified, it should be a optimistic integer. Be aware that extra inference steps will result in an extended response time.

- guidance_scale (non-compulsory) – A better steerage scale ends in a picture extra intently associated to the immediate, on the expense of picture high quality. If specified, it should be a float.

guidance_scale<=1is ignored. - negative_prompt (non-compulsory) – This guides the picture technology in opposition to this immediate. If specified, it should be a string or an inventory of strings and used with

guidance_scale. Ifguidance_scaleis disabled, that is additionally disabled. Furthermore, if the immediate is an inventory of strings, then thenegative_promptshould even be an inventory of strings. - seed (non-compulsory) – This fixes the randomized state for reproducibility. If specified, it should be an integer. Everytime you use the identical immediate with the identical seed, the ensuing picture will at all times be the identical.

- batch_size (non-compulsory) – The variety of photos to generate in a single ahead go. If utilizing a smaller occasion or producing many photos, scale back

batch_sizeto be a small quantity (1–2). The variety of photos = variety of prompts*num_images_per_prompt.

Limitations and biases

Though Steady Diffusion has spectacular efficiency in inpainting, it suffers from a number of limitations and biases. These embody however aren’t restricted to:

- The mannequin might not generate correct faces or limbs as a result of the coaching knowledge doesn’t embody ample photos with these options.

- The mannequin was skilled on the LAION-5B dataset, which has grownup content material and might not be match for product use with out additional issues.

- The mannequin might not work properly with non-English languages as a result of the mannequin was skilled on English language textual content.

- The mannequin can’t generate good textual content inside photos.

- Steady Diffusion inpainting usually works finest with photos of decrease resolutions, reminiscent of 256×256 or 512×512 pixels. When working with high-resolution photos (768×768 or increased), the tactic would possibly battle to keep up the specified degree of high quality and element.

- Though using a seed might help management reproducibility, Steady Diffusion inpainting should produce assorted outcomes with slight alterations to the enter or parameters. This would possibly make it difficult to fine-tune the output for particular necessities.

- The tactic would possibly battle with producing intricate textures and patterns, particularly after they span giant areas inside the picture or are important for sustaining the general coherence and high quality of the inpainted area.

For extra data on limitations and bias, consult with the Stable Diffusion Inpainting model card.

Inpainting answer with masks generated by way of a immediate

CLIPSeq is a sophisticated deep studying approach that makes use of the ability of pre-trained CLIP (Contrastive Language-Picture Pretraining) fashions to generate masks from enter photos. This strategy gives an environment friendly solution to create masks for duties reminiscent of picture segmentation, inpainting, and manipulation. CLIPSeq makes use of CLIP to generate a textual content description of the enter picture. The textual content description is then used to generate a masks that identifies the pixels within the picture which might be related to the textual content description. The masks can then be used to isolate the related components of the picture for additional processing.

CLIPSeq has a number of benefits over different strategies for producing masks from enter photos. First, it’s a extra environment friendly technique, as a result of it doesn’t require the picture to be processed by a separate picture segmentation algorithm. Second, it’s extra correct, as a result of it might probably generate masks which might be extra intently aligned with the textual content description of the picture. Third, it’s extra versatile, as a result of you should utilize it to generate masks from all kinds of photos.

Nonetheless, CLIPSeq additionally has some disadvantages. First, the approach might have limitations by way of subject material, as a result of it depends on pre-trained CLIP fashions that will not embody particular domains or areas of experience. Second, it may be a delicate technique, as a result of it’s vulnerable to errors within the textual content description of the picture.

For extra data, consult with Virtual fashion styling with generative AI using Amazon SageMaker.

Clear up

After you’re achieved working the pocket book, be sure that to delete all sources created within the course of to make sure that the billing is stopped. The code to wash up the endpoint is out there within the related notebook.

Conclusion

On this put up, we confirmed find out how to deploy a pre-trained Steady Diffusion inpainting mannequin utilizing JumpStart. We confirmed code snippets on this put up—the total code with all the steps on this demo is out there within the Introduction to JumpStart – Enhance image quality guided by prompt instance pocket book. Check out the answer by yourself and ship us your feedback.

To study extra in regards to the mannequin and the way it works, see the next sources:

To study extra about JumpStart, try the next posts:

Concerning the Authors

Dr. Vivek Madan is an Utilized Scientist with the Amazon SageMaker JumpStart group. He received his PhD from College of Illinois at Urbana-Champaign and was a Publish Doctoral Researcher at Georgia Tech. He’s an energetic researcher in machine studying and algorithm design and has revealed papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Dr. Vivek Madan is an Utilized Scientist with the Amazon SageMaker JumpStart group. He received his PhD from College of Illinois at Urbana-Champaign and was a Publish Doctoral Researcher at Georgia Tech. He’s an energetic researcher in machine studying and algorithm design and has revealed papers in EMNLP, ICLR, COLT, FOCS, and SODA conferences.

Alfred Shen is a Senior AI/ML Specialist at AWS. He has been working in Silicon Valley, holding technical and managerial positions in numerous sectors together with healthcare, finance, and high-tech. He’s a devoted utilized AI/ML researcher, concentrating on CV, NLP, and multimodality. His work has been showcased in publications reminiscent of EMNLP, ICLR, and Public Well being.

Alfred Shen is a Senior AI/ML Specialist at AWS. He has been working in Silicon Valley, holding technical and managerial positions in numerous sectors together with healthcare, finance, and high-tech. He’s a devoted utilized AI/ML researcher, concentrating on CV, NLP, and multimodality. His work has been showcased in publications reminiscent of EMNLP, ICLR, and Public Well being.