Finest Machine Studying Mannequin For Sparse Knowledge

Picture by Writer

Sparse knowledge refers to datasets with many options with zero values. It might trigger issues in numerous fields, particularly in machine studying.

Sparse knowledge can happen on account of inappropriate function engineering strategies. For example, utilizing a one-hot encoding that creates numerous dummy variables.

Sparsity will be calculated by taking the ratio of zeros in a dataset to the entire variety of components. Addressing sparsity will have an effect on the accuracy of your machine-learning mannequin.

Additionally, we should always distinguish sparsity from lacking knowledge. Lacking knowledge merely implies that some values are usually not accessible. In sparse knowledge, all values are current, however most are zero.

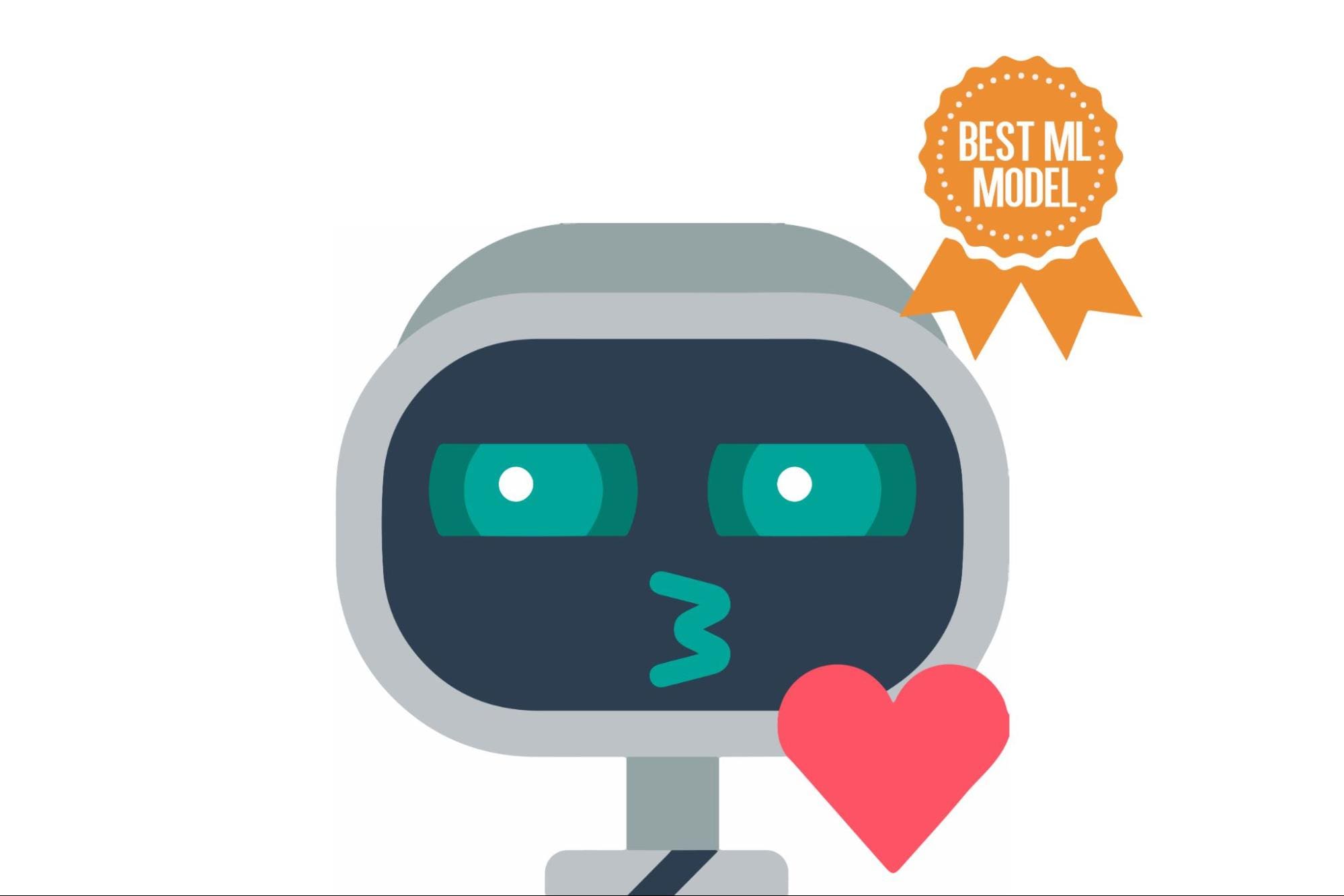

Additionally, sparsity causes distinctive challenges for machine studying. To be precise, it causes overfitting, shedding good knowledge, reminiscence issues, and time issues.

This text will discover these widespread issues associated to sparse knowledge. Then we’ll cowl the strategies used to deal with this difficulty.

Lastly, we’ll apply totally different machine studying fashions to the sparse knowledge and clarify why these fashions are appropriate for sparse knowledge.

All through the article, I’ll predominantly use the scikit-learn library, and for those who want to modify the code and arguments, I’ll present the official documentation hyperlinks too.

Now let’s begin with the widespread issues with sparse knowledge.

Sparse knowledge can pose distinctive challenges for knowledge evaluation. We already talked about that a few of the most typical points embrace overfitting, shedding good knowledge, reminiscence issues, and time issues.

Now, let’s have an in depth have a look at every.

Picture by Writer

Overfitting

Overfitting happens when a mannequin turns into too complicated and begins to seize noise within the knowledge as an alternative of the underlying patterns.

In sparse knowledge, there could also be numerous options, however only some of them are literally related to the evaluation. This may make it troublesome to determine which options are necessary and which of them are usually not.

Because of this, a mannequin could overfit to noise within the knowledge and carry out poorly on new knowledge.

In case you are new to machine studying or wish to know extra, you are able to do that within the scikit-learn documentation about overfitting.

Shedding Good Knowledge

One of many greatest issues with sparse knowledge is that it might probably result in the lack of probably helpful data.

When we now have very restricted knowledge, it turns into tougher to determine significant patterns or relationships in that knowledge. It is because the noise and randomness inherent to any knowledge set can extra simply obscure important options when the info is sparse.

Moreover, as a result of the quantity of knowledge accessible is proscribed, there’s a larger probability that we are going to miss out on a few of the actually beneficial patterns or relationships within the knowledge. That is very true in circumstances the place the info is sparse resulting from a scarcity of sampling, versus merely being lacking. In such circumstances, we could not even concentrate on the lacking knowledge factors and thus could not understand we’re shedding beneficial data.

That’s why if too many options are eliminated, or the info is compressed an excessive amount of, necessary data could also be misplaced, leading to a much less correct mannequin.

Reminiscence Downside

Reminiscence issues can come up as a result of giant dimension of the dataset. Sparse knowledge usually leads to many options, and storing this knowledge will be computationally costly. This may restrict the quantity of knowledge that may be processed without delay or require vital computing assets.

Here you possibly can see totally different methods to scale your knowledge by utilizing scikit-learn.

Time Downside

The time drawback may happen as a result of giant dimension of the dataset. Sparse knowledge could require longer processing instances, particularly when coping with numerous options. This may restrict the velocity at which knowledge will be processed, which will be problematic in time-sensitive purposes.

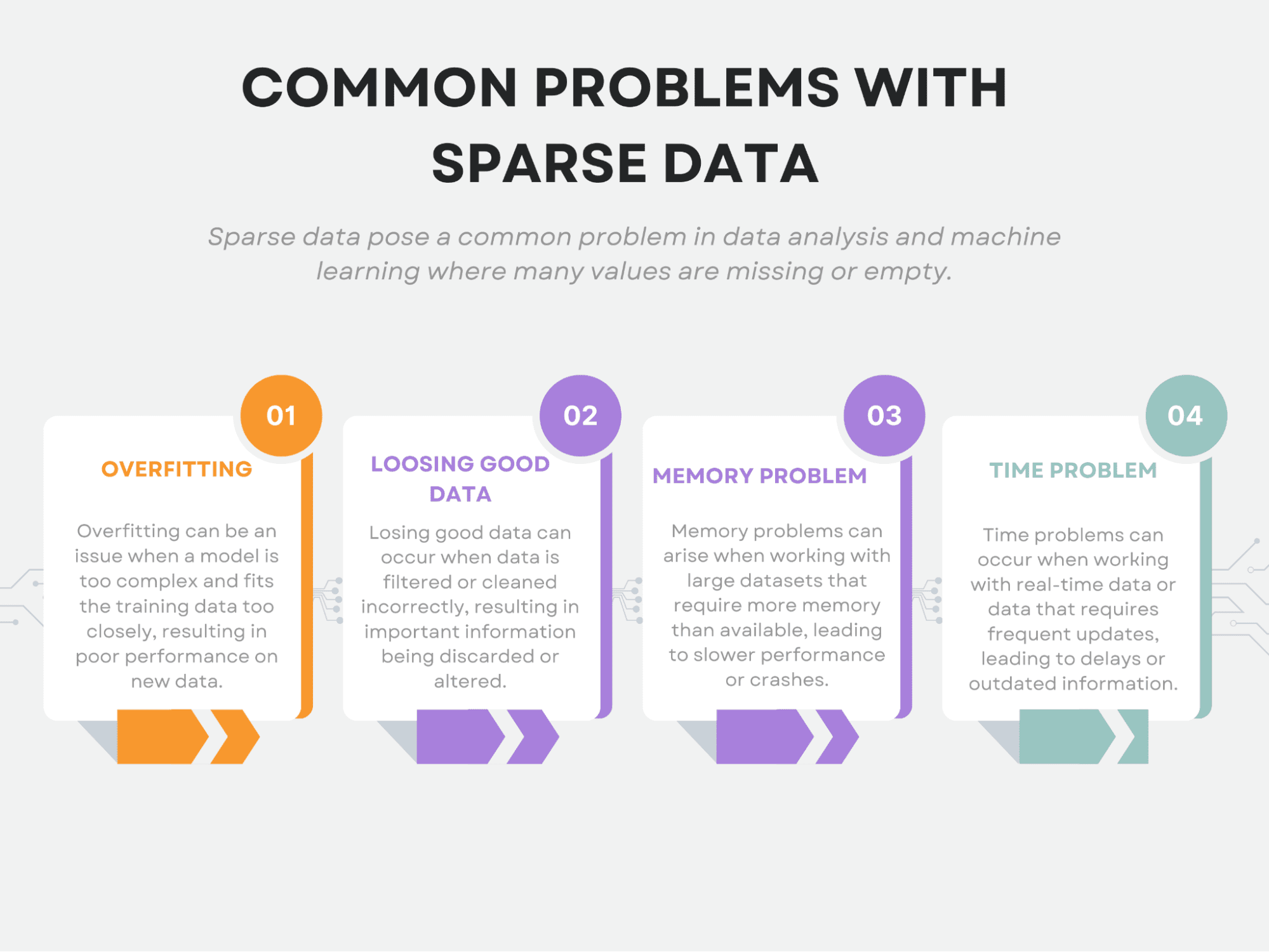

Picture by Writer

Sparse knowledge poses a problem in knowledge evaluation resulting from its low prevalence of non-zero values. Nevertheless, there are a number of strategies accessible to mitigate this difficulty.

One widespread strategy is eradicating the function inflicting sparsity within the dataset.

An alternative choice is to make use of Principal Part Evaluation (PCA) to cut back the dimensionality of the dataset whereas retaining necessary data.

Characteristic hashing is one other method that may be employed, which entails mapping options to a fixed-length vector.

T-Distributed Stochastic Neighbor Embedding (t-SNE) is one other helpful technique that may be utilized to visualise high-dimensional datasets.

Along with these strategies, deciding on an appropriate machine studying mannequin that may deal with sparse knowledge, akin to SVM or logistic regression, is essential.

By implementing these methods, one can successfully tackle the challenges related to sparse knowledge in knowledge evaluation.

Now let’s begin with the techniques used to cut back sparse knowledge first, then we’ll go deeper into the fashions.

Take away it!

When working with sparse knowledge, one strategy is to take away options that comprise principally zero values. This may be completed by setting a threshold on the proportion of non-zero values in every function. Any function that falls beneath this threshold will be faraway from the dataset.

This strategy might help scale back the dimensionality of the dataset and enhance the efficiency of sure machine studying algorithms.

Code Instance

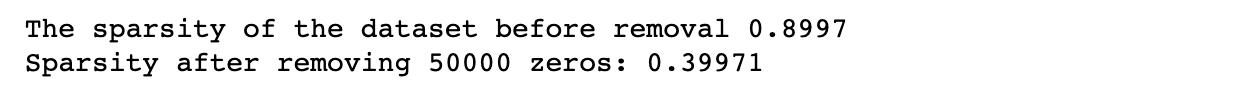

On this instance, we set the scale of the dataset, in addition to the sparsity stage, which determines what number of values within the dataset shall be zero.

Then, we generate random knowledge with the required sparsity stage to verify whether or not our technique works or not. At this step, we calculate the sparsity to match afterward.

Subsequent, the code units the variety of zeros to take away and randomly removes a selected variety of zeros from the dataset. Then we recalculate the sparsity of the modified dataset to verify whether or not our technique works or not.

Lastly, we recalculate the sparsity to see the modifications.

Right here is the code.

import numpy as np

# Set the scale of the dataset

num_rows = 1000

num_cols = 100

# Set the sparsity stage of the dataset

sparsity = 0.9

# Generate random knowledge with the required sparsity stage

knowledge = np.random.random((num_rows, num_cols))

knowledge[data < sparsity] = 0

# Calculate the sparsity of the dataset

num_zeros = (knowledge == 0).sum()

total_elements = knowledge.form[0] * knowledge.form[1]

sparsity = num_zeros / total_elements

print(f"The sparsity of the dataset earlier than removing {sparsity:.4f}")

# Set the variety of zeros to take away

num_zeros_to_remove = 50000

# Take away a selected variety of zeros randomly from the dataset

zero_indices = np.argwhere(knowledge == 0)

zeros_to_remove = np.random.alternative(

zero_indices.form[0], num_zeros_to_remove, exchange=False

)

knowledge[

zero_indices[zeros_to_remove, 0], zero_indices[zeros_to_remove, 1]

] = np.nan

# Calculate the sparsity of the modified dataset

num_zeros = (knowledge == 0).sum()

total_elements = knowledge.form[0] * knowledge.form[1]

sparsity = num_zeros / total_elements

print(

"Sparsity after eradicating {} zeros:".format(num_zeros_to_remove), sparsity

)

Right here is the output.

PCA

PCA is a well-liked method for dimensionality discount. It identifies the principal parts of the info, that are the instructions wherein the info varies probably the most.

These principal parts can then be used to symbolize the info in a lower-dimensional house.

Within the context of sparse knowledge, PCA can be utilized to determine an important options that comprise probably the most variation within the knowledge.

By deciding on solely these options, we will scale back the dimensionality of the dataset whereas nonetheless retaining a lot of the necessary data.

You’ll be able to implement PCA by utilizing the sci-kit study library, as we’ll do it subsequent within the code instance. Here is the official documentation if you wish to study extra about it.

Code Instance

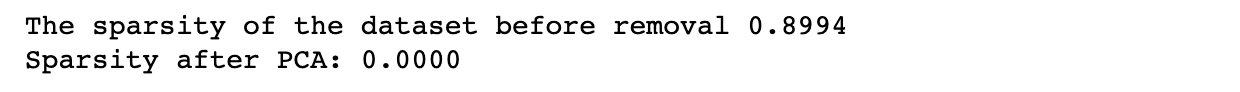

To use PCA to sparse knowledge, we will use the scikit-learn library in Python.

The library gives a PCA class that we will use to suit a PCA mannequin to the info and rework it into lower-dimensional house.

Within the first part of the next code, we create a dataset as we did within the earlier part, with a given dimension and sparsity.

Within the second part, we’ll apply PCA to cut back the dimension of the dataset to 10. After that, we’ll recalculate the sparsity.

Right here is the code.

import numpy as np

# Set the scale of the dataset

num_rows = 1000

num_cols = 100

# Set the sparsity stage of the dataset

sparsity = 0.9

# Generate random knowledge with the required sparsity stage

knowledge = np.random.random((num_rows, num_cols))

knowledge[data < sparsity] = 0

# Calculate the sparsity of the dataset

num_zeros = (knowledge == 0).sum()

total_elements = knowledge.form[0] * knowledge.form[1]

sparsity = num_zeros / total_elements

print(f"The sparsity of the dataset earlier than removing {sparsity:.4f}")

# Apply PCA to the dataset

pca = PCA(n_components=10)

data_pca = pca.fit_transform(knowledge)

# Calculate the sparsity of the diminished dataset

num_zeros = (data_pca == 0).sum()

total_elements = data_pca.form[0] * data_pca.form[1]

sparsity = num_zeros / total_elements

print(f"Sparsity after PCA: {sparsity:.4f}")

Right here is the output.

Characteristic Hashing

One other technique for working with sparse knowledge is named function hashing. This strategy converts every function right into a fixed-length array of values utilizing a hashing operate.

The hashing operate maps every enter function to a set of indices within the fixed-length array. The values are summed collectively if a number of enter options are mapped to the identical index. Characteristic hashing will be helpful for big datasets the place storing a big function dictionary will not be possible.

We’ll cowl this collectively within the subsequent part, but if you wish to dig deeper into it, here you possibly can see the official documentation of the function hasher within the scikit-learn library.

Code Instance

Right here, we once more use the identical technique in dataset creation.

Then we apply function hashing to the dataset utilizing the FeatureHasher class from scikit-learn.

We specify the variety of output options with the n_features parameter and the enter kind as a dictionary with the input_type parameter.

We then rework the enter knowledge into hashed arrays utilizing the rework technique of the FeatureHasher object.

Lastly, we calculate the sparsity of the ensuing dataset.

Right here is the code.

import numpy as np

# Set the scale of the dataset

num_rows = 1000

num_cols = 100

# Set the sparsity stage of the dataset

sparsity = 0.9

# Generate random knowledge with the required sparsity stage

knowledge = np.random.random((num_rows, num_cols))

knowledge[data < sparsity] = 0

# Calculate the sparsity of the dataset

num_zeros = (knowledge == 0).sum()

total_elements = knowledge.form[0] * knowledge.form[1]

sparsity = num_zeros / total_elements

print(f"The sparsity of the dataset earlier than removing {sparsity:.4f}")

# Apply function hashing to the dataset

hasher = FeatureHasher(n_features=10, input_type="dict")

data_dict = [

dict(("feature" + str(i), val) for i, val in enumerate(row))

for row in data

]

data_hashed = hasher.rework(data_dict).toarray()

# Calculate the sparsity of the diminished dataset

num_zeros = (data_hashed == 0).sum()

total_elements = data_hashed.form[0] * data_hashed.form[1]

sparsity = num_zeros / total_elements

print(f"Sparsity after function hashing: {sparsity:.4f}")

Right here is the output.

t-SNE Embedding

t-SNE (t-Distributed Stochastic Neighbor Embedding) is a non-linear dimensionality discount method used to visualise high-dimensional knowledge. It reduces the dimensionality of the info whereas preserving its international construction and has change into a well-liked instrument in machine studying for visualizing and clustering high-dimensional knowledge.

t-SNE is especially helpful for working with sparse knowledge as a result of it might probably successfully scale back the dimensionality of the info whereas sustaining its construction. The t-SNE algorithm works by calculating pairwise distances between knowledge factors in high- and low-dimensional areas. It then minimizes the distinction between these distances in high- and low-dimensional house.

To make use of t-SNE with sparse knowledge, the info should first be transformed right into a dense matrix. This may be completed utilizing numerous strategies, akin to PCA or function hashing. As soon as the info has been transformed, t-SNE will be high-x to acquire a low-dimensional embedding of the info.

Additionally, in case you are interested by t-SNE, here is the official documentation of the scikit-learn to see extra.

Code Instance

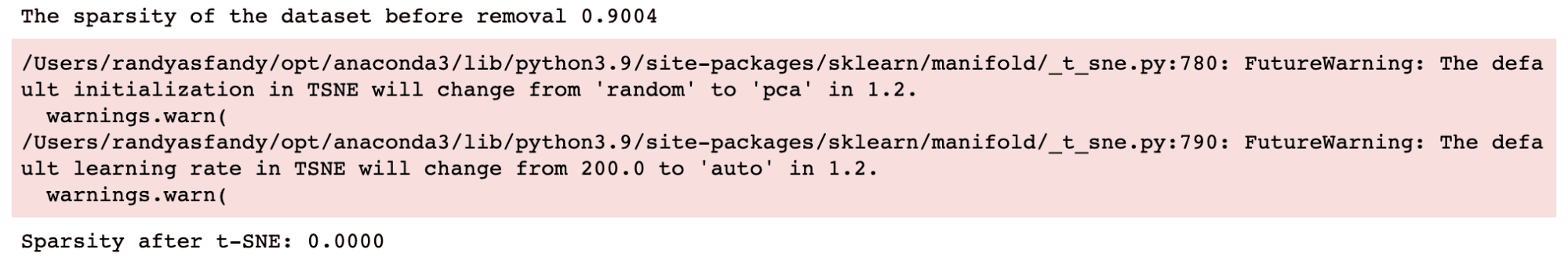

The next code first units the scale of the dataset and the sparsity stage, generates random knowledge with the required sparsity stage, and calculates the sparsity of the dataset earlier than t-SNE is utilized, as we did within the earlier examples.

It then applies t-SNE to the dataset with 3 parts and calculates the sparsity of the ensuing t-SNE embedding. Lastly, it prints out the sparsity of the t-SNE embedding.

Right here is the code.

import numpy as np

# Set the scale of the dataset

num_rows = 1000

num_cols = 100

# Set the sparsity stage of the dataset

sparsity = 0.9

# Generate random knowledge with the required sparsity stage

knowledge = np.random.random((num_rows, num_cols))

knowledge[data < sparsity] = 0

# Calculate the sparsity of the dataset

num_zeros = (knowledge == 0).sum()

total_elements = knowledge.form[0] * knowledge.form[1]

sparsity = num_zeros / total_elements

print(f"The sparsity of the dataset earlier than removing {sparsity:.4f}")

# Apply t-SNE to the dataset

tsne = TSNE(n_components=3)

data_tsne = tsne.fit_transform(knowledge)

# Calculate the sparsity of the t-SNE embedding

num_zeros = (data_tsne == 0).sum()

total_elements = data_tsne.form[0] * data_tsne.form[1]

sparsity = num_zeros / total_elements

print(f"Sparsity after t-SNE: {sparsity:.4f}")

Right here is the output.

Now that we now have addressed the challenges of working with sparse knowledge, we will discover machine studying fashions particularly designed to carry out effectively with sparse knowledge.

These fashions can deal with the distinctive traits of sparse knowledge, akin to a excessive variety of options with many zeros and restricted data, which might make it difficult to attain correct predictions with conventional fashions.

Through the use of fashions designed explicitly for sparse knowledge, we will make sure that our predictions are extra exact and dependable.

Now let’s discuss concerning the fashions good for sparse knowledge.

SVC (Assist Vector Classifier)

SVC (Assist Vector Classifier) with the linear kernel can carry out effectively with sparse knowledge as a result of it makes use of a subset of coaching factors, referred to as assist vectors, to make predictions. This implies it might probably deal with high-dimensional, sparse knowledge effectively.

You should utilize Assist Vector for regression, too.

I defined the Support Vector Machine here if you wish to study extra concerning the Assist Vector algorithm, each classification and regression.

Logistic Regression

This may additionally work effectively with sparse knowledge as a result of logistic regression makes use of a regularization time period to regulate the mannequin complexity, which might help forestall overfitting on sparse datasets.

If you wish to study extra about logistic regression and in addition for different classification algorithms, right here is the Overview of Machine Learning Algorithms: Classification.

KNeighboursClassifier

This algorithm can work effectively with sparse knowledge because it computes distances between knowledge factors and may deal with high-dimensional knowledge.

You’ll be able to see KNN and different machine learning algorithms here that you must know for knowledge science.

MLPClassifier

The MLPClassifier can carry out effectively with sparse knowledge when the enter knowledge is standardized, because it makes use of gradient descent for optimization.

Here you possibly can see the implementation of MLP Classifier, alongside witha bunch of different algorithms, with the assistance of ChatGPT.

DecisionTreeClassifier

It might work effectively with sparse knowledge when the variety of options is small. Should you have no idea about choice bushes, I defined decision trees and random forests here, which shall be our remaining mannequin for analyzing the fashions for sparse knowledge.

RandomForestClassifier

The RandomForestClassifier can work effectively with sparse knowledge when the variety of options is small.

Picture by Writer

Now, I’ll present you ways these fashions carry out on the generated knowledge. However, I’ll add one other algorithm to see whether or not these algorithms will outperform this algorithm (which is often not good for sparse knowledge) or not.

Code Instance

On this part, we’ll check a number of machine studying fashions on a sparse dataset, which is a dataset with numerous empty or zero values.

We’ll calculate the sparsity of the dataset and consider the fashions utilizing the F1 rating.

Then, we’ll create an information body with the F1 scores for every mannequin to match their efficiency. Additionally, we’ll filter out any warnings which will seem throughout the analysis course of.

import numpy as np

from scipy.sparse import random

import numpy as np

from scipy.sparse import random

from sklearn.model_selection import train_test_split

from sklearn.metrics import f1_score

from sklearn.svm import SVC

from sklearn.linear_model import LogisticRegression, Lasso

from sklearn.cluster import KMeans

from sklearn.neighbors import KNeighborsClassifier

from sklearn.neural_network import MLPClassifier

from sklearn.datasets import make_classification

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn.exceptions import ConvergenceWarning

import warnings

# Generate a sparse dataset

X = random(1000, 20, density=0.1, format="csr", random_state=42)

y = np.random.randint(2, dimension=1000)

# Calculate the sparsity of the dataset

sparsity = 1.0 - X.nnz / float(X.form[0] * X.form[1])

print("Sparsity:", sparsity)

X_train, X_test, y_train, y_test = train_test_split(

X, y, test_size=0.2, random_state=42

)

# Practice and consider a number of classifiers

classifiers = [

SVC(kernel="linear"),

LogisticRegression(),

KMeans(

n_clusters=2,

init="k-means++",

max_iter=100,

random_state=42,

algorithm="full",

),

KNeighborsClassifier(n_neighbors=5),

MLPClassifier(

hidden_layer_sizes=(100, 50),

max_iter=1000,

alpha=0.01,

solver="sgd",

verbose=0,

random_state=21,

tol=0.000000001,

),

DecisionTreeClassifier(),

RandomForestClassifier(),

]

# Create an empty DataFrame with column names

df = pd.DataFrame(columns=["Classifier", "F1 Score"])

# Filter out the particular warning

warnings.filterwarnings(

"ignore", class=ConvergenceWarning

) # Filter warning that mlp classifier will probably print out.

for clf in classifiers:

clf.match(X_train, y_train)

y_pred = clf.predict(X_test)

f1 = f1_score(y_test, y_pred)

df = pd.concat(

[

df,

pd.DataFrame(

{"Classifier": [type(clf).__name__], "F1 Rating": [f1]}

),

],

ignore_index=True,

)

df = df.sort_values(by="F1 Rating", ascending=True)

df

Right here is the output.

By now, you would possibly catch an algorithm that isn’t well-suited for the sparse knowledge. Sure, the reply is the KMeans. However why?

KMeans is often not effectively suited to sparse knowledge as a result of it’s primarily based on distance measures, which will be problematic with high-dimensional, sparse knowledge.

There are additionally some algorithms that we will’t even attempt. For example, for those who attempt to embrace the GaussianNB classifier on this checklist, you’ll get an error. It means that the GaussianNB classifier expects dense knowledge as an alternative of sparse knowledge. It is because the GaussianNB classifier assumes that the enter knowledge follows Gaussian distribution and is unsuitable for sparse knowledge.

In conclusion, working with sparse knowledge will be difficult resulting from numerous issues like overfitting, shedding good knowledge, reminiscence, and time issues.

Nevertheless, a number of strategies can be found for working with sparse options, together with eradicating options, utilizing PCA, and have hashing.

Furthermore, sure machine studying fashions like SVM, Logistic Regression, Lasso, Resolution Tree, Random Forest, MLP, and k-nearest neighbors are well-suited for dealing with sparse knowledge.

These fashions have been designed to deal with high-dimensional and sparse knowledge effectively, making them one of the best decisions for sparse knowledge issues. Utilizing these strategies and fashions can enhance your mannequin’s accuracy and save time and assets.

Nate Rosidi is an information scientist and in product technique. He is additionally an adjunct professor instructing analytics, and is the founding father of StrataScratch, a platform serving to knowledge scientists put together for his or her interviews with actual interview questions from prime corporations. Join with him on Twitter: StrataScratch or LinkedIn.