Announcing PyCaret 3.0: Open-source, Low-code Machine Learning in Python

Generated by Moez Ali using Midjourney

- Introduction

- Stable Time Series Forecasting Module

- New Object Oriented API

- More options for Experiment Logging

- Refactored Preprocessing Module

- Compatibility with the latest sklearn version

- Distributed Parallel Model Training

- Accelerate Model Training on CPU

- RIP: NLP and Arules module

- More Information

- Contributors

PyCaret is an open-source, low-code machine learning library in Python that automates machine learning workflows. It is an end-to-end machine learning and model management tool that exponentially speeds up the experiment cycle and makes you more productive.

Compared with other open-source machine learning libraries, PyCaret is an alternate low-code library that can be used to replace hundreds of lines of code with only a few lines. This makes experiments exponentially fast and efficient. PyCaret is essentially a Python wrapper around several machine learning libraries and frameworks in Python.

The design and simplicity of PyCaret are inspired by the emerging role of citizen data scientists, a term first used by Gartner. Citizen data scientists are power users who can perform both simple and moderately sophisticated analytical tasks that would previously have required more technical expertise.

To learn more about PyCaret, check out our GitHub or Official Docs.

Check out our full Release Notes for PyCaret 3.0.

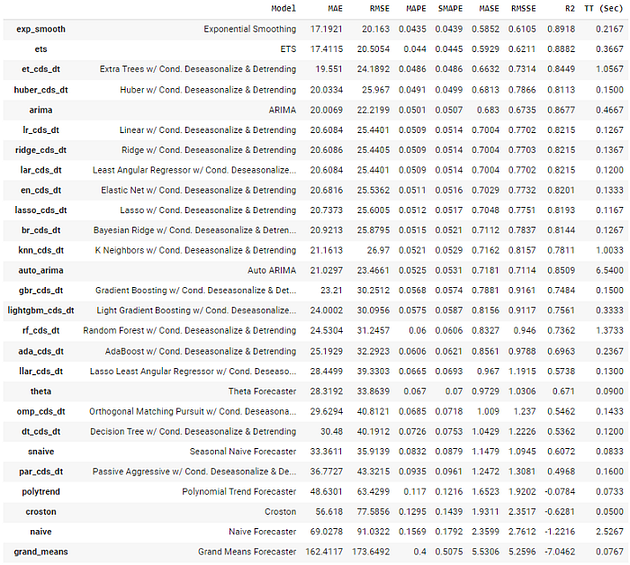

PyCaret’s Time Series module is now stable and available under 3.0. Currently, it supports forecasting tasks, but time-series anomaly detection and clustering algorithms are planned for the future.

# load dataset

from pycaret.datasets import get_data

data = get_data('airline')

# init setup

from pycaret.time_series import *

s = setup(data, fh = 12, session_id = 123)

# compare models

best = compare_models()

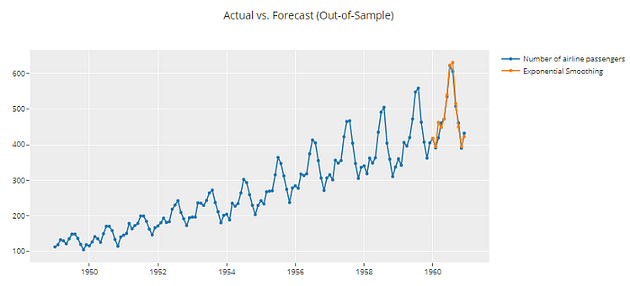

# forecast plot

plot_model(greatest, plot="forecast")

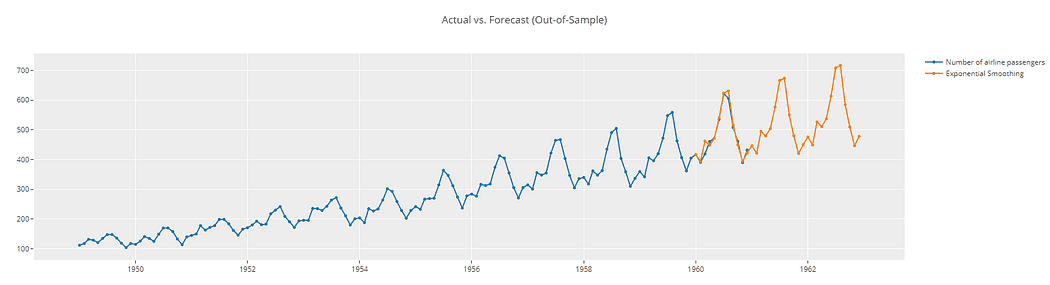

# forecast plot 36 days out in future

plot_model(greatest, plot="forecast", data_kwargs = {'fh' : 36})

Although PyCaret is a fantastic tool, it does not adhere to the typical object-oriented programming practices used by Python developers. To address this issue, we had to rethink some of the initial design decisions we made for the 1.0 version. It is important to note that this is a significant change that will require considerable effort to implement. Now, let’s explore how this will affect you.

# Functional API (Existing)

# load dataset

from pycaret.datasets import get_data

data = get_data('juice')

# init setup

from pycaret.classification import *

s = setup(data, target="Purchase", session_id = 123)

# compare models

best = compare_models()

It is great to run experiments in the same notebook, but if you want to run a different experiment with different setup function parameters, this can be a problem. Although it is possible, the previous experiment’s settings will be replaced.

However, with our new object-oriented API, you can effortlessly conduct multiple experiments in the same notebook and compare them without any difficulty. This is because the parameters are linked to an object and can be associated with various modeling and preprocessing options.

# load dataset

from pycaret.datasets import get_data

data = get_data('juice')

# init setup 1

from pycaret.classification import ClassificationExperiment

exp1 = ClassificationExperiment()

exp1.setup(data, target="Purchase", session_id = 123)

# compare models init 1

best = exp1.compare_models()

# init setup 2

exp2 = ClassificationExperiment()

exp2.setup(data, target="Purchase", normalize = True, session_id = 123)

# compare models init 2

best2 = exp2.compare_models()

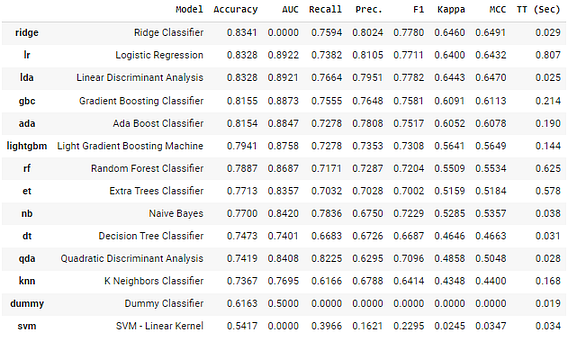

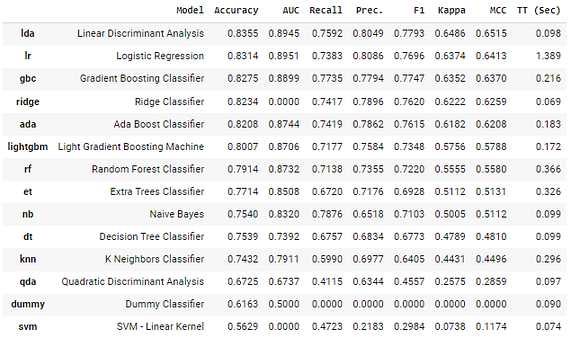

exp1.compare_models

exp2.compare_models

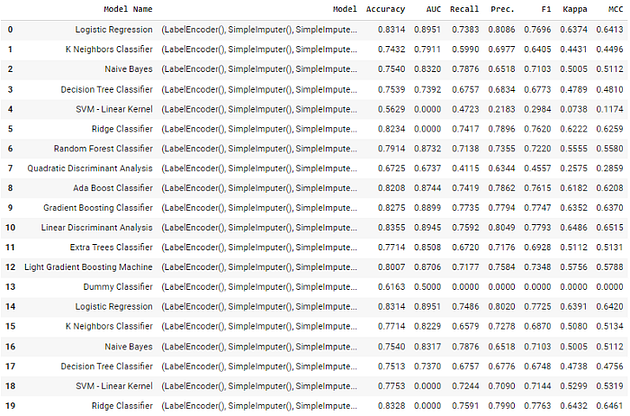

After conducting experiments, you can use the get_leaderboard function to create leaderboards for each experiment, making it easier to compare them.

import pandas as pd

# generate leaderboard

leaderboard_exp1 = exp1.get_leaderboard()

leaderboard_exp2 = exp2.get_leaderboard()

lb = pd.concat([leaderboard_exp1, leaderboard_exp2])

Output truncated

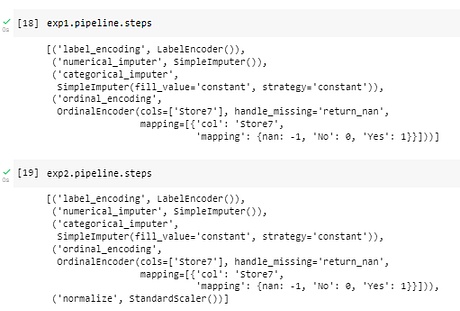

# print pipeline steps

print(exp1.pipeline.steps)

print(exp2.pipeline.steps)
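To see why linking parameters to an object avoids the overwrite problem described earlier, here is a toy sketch in plain Python. It is not PyCaret code: `toy_setup`, `toy_describe`, and `ToyExperiment` are hypothetical names invented for illustration, and the sketch only assumes that the functional API keeps one shared configuration while each experiment object keeps its own.

```python
# Toy illustration only: these names are hypothetical and are not PyCaret code.

# --- Functional style: one module-level configuration shared by every call ---
_global_config = {}


def toy_setup(**params):
    """Store settings in module-level state, replacing whatever was there."""
    _global_config.clear()
    _global_config.update(params)


def toy_describe():
    """Report the settings that the next modeling call would use."""
    return dict(_global_config)


# --- Object-oriented style: each experiment carries its own configuration ---
class ToyExperiment:
    def setup(self, **params):
        self.config = dict(params)
        return self

    def describe(self):
        return dict(self.config)


# Functional style: the second setup call replaces the first one.
toy_setup(target="Purchase", normalize=False)
toy_setup(target="Purchase", normalize=True)
print(toy_describe())  # only the second configuration is left

# Object-oriented style: both configurations stay available side by side.
exp_a = ToyExperiment().setup(target="Purchase", normalize=False)
exp_b = ToyExperiment().setup(target="Purchase", normalize=True)
print(exp_a.describe())
print(exp_b.describe())
```

Because each object owns its settings, results from `exp_a` and `exp_b` can be compared later without re-running either setup.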

PyCaret 2 can automatically log experiments using MLflow. While it is still the default, there are more options for experiment logging in PyCaret 3. The newly added options in the latest version are wandb, cometml, and dagshub.

To change the logger from the default MLflow to one of the other available options, simply pass one of the following in the log_experiment parameter: ‘mlflow’, ‘wandb’, ‘cometml’, ‘dagshub’.

The preprocessing module underwent a complete redesign to improve its efficiency and performance, as well as to ensure compatibility with the latest version of scikit-learn.

PyCaret 3 includes several new preprocessing functionalities, such as innovative categorical encoding techniques, support for text features in machine learning modeling, novel outlier detection methods, and advanced feature selection techniques.

Some of the new features are:

- New categorical encoding techniques

- Handling text features for machine learning modeling

- New methods to detect outliers

- New methods for feature selection

- Guarantee to avoid target leakage, as the entire pipeline is now fitted at a fold level.
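The last point, fitting the pipeline at a fold level, can be illustrated with a small plain-Python sketch. This is not PyCaret's implementation: the helper names and the tiny dataset are hypothetical, and target mean encoding is used only as an example of a preprocessing step that reads the target. The idea is that the encoder is learned from each fold's training rows and merely applied to the held-out rows, so held-out targets never influence their own features.

```python
from collections import defaultdict


def k_fold_indices(n_rows, n_folds):
    """Yield (train_idx, test_idx) pairs for contiguous, unshuffled folds."""
    fold_size = n_rows // n_folds
    for k in range(n_folds):
        start = k * fold_size
        stop = n_rows if k == n_folds - 1 else start + fold_size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_rows) if i < start or i >= stop]
        yield train_idx, test_idx


def fit_target_encoder(categories, targets):
    """Map each category to the mean target observed in the given rows only."""
    sums, counts = defaultdict(float), defaultdict(int)
    for cat, y in zip(categories, targets):
        sums[cat] += y
        counts[cat] += 1
    return {cat: sums[cat] / counts[cat] for cat in sums}


def apply_target_encoder(categories, encoder, default):
    """Encode rows with an already fitted mapping; unseen categories get default."""
    return [encoder.get(cat, default) for cat in categories]


# Hypothetical toy data: one categorical feature and a binary target.
category = ["a", "a", "b", "b", "a", "b"]
target = [1, 0, 1, 1, 0, 0]

fold_encoders = []
for train_idx, test_idx in k_fold_indices(len(category), n_folds=3):
    train_cat = [category[i] for i in train_idx]
    train_y = [target[i] for i in train_idx]
    # Fit on the training rows of this fold only.
    encoder = fit_target_encoder(train_cat, train_y)
    # Held-out rows are transformed, never used for fitting.
    fallback = sum(train_y) / len(train_y)
    encoded_test = apply_target_encoder(
        [category[i] for i in test_idx], encoder, fallback
    )
    fold_encoders.append(encoder)
    print(test_idx, encoded_test)

# Leaky alternative, shown for contrast: one encoder fitted on all rows,
# so every held-out row's own target has already shaped its encoding.
leaky_encoder = fit_target_encoder(category, target)
```

The per-fold encoders differ from the single leaky encoder, which is exactly the information that fold-level fitting keeps away from the validation rows.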

PyCaret 2 relies heavily on scikit-learn 0.23.2, which makes it impossible to use the latest scikit-learn version (1.X) alongside PyCaret in the same environment.

PyCaret is now compatible with the latest version of scikit-learn, and we would like to keep it that way.
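If you are unsure which versions share your environment, a quick check along these lines may help. It is a minimal sketch using only the Python standard library (`importlib.metadata`, available from Python 3.8); the helper name `installed_version` is made up for this example.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Report what is installed in the current environment.
for name in ("pycaret", "scikit-learn"):
    print(name, installed_version(name) or "not installed")
```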

To scale to large datasets, you can run the compare_models function on a cluster in distributed mode. To do that, use the parallel parameter of the compare_models function.

This was made possible thanks to Fugue, an open-source unified interface for distributed computing that lets users execute Python, Pandas, and SQL code on Spark, Dask, and Ray with minimal rewrites.

# load dataset

from pycaret.datasets import get_data

diabetes = get_data('diabetes')

# init setup

from pycaret.classification import *

clf1 = setup(data = diabetes, target="Class variable", n_jobs = 1)

# create pyspark session

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

# import parallel back-end

from pycaret.parallel import FugueBackend

# compare models

best = compare_models(parallel = FugueBackend(spark))

You can apply Intel optimizations for machine learning algorithms and speed up your workflow. To train models with Intel optimizations, use the sklearnex engine, which requires installing the Intel sklearnex library:

# install sklearnex

pip install scikit-learn-intelex

To use the Intel optimizations, simply pass engine="sklearnex" in the create_model function.

# Functional API (Existing)

# load dataset

from pycaret.datasets import get_data

data = get_data('bank')

# init setup

from pycaret.classification import *

s = setup(data, target="deposit", session_id = 123)

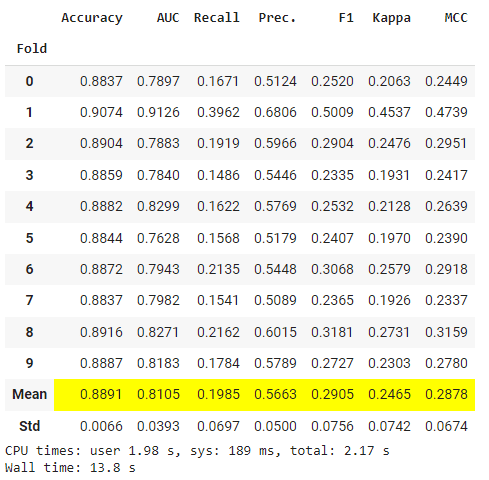

Model training without Intel accelerations:

%%time

lr = create_model('lr')

Model training with Intel accelerations:

%%time

lr2 = create_model('lr', engine="sklearnex")

There are some differences in model performance (immaterial in most cases), but the improvement in timing is ~60% on a 30K-row dataset. The benefit is much higher when dealing with larger datasets.
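The `%%time` magic only works inside IPython or Jupyter. In a plain script, a comparison like the one above could be sketched with the standard library as follows. The helper names and the stand-in workloads are hypothetical, and the percentage is computed on the assumption that "improvement" means the reduction in wall-clock time relative to the baseline run.

```python
import time


def time_call(fn, *args, **kwargs):
    """Return (result, elapsed_seconds) for a single call of fn."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def percent_faster(baseline_seconds, accelerated_seconds):
    """Reduction in wall-clock time relative to the baseline, in percent."""
    return 100.0 * (baseline_seconds - accelerated_seconds) / baseline_seconds


# Stand-in workloads (hypothetical). In practice these would wrap the two
# create_model calls, with and without engine="sklearnex".
def baseline_fit():
    return sum(i * i for i in range(200_000))


def accelerated_fit():
    return sum(i * i for i in range(50_000))


_, t_base = time_call(baseline_fit)
_, t_fast = time_call(accelerated_fit)
print(
    f"baseline: {t_base:.4f}s, accelerated: {t_fast:.4f}s, "
    f"improvement: {percent_faster(t_base, t_fast):.0f}%"
)
```

A single run is noisy, so repeating each measurement a few times and comparing medians would give a steadier estimate.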

NLP is changing fast, and there are many dedicated libraries and companies working exclusively to solve end-to-end NLP tasks. Due to a lack of resources, existing expertise in the team, and new contributors willing to maintain and support NLP and Arules, we have decided to drop them from PyCaret. PyCaret 3.0 does not have the nlp and arules modules. They have also been removed from the documentation. You can still use them with older versions of PyCaret.

Docs Getting started with PyCaret

API Reference Detailed API docs

Tutorials New to PyCaret? Check out our official notebooks

Notebooks created and maintained by the community

Blog Tutorials and articles by contributors

Videos Video tutorials and events

YouTube Subscribe to our YouTube channel

Slack Join our Slack community

LinkedIn Follow our LinkedIn page

Discussions Engage with the community and contributors

Thanks to all the contributors who have participated in PyCaret 3.

@ngupta23

@Yard1

@tvdboom

@jinensetpal

@goodwanghan

@Alexsandruss

@daikikatsuragawa

@caron14

@sherpan

@haizadtarik

@ethanglaser

@kumar21120

@satya-pattnaik

@ltsaprounis

@sayantan1410

@AJarman

@drmario-gh

@NeptuneN

@Abonia1

@LucasSerra

@desaizeeshan22

@rhoboro

@jonasvdd

@PivovarA

@ykskks

@chrimaho

@AnthonyA1223

@ArtificialZeng

@cspartalis

@vladocodes

@huangzhhui

@keisuke-umezawa

@ryankarlos

@celestinoxp

@qubiit

@beckernick

@napetrov

@erwanlc

@Danpilz

@ryanxjhan

@wkuopt

@TremaMiguel

@IncubatorShokuhou

@moezali1

Moez Ali writes about PyCaret and its use cases in the real world. If you would like to be notified automatically, you can follow Moez on Medium, LinkedIn, and Twitter.

Original. Reposted with permission.