Write Readable Tests for Your Machine Learning Models with Behave | by Khuyen Tran | Mar, 2023

Use natural language to test the behavior of your ML models

Imagine you create an ML model to predict customer sentiment based on reviews. Upon deploying it, you realize that the model incorrectly labels certain positive reviews as negative when they are rephrased using negative words.

This is just one example of how an extremely accurate ML model can fail without proper testing. Thus, testing your model for accuracy and reliability is crucial before deployment.

But how do you test your ML model? One straightforward approach is to use a unit test:

from textblob import TextBlob


def test_sentiment_the_same_after_paraphrasing():
    sent = "The hotel room was great! It was spacious, clean and had a nice view of the city."
    sent_paraphrased = "The hotel room wasn't bad. It wasn't cramped, dirty, and had a decent view of the city."

    sentiment_original = TextBlob(sent).sentiment.polarity
    sentiment_paraphrased = TextBlob(sent_paraphrased).sentiment.polarity

    # The test passes only when both scores have the same sign
    both_positive = (sentiment_original > 0) and (sentiment_paraphrased > 0)
    both_negative = (sentiment_original < 0) and (sentiment_paraphrased < 0)
    assert both_positive or both_negative

This approach works but can be challenging for non-technical or business participants to understand. Wouldn't it be nice if you could incorporate project objectives and goals into your tests, expressed in natural language?

That is when behave comes in handy.

Feel free to play with and fork the source code of this article here:

behave is a Python framework for behavior-driven development (BDD). BDD is a software development methodology that:

- Emphasizes collaboration between stakeholders (such as business analysts, developers, and testers)

- Enables users to define requirements and specifications for a software application

Since behave provides a common language and format for expressing requirements and specifications, it can be ideal for defining and validating the behavior of machine learning models.

To install behave, type:

pip install behave

Let's use behave to perform various tests on machine learning models.

Invariance testing checks whether an ML model produces consistent results under different conditions.

An example of invariance testing involves verifying whether a model is invariant to paraphrasing. If a model is paraphrase-variant, it may misclassify a positive review as negative when the review is rephrased using negative words.
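The check behind a paraphrase-invariance test can be sketched without any ML library. The snippet below is only an illustration: the helper names `same_sentiment_direction` and `invariance_failures`, the word lists, and the `toy_score` scorer are all assumptions made for this sketch and stand in for a real model.

```python
def same_sentiment_direction(score_original: float, score_paraphrased: float) -> bool:
    """Return True when both polarity scores share a sign (both > 0 or both < 0)."""
    both_positive = score_original > 0 and score_paraphrased > 0
    both_negative = score_original < 0 and score_paraphrased < 0
    return both_positive or both_negative


def invariance_failures(pairs, score_fn):
    """Collect the (original, paraphrase) pairs whose scores disagree in sign."""
    return [
        (original, paraphrase)
        for original, paraphrase in pairs
        if not same_sentiment_direction(score_fn(original), score_fn(paraphrase))
    ]


# Hypothetical stand-in scorer: counts words from two tiny hand-written lists.
POSITIVE = {"great", "nice", "clean"}
NEGATIVE = {"bad", "dirty", "cramped"}


def toy_score(text: str) -> float:
    """Score a text as (positive word count) minus (negative word count)."""
    words = [w.strip(".,!").lower() for w in text.split()]
    return float(sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words))


pairs = [("The room was great", "The room wasn't bad")]
failures = invariance_failures(pairs, toy_score)
```

Because the toy scorer ignores negation, the pair above ends up in `failures`, which is the same kind of weakness an invariance test is meant to expose. Note that a score of exactly zero counts as a disagreement here.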

Feature File

To use behave for invariance testing, create a directory called features. Under that directory, create a file called invariant_test_sentiment.feature.

└── features/
    └─── invariant_test_sentiment.feature

Within the invariant_test_sentiment.feature file, we will specify the project requirements:

Feature: Sentiment Analysis
  As a data scientist
  I want to ensure that my model is invariant to paraphrasing
  So that my model can produce consistent results in real-world scenarios.

  Scenario: Paraphrased text
    Given a text
    When the text is paraphrased
    Then both text should have the same sentiment

The “Given,” “When,” and “Then” parts of this file present the actual steps that will be executed by behave during the test.

Python Step Implementation

To implement the steps used in the scenarios with Python, start by creating the features/steps directory and a file called invariant_test_sentiment.py within it:

└── features/
    ├──── invariant_test_sentiment.feature
    └──── steps/
        └──── invariant_test_sentiment.py

The invariant_test_sentiment.py file contains the following code, which tests whether the sentiment produced by the TextBlob model is consistent between the original text and its paraphrased version.

from behave import given, then, when
from textblob import TextBlob


@given("a text")
def step_given_positive_sentiment(context):
    context.sent = "The hotel room was great! It was spacious, clean and had a nice view of the city."


@when("the text is paraphrased")
def step_when_paraphrased(context):
    context.sent_paraphrased = "The hotel room wasn't bad. It wasn't cramped, dirty, and had a decent view of the city."


@then("both text should have the same sentiment")
def step_then_sentiment_analysis(context):
    # Get sentiment of each sentence
    sentiment_original = TextBlob(context.sent).sentiment.polarity
    sentiment_paraphrased = TextBlob(context.sent_paraphrased).sentiment.polarity

    # Print sentiment
    print(f"Sentiment of the original text: {sentiment_original:.2f}")
    print(f"Sentiment of the paraphrased sentence: {sentiment_paraphrased:.2f}")

    # Assert that both sentences have the same sentiment
    both_positive = (sentiment_original > 0) and (sentiment_paraphrased > 0)
    both_negative = (sentiment_original < 0) and (sentiment_paraphrased < 0)
    assert both_positive or both_negative

Explanation of the code above:

- The steps are identified using decorators matching the feature's predicate: given, when, and then.

- The decorator accepts a string containing the rest of the phrase in the matching scenario step.

- The context variable allows you to share values between steps.
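To make the three points above concrete, here is a toy re-creation of the idea in plain Python. It is not how behave is implemented: the `REGISTRY` dictionary, the `step` factory, `run_scenario`, and the example steps are all made up for this sketch. It only shows how decorators can register functions under a step phrase and how one shared object can carry values from step to step.

```python
from types import SimpleNamespace

# Toy registry: maps (keyword, step text) to the decorated function.
REGISTRY = {}


def step(keyword):
    """Build a decorator factory such as given("...") for one keyword."""
    def decorator_factory(text):
        def register(func):
            REGISTRY[(keyword, text)] = func
            return func
        return register
    return decorator_factory


given, when, then = step("given"), step("when"), step("then")


@given("a text")
def step_given_text(context):
    context.sent = "great room"


@when("the text is upper-cased")
def step_when_upper(context):
    # Reads a value stored by the previous step and stores a new one
    context.sent_upper = context.sent.upper()


@then("both texts should have the same length")
def step_then_same_length(context):
    assert len(context.sent) == len(context.sent_upper)


def run_scenario(steps):
    """Run (keyword, text) pairs in order against one shared context object."""
    context = SimpleNamespace()
    for keyword, text in steps:
        REGISTRY[(keyword, text)](context)
    return context


context = run_scenario([
    ("given", "a text"),
    ("when", "the text is upper-cased"),
    ("then", "both texts should have the same length"),
])
```

Each function receives the same `context` object, so a value set in the "given" step is still available in the "then" step.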

Run the Test

To run the invariant_test_sentiment.feature test, type the following command:

behave features/invariant_test_sentiment.feature

Output:

Feature: Sentiment Analysis # features/invariant_test_sentiment.feature:1
  As a data scientist
  I want to ensure that my model is invariant to paraphrasing
  So that my model can produce consistent results in real-world scenarios.

  Scenario: Paraphrased text
    Given a text
    When the text is paraphrased
    Then both text should have the same sentiment
      Traceback (most recent call last):
          assert both_positive or both_negative
      AssertionError

      Captured stdout:
      Sentiment of the original text: 0.66
      Sentiment of the paraphrased sentence: -0.38

Failing scenarios:
  features/invariant_test_sentiment.feature:6  Paraphrased text

0 features passed, 1 failed, 0 skipped
0 scenarios passed, 1 failed, 0 skipped
2 steps passed, 1 failed, 0 skipped, 0 undefined

The output shows that the first two steps passed and the last step failed, indicating that the model is affected by paraphrasing.

Directional testing is a statistical method used to assess whether the impact of an independent variable on a dependent variable is in a particular direction, either positive or negative.

An example of directional testing is to check whether the presence of a specific word has a positive or negative effect on the sentiment score of a given text.
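A directional check boils down to comparing a score before and after a controlled change. The sketch below assumes a made-up scorer: `WORD_WEIGHTS`, `toy_polarity`, and `score_moves` are hypothetical names and values chosen for illustration, not part of TextBlob or behave.

```python
# Hypothetical word weights, for illustration only; a real model learns these.
WORD_WEIGHTS = {"like": 0.5, "awesome": 1.0, "terrible": -1.0}


def toy_polarity(text: str) -> float:
    """Average the weights of the known words; 0.0 when none are known."""
    weights = [WORD_WEIGHTS[w] for w in text.lower().split() if w in WORD_WEIGHTS]
    return sum(weights) / len(weights) if weights else 0.0


def score_moves(base: str, modified: str, direction: str, score_fn=toy_polarity) -> bool:
    """Check that the modified text scores higher ("up") or lower ("down")."""
    before, after = score_fn(base), score_fn(modified)
    if direction == "up":
        return after > before
    if direction == "down":
        return after < before
    raise ValueError("direction must be 'up' or 'down'")


moves_up = score_moves("I like this product", "I like this awesome product", "up")
moves_down = score_moves("I like this product", "I like this terrible product", "down")
```

Passing the scorer in as `score_fn` keeps the check independent of any particular model, so the same helper could wrap a real sentiment function.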

To use behave for directional testing, we will create two files: directional_test_sentiment.feature and directional_test_sentiment.py.

└── features/
    ├──── directional_test_sentiment.feature
    └──── steps/
        └──── directional_test_sentiment.py

Feature File

The code in directional_test_sentiment.feature specifies the requirements of the project as follows:

Feature: Sentiment Analysis with Specific Word
  As a data scientist
  I want to ensure that the presence of a specific word has a positive or negative effect on the sentiment score of a text

  Scenario: Sentiment analysis with specific word
    Given a sentence
    And the same sentence with the addition of the word 'awesome'
    When I input the new sentence into the model
    Then the sentiment score should increase

Notice that “And” is added to the prose. Since the preceding step starts with “Given,” behave will rename “And” to “Given.”
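The renaming rule can be pictured with a few lines of plain Python. This is a toy illustration of the rule described above, not behave's own parser; the function name `resolve_keywords` is an assumption for this sketch.

```python
def resolve_keywords(step_lines):
    """Pair each step with its effective keyword; And/But reuse the previous one."""
    resolved = []
    previous = None
    for line in step_lines:
        # Split "Given a sentence" into the keyword and the rest of the phrase
        keyword, _, text = line.strip().partition(" ")
        if keyword in ("And", "But"):
            if previous is None:
                raise ValueError("And/But cannot be the first step")
            keyword = previous
        previous = keyword
        resolved.append((keyword, text))
    return resolved


steps = [
    "Given a sentence",
    "And the same sentence with the addition of the word 'awesome'",
    "When I input the new sentence into the model",
    "Then the sentiment score should increase",
]
resolved = resolve_keywords(steps)
```

In `resolved`, the second entry carries the keyword "Given", which is why its implementation is decorated with `@given` rather than a separate "and" decorator.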

Python Step Implementation

The code in directional_test_sentiment.py implements a test scenario, which checks whether the presence of the word “awesome” positively affects the sentiment score generated by the TextBlob model.

from behave import given, then, when
from textblob import TextBlob


@given("a sentence")
def step_given_positive_word(context):
    context.sent = "I like this product"


@given("the same sentence with the addition of the word '{word}'")
def step_given_a_positive_word(context, word):
    context.new_sent = f"I like this {word} product"


@when("I input the new sentence into the model")
def step_when_use_model(context):
    # Score both the original and the modified sentence
    context.sentiment_score = TextBlob(context.sent).sentiment.polarity
    context.adjusted_score = TextBlob(context.new_sent).sentiment.polarity


@then("the sentiment score should increase")
def step_then_positive(context):
    assert context.adjusted_score > context.sentiment_score

The second step uses the parameter syntax {word}. When the .feature file is run, the value specified for {word} in the scenario is automatically passed to the corresponding step function.

This means that if the scenario states that the same sentence should include the word “awesome,” behave will automatically replace {word} with “awesome.”

This conversion is useful when you want to use different values for the {word} parameter without changing both the .feature file and the .py file.
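To see what extracting a parameter from a step phrase involves, the following sketch converts a pattern with curly-brace placeholders into a regular expression. It is a regex-based illustration only and should not be read as behave's actual matching code; `compile_step_pattern` and `extract_params` are names invented here.

```python
import re


def compile_step_pattern(pattern: str):
    """Turn "{name}" placeholders into named regex groups; escape the rest."""
    # re.split with a capturing group alternates literal text and placeholder names
    parts = re.split(r"\{(\w+)\}", pattern)
    regex = ""
    for index, part in enumerate(parts):
        if index % 2 == 0:
            regex += re.escape(part)       # literal text between placeholders
        else:
            regex += f"(?P<{part}>.+?)"     # placeholder becomes a named group
    return re.compile(f"^{regex}$")


def extract_params(pattern: str, step_text: str):
    """Return the captured values as a dict, or None when the text does not match."""
    match = compile_step_pattern(pattern).match(step_text)
    return match.groupdict() if match else None


pattern = "the same sentence with the addition of the word '{word}'"
params = extract_params(pattern, "the same sentence with the addition of the word 'awesome'")
```

Here `params` holds the value captured for `word`, which is the argument the step function would receive. Changing the word in the scenario changes only the captured value, not the pattern.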

Run the Test

behave features/directional_test_sentiment.feature

Output:

Feature: Sentiment Analysis with Specific Word
  As a data scientist
  I want to ensure that the presence of a specific word has a positive or negative effect on the sentiment score of a text

  Scenario: Sentiment analysis with specific word
    Given a sentence
    And the same sentence with the addition of the word 'awesome'
    When I input the new sentence into the model
    Then the sentiment score should increase

1 feature passed, 0 failed, 0 skipped
1 scenario passed, 0 failed, 0 skipped
4 steps passed, 0 failed, 0 skipped, 0 undefined

Since all the steps passed, we can infer that the sentiment score increases due to the new word's presence.

Minimum functionality testing is a type of testing that verifies whether the system or product meets the minimum requirements and is functional for its intended use.

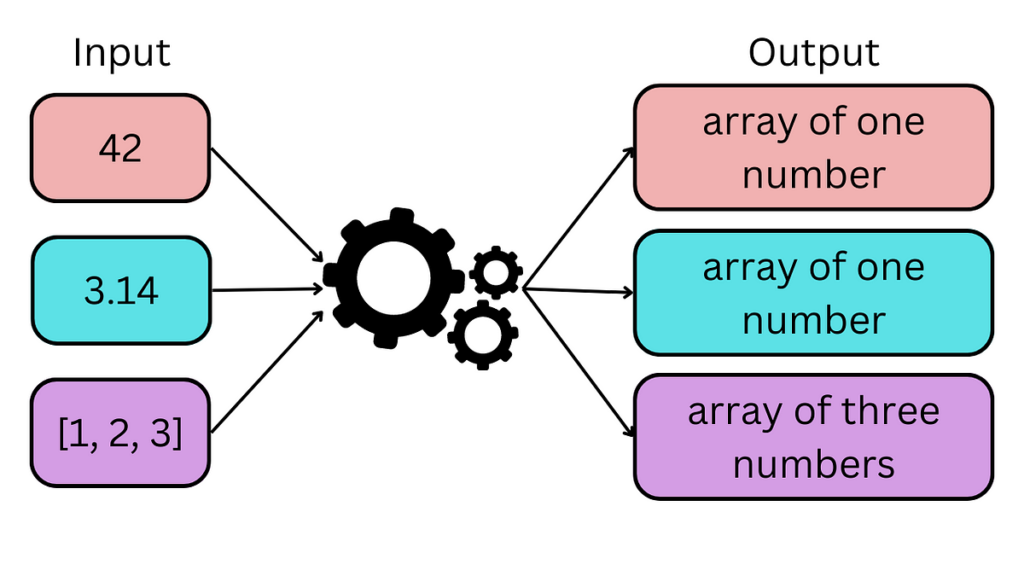

One example of minimum functionality testing is to check whether the model can handle different types of inputs, such as numerical, categorical, or textual data.

To use minimum functionality testing for input validation, create two files: minimum_func_test_input.feature and minimum_func_test_input.py.

└── features/
    ├──── minimum_func_test_input.feature
    └──── steps/
        └──── minimum_func_test_input.py

Feature File

The code in minimum_func_test_input.feature specifies the project requirements as follows:

Feature: Test my_ml_model

  Scenario: Test integer input
    Given I have an integer input of 42
    When I run the model
    Then the output should be an array of one number

  Scenario: Test float input
    Given I have a float input of 3.14
    When I run the model
    Then the output should be an array of one number

  Scenario: Test list input
    Given I have a list input of [1, 2, 3]
    When I run the model
    Then the output should be an array of three numbers

Python Step Implementation

The code in minimum_func_test_input.py implements the requirements, checking whether the output generated by predict for a specific input type meets the expectations.

import ast
from typing import Union

import numpy as np
from behave import given, then, when
from sklearn.linear_model import LinearRegression


def predict(input_data: Union[int, float, str, list]):
    """Create a model to predict input data"""

    # Reshape the input data into a single-feature column
    if isinstance(input_data, (int, float, list)):
        input_array = np.array(input_data).reshape(-1, 1)
    else:
        raise ValueError("Input type not supported")

    # Create a linear regression model
    model = LinearRegression()

    # Train the model on a sample dataset
    X = np.array([[1], [2], [3], [4], [5]])
    y = np.array([2, 4, 6, 8, 10])
    model.fit(X, y)

    # Predict the output using the input array
    return model.predict(input_array)


@given("I have an integer input of {input_value}")
def step_given_integer_input(context, input_value):
    context.input_value = int(input_value)


@given("I have a float input of {input_value}")
def step_given_float_input(context, input_value):
    context.input_value = float(input_value)


@given("I have a list input of {input_value}")
def step_given_list_input(context, input_value):
    # literal_eval parses the list literal without executing arbitrary code
    context.input_value = ast.literal_eval(input_value)


@when("I run the model")
def step_when_run_model(context):
    context.output = predict(context.input_value)


@then("the output should be an array of one number")
def step_then_check_one_number(context):
    assert isinstance(context.output, np.ndarray)
    assert all(isinstance(x, (int, float)) for x in context.output)
    assert len(context.output) == 1


@then("the output should be an array of three numbers")
def step_then_check_three_numbers(context):
    assert isinstance(context.output, np.ndarray)
    assert all(isinstance(x, (int, float)) for x in context.output)
    assert len(context.output) == 3

Run the Test

behave features/minimum_func_test_input.feature

Output:

Feature: Test my_ml_model

  Scenario: Test integer input
    Given I have an integer input of 42
    When I run the model
    Then the output should be an array of one number

  Scenario: Test float input
    Given I have a float input of 3.14
    When I run the model
    Then the output should be an array of one number

  Scenario: Test list input
    Given I have a list input of [1, 2, 3]
    When I run the model
    Then the output should be an array of three numbers

1 feature passed, 0 failed, 0 skipped
3 scenarios passed, 0 failed, 0 skipped
9 steps passed, 0 failed, 0 skipped, 0 undefined

Since all the steps passed, we can conclude that the model outputs match our expectations.
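The list scenario depends on turning the text captured from the feature file into a Python list. One cautious way to do that, sketched here under the assumption that only numeric list literals are expected, is the standard library's `ast.literal_eval`, which accepts Python literals but does not execute code. The helper name `parse_list_input` is hypothetical.

```python
import ast


def parse_list_input(raw: str) -> list:
    """Parse text such as "[1, 2, 3]" into a list of numbers, rejecting anything else."""
    value = ast.literal_eval(raw)  # accepts Python literals only, not arbitrary code
    if not isinstance(value, list):
        raise ValueError(f"expected a list literal, got {type(value).__name__}")
    # bool is a subclass of int, so exclude it explicitly
    if not all(isinstance(item, (int, float)) and not isinstance(item, bool) for item in value):
        raise ValueError("every list item must be an int or a float")
    return value


parsed = parse_list_input("[1, 2, 3]")
```

Validating the parsed value up front means a malformed scenario fails in the "Given" step with a clear message instead of failing later inside the model.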

This section will outline some drawbacks of using behave compared to pytest, and explain why it may still be worth considering the tool.

Learning Curve

Using Behavior-Driven Development (BDD) in behave may result in a steeper learning curve than the more traditional testing approach used by pytest.

Counter argument: The focus on collaboration in BDD can lead to better alignment between business requirements and software development, resulting in a more efficient development process overall.

Slower performance

behave tests can be slower than pytest tests because behave must parse the feature files and map them to step definitions before running the tests.

Counter argument: behave's focus on well-defined steps can lead to tests that are easier to understand and modify, reducing the overall effort required for test maintenance.

Less flexibility

behave is more rigid in its syntax, while pytest allows more flexibility in defining tests and fixtures.

Counter argument: behave's rigid structure can help ensure consistency and readability across tests, making them easier to understand and maintain over time.

Summary

Although behave has some drawbacks compared to pytest, its focus on collaboration, well-defined steps, and structured approach can still make it a valuable tool for development teams.

Congratulations! You have just learned how to use behave for testing machine learning models. I hope this knowledge will help you create more comprehensible tests.