Researchers at Apple Propose Ferret-UI: A New Multimodal Large Language Model (MLLM) Tailored for Enhanced Understanding of Mobile UI Screens

Mobile applications are integral to daily life, serving myriad purposes, from entertainment to productivity. However, the complexity and diversity of mobile user interfaces (UIs) often pose challenges for accessibility and user-friendliness. These interfaces have distinctive features such as elongated aspect ratios and densely packed elements, including icons and text, which conventional models struggle to interpret accurately. This gap underscores the pressing need for specialized models capable of deciphering the intricate landscape of mobile apps.

Existing research in mobile UI understanding has introduced datasets and models such as the RICO dataset, Pix2Struct, and ILuvUI, focusing on structural analysis and language-vision modeling. CogAgent leverages screen images for UI navigation, while Spotlight applies vision-language models to mobile interfaces. Models like Ferret, Shikra, and Kosmos2 enhance referring and grounding capabilities but primarily target natural images. MobileAgent and AppAgent employ MLLMs for screen navigation, indicating a growing emphasis on intuitive interaction mechanisms despite their reliance on external modules or predefined actions.

Apple researchers have introduced Ferret-UI, a model specifically developed to advance the understanding of, and interaction with, mobile UIs. Distinguishing itself from existing models, Ferret-UI incorporates an “any resolution” capability, adapting to screen aspect ratios and focusing on fine details within UI elements. This approach is intended to give a deeper, more nuanced comprehension of mobile interfaces.

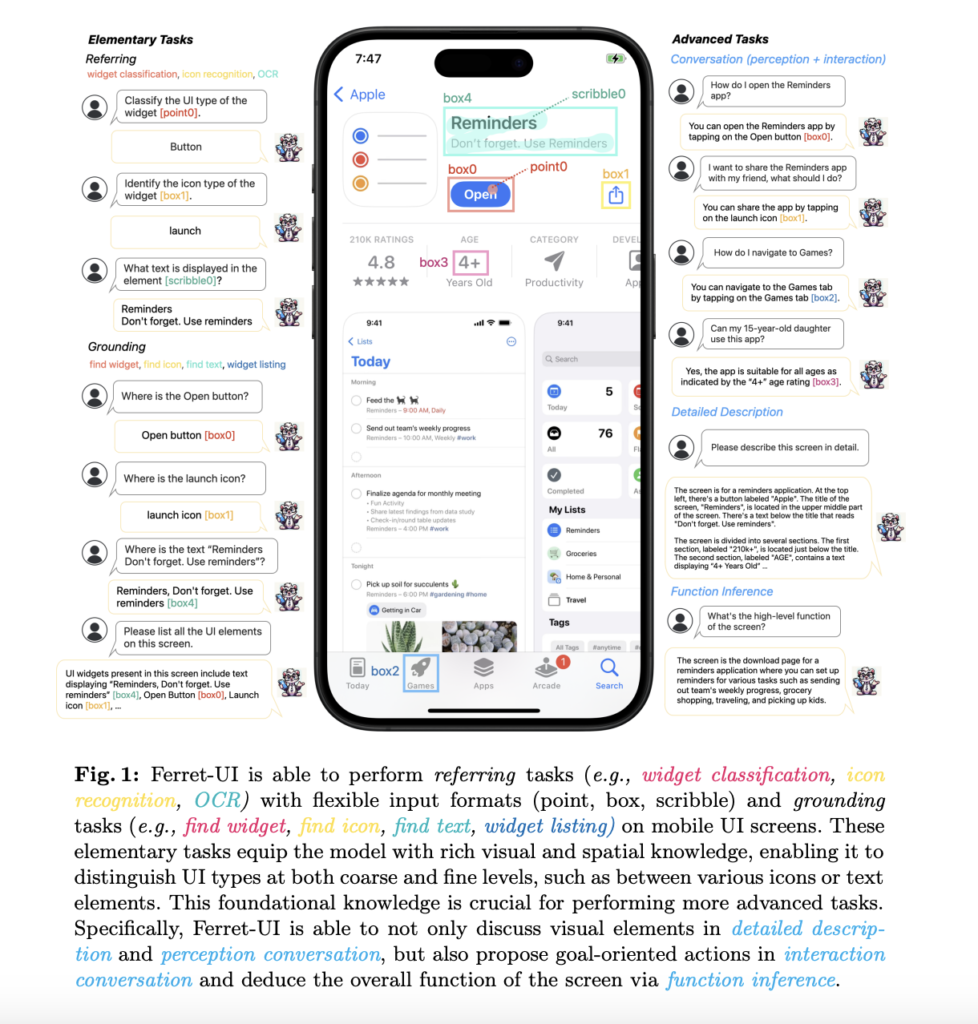

Ferret-UI’s methodology revolves around adapting its architecture for mobile UI screens, using an “any resolution” strategy to handle various aspect ratios. The model processes UI screens by dividing them into sub-images, ensuring detailed focus on individual elements. Training uses the RICO dataset for Android and proprietary data for iPhone screens, covering elementary and advanced UI tasks. These include widget classification, icon recognition, OCR, and grounding tasks like find widget and find icon, with GPT-4 used to generate the advanced task data. The sub-images are encoded separately, using visual features of varying granularity to enrich the model’s understanding of, and interaction with, mobile UIs.
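The sub-image idea can be illustrated with a minimal Python sketch. The article says only that screens are divided into sub-images that are encoded separately, so the details here are assumptions made for illustration: two sub-images, a cut along the longer side, and the full screen kept as an extra coarse view. The function names `split_screen` and `views_to_encode` are hypothetical and are not taken from the Ferret-UI code.

```python
from typing import List, Tuple

# A crop box in pixel coordinates: (left, top, right, bottom).
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return two crop boxes that cut the screen in half along its longer side.

    Assumption for illustration: exactly two sub-images per screen.
    Portrait screens are cut into top/bottom halves, landscape screens
    into left/right halves.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if height >= width:
        mid = height // 2
        return [(0, 0, width, mid), (0, mid, width, height)]
    mid = width // 2
    return [(0, 0, mid, height), (mid, 0, width, height)]


def views_to_encode(width: int, height: int) -> List[Box]:
    """Full-screen box followed by the sub-image boxes.

    Assumption: the full screen is kept as a coarse view next to the
    finer sub-images, so features of different granularity are available.
    Each box would be cropped and passed to an image encoder separately.
    """
    return [(0, 0, width, height)] + split_screen(width, height)


if __name__ == "__main__":
    # A portrait phone screenshot of 1170 x 2532 pixels.
    for box in views_to_encode(1170, 2532):
        print(box)
```

Cutting along the longer side keeps each sub-image closer to a square, which is one plausible way to reduce the distortion an elongated screenshot suffers when resized to a fixed encoder input.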

Ferret-UI is more than just a promising model; it’s a proven performer. It outperformed open-source UI MLLMs and GPT-4V, showing a significant leap in task-specific performance. In icon recognition tasks, Ferret-UI reached an accuracy rate of 95%, a substantial 25% increase over the nearest competitor model. It achieved a 90% success rate for widget classification, surpassing GPT-4V by 30%. In grounding tasks like finding widgets and icons, Ferret-UI maintained 92% and 93% accuracy, respectively, marking 20% and 22% improvements over existing models. These figures underline Ferret-UI’s enhanced capability in mobile UI understanding, setting new benchmarks in accuracy and reliability for the field.

In conclusion, the research introduced Ferret-UI, Apple’s novel approach to improving mobile UI understanding through an “any resolution” strategy and a specialized training regimen. By leveraging detailed aspect-ratio adjustments and comprehensive datasets, Ferret-UI significantly advanced task-specific performance metrics, notably exceeding those of existing models. The quantitative results underscore the model’s enhanced interpretative capabilities. But it’s not just about the numbers. Ferret-UI’s success illustrates the potential for more intuitive and accessible mobile app interactions, paving the way for future advancements in UI comprehension. It’s a model that can truly make a difference in how we interact with mobile UIs.

Check out the Paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.