Use on a regular basis language to look and retrieve knowledge with Mixtral 8x7B on Amazon SageMaker JumpStart

With the widespread adoption of generative artificial intelligence (AI) options, organizations try to make use of these applied sciences to make their groups extra productive. One thrilling use case is enabling pure language interactions with relational databases. Quite than writing advanced SQL queries, you may describe in plain language what knowledge you wish to retrieve or manipulate. The massive language mannequin (LLM) can perceive the intent behind your pure language enter and knowledge topography and routinely generate the suitable SQL code. This permits analysts to be extra productive by not having to context swap into inflexible question syntax, whereas additionally opening up relational databases to much less technical customers.

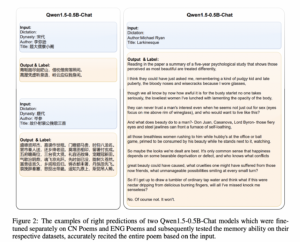

On this submit, we present you the way to arrange and deploy an answer to speak along with your databases utilizing pure language, permitting customers to achieve insights into their knowledge with out writing any code or SQL queries.

Advantages of text-to-SQL generative AI and the Mixtral 8x7B mannequin

Contemplate Michelle, a enterprise analyst liable for getting ready weekly gross sales experiences by working advanced SQL queries on their knowledge warehouse to combination numbers by product, area, and time interval. Previously, this guide course of took 2–3 hours per week working with the analyst workforce to jot down these queries by hand. Now with text-to-SQL generative AI, Michelle merely describes the report she wants in plain English, reminiscent of “Present whole income final week for footwear within the Western area grouped by sub-category.” The AI assistant routinely generates the required SQL question, runs it on the info warehouse, and returns a formatted report in seconds.

By eliminating the SQL bottleneck, Michelle saves hours per week, now spent on extra impactful evaluation as a substitute of question writing. She will iterate sooner and reply questions on demand. Different enterprise customers like Michelle achieve comparable productiveness advantages from this conversational entry to relational knowledge. The generative AI instrument primarily turns self-service analytics aspirations into actuality by permitting enterprise groups to depart the SQL to the machines.

For this implementation, Mixtral 8x7B MoE was used. Mixtral 8x7B is a state-of-the-art Sparse Combination of Specialists (MoE) basis mannequin launched by Mistral AI. It helps a number of use instances reminiscent of textual content summarization, classification, textual content technology, and code technology. It’s an 8x mannequin, which suggests it accommodates eight distinct teams of parameters. The mannequin has about 45 billion whole parameters and helps a context size of 32,000 tokens. MoE is a kind of neural community structure that consists of a number of “specialists,” the place every professional is a neural community. Within the context of transformer fashions, MoE replaces some feed-forward layers with sparse MoE layers. These layers have a sure variety of specialists, and a router community selects which specialists course of every token at every layer. MoE fashions allow extra compute-efficient and sooner inference in comparison with dense fashions. In comparison with conventional LLMs, Mixtral 8x7B presents the benefit of sooner decoding on the pace of a smaller parameter-dense mannequin regardless of containing extra parameters. It additionally outperforms different open-access fashions on sure benchmarks and helps an extended context size.

You can currently deploy Mixtral 8x7B on Amazon SageMaker JumpStart with one click. Amazon SageMaker JumpStart gives a simplified method to entry and deploy over 100 completely different open supply and third-party basis fashions. As an alternative of getting to manually combine, optimize, and configure every basis mannequin your self, SageMaker JumpStart handles these advanced duties for you. With only a few clicks, you may deploy state-of-the-art fashions from Hugging Face, Cohere, AI21 Labs, Stability AI, and extra utilizing optimized containers and SageMaker endpoints. SageMaker JumpStart eliminates the heavy lifting concerned in basis mannequin deployment. You get entry to an enormous catalog of prebuilt fashions you could shortly put to make use of for inference. It’s a scalable, cost-effective method to implement highly effective AI options with out machine studying (ML) experience.

Resolution overview

The next diagram illustrates the answer structure.

At a excessive stage, the general answer consists of three core elements:

The tip-to-end move is as follows:

- The consumer asks a pure language query, which is handed to the Mixtral 8x7B Instruct mannequin, hosted in SageMaker.

- The LLM analyzes the query and makes use of the schema fetched from the related Amazon Redshift database to generate a SQL question.

- The SQL question is run in opposition to the database. In case of an error, a retry workflow is run.

- Tabular outcomes obtained are handed again to the LLM to interpret and convert them right into a pure language response to the consumer’s authentic query.

Stipulations

To launch an endpoint to host Mixtral 8x7B from SageMaker JumpStart, you could must request a service quota improve to entry an ml.g5.48xlarge occasion for endpoint utilization. You’ll be able to request service quota increases by way of the AWS Management Console, AWS Command Line Interface (AWS CLI), or API to permit entry to these further assets.

To observe together with this instance, you additionally want entry to a relational knowledge supply. Amazon Redshift is used as the first knowledge supply on this submit with the TICKIT database. This database helps analysts observe gross sales exercise for the fictional TICKIT web site, the place customers purchase and promote tickets on-line for sporting occasions, reveals, and live shows. Particularly, analysts can determine ticket motion over time, success charges for sellers, and the best-selling occasions, venues, and seasons. You can even experiment with different AWS knowledge sources like Amazon RDS, Athena, or your individual relational databases. Be sure to have the connection particulars in your knowledge supply obtainable, reminiscent of database URL, consumer identify, and password.

To observe the demo utilizing Amazon Redshift, you first must arrange a Redshift cluster in the event you don’t have already got one. Use the Amazon Redshift console or AWS CLI to launch a cluster along with your desired node kind and variety of nodes. When the cluster is out there, create a brand new database and tables in it to carry your pattern relational knowledge. You’ll be able to load data from Amazon Simple Storage Service (Amazon S3) or instantly insert rows. When storing knowledge in Amazon S3, be sure that all public entry is blocked and the info is encrypted at relaxation and in transit. For extra data, check with Security best practices for Amazon S3. Lastly, be sure that to notice the cluster endpoint, database identify, and credentials to attach. With a Redshift cluster provisioned and loaded with knowledge, you’ll have a really perfect relational backend able to pair for pure language entry.

To check that you just efficiently added knowledge to your Redshift cluster, full the next steps:

- On the Amazon Redshift console, select Clusters within the navigation pane.

- Select the cluster you wish to question.

- Navigate to the Question Editor tab to open the question editor.

- Run the next pattern queries or write your individual SQL queries:

-

- Discover whole gross sales on a given date:

- Discover prime 10 consumers:

The question editor permits saving, scheduling, and sharing queries. You can even view question plans, examine run particulars, and monitor question efficiency.

Implement the answer

The code consists of numerous features which might be invoked by the logic proven within the answer diagram. We present you the related code blocks on this breakdown that match with the diagram. You’ll be able to see the whole code for the answer within the GitHub repository.

To implement this answer, full the next steps:

- Arrange a Redshift cluster. For this submit, we use an RA3 kind cluster.

- Load the TICKIT gross sales dataset into the Redshift cluster. For directions, see Load data from Amazon S3 to Amazon Redshift.

- To substantiate that Amazon Redshift entry is non-public and restricted solely to your VPC, check with the steps in Enable private access to Amazon Redshift from your client applications in another VPC.

- Arrange a SageMaker domain, ensuring it has the suitable permissions to work together with Amazon Redshift.

- Clone the next GitHub repository into SageMaker Studio Basic.

- Step one is to deploy the Mixtral 8x7B Instruct SageMaker endpoint. We use the default dimension ml.g5.48xlarge occasion. Just remember to have an ml.g5.48xlarge for endpoint utilization service quota of no less than 1.

- Arrange the connectivity to the Redshift cluster. Be sure to interchange these placeholders along with your Redshift identifiers. For safety functions, you need to have the credentials secured utilizing AWS Secrets Manager. For directions, see Enhance your security posture by storing Amazon Redshift admin credentials without human intervention using AWS Secrets Manager integration

- Arrange the pure language query and the immediate parameters for the mannequin

The Redshift cluster is queried to generate the related database schema and instance data, as proven in Step 2:

The generated SQL question is run on the Redshift cluster (Steps 6–8):

The question would possibly fail due to errors within the LLM-generated SQL. This is the reason we now have a debugging step, which might iterate for a sure variety of instances, asking the LLM to have a look at the Amazon Redshift error message and the earlier context (consumer query, DB schema, desk samples, and previous SQL question generated) and generate a brand new question addressing it. Steering is supplied to the mannequin utilizing immediate engineering and directions to provide you with a distinct question. The brand new question is then run on the cluster once more. This course of is configured to repeat as much as 5 instances within the pattern code, or till the question efficiently runs. If the question doesn’t run efficiently inside the variety of retries specified, a failure message is returned again to the consumer. This step highlighted in pink within the diagram.

If the question efficiently runs, we move the tabular outcomes from Amazon Redshift to the LLM to interpret them and, primarily based on the preliminary query, present a solution in pure language to be returned to the consumer (Steps 10–13):

Take a look at the answer

Let’s discover an instance run of the answer. We ask the query, “What are the highest 5 vendor names in San Diego, primarily based on the variety of tickets bought in 2008?” The next is the SQL question generated:

The next is the question response from Amazon Redshift:

The next is the ultimate reply supplied by the LLM:

Finest practices

Enhancing response effectivity in text-to-SQL methods entails incorporating a number of key greatest practices:

- Caching parsed SQL – To enhance response instances and keep away from reprocessing repeated queries, parsed SQL and acknowledged question prompts could be cached from the system. This cache could be checked earlier than invoking the LLM for every new textual content question.

- Monitoring – Utilization logs and metrics round question parsing, SQL technology latency, and outcome set sizes needs to be collected. Monitoring this knowledge allows optimization by revealing ache factors—whether or not from insufficient coaching knowledge, limitations in immediate engineering, or knowledge mannequin points.

- Scheduled knowledge refresh – To maintain materialized view knowledge present, refresh schedules utilizing batch or incremental approaches are wanted. The correct stability mitigates the overhead of the refresh whereas ensuring that textual content queries generate outcomes utilizing the newest knowledge.

- Central knowledge catalog – Sustaining a centralized knowledge catalog gives a unified metadata layer throughout knowledge sources, which is crucial for guiding LLM SQL technology. This catalog allows deciding on acceptable tables and schemas to deal with textual content queries.

- Guardrails – Use immediate engineering to stop the LLM from producing SQL that may alter tables or logic to stop working queries that may alter any tables. One essential advice is to make use of a consumer position that solely has learn privileges.

By contemplating these optimization dimensions, pure language-to-SQL options can scale effectively whereas delivering intuitive knowledge entry. As with every generative AI system, maintaining a tally of efficiency is essential whereas enabling extra customers to profit.

These are only a few of the completely different greatest practices you could observe. For a deeper dive, see Generating value from enterprise data: Best practices for Text2SQL and generative AI.

Clear up

To wash up your assets, full the steps on this part.

Delete the SageMaker endpoint

To delete a SageMaker mannequin endpoint, observe these steps:

- On the SageMaker console, within the navigation pane, select Inference, then select Endpoints.

- On the Endpoints web page, choose the endpoint you wish to delete.

- On the Actions menu, choose Delete.

- On the affirmation web page, select Delete to delete the endpoint.

The endpoint deletion course of will start. You’ll be able to test the endpoint standing on the Endpoints web page to substantiate it has been deleted.

Delete the Redshift cluster

Full the next steps to delete your Redshift cluster:

- On the Amazon Redshift console, within the navigation pane, select Clusters to show your listing of clusters.

- Select the cluster you wish to delete.

- On the Actions menu, select Delete.

- Verify the cluster to be deleted, then select Delete cluster.

The cluster standing will probably be up to date because the cluster is deleted. This course of often takes a couple of minutes.

Conclusion

The flexibility to question knowledge by way of intuitive pure language interfaces unlocks enormous potential for enterprise customers. As an alternative of battling advanced SQL syntax, groups can self-serve the analytical insights they want, on demand. This improves time-to-value whereas permitting much less technical customers to entry and extract which means from enterprise knowledge.

As highlighted on this submit, the newest advances in generative AI make sturdy NLQ-to-SQL methods achievable. With basis fashions reminiscent of Mixtral 8x7B working on SageMaker and instruments and libraries for connecting to completely different knowledge sources, organizations can now have an enterprise-grade answer to transform pure language queries into environment friendly SQL. By eliminating the standard SQL bottleneck, generative NLQ-to-SQL methods give again numerous hours every week for analysts and non-technical roles, driving larger enterprise agility and democratization in self-service analytics.

As generative AI continues to mature quickly, maintaining with the newest fashions and optimization strategies is crucial. This submit solely scratched the floor of what is going to be doable within the close to future as these applied sciences enhance. Pure language interfaces for accessing and manipulating knowledge nonetheless have enormous runways for innovation forward. To study extra about how AWS helps prospects make their concepts a actuality, check with the Generative AI Innovation Center.

Concerning the Authors

Jose Navarro is an AI/ML Options Architect at AWS, primarily based in Spain. Jose helps AWS prospects—from small startups to massive enterprises—architect and take their end-to-end machine studying use instances to manufacturing. In his spare time, he likes to train, spend high quality time with family and friends, and compensate for AI information and papers.

Jose Navarro is an AI/ML Options Architect at AWS, primarily based in Spain. Jose helps AWS prospects—from small startups to massive enterprises—architect and take their end-to-end machine studying use instances to manufacturing. In his spare time, he likes to train, spend high quality time with family and friends, and compensate for AI information and papers.

Prashanth Ganapathy is a Senior Options Architect within the Small Medium Enterprise (SMB) phase at AWS. He enjoys studying about AWS AI/ML companies and serving to prospects meet their enterprise outcomes by constructing options for them. Exterior of labor, Prashanth enjoys images, journey, and making an attempt out completely different cuisines.

Prashanth Ganapathy is a Senior Options Architect within the Small Medium Enterprise (SMB) phase at AWS. He enjoys studying about AWS AI/ML companies and serving to prospects meet their enterprise outcomes by constructing options for them. Exterior of labor, Prashanth enjoys images, journey, and making an attempt out completely different cuisines.

Uchenna Egbe is an Affiliate Options Architect at AWS. He spends his free time researching about herbs, teas, superfoods, and the way to incorporate them into his day by day eating regimen.

Uchenna Egbe is an Affiliate Options Architect at AWS. He spends his free time researching about herbs, teas, superfoods, and the way to incorporate them into his day by day eating regimen.

Sebastian Bustillo is a Options Architect at AWS. He focuses on AI/ML applied sciences with a with a profound ardour for generative AI and compute accelerators. At AWS, he helps prospects unlock enterprise worth by way of generative AI, helping with the general course of from ideation to manufacturing. When he’s not at work, he enjoys brewing an ideal cup of specialty espresso and exploring the world together with his spouse.

Sebastian Bustillo is a Options Architect at AWS. He focuses on AI/ML applied sciences with a with a profound ardour for generative AI and compute accelerators. At AWS, he helps prospects unlock enterprise worth by way of generative AI, helping with the general course of from ideation to manufacturing. When he’s not at work, he enjoys brewing an ideal cup of specialty espresso and exploring the world together with his spouse.