Build knowledge-powered conversational applications using LlamaIndex and Llama 2-Chat

Unlocking accurate and insightful answers from vast amounts of text is an exciting capability enabled by large language models (LLMs). When building LLM applications, it is often necessary to connect and query external data sources to provide relevant context to the model. One popular approach is using Retrieval Augmented Generation (RAG) to create Q&A systems that comprehend complex information and provide natural responses to queries. RAG allows models to tap into vast knowledge bases and deliver human-like dialogue for applications like chatbots and enterprise search assistants.

In this post, we explore how to harness the power of LlamaIndex, Llama 2-70B-Chat, and LangChain to build powerful Q&A applications. With these state-of-the-art technologies, you can ingest text corpora, index critical knowledge, and generate text that answers users’ questions precisely and clearly.

Llama 2-70B-Chat

Llama 2-70B-Chat is a powerful LLM that competes with leading models. It is pre-trained on two trillion text tokens and intended by Meta to be used for chat assistance to users. Pre-training data is sourced from publicly available data and concludes as of September 2022, and fine-tuning data concludes July 2023. For more details on the model’s training process, safety considerations, learnings, and intended uses, refer to the paper Llama 2: Open Foundation and Fine-Tuned Chat Models. Llama 2 models are available on Amazon SageMaker JumpStart for quick and straightforward deployment.

LlamaIndex

LlamaIndex is a data framework that enables building LLM applications. It provides data connectors to ingest your existing data from various sources and formats (PDFs, docs, APIs, SQL, and more). Whether you have data stored in databases or in PDFs, LlamaIndex makes it straightforward to bring that data into use for LLMs. As we demonstrate in this post, LlamaIndex APIs make data access straightforward and enable you to create powerful custom LLM applications and workflows.

If you are experimenting and building with LLMs, you are likely familiar with LangChain, which offers a robust framework that simplifies the development and deployment of LLM-powered applications. Similar to LangChain, LlamaIndex offers a number of tools, including data connectors, data indexes, engines, and data agents, as well as application integrations such as tools and observability, tracing, and evaluation. LlamaIndex focuses on bridging the gap between the data and powerful LLMs, streamlining data tasks with user-friendly features. LlamaIndex is specifically designed and optimized for building search and retrieval applications, such as RAG, because it provides a simple interface for querying LLMs and retrieving relevant documents.

Answer overview

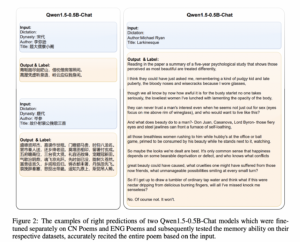

On this publish, we display the best way to create a RAG-based utility utilizing LlamaIndex and an LLM. The next diagram reveals the step-by-step structure of this resolution outlined within the following sections.

RAG combines information retrieval with natural language generation to produce more insightful responses. When prompted, RAG first searches text corpora to retrieve the examples most relevant to the input. During response generation, the model considers these examples to augment its capabilities. By incorporating relevant retrieved passages, RAG responses tend to be more factual, coherent, and consistent with context compared to basic generative models. This retrieve-generate framework takes advantage of the strengths of both retrieval and generation, helping address issues like repetition and lack of context that can arise from pure autoregressive conversational models. RAG introduces an effective approach for building conversational agents and AI assistants with contextualized, high-quality responses.
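To make the retrieve-then-generate flow concrete, the following is a minimal, dependency-free sketch. It is an illustration under simplifying assumptions, not the implementation used in this post: relevance is scored with a plain bag-of-words cosine similarity instead of a learned embedding model, and the sample passages, function names, and prompt template are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, passages: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k passages ranked by similarity to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        passages,
        key=lambda p: cosine(query_vec, Counter(tokenize(p))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str], top_k: int = 1) -> str:
    """Augment the user question with the retrieved context (hypothetical template)."""
    context = "\n".join(retrieve(query, passages, top_k))
    return (
        "Use the context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base, for illustration only.
PASSAGES = [
    "SageMaker JumpStart offers pre-trained models that can be deployed to endpoints.",
    "LlamaIndex builds indexes over documents so that they can be queried.",
]

if __name__ == "__main__":
    print(build_prompt("How do I deploy a pre-trained model?", PASSAGES))
```

In a real RAG application, the prompt built this way is sent to the LLM, and the similarity scoring is done over embeddings produced by an embedding model.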

Building the solution consists of the following steps:

- Set up Amazon SageMaker Studio as the development environment and install the required dependencies.

- Deploy an embedding model from the Amazon SageMaker JumpStart hub.

- Download press releases to use as our external knowledge base.

- Build an index out of the press releases to be able to query and add as additional context to the prompt.

- Query the knowledge base.

- Build a Q&A application using LlamaIndex and LangChain agents.

All the code in this post is available in the GitHub repo.

Prerequisites

For this example, you need an AWS account with a SageMaker domain and appropriate AWS Identity and Access Management (IAM) permissions. For account setup instructions, see Create an AWS Account. If you don’t already have a SageMaker domain, refer to Amazon SageMaker domain overview to create one. In this post, we use the AmazonSageMakerFullAccess role. It is not recommended that you use this credential in a production environment. Instead, you should create and use a role with least-privilege permissions. You can also explore how you can use Amazon SageMaker Role Manager to build and manage persona-based IAM roles for common machine learning needs directly through the SageMaker console.

Additionally, you need access to a minimum of the following instance sizes:

- ml.g5.2xlarge for endpoint usage when deploying the Hugging Face GPT-J text embeddings model

- ml.g5.48xlarge for endpoint usage when deploying the Llama 2-Chat model endpoint

To increase your quota, refer to Requesting a quota increase.

Deploy a GPT-J embedding model using SageMaker JumpStart

This section gives you two options when deploying SageMaker JumpStart models. You can use a code-based deployment using the code provided, or use the SageMaker JumpStart user interface (UI).

Deploy with the SageMaker Python SDK

You can use the SageMaker Python SDK to deploy the LLMs, as shown in the code available in the repository. Complete the following steps:

- Set the instance size to use for deployment of the embeddings model using instance_type = "ml.g5.2xlarge".

- Locate the ID of the model to use for embeddings. In SageMaker JumpStart, it is identified as model_id = "huggingface-textembedding-gpt-j-6b-fp16".

- Retrieve the pre-trained model container and deploy it for inference.
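The steps above can be sketched as follows. This is a hedged sketch, not the code from the repository: it assumes the higher-level JumpStartModel class from the SageMaker Python SDK instead of retrieving the model container explicitly, and the function name deploy_embedding_model is hypothetical. The SDK import is placed inside the function so that the snippet loads without the SDK installed, and the function is not called here because running it creates billable resources.

```python
# Values taken from the steps above.
INSTANCE_TYPE = "ml.g5.2xlarge"
MODEL_ID = "huggingface-textembedding-gpt-j-6b-fp16"


def deploy_embedding_model(model_id: str = MODEL_ID, instance_type: str = INSTANCE_TYPE):
    """Deploy a JumpStart model to a real-time endpoint and return its predictor.

    Calling this function creates billable AWS resources.
    """
    # Imported lazily so the sketch can be loaded without the SageMaker SDK.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id, instance_type=instance_type)
    predictor = model.deploy()
    return predictor
```

After a successful deployment, the endpoint name is available as predictor.endpoint_name, which you can store for later steps.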

SageMaker will return the name of the model endpoint and the following message when the embeddings model has been deployed successfully:

Deploy with SageMaker JumpStart in SageMaker Studio

To deploy the model using SageMaker JumpStart in Studio, complete the following steps:

- On the SageMaker Studio console, choose JumpStart in the navigation pane.

- Search for and choose the GPT-J 6B Embedding FP16 model.

- Choose Deploy and customize the deployment configuration.

- For this example, we need an ml.g5.2xlarge instance, which is the default instance suggested by SageMaker JumpStart.

- Choose Deploy again to create the endpoint.

The endpoint will take approximately 5–10 minutes to be in service.

After you have deployed the embeddings model, in order to use the LangChain integration with SageMaker APIs, you need to create a function to handle inputs (raw text) and transform them to embeddings using the model. You do this by creating a class called ContentHandler, which takes a JSON of input data, and returns a JSON of text embeddings: class ContentHandler(EmbeddingsContentHandler).
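A minimal sketch of such a handler follows. The request key text_inputs and the response key embedding are assumptions about the JSON schema of the deployed embedding endpoint, so verify them against your endpoint before relying on them. The import path assumes the langchain_community package, the build_embeddings helper is hypothetical, and the fallback to object exists only so that the snippet loads without LangChain installed.

```python
import io
import json

try:
    from langchain_community.embeddings.sagemaker_endpoint import EmbeddingsContentHandler
except ImportError:
    # Fallback so the sketch still loads without LangChain installed.
    EmbeddingsContentHandler = object


class ContentHandler(EmbeddingsContentHandler):
    content_type = "application/json"
    accepts = "application/json"

    def transform_input(self, prompt: list[str], model_kwargs: dict) -> bytes:
        # Assumed request schema: {"text_inputs": [...]} plus any extra model kwargs.
        return json.dumps({"text_inputs": prompt, **model_kwargs}).encode("utf-8")

    def transform_output(self, output) -> list[list[float]]:
        # `output` is a file-like response body.
        # Assumed response schema: {"embedding": [[...], ...]}.
        return json.loads(output.read().decode("utf-8"))["embedding"]


def build_embeddings(endpoint_name: str, region_name: str):
    """Wrap the endpoint as a LangChain embeddings object (hypothetical helper)."""
    from langchain_community.embeddings import SagemakerEndpointEmbeddings

    return SagemakerEndpointEmbeddings(
        endpoint_name=endpoint_name,
        region_name=region_name,
        content_handler=ContentHandler(),
    )


if __name__ == "__main__":
    handler = ContentHandler()
    print(handler.transform_input(["Hello! It is time for the seashore"], {}))
    # Simulated endpoint response, for illustration only.
    print(handler.transform_output(io.BytesIO(b'{"embedding": [[0.1, 0.2]]}')))
```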

Pass the model endpoint name to the ContentHandler function to convert the text and return embeddings:

You can locate the endpoint name in either the output of the SDK or in the deployment details in the SageMaker JumpStart UI.

You can test that the ContentHandler function and endpoint are working as expected by inputting some raw text and running the embeddings.embed_query(text) function. You can use the example provided, text = "Hello! It is time for the seashore", or try your own text.

Deploy and test Llama 2-Chat using SageMaker JumpStart

Now you can deploy the model that is able to have interactive conversations with your users. In this instance, we choose one of the Llama 2-Chat models, which is identified by its SageMaker JumpStart model ID.

The model needs to be deployed to a real-time endpoint using predictor = my_model.deploy(). SageMaker will return the model’s endpoint name, which you can use for the endpoint_name variable to reference later.

You define a print_dialogue function to send input to the chat model and receive its output response. The payload includes hyperparameters for the model, including the following:

- max_new_tokens – Refers to the maximum number of tokens that the model can generate in its outputs.

- top_p – Refers to the cumulative probability of the tokens that can be retained by the model when generating its outputs.

- temperature – Refers to the randomness of the outputs generated by the model. A higher temperature (for example, a value approaching 1) increases the level of randomness, whereas a temperature of 0 generates the most likely tokens.
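The effect of temperature and top_p can be illustrated with a small toy calculation. This is a conceptual sketch in plain Python, not the sampling code that runs on the endpoint: the token scores are made up, and the function names are hypothetical.

```python
import math


def apply_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities; temperature 0 means greedy selection."""
    if temperature == 0:
        best = max(logits, key=logits.get)
        return {tok: 1.0 if tok == best else 0.0 for tok in logits}
    scaled = {tok: score / temperature for tok, score in logits.items()}
    highest = max(scaled.values())
    # Subtract the maximum before exponentiating for numerical stability.
    exps = {tok: math.exp(score - highest) for tok, score in scaled.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    # Renormalize so the retained probabilities sum to 1.
    return {tok: p / total for tok, p in kept.items()}


# Made-up next-token scores, for illustration only.
LOGITS = {"mayonnaise": 2.0, "mustard": 1.0, "ketchup": 0.0}

if __name__ == "__main__":
    for temperature in (0, 0.5, 1.0, 2.0):
        print(temperature, apply_temperature(LOGITS, temperature))
    print(top_p_filter(apply_temperature(LOGITS, 1.0), top_p=0.9))
```

Raising the temperature flattens the distribution, so less likely tokens are sampled more often, while lowering top_p shrinks the set of candidate tokens.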

You should select your hyperparameters based on your use case and test them appropriately. Models such as the Llama family require you to include an additional parameter indicating that you have read and accepted the End User License Agreement (EULA):

To test the model, replace the content section of the input payload: "content": "what's the recipe of mayonnaise?". You can use your own text values and update the hyperparameters to understand them better.
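The following sketch shows how such a request could be assembled. The payload layout (a list of dialogs under inputs and hyperparameters under parameters), the accept_eula=true custom attribute, and the default hyperparameter values are assumptions about the JumpStart Llama 2-Chat endpoint contract; the helper names are hypothetical. The predictor argument is expected to be the object returned by the deployment step.

```python
def build_chat_payload(
    content: str,
    max_new_tokens: int = 512,   # hypothetical default
    top_p: float = 0.9,          # hypothetical default
    temperature: float = 0.6,    # hypothetical default
) -> dict:
    """Build a single-turn chat payload with the hyperparameters described above."""
    return {
        "inputs": [[{"role": "user", "content": content}]],
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "top_p": top_p,
            "temperature": temperature,
        },
    }


# Assumed custom attribute string that signals EULA acceptance.
EULA_ATTRIBUTES = "accept_eula=true"


def ask_endpoint(predictor, content: str, **hyperparameters):
    """Send the payload to the endpoint, passing the EULA acceptance attribute."""
    payload = build_chat_payload(content, **hyperparameters)
    return predictor.predict(payload, custom_attributes=EULA_ATTRIBUTES)
```

For example, ask_endpoint(predictor, "what's the recipe of mayonnaise?", temperature=0.1) sends the question with a low temperature so that you can compare the response against a run with a higher value.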

Similar to the deployment of the embeddings model, you can deploy Llama 2-70B-Chat using the SageMaker JumpStart UI:

- On the SageMaker Studio console, choose JumpStart in the navigation pane.

- Search for and choose the Llama-2-70b-Chat model.

- Accept the EULA and choose Deploy, using the default instance again.

Similar to the embedding model, you can use the LangChain integration by creating a content handler template for the inputs and outputs of your chat model. In this case, you define the inputs as those coming from a user, and indicate that they are governed by the system prompt. The system prompt informs the model of its role in assisting the user for a particular use case.

This content handler is then passed when invoking the model, in addition to the aforementioned hyperparameters and custom attributes (EULA acceptance). You parse all these attributes using the following code:
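A hedged sketch of such a chat content handler follows. The system prompt text, the class name ChatContentHandler, and the build_llm helper are hypothetical. The request layout and the response path response[0]["generation"]["content"] are assumptions about the endpoint's JSON schema, and the import path assumes the langchain_community package.

```python
import io
import json

try:
    from langchain_community.llms.sagemaker_endpoint import LLMContentHandler
except ImportError:
    # Fallback so the sketch still loads without LangChain installed.
    LLMContentHandler = object

# Hypothetical system prompt describing the assistant's role.
SYSTEM_PROMPT = "You are a helpful assistant that answers questions concisely."


class ChatContentHandler(LLMContentHandler):
    content_type = "application/json"
    accepts = "application/json"

    def transform_input(self, prompt: str, model_kwargs: dict) -> bytes:
        # The user input is governed by the system prompt that precedes it.
        payload = {
            "inputs": [[
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": prompt},
            ]],
            "parameters": model_kwargs,
        }
        return json.dumps(payload).encode("utf-8")

    def transform_output(self, output) -> str:
        # Assumed response schema: [{"generation": {"role": ..., "content": ...}}].
        response = json.loads(output.read().decode("utf-8"))
        return response[0]["generation"]["content"]


def build_llm(endpoint_name: str, region_name: str, parameters: dict):
    """Wrap the chat endpoint as a LangChain LLM (hypothetical helper)."""
    from langchain_community.llms import SagemakerEndpoint

    return SagemakerEndpoint(
        endpoint_name=endpoint_name,
        region_name=region_name,
        model_kwargs=parameters,
        # Assumed attribute string for EULA acceptance.
        endpoint_kwargs={"CustomAttributes": "accept_eula=true"},
        content_handler=ChatContentHandler(),
    )


if __name__ == "__main__":
    # Simulated endpoint response, for illustration only.
    fake = io.BytesIO(b'[{"generation": {"role": "assistant", "content": "Hi"}}]')
    print(ChatContentHandler().transform_output(fake))
```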

When the endpoint is available, you can test that it is working as expected. You can update llm("what's amazon sagemaker?") with your own text. You also need to define the specific ContentHandler to invoke the LLM using LangChain, as shown in the code and the following code snippet:

Use LlamaIndex to build the RAG

To continue, install LlamaIndex to create the RAG application. You can install LlamaIndex using pip: pip install llama_index

You first need to load your data (knowledge base) onto LlamaIndex for indexing. This involves a few steps:

- Choose a data loader:

LlamaIndex provides a number of data connectors available on LlamaHub for common data types like JSON, CSV, and text files, as well as other data sources, allowing you to ingest a variety of datasets. In this post, we use SimpleDirectoryReader to ingest a few PDF files as shown in the code. Our data sample is two Amazon press releases in PDF format in the press releases folder in our code repository. After you load the PDFs, you can see that they have been converted to a list of 11 elements.

Instead of loading the documents directly, you can also convert the Document object into Node objects before sending them to the index. The choice between sending the entire Document object to the index or converting the Document into Node objects before indexing depends on your specific use case and the structure of your data. The nodes approach is generally a good choice for long documents, where you want to break up and retrieve specific parts of a document rather than the entire document. For more information, refer to Documents / Nodes.
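The idea behind nodes can be illustrated with a small, dependency-free chunker. This is a conceptual sketch only, not LlamaIndex's node parser: it splits on whitespace and uses hypothetical chunk_size and overlap defaults, whereas LlamaIndex ships its own node parsers (for example, SentenceSplitter) that also track metadata and relationships between nodes.

```python
def split_into_chunks(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(12))
    for chunk in split_into_chunks(sample, chunk_size=5, overlap=2):
        print(chunk)
```

The overlap keeps some shared context between neighboring chunks, so a sentence that straddles a boundary can still be retrieved from either side.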

- Instantiate the loader and load the documents:

This step initializes the loader class and any needed configuration, such as whether to ignore hidden files. For more details, refer to SimpleDirectoryReader.

- Call the loader’s load_data method to parse your source files and data and convert them into LlamaIndex Document objects, ready for indexing and querying.

You can use the following code to complete the data ingestion and preparation for full-text search using LlamaIndex’s indexing and retrieval capabilities:
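A minimal sketch of the loading steps, assuming a recent LlamaIndex release where SimpleDirectoryReader is importable from llama_index.core. The function name load_documents and the directory check are hypothetical additions, and the import is placed inside the function so that the snippet loads without LlamaIndex installed.

```python
from pathlib import Path


def load_documents(input_dir: str):
    """Load every supported file in input_dir as LlamaIndex Document objects."""
    folder = Path(input_dir)
    if not folder.is_dir():
        raise FileNotFoundError(f"Directory not found: {folder}")

    # Imported lazily so the sketch can be loaded without LlamaIndex installed.
    from llama_index.core import SimpleDirectoryReader

    # exclude_hidden=True skips hidden files such as .DS_Store.
    loader = SimpleDirectoryReader(input_dir=str(folder), exclude_hidden=True)
    return loader.load_data()
```

Calling load_documents on the folder that holds the press releases returns a list of Document objects, which you can pass to the index in the next step.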

- Build the index:

The key feature of LlamaIndex is its ability to construct organized indexes over data, which is represented as documents or nodes. The indexing facilitates efficient querying over the data. We create our index with the default in-memory vector store and with our defined setting configuration. The LlamaIndex Settings is a configuration object that provides commonly used resources and settings for indexing and querying operations in a LlamaIndex application. It acts as a singleton object, so that it allows you to set global configurations, while also allowing you to override specific components locally by passing them directly into the interfaces (such as LLMs, embedding models) that use them. When a particular component is not explicitly provided, the LlamaIndex framework falls back to the settings defined in the Settings object as a global default. To use our embedding and LLM models with LangChain and configure the Settings, we need to install llama_index.embeddings.langchain and llama_index.llms.langchain. We can configure the Settings object as in the following code:
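One way this configuration could look is sketched below, under the assumption that the integrations are installed from the llama-index-embeddings-langchain and llama-index-llms-langchain packages. The function name build_index is hypothetical; langchain_llm and langchain_embeddings stand for the LangChain objects that wrap the two SageMaker endpoints. Imports are placed inside the function so that the snippet loads without these packages installed.

```python
def build_index(documents, langchain_llm, langchain_embeddings):
    """Set the global LLM and embedding model, then build an in-memory vector index."""
    # Imported lazily so the sketch can be loaded without LlamaIndex installed.
    from llama_index.core import Settings, VectorStoreIndex
    from llama_index.embeddings.langchain import LangchainEmbedding
    from llama_index.llms.langchain import LangChainLLM

    # Global defaults used whenever a component is not passed explicitly.
    Settings.llm = LangChainLLM(llm=langchain_llm)
    Settings.embed_model = LangchainEmbedding(langchain_embeddings=langchain_embeddings)

    # Uses the default in-memory vector store.
    return VectorStoreIndex.from_documents(documents)
```

Because Settings acts as a global default, any later call that does not receive an explicit LLM or embedding model picks up these two components.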

By default, VectorStoreIndex uses an in-memory SimpleVectorStore that is initialized as part of the default storage context. In real-life use cases, you often need to connect to external vector stores such as Amazon OpenSearch Service. For more details, refer to Vector Engine for Amazon OpenSearch Serverless.

Now you can run Q&A over your documents by using the query_engine from LlamaIndex. To do so, pass the index you created earlier for queries and ask your question. The query engine is a generic interface for querying data. It takes a natural language query as input and returns a rich response. The query engine is typically built on top of one or more indexes using retrievers.
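A short sketch of the querying step follows. It relies on the as_query_engine and query methods of a LlamaIndex index; the helper name ask is hypothetical.

```python
def ask(index, question: str) -> str:
    """Create a query engine from the index and return the answer as text."""
    query_engine = index.as_query_engine()
    response = query_engine.query(question)
    # The response object also carries source nodes; str() yields the answer text.
    return str(response)
```

The response returned by the query engine is richer than a plain string. For example, its source_nodes attribute lists the retrieved passages that the answer was based on.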

You can see that the RAG solution is able to retrieve the correct answer from the provided documents:

Use LangChain tools and agents

The Loader class is designed to load data into LlamaIndex or to be used subsequently as a tool in a LangChain agent. This gives you more power and flexibility to use it as part of your application. You start by defining your tool from the LangChain agent class. The function that you pass on to your tool queries the index you built over your documents using LlamaIndex.

Then you select the right type of agent that you would like to use for your RAG implementation. In this case, you use the chat-zero-shot-react-description agent. With this agent, the LLM will use the available tool (in this scenario, the RAG over the knowledge base) to provide the response. You then initialize the agent by passing your tool, LLM, and agent type:
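The tool and agent setup could be sketched as follows. The tool name, the tool description, and the helper names are hypothetical. The sketch assumes a LangChain version that still exposes Tool and initialize_agent in langchain.agents, which may be deprecated or moved in newer releases.

```python
def make_kb_query_fn(query_engine):
    """Return a function that answers a question by querying the index."""
    def query_knowledge_base(question: str) -> str:
        return str(query_engine.query(question))
    return query_knowledge_base


def build_agent(query_engine, llm):
    """Wrap the query engine as a tool and initialize a ReAct-style chat agent."""
    # Imported lazily so the sketch can be loaded without LangChain installed.
    from langchain.agents import Tool, initialize_agent

    tools = [
        Tool(
            name="press_releases",  # hypothetical tool name
            func=make_kb_query_fn(query_engine),
            description="Useful for answering questions about the indexed press releases.",
        )
    ]
    return initialize_agent(
        tools,
        llm,
        agent="chat-zero-shot-react-description",
        verbose=True,
    )
```

With verbose=True, the agent prints its intermediate reasoning steps, which makes it easier to confirm that the tool is actually being called.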

You can see the agent going through thoughts, actions, and observations, using the tool (in this scenario, querying your indexed documents), and returning a result:

You can find the end-to-end implementation code in the accompanying GitHub repo.

Clean up

To avoid unnecessary costs, you can clean up your resources, either via the following code snippets or the Amazon SageMaker JumpStart UI.

To use the Boto3 SDK, use the following code to delete the text embedding model endpoint and the text generation model endpoint, as well as the endpoint configurations:

To use the SageMaker console, complete the following steps:

- On the SageMaker console, under Inference in the navigation pane, choose Endpoints.

- Search for the embedding and text generation endpoints.

- On the endpoint details page, choose Delete.

- Choose Delete again to confirm.

Conclusion

For use cases focused on search and retrieval, LlamaIndex provides flexible capabilities. It excels at indexing and retrieval for LLMs, making it a powerful tool for deep exploration of data. LlamaIndex enables you to create organized data indexes, use diverse LLMs, augment data for better LLM performance, and query data with natural language.

This post demonstrated some key LlamaIndex concepts and capabilities. We used GPT-J for embedding and Llama 2-Chat as the LLM to build a RAG application, but you could use any suitable model instead. You can explore the comprehensive range of models available on SageMaker JumpStart.

We also showed how LlamaIndex can provide powerful, flexible tools to connect, index, retrieve, and integrate data with other frameworks like LangChain. With LlamaIndex integrations and LangChain, you can build more powerful, versatile, and insightful LLM applications.

About the Authors

Dr. Romina Sharifpour is a Senior Machine Learning and Artificial Intelligence Solutions Architect at Amazon Web Services (AWS). She has spent over 10 years leading the design and implementation of innovative end-to-end solutions enabled by advancements in ML and AI. Romina’s areas of interest are natural language processing, large language models, and MLOps.


Nicole Pinto is an AI/ML Specialist Solutions Architect based in Sydney, Australia. Her background in healthcare and financial services gives her a unique perspective in solving customer problems. She is passionate about enabling customers through machine learning and empowering the next generation of women in STEM.
