A visible language mannequin for UI and visually-situated language understanding – Google Analysis Weblog

Display screen person interfaces (UIs) and infographics, comparable to charts, diagrams and tables, play vital roles in human communication and human-machine interplay as they facilitate wealthy and interactive person experiences. UIs and infographics share related design ideas and visible language (e.g., icons and layouts), that supply a possibility to construct a single mannequin that may perceive, motive, and work together with these interfaces. Nonetheless, due to their complexity and different presentation codecs, infographics and UIs current a novel modeling problem.

To that finish, we introduce “ScreenAI: A Vision-Language Model for UI and Infographics Understanding”. ScreenAI improves upon the PaLI architecture with the versatile patching technique from pix2struct. We practice ScreenAI on a novel combination of datasets and duties, together with a novel Display screen Annotation job that requires the mannequin to establish UI component info (i.e., sort, location and outline) on a display screen. These textual content annotations present massive language fashions (LLMs) with display screen descriptions, enabling them to mechanically generate question-answering (QA), UI navigation, and summarization coaching datasets at scale. At solely 5B parameters, ScreenAI achieves state-of-the-art outcomes on UI- and infographic-based duties (WebSRC and MoTIF), and best-in-class efficiency on Chart QA, DocVQA, and InfographicVQA in comparison with fashions of comparable dimension. We’re additionally releasing three new datasets: Screen Annotation to guage the format understanding functionality of the mannequin, in addition to ScreenQA Short and Complex ScreenQA for a extra complete analysis of its QA functionality.

ScreenAI

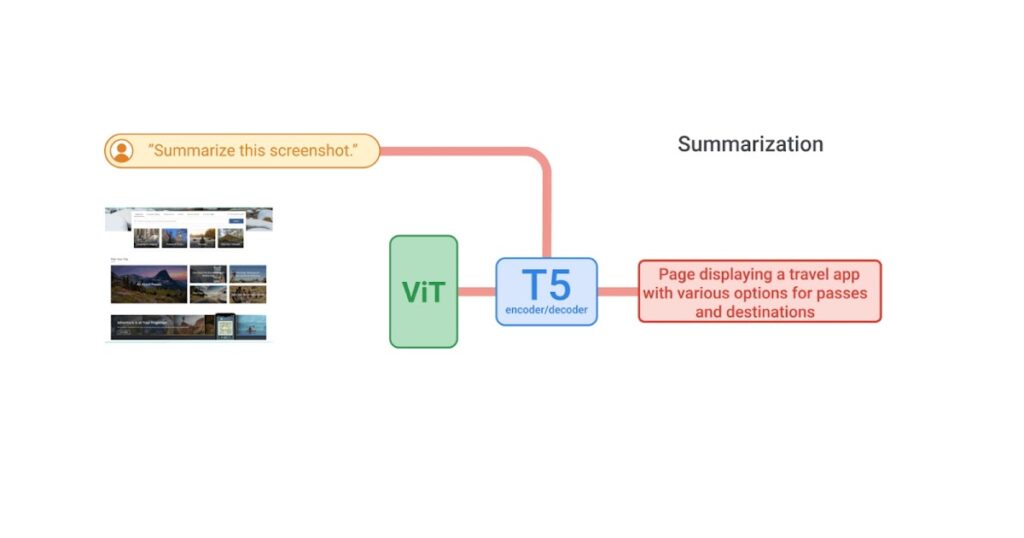

ScreenAI’s structure is predicated on PaLI, composed of a multimodal encoder block and an autoregressive decoder. The PaLI encoder makes use of a vision transformer (ViT) that creates picture embeddings and a multimodal encoder that takes the concatenation of the picture and textual content embeddings as enter. This versatile structure permits ScreenAI to unravel imaginative and prescient duties that may be recast as textual content+image-to-text issues.

On high of the PaLI structure, we make use of a versatile patching technique launched in pix2struct. As an alternative of utilizing a fixed-grid sample, the grid dimensions are chosen such that they protect the native facet ratio of the enter picture. This allows ScreenAI to work effectively throughout photos of varied facet ratios.

The ScreenAI mannequin is skilled in two levels: a pre-training stage adopted by a fine-tuning stage. First, self-supervised studying is utilized to mechanically generate information labels, that are then used to coach ViT and the language mannequin. ViT is frozen throughout the fine-tuning stage, the place most information used is manually labeled by human raters.

|

| ScreenAI mannequin structure. |

Information technology

To create a pre-training dataset for ScreenAI, we first compile an intensive assortment of screenshots from varied units, together with desktops, cell, and tablets. That is achieved through the use of publicly accessible web pages and following the programmatic exploration method used for the RICO dataset for cell apps. We then apply a format annotator, based mostly on the DETR mannequin, that identifies and labels a variety of UI components (e.g., picture, pictogram, button, textual content) and their spatial relationships. Pictograms bear additional evaluation utilizing an icon classifier able to distinguishing 77 completely different icon varieties. This detailed classification is important for deciphering the refined info conveyed by icons. For icons that aren’t lined by the classifier, and for infographics and pictures, we use the PaLI picture captioning mannequin to generate descriptive captions that present contextual info. We additionally apply an optical character recognition (OCR) engine to extract and annotate textual content material on display screen. We mix the OCR textual content with the earlier annotations to create an in depth description of every display screen.

LLM-based information technology

We improve the pre-training information’s variety utilizing PaLM 2 to generate input-output pairs in a two-step course of. First, display screen annotations are generated utilizing the approach outlined above, then we craft a immediate round this schema for the LLM to create artificial information. This course of requires immediate engineering and iterative refinement to search out an efficient immediate. We assess the generated information’s high quality by human validation in opposition to a top quality threshold.

You solely converse JSON. Don't write textual content that isn’t JSON.

You're given the next cell screenshot, described in phrases. Are you able to generate 5 questions relating to the content material of the screenshot in addition to the corresponding quick solutions to them?

The reply must be as quick as attainable, containing solely the required info. Your reply must be structured as follows:

questions: [

{{question: the question,

answer: the answer

}},

...

]

{THE SCREEN SCHEMA}

| A pattern immediate for QA information technology. |

By combining the pure language capabilities of LLMs with a structured schema, we simulate a variety of person interactions and situations to generate artificial, reasonable duties. Specifically, we generate three classes of duties:

- Query answering: The mannequin is requested to reply questions relating to the content material of the screenshots, e.g., “When does the restaurant open?”

- Display screen navigation: The mannequin is requested to transform a pure language utterance into an executable motion on a display screen, e.g., “Click on the search button.”

- Display screen summarization: The mannequin is requested to summarize the display screen content material in a single or two sentences.

| LLM-generated information. Examples for display screen QA, navigation and summarization. For navigation, the motion bounding field is displayed in crimson on the screenshot. |

Experiments and outcomes

As beforehand talked about, ScreenAI is skilled in two levels: pre-training and fine-tuning. Pre-training information labels are obtained utilizing self-supervised studying and fine-tuning information labels comes from human raters.

We fine-tune ScreenAI utilizing public QA, summarization, and navigation datasets and a wide range of duties associated to UIs. For QA, we use effectively established benchmarks within the multimodal and doc understanding discipline, comparable to ChartQA, DocVQA, Multi page DocVQA, InfographicVQA, OCR VQA, Web SRC and ScreenQA. For navigation, datasets used embrace Referring Expressions, MoTIF, Mug, and Android in the Wild. Lastly, we use Screen2Words for display screen summarization and Widget Captioning for describing particular UI components. Together with the fine-tuning datasets, we consider the fine-tuned ScreenAI mannequin utilizing three novel benchmarks:

- Display screen Annotation: Allows the analysis mannequin format annotations and spatial understanding capabilities.

- ScreenQA Brief: A variation of ScreenQA, the place its floor reality solutions have been shortened to comprise solely the related info that higher aligns with different QA duties.

- Complicated ScreenQA: Enhances ScreenQA Brief with harder questions (counting, arithmetic, comparability, and non-answerable questions) and incorporates screens with varied facet ratios.

The fine-tuned ScreenAI mannequin achieves state-of-the-art outcomes on varied UI and infographic-based duties (WebSRC and MoTIF) and best-in-class efficiency on Chart QA, DocVQA, and InfographicVQA in comparison with fashions of comparable dimension. ScreenAI achieves aggressive efficiency on Screen2Words and OCR-VQA. Moreover, we report outcomes on the brand new benchmark datasets launched to function a baseline for additional analysis.

|

| Evaluating mannequin efficiency of ScreenAI with state-of-the-art (SOTA) fashions of comparable dimension. |

Subsequent, we look at ScreenAI’s scaling capabilities and observe that throughout all duties, growing the mannequin dimension improves performances and the enhancements haven’t saturated on the largest dimension.

|

| Mannequin efficiency will increase with dimension, and the efficiency has not saturated even on the largest dimension of 5B params. |

Conclusion

We introduce the ScreenAI mannequin together with a unified illustration that permits us to develop self-supervised studying duties leveraging information from all these domains. We additionally illustrate the impression of knowledge technology utilizing LLMs and examine bettering mannequin efficiency on particular facets with modifying the coaching combination. We apply all of those methods to construct multi-task skilled fashions that carry out competitively with state-of-the-art approaches on a lot of public benchmarks. Nonetheless, we additionally be aware that our method nonetheless lags behind massive fashions and additional analysis is required to bridge this hole.

Acknowledgements

This undertaking is the results of joint work with Maria Wang, Fedir Zubach, Hassan Mansoor, Vincent Etter, Victor Carbune, Jason Lin, Jindong Chen and Abhanshu Sharma. We thank Fangyu Liu, Xi Chen, Efi Kokiopoulou, Jesse Berent, Gabriel Barcik, Lukas Zilka, Oriana Riva, Gang Li,Yang Li, Radu Soricut, and Tania Bedrax-Weiss for his or her insightful suggestions and discussions, together with Rahul Aralikatte, Hao Cheng and Daniel Kim for his or her help in information preparation. We additionally thank Jay Yagnik, Blaise Aguera y Arcas, Ewa Dominowska, David Petrou, and Matt Sharifi for his or her management, imaginative and prescient and help. We’re very grateful toTom Small for serving to us create the animation on this submit.