Alida gains deeper understanding of customer feedback with Amazon Bedrock

This post is co-written with Sherwin Chu from Alida.

Alida helps the world’s largest brands create highly engaged research communities to gather feedback that fuels better customer experiences and product innovation.

Alida’s customers receive tens of thousands of engaged responses for a single survey, so the Alida team opted to use machine learning (ML) to serve their customers at scale. However, when using traditional natural language processing (NLP) models, they found that these solutions struggled to fully understand the nuanced feedback found in open-ended survey responses. The models often captured only surface-level topics and sentiment, and missed crucial context that would allow for more accurate and meaningful insights.

In this post, we examine how Anthropic’s Claude Instant model on Amazon Bedrock enabled the Alida team to quickly build a scalable service that more accurately determines the topic and sentiment within complex survey responses. The new service achieved a 4-6 times improvement in topic assertion by tightly clustering on several dozen key topics vs. hundreds of noisy NLP keywords.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies, such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon, via a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Using Amazon Bedrock allowed Alida to bring their service to market faster than if they had used other ML providers or vendors.

The challenge

Surveys with a combination of multiple-choice and open-ended questions allow market researchers to get a more holistic view by capturing both quantitative and qualitative data points.

Multiple-choice questions are easy to analyze at scale, but lack nuance and depth. Set response options may also bias or prime participant responses.

Open-ended survey questions allow responders to provide context and unanticipated feedback. These qualitative data points deepen researchers’ understanding beyond what multiple-choice questions can capture alone. The challenge with free-form text is that it can lead to complex and nuanced answers that are difficult for traditional NLP to fully understand. For example:

“I recently experienced some of life’s hardships and was really down and dissatisfied. When I went in, the staff were always very kind to me. It’s helped me get through some tough times!”

Traditional NLP methods will identify topics as “hardships,” “dissatisfied,” “kind staff,” and “get through tough times.” They can’t distinguish between the responder’s overall current negative life experiences and the specific positive store experiences.

Alida’s existing solution automatically processes large volumes of open-ended responses, but they wanted their customers to gain better contextual comprehension and high-level topic inference.

Amazon Bedrock

Prior to the introduction of large language models (LLMs), the way forward for Alida to improve upon their existing single-model solution was to work closely with industry experts and develop, train, and refine new models specifically for each of the industry verticals that Alida’s customers operated in. This was both a time- and cost-intensive endeavor.

One of the breakthroughs that make LLMs so powerful is the use of attention mechanisms. LLMs use self-attention mechanisms that analyze the relationships between words in a given prompt. This allows LLMs to better handle the topic and sentiment in the previous example and presents an exciting new technology that can be used to address the challenge.

With Amazon Bedrock, teams and individuals can immediately start using foundation models without having to worry about provisioning infrastructure or setting up and configuring ML frameworks. You can get started with the following steps:

- Verify that your user or role has permission to create or modify Amazon Bedrock resources. For details, see Identity-based policy examples for Amazon Bedrock.

- Log in to the Amazon Bedrock console.

- On the Model access page, review the EULA and enable the FMs you’d like in your account.

- Start interacting with the FMs via the following methods:

Alida’s executive leadership team was eager to be an early adopter of Amazon Bedrock because they recognized its ability to help their teams bring new generative AI-powered solutions to market faster.

Vincy William, the Senior Director of Engineering at Alida who leads the team responsible for building the topic and sentiment analysis service, says,

“LLMs provide a big leap in qualitative analysis and do things (at a scale that is) humanly not possible to do. Amazon Bedrock is a game changer, it allows us to leverage LLMs without the complexity.”

The engineering team experienced the immediate ease of getting started with Amazon Bedrock. They could select from various foundation models and start focusing on prompt engineering instead of spending time on right-sizing, provisioning, deploying, and configuring resources to run the models.
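As a rough illustration of how little setup a first call can need, the following minimal sketch builds a request for Claude Instant and sends it through a Bedrock runtime client. It is not Alida’s code. The model ID `anthropic.claude-instant-v1`, the `Human:`/`Assistant:` prompt framing, and the `max_tokens_to_sample` and `temperature` fields are assumptions based on Anthropic’s text-completion request format on Amazon Bedrock; confirm them against the model versions enabled in your account. The helper names `build_request_body` and `invoke_claude` are hypothetical.

```python
import json
from typing import Any

# Assumed model ID; confirm the exact ID and version enabled in your account.
MODEL_ID = "anthropic.claude-instant-v1"


def build_request_body(user_text: str, max_tokens: int = 300) -> str:
    """Serialize a text-completion request in the Human/Assistant format."""
    return json.dumps(
        {
            "prompt": f"\n\nHuman: {user_text}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
            "temperature": 0.0,  # low temperature for repeatable classifications
        }
    )


def invoke_claude(client: Any, user_text: str) -> str:
    """Send one prompt and return the completion text.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request_body(user_text),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["completion"]
```

With the AWS SDK for Python installed and credentials configured, the client would be created with `boto3.client("bedrock-runtime")` and passed to `invoke_claude`. Passing the client in as a parameter keeps the sketch easy to exercise with a stub.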

Solution overview

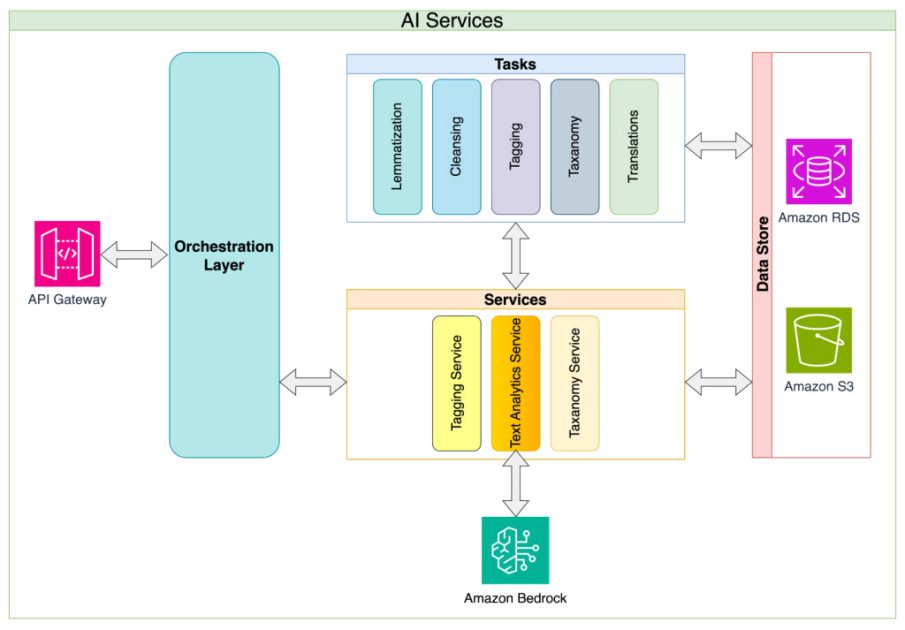

Sherwin Chu, Alida’s Chief Architect, shared Alida’s microservices architecture approach. Alida built the topic and sentiment classification as a service with survey response analysis as its first application. With this approach, common LLM implementation challenges such as the complexity of managing prompts, token limits, request constraints, and retries are abstracted away, and consuming applications get a simple and stable API to work with. This abstraction layer approach also enables the service owners to continually improve internal implementation details and minimize API-breaking changes. Finally, the service approach allows for a single point to implement any data governance and security policies that evolve as AI governance matures in the organization.

The preceding diagram illustrates the solution architecture and flow.
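One of the concerns such a service layer can hide from its callers is retry handling. The following is a minimal sketch of retrying a model call with exponential backoff, not a description of Alida’s implementation. The `llm` callable, the `TransientLLMError` exception, and the default attempt count and delays are all hypothetical stand-ins for whatever client and error types a real service would use.

```python
import time
from typing import Callable


class TransientLLMError(Exception):
    """Hypothetical error type for retryable failures such as throttling."""


def call_with_retries(
    llm: Callable[[str], str],
    prompt: str,
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call the model, retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return llm(prompt)
        except TransientLLMError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")
```

Because consuming applications only see the service API, the retry policy can change later without any caller needing to know.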

Alida evaluated LLMs from various providers, and found Anthropic’s Claude Instant to be the right balance between cost and performance. Working closely with the prompt engineering team, Chu advocated implementing a prompt chaining strategy as opposed to a single monolithic prompt approach.

Prompt chaining enables you to do the following:

- Break down your objective into smaller, logical steps

- Build a prompt for each step

- Provide the prompts sequentially to the LLM

This creates additional points of inspection, which has the following benefits:

- It’s easy to systematically evaluate changes you make to the input prompt

- You can implement more detailed tracking and monitoring of the accuracy and performance at each step
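The chaining idea can be sketched in a few lines. This is an illustrative sketch only, assuming each step is a prompt template with an `{input}` placeholder and that the model is reachable through a plain `llm(prompt) -> str` callable. The function name `run_chain` and the trace format are hypothetical. The trace it returns is what provides the inspection points: every step’s prompt and output is recorded and can be evaluated or monitored separately.

```python
from typing import Callable, Dict, List, Tuple


def run_chain(
    llm: Callable[[str], str],
    steps: List[Tuple[str, str]],
    initial_input: str,
) -> Tuple[str, List[Dict[str, str]]]:
    """Run prompt templates in order, feeding each output into the next step.

    `steps` is a list of (step_name, template) pairs; each template must
    contain an {input} placeholder. Returns the final output and a trace
    holding the prompt and output of every step.
    """
    trace: List[Dict[str, str]] = []
    current = initial_input
    for name, template in steps:
        prompt = template.format(input=current)
        output = llm(prompt)
        # Record the step so it can be inspected or scored on its own.
        trace.append({"step": name, "prompt": prompt, "output": output})
        current = output
    return current, trace
```

A chain for this use case might first extract the main point of a response and then classify its sentiment, with the trace showing which of the two steps produced a questionable result.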

Key considerations with this strategy include the increase in the number of requests made to the LLM and the resulting increase in the overall time it takes to complete the objective. To offset these effects, Alida chose to batch a collection of open-ended responses in a single prompt to the LLM.
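Batching of this kind could look roughly like the following sketch, which groups responses into fixed-size batches and numbers them inside one prompt so the answers can be matched back to their responses. The batch size, the prompt wording, and the function names are assumptions for illustration; a real service would also have to keep each batch within the model’s token limit.

```python
from typing import List


def batch_responses(responses: List[str], batch_size: int) -> List[List[str]]:
    """Split survey responses into consecutive batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [
        responses[start:start + batch_size]
        for start in range(0, len(responses), batch_size)
    ]


def build_batch_prompt(batch: List[str]) -> str:
    """Build one prompt that asks about every response in the batch."""
    numbered = "\n".join(
        f"{index}. {text}" for index, text in enumerate(batch, start=1)
    )
    return (
        "For each numbered survey response below, give its topic and "
        "sentiment, one line per response.\n\n" + numbered
    )
```

With a batch size of 20, for example, 1,000 responses need 50 requests per chain step instead of 1,000.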

NLP vs. LLM

Alida’s existing NLP solution relies on clustering algorithms and statistical classification to analyze open-ended survey responses. When applied to sample feedback for a coffee shop’s mobile app, it extracted topics based on word patterns but lacked true comprehension. The following table includes some examples comparing NLP responses vs. LLM responses.

| Survey Response | Existing Traditional NLP (Topic) | Amazon Bedrock with Claude Instant (Topic) | Amazon Bedrock with Claude Instant (Sentiment) |
| --- | --- | --- | --- |
| I almost exclusively order my drinks through the app bc of convenience and it’s less embarrassing to order super customized drinks lol. And I like earning rewards! | [‘app bc convenience’, ‘drink’, ‘reward’] | Mobile Ordering Convenience | positive |
| The app works pretty good the only complaint I have is that I can’t add Any number of money that I want to my gift card. Why does it specifically have to be $10 to refill?! | [‘complaint’, ‘app’, ‘gift card’, ‘number money’] | Mobile Order Fulfillment Speed | negative |

The example results show how the existing solution was able to extract relevant keywords, but isn’t able to achieve a more generalized topic group assignment.

In contrast, using Amazon Bedrock and Anthropic Claude Instant, the LLM with in-context training is able to assign the responses to pre-defined topics and assign sentiment.
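In-context training here means placing the topic list and a few labeled examples directly in the prompt; no model weights are updated. The sketch below shows one way such a prompt could be assembled and the model’s answer checked against the pre-defined topics. The prompt layout, the `<topic> | <sentiment>` answer format, and the function names are assumptions for illustration, not Alida’s actual prompts.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    topics: List[str],
    examples: List[Tuple[str, str, str]],
    response: str,
) -> str:
    """Build a prompt with the allowed topics and a few labeled examples.

    `examples` holds (response_text, topic, sentiment) triples.
    """
    lines = ["Assign the survey response to exactly one of these topics:"]
    lines += [f"- {topic}" for topic in topics]
    lines.append("Answer in the form: <topic> | <sentiment>")
    lines.append("")
    for text, topic, sentiment in examples:
        lines.append(f"Response: {text}")
        lines.append(f"Answer: {topic} | {sentiment}")
        lines.append("")
    lines.append(f"Response: {response}")
    lines.append("Answer:")
    return "\n".join(lines)


def parse_answer(raw: str, topics: List[str]) -> Tuple[str, str]:
    """Split '<topic> | <sentiment>' and reject topics outside the list."""
    topic, _, sentiment = raw.strip().partition("|")
    topic, sentiment = topic.strip(), sentiment.strip().lower()
    if topic not in topics:
        raise ValueError(f"unexpected topic: {topic!r}")
    return topic, sentiment
```

Validating the returned topic against the list is what keeps results clustered on a fixed set of topics rather than drifting into free-form keywords.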

In addition to delivering better answers for Alida’s customers, for this particular use case, pursuing a solution using an LLM over traditional NLP methods saved a vast amount of time and effort in training and maintaining a suitable model. The following table compares training a traditional NLP model vs. in-context training of an LLM.

| | Data Requirement | Training Process | Model Adaptability |
| --- | --- | --- | --- |
| Training a traditional NLP model | Thousands of human-labeled examples | Combination of automated and manual feature engineering. Iterative train and evaluate cycles. | Slower turnaround due to the need to retrain the model. |
| In-context training of LLM | Several examples | Trained on the fly within the prompt. Limited by context window size. | Faster iterations by modifying the prompt. Limited retention due to context window size. |

Conclusion

Alida’s use of Anthropic’s Claude Instant model on Amazon Bedrock demonstrates the powerful capabilities of LLMs for analyzing open-ended survey responses. Alida was able to build a superior service that was 4-6 times more precise at topic analysis when compared to their NLP-powered service. Additionally, using in-context prompt engineering for LLMs significantly reduced development time, because they didn’t need to curate thousands of human-labeled data points to train a traditional NLP model. This ultimately allows Alida to give their customers richer insights sooner!

If you’re ready to start building your own foundation model innovation with Amazon Bedrock, check out this link to Set up Amazon Bedrock. If you’re interested in learning about other intriguing Amazon Bedrock applications, see the Amazon Bedrock specific section of the AWS Machine Learning Blog.

About the authors

Kinman Lam is an ISV/DNB Solution Architect for AWS. He has 17 years of experience in building and growing technology companies in the smartphone, geolocation, IoT, and open source software space. At AWS, he uses his experience to help companies build robust infrastructure to meet the increasing demands of growing businesses, launch new products and services, enter new markets, and delight their customers.

Sherwin Chu is the Chief Architect at Alida, helping product teams with architectural direction, technology choice, and complex problem-solving. He is an experienced software engineer, architect, and leader with over 20 years in the SaaS space for various industries. He has built and managed numerous B2B and B2C systems on AWS and GCP.

Mark Roy is a Principal Machine Learning Architect for AWS, helping customers design and build AI/ML and generative AI solutions. His focus since early 2023 has been leading solution architecture efforts for the launch of Amazon Bedrock, AWS’ flagship generative AI offering for builders. Mark’s work covers a wide range of use cases, with a primary interest in generative AI, agents, and scaling ML across the enterprise. He has helped companies in insurance, financial services, media and entertainment, healthcare, utilities, and manufacturing. Prior to joining AWS, Mark was an architect, developer, and technology leader for over 25 years, including 19 years in financial services. Mark holds six AWS certifications, including the ML Specialty Certification.
