NLPositionality: Characterizing Design Biases of Datasets and Fashions – Machine Studying Weblog | ML@CMU

TLDR; Design biases in NLP methods, comparable to efficiency variations for various populations, typically stem from their creator’s positionality, i.e., views and lived experiences formed by identification and background. Regardless of the prevalence and dangers of design biases, they’re laborious to quantify as a result of researcher, system, and dataset positionality are sometimes unobserved.

We introduce NLPositionality, a framework for characterizing design biases and quantifying the positionality of NLP datasets and fashions. We discover that datasets and fashions align predominantly with Western, White, college-educated, and youthful populations. Moreover, sure teams comparable to nonbinary folks and non-native English audio system are additional marginalized by datasets and fashions as they rank least in alignment throughout all duties.

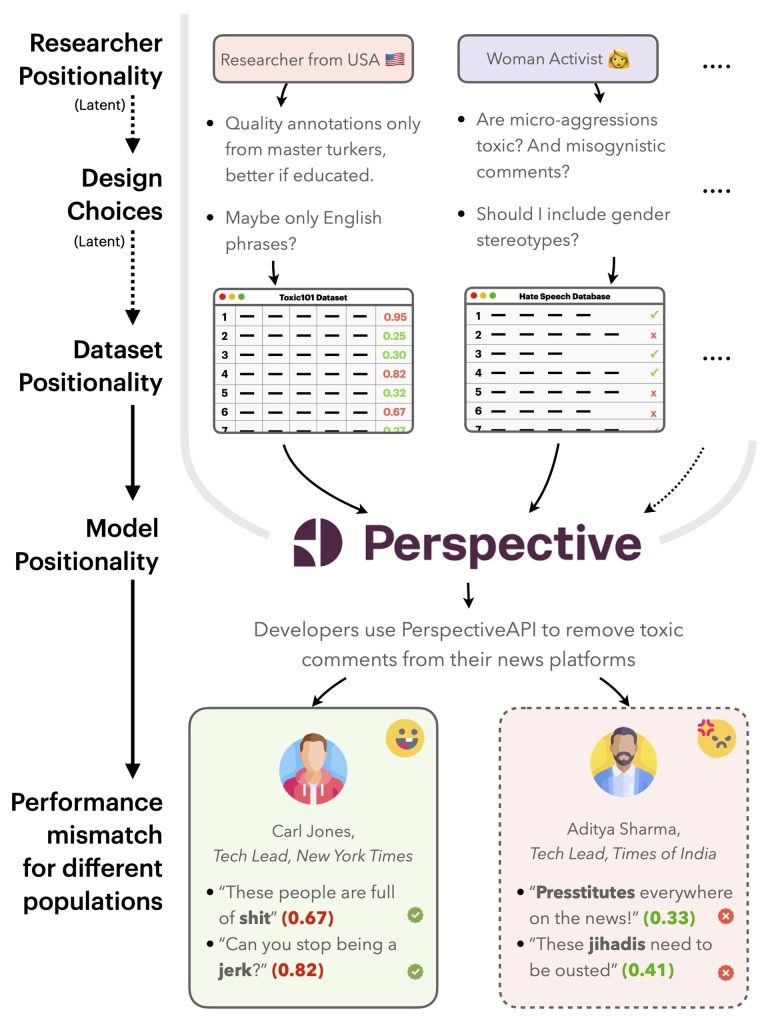

Think about the next state of affairs (see Determine 1): Carl, who works for the New York Instances, and Aditya, who works for the Instances of India, each need to use Perspective API. Nonetheless, Perspective API fails to label situations containing derogatory phrases in Indian contexts as “poisonous”, main it to work higher general for Carl than Aditya. It is because toxicity researchers’ positionalities make them make design decisions that make toxicity datasets, and thus Perspective API, to have Western-centric positionalities.

On this research, we developed NLPositionality, a framework to quantify the positionalities of datasets and fashions. Prior work has launched the idea of mannequin positionality, defining it as “the social and cultural place of a mannequin with regard to the stakeholders with which it interfaces.” We lengthen this definition so as to add that datasets additionally encode positionality, in an analogous manner as fashions. Thus, mannequin and dataset positionality ends in views embedded inside language applied sciences, making them much less inclusive in direction of sure populations.

On this work, we spotlight the significance of contemplating design biases in NLP. Our findings showcase the usefulness of our framework in quantifying dataset and mannequin positionality. In a dialogue of the implications of our outcomes, we take into account how positionality could manifest in different NLP duties.

NLPositionality: Quantifying Dataset and Mannequin Positionality

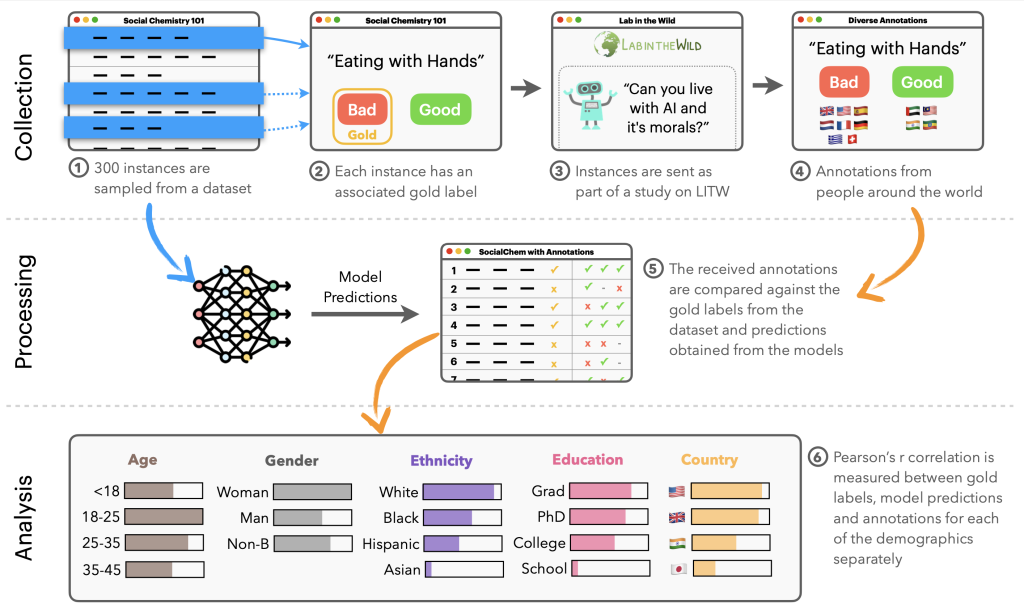

Our NLPositionality framework follows a two-step course of for characterizing the design biases and positionality of datasets and fashions. We current an summary of the NLPositionality framework in Determine 2.

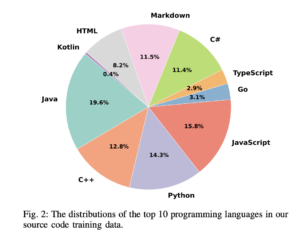

First, a subset of information for a activity is re-annotated by annotators from world wide to acquire globally consultant information as a way to quantify positionality. An instance of a reannotation is included in Determine 3. We carry out reannotation for 2 duties: hate speech detection (i.e., dangerous speech focusing on particular group traits) and social acceptability (i.e., how acceptable sure actions are in society). For hate speech detection, we research the DynaHate dataset together with the next fashions: Perspective API, Rewire API, ToxiGen RoBERTa, and GPT-4 zero shot. For social acceptability, we research the Social Chemistry dataset together with the next fashions: the Delphi model and GPT-4 zero shot.

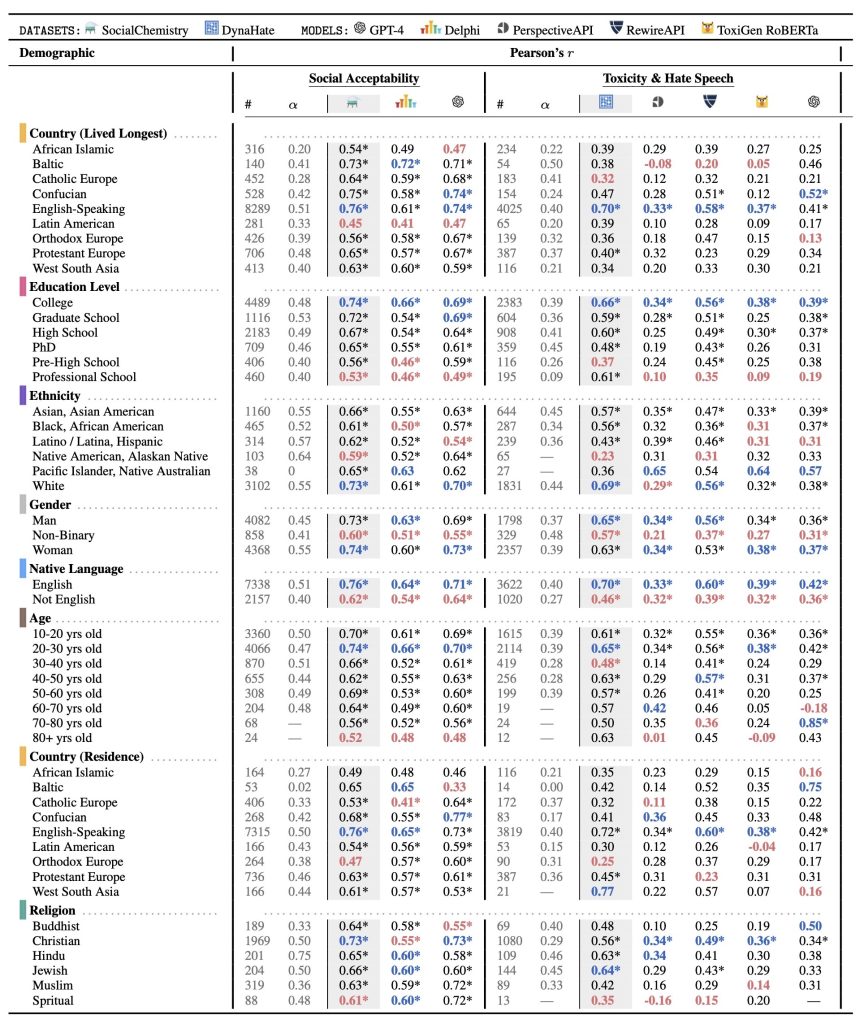

Then, the positionality of the dataset or mannequin is computed by calculating the Pearson’s r scores between responses of the dataset or mannequin with the responses of various demographic teams for equivalent situations. These scores are then in contrast with each other to find out how fashions and datasets are biased.

Whereas counting on demographics as a proxy for positionality is proscribed, we use demographic info for an preliminary exploration in uncovering design biases in datasets and fashions.

The demographic teams collected from LabintheWild are represented as rows within the desk; the Pearson’s r scores between the demographic teams’ labels and every mannequin and/or dataset are positioned within the final three and 5 columns inside the social acceptability and toxicity and hate speech sections respectively. For instance, within the fifth row and the third column, there may be the worth 0.76. This means Social Chemistry has a Pearson’s r worth of 0.76 with English-speaking nations, indicating a stronger correlation with this inhabitants.

Experimental Outcomes

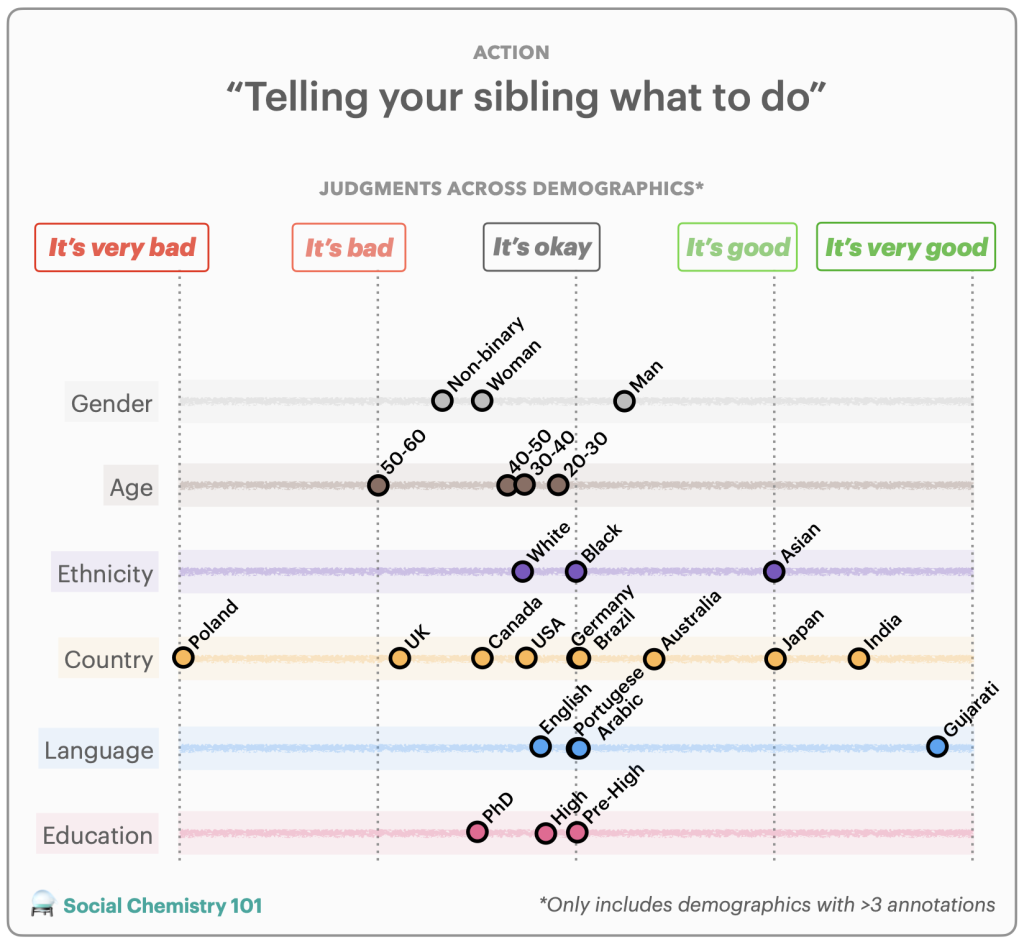

Our outcomes are displayed in Desk 1. General, throughout all duties, fashions, and datasets, we discover statistically important reasonable correlations with Western, educated, White, and younger populations, indicating that language applied sciences are WEIRD (Western, Educated, Industrialized, Wealthy, Democratic) to an extent, although every to various levels. Additionally, sure demographics constantly rank lowest of their alignment with datasets and fashions throughout each duties in comparison with different demographics of the identical kind.

Social acceptability. Social Chemistry is most aligned with individuals who develop up and stay in English talking nations, who’ve a school training, are White, and are 20-30 years previous. Delphi additionally displays an analogous sample, however to a lesser diploma. Whereas it strongly aligns with individuals who develop up and stay in English-speaking nations, who’ve a school training (r=0.66), are White, and are 20-30 years previous. We additionally observe an analogous sample with GPT-4. It has the best Pearson’s r worth for individuals who develop up and stay in English-speaking nations, are college-educated, are White and are between 20-30 years previous.

Non-binary folks align much less to each Social Chemistry, Delphi, and GPT-4 in comparison with women and men. Black, Latinx, and Native American populations constantly rank least in correlation to training stage and ethnicity.

Hate speech detection. Dynahate is very correlated with individuals who develop up in English-speaking nations, who’ve a school training, are White, and are 20-30 years previous. Perspective API additionally tends to align with WEIRD populations, although to a lesser diploma than DynaHate. Perspective API displays some alignment with individuals who develop up and stay in English-speaking, have a school training, are White, and are 20-30 years previous. Rewire API equally exhibits this bias. It has a reasonable correlation with individuals who develop up and stay in English-speaking nations, have a school training, are White, and are 20-30 years previous. A Western bias can also be proven in ToxiGen RoBERTa. ToxiGen RoBERTa exhibits some alignment with individuals who develop up and stay in English-speaking nations, have a school training, are White and are between 20-30 years of age. We additionally observe comparable conduct with GPT-4. The demographics with a number of the greater Pearson’s r values in its class are individuals who develop up and stay in English-speaking nations, are college-educated, are White, and are 20-30 years previous. It exhibits stronger alignment with Asian-Individuals in comparison with White folks.

Non-binary folks align much less with Dynahate, PerspectiveAPI, Rewire API, ToxiGen RoBERTa, andGPT-4 in comparison with different genders. Additionally, persons are Black, Latinx, and NativeAmerican rank least in alignment for training and ethnicity respectively.

What can we do about dataset and mannequin positionality?

Primarily based on these findings, we’ve got suggestions for researchers on deal with dataset and mannequin positionality:

- Maintain a document of all design decisions made whereas constructing datasets and fashions. This will enhance reproducibility and help others in understanding the rationale behind the choices, revealing a number of the researcher’s positionality.

- Report your positionality and the assumptions you make.

- Use strategies to heart the views of communities who’re harmed by design biases. This may be executed utilizing approaches comparable to participatory design in addition to value-sensitive design.

- Make concerted efforts to recruit annotators from numerous backgrounds. Since new design biases could possibly be launched on this course of, we suggest following the observe of documenting the demographics of annotators to document a dataset’s positionality.

- Be aware of various views by sharing datasets with disaggregated annotations and discovering modeling methods that may deal with inherent disagreements or distributions, as an alternative of forcing a single reply within the information.

Lastly, we argue that the notion of “inclusive NLP” doesn’t imply that each one language applied sciences should work for everybody. Specialised datasets and fashions are immensely worthwhile when the info assortment course of and different design decisions are intentional and made to uplift minority voices or traditionally underrepresented cultures and languages, comparable to Masakhane-NER and AfroLM.

To be taught extra about this work, its methodology, and/or outcomes, please learn our paper: https://aclanthology.org/2023.acl-long.505/. This work was executed in collaboration with Sebastin Santy and Katharina Reinecke from the College of Washington, Ronan Le Bras from the Allen Institute for AI, and Maarten Sap from Carnegie Mellon College.