A Visual Guide to Mamba and State Space Models

The Transformer architecture has been a major component in the success of Large Language Models (LLMs). It has been used for nearly all LLMs in use today, from open-source models like Mistral to closed-source models like ChatGPT.

To further improve LLMs, new architectures are being developed that might even outperform the Transformer architecture. One of these is Mamba, a State Space Model.

Mamba was proposed in the paper Mamba: Linear-Time Sequence Modeling with Selective State Spaces. You can find its official implementation and model checkpoints in its repository.

In this post, I’ll introduce the field of State Space Models in the context of language modeling and explore concepts one by one to develop an intuition about the field. Then, we’ll cover how Mamba might challenge the Transformer architecture.

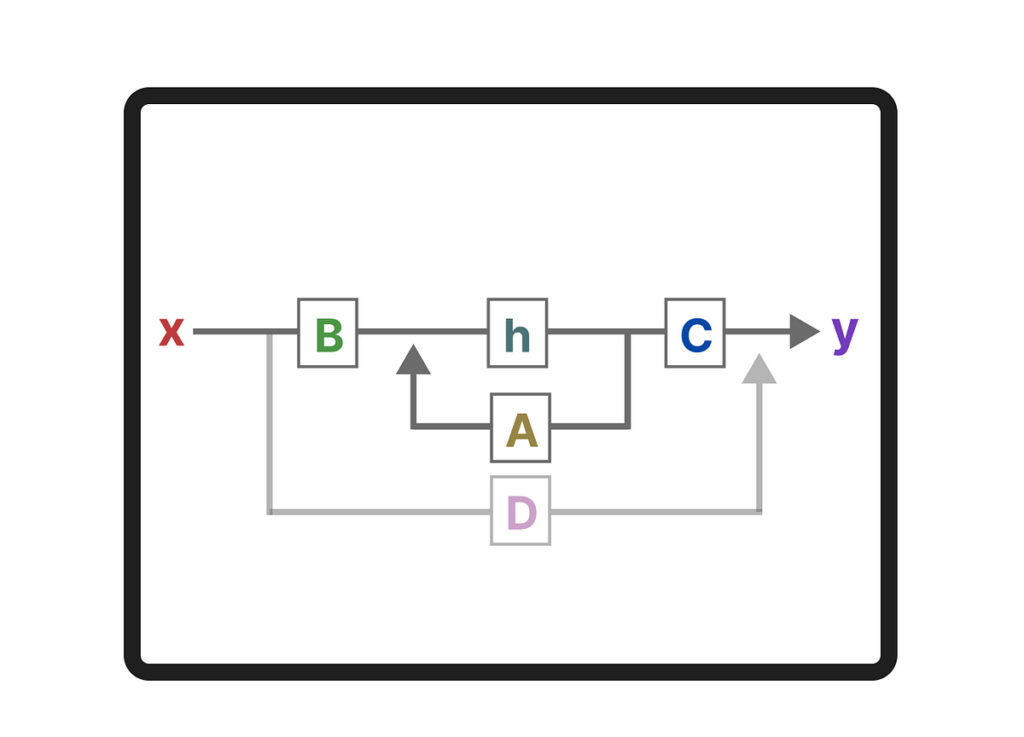

As a visual guide, expect many visualizations to develop an intuition about Mamba and State Space Models!

To illustrate why Mamba is such an interesting architecture, let’s do a short recap of Transformers first and explore one of their disadvantages.

A Transformer sees any textual input as a sequence that consists of tokens.
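To make the idea of "text as a sequence of tokens" concrete, here is a minimal sketch. It assumes a toy whitespace tokenizer and a vocabulary built on the fly; real LLMs typically use learned subword tokenizers, so the helper names `tokenize` and `build_vocab` are purely illustrative.

```python
def tokenize(text):
    """Split text into lowercase word tokens (a toy stand-in for subword tokenization)."""
    return text.lower().split()


def build_vocab(tokens):
    """Assign each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


tokens = tokenize("The cat sat on the mat")
vocab = build_vocab(tokens)

# The model never sees raw text, only this ordered sequence of ids.
token_ids = [vocab[token] for token in tokens]
print(tokens)
print(token_ids)
```

Note that the repeated word "the" maps to the same id both times: the sequence preserves order, while the vocabulary only stores each distinct token once.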

A major benefit of Transformers is that, whatever input they receive, they can look back at any of the earlier tokens in the sequence to derive a token’s representation.
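This "looking back" can be sketched as a stripped-down form of causal self-attention. The code below is an illustration under simplifying assumptions, not how any particular model implements it: there is a single head, the raw input vectors act as queries, keys, and values at once (no learned projections), and the function name `causal_attention` and the example vectors are made up for this sketch.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def causal_attention(vectors):
    """For each position, mix the vector at that position with all earlier ones.

    Assumption: queries, keys, and values are the raw input vectors.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # the current token plus everything before it
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        mixed = [
            sum(w * vec[d] for w, vec in zip(weights, visible))
            for d in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-dimensional token embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_attention(embeddings)

for position, row in enumerate(weights):
    print(f"token {position} attends to {len(row)} token(s)")
```

The first token can only attend to itself, while the last token gets one weight for every token before it plus itself. Each new token therefore compares itself against the whole preceding sequence.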

Remember that a Transformer consists of two structures: a set of encoder blocks for representing text and a set of decoder blocks for generating text. Together, these structures can be used for several tasks, including translation.