Unlocking the Brain's Language Response: How GPT Models Predict and Influence Neural Activity

Recent advances in machine learning (ML) and artificial intelligence (AI) are being applied across many fields. These systems have been made possible by growth in computing power, access to vast amounts of data, and improvements in machine learning techniques. Large language models (LLMs), which are trained on very large amounts of data, generate human-like language for many applications.

A new study by researchers from MIT and Harvard University offers new insights into predicting how the human brain responds to language. The researchers emphasized that this may be the first AI model to effectively drive and suppress responses in the human language network. Language processing relies on the language network, a set of brain regions located primarily in the left hemisphere that includes parts of the frontal and temporal lobes. Earlier research has examined how this network functions, but much remains unknown about the mechanisms underlying language comprehension.

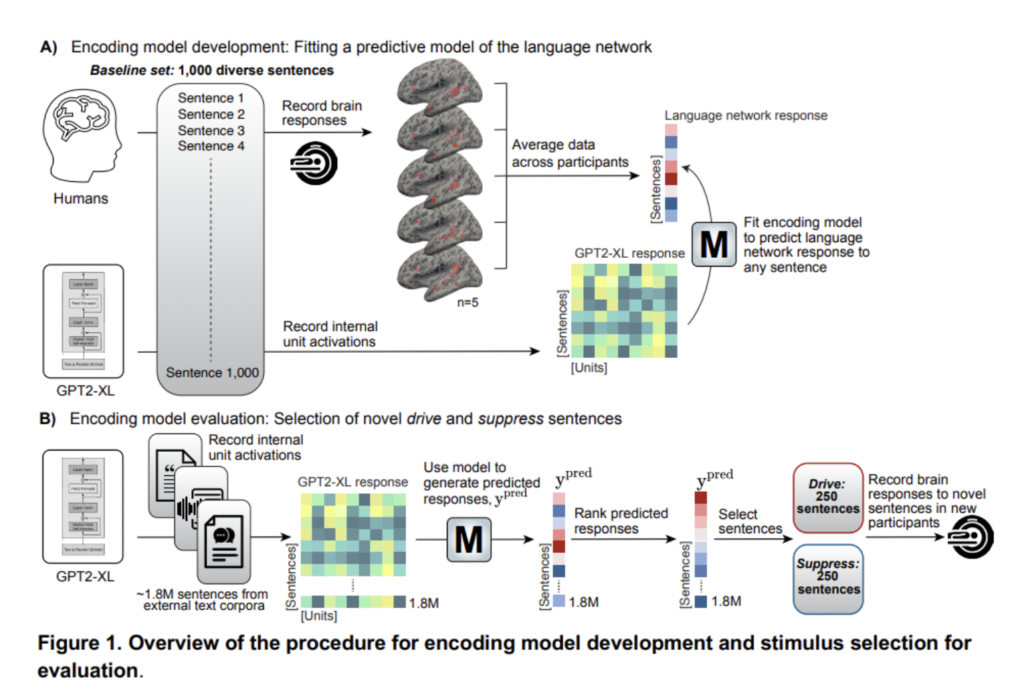

In this study, the researchers set out to evaluate how effectively LLMs predict brain responses to various linguistic inputs. They also aimed to better understand the characteristics of stimuli that drive or suppress responses within the human language network. The researchers built an encoding model based on a GPT-style LLM to predict the human brain's responses to arbitrary sentences presented to participants. They constructed this encoding model using last-token sentence embeddings from GPT2-XL. It was trained on brain responses from five participants to a dataset of 1,000 diverse, corpus-extracted sentences. Finally, they tested the model on held-out sentences to assess its predictive capabilities. They reported that the model achieved a correlation coefficient of r=0.38.
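The encoding-model workflow described above (fit a mapping from sentence embeddings to brain responses, then correlate predictions with measured responses on held-out sentences) can be sketched roughly as follows. This is a minimal illustration, not the researchers' implementation: the article does not say which regression method was used, so ridge regression is an assumption here, and the embeddings and responses are synthetic stand-ins rather than GPT2-XL features or recorded brain data. The names `fit_ridge`, `pearson_r`, `alpha`, and the 800/200 split are all hypothetical.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalized intercept (assumed method)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + alpha * I) w = Xc^T yc for the weight vector w.
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ yc)
    b = y_mean - x_mean @ w
    return w, b

def pearson_r(a, b):
    """Pearson correlation between two 1-D arrays."""
    a = a - a.mean()
    b = b - b.mean()
    return float(a @ b / np.sqrt((a @ a) * (b @ b)))

# Synthetic stand-ins: 1,000 "sentences" with 64-dimensional embeddings
# (toy size, far smaller than a real LLM embedding) and a noisy
# scalar "brain response" per sentence.
rng = np.random.default_rng(0)
n_sentences, n_dims = 1000, 64
embeddings = rng.normal(size=(n_sentences, n_dims))
true_weights = rng.normal(size=n_dims)
responses = embeddings @ true_weights + rng.normal(scale=8.0, size=n_sentences)

# Hold out the last 200 sentences for evaluation (hypothetical split).
train, test = slice(0, 800), slice(800, None)
w, b = fit_ridge(embeddings[train], responses[train], alpha=10.0)
predicted = embeddings[test] @ w + b

r = pearson_r(predicted, responses[test])
print(f"held-out Pearson r = {r:.2f}")
```

The value printed here reflects only the synthetic noise level chosen above and has no bearing on the reported r=0.38.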

To further evaluate the model's robustness, the researchers ran several additional tests using alternative methods for obtaining sentence embeddings, as well as embeddings from another LLM architecture. The model maintained high predictive performance in these tests. The encoding model also predicted responses accurately when applied to anatomically defined language regions.
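To make the idea of "alternative methods for obtaining sentence embeddings" concrete, the sketch below contrasts the last-token embedding mentioned in the article with mean pooling over all tokens. Mean pooling is only an assumed example of an alternative; the article does not name the methods the researchers actually tested. The per-token hidden states are a toy array, not real model output, and both function names are hypothetical.

```python
import numpy as np

def last_token_embedding(token_states):
    """Use the hidden state of the final token as the sentence embedding."""
    return token_states[-1]

def mean_pooled_embedding(token_states):
    """Average the hidden states of all tokens (assumed alternative)."""
    return token_states.mean(axis=0)

# Toy per-token hidden states for a 3-token sentence, 2 dimensions each.
token_states = np.array([
    [1.0, 0.0],
    [0.0, 2.0],
    [2.0, 4.0],
])

last_vec = last_token_embedding(token_states)
mean_vec = mean_pooled_embedding(token_states)
print(last_vec, mean_vec)
```

Either vector could be passed to the same encoding model, which is what makes it straightforward to compare embedding choices against one another.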

The researchers emphasized that the study and its findings hold substantial implications for basic neuroscience research and real-world applications. They noted that manipulating neural responses in the language network could open new avenues for studying language processing and potentially for treating disorders that affect language function. Using LLMs as models of human language processing could also improve natural language processing technologies such as virtual assistants and chatbots.

In conclusion, this study is a significant step toward understanding the relationship and functional similarities between AI and the human brain. Researchers are using LLMs to unravel the mysteries surrounding language processing and to develop innovative strategies for influencing neural activity. More discoveries in this field can be expected as AI and ML evolve.

Check out the Paper. All credit for this research goes to the researchers of this project.

Rachit Ranjan is a consulting intern at MarktechPost. He is currently pursuing his B.Tech at the Indian Institute of Technology (IIT) Patna. He is actively shaping his career in the field of Artificial Intelligence and Data Science and is passionate about and dedicated to exploring these fields.