Running Local LLMs and VLMs on the Raspberry Pi | by Pye Sone Kyaw | Jan, 2024

Ever thought of running your own large language models (LLMs) or vision language models (VLMs) on your own device? You probably did, but the thought of setting things up from scratch, managing the environment, downloading the right model weights, and the lingering doubt of whether your device can even handle the model has probably given you some pause.

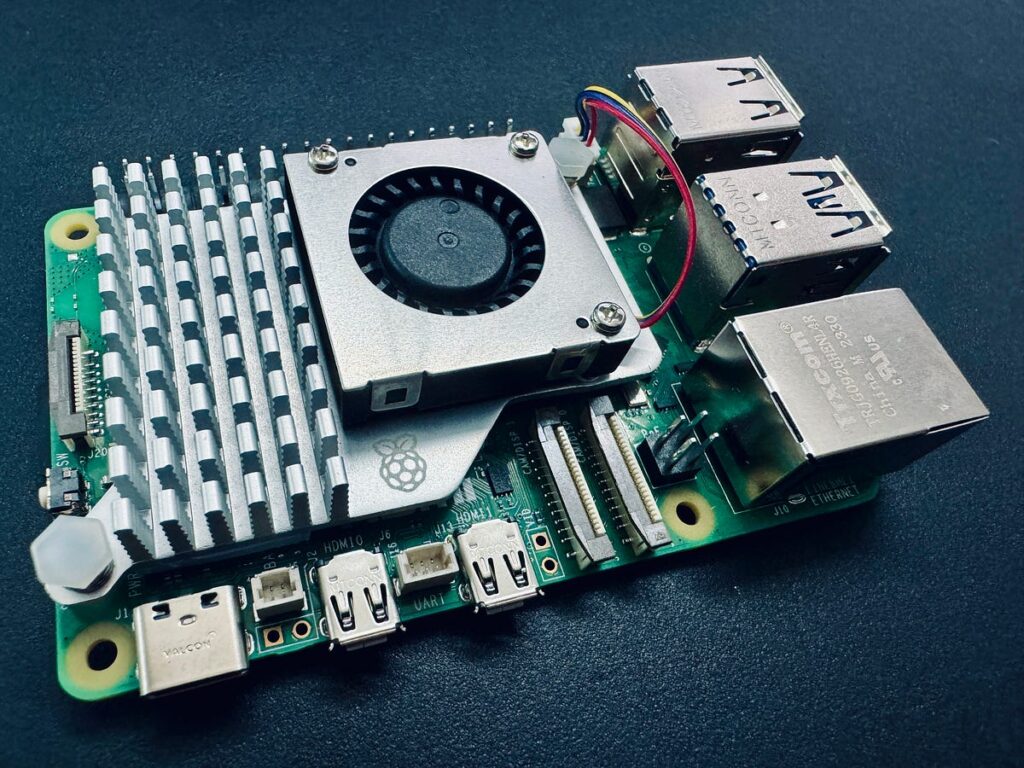

Let’s go one step further than that. Imagine running your own LLM or VLM on a device no larger than a credit card: a Raspberry Pi. Impossible? Not at all. I mean, I’m writing this post after all, so it definitely is possible.

Possible, yes. But why would you even do it?

LLMs at the edge seem quite far-fetched at this point in time. But this particular niche use case should mature over time, and we will definitely see some cool edge solutions being deployed with an all-local generative AI solution running on-device at the edge.

It’s also about pushing the limits to see what’s possible. If it can be done at this extreme end of the compute scale, then it can be done at any level in between a Raspberry Pi and a big and powerful server GPU.

Traditionally, edge AI has been closely linked with computer vision. Exploring the deployment of LLMs and VLMs at the edge adds an exciting dimension to this field that is just emerging.

Most importantly, I just wanted to do something fun with my recently acquired Raspberry Pi 5.

So, how do we achieve all this on a Raspberry Pi? Using Ollama!

What is Ollama?

Ollama has emerged as one of the best solutions for running local LLMs on your own personal computer without the hassle of setting things up from scratch. With just a few commands, everything can be set up without any issues. Everything is self-contained and, in my experience, works wonderfully across several devices and models. It even exposes a REST API for model inference, so you can leave it running on the Raspberry Pi and call it from your other applications and devices if you want to.
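To give a feel for calling that REST API from another device, here is a minimal Python sketch. It assumes Ollama's `/api/generate` endpoint on the default port 11434 and a model called `phi` that has already been downloaded. The hostname `raspberrypi.local` and the helper names are placeholders of mine, and depending on how Ollama is configured it may only accept connections from the Pi itself.

```python
import json
import urllib.request

# Assumption: default Ollama port; the hostname is a placeholder for your Pi.
OLLAMA_URL = "http://raspberrypi.local:11434"


def build_generate_request(prompt, model="phi", base_url=OLLAMA_URL):
    """Build a POST request for Ollama's /api/generate endpoint."""
    # stream=False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="phi", base_url=OLLAMA_URL, timeout=300):
    """Send the prompt and return the generated text (blocks until done)."""
    request = build_generate_request(prompt, model, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Calling `generate("Why is the sky blue?")` from a laptop on the same network should then return the model's answer as a string. The generous timeout is deliberate, since generation on a Pi is slow.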

There’s also Ollama Web UI, a beautiful piece of AI UI/UX that runs seamlessly with Ollama for those apprehensive about command-line interfaces. It’s basically a local ChatGPT interface, if you will.

Together, these two pieces of open-source software provide what I feel is the best locally hosted LLM experience right now.

Both Ollama and Ollama Web UI support VLMs like LLaVA too, which opens up even more doors for this edge generative AI use case.

Technical Requirements

All you need is the following:

- Raspberry Pi 5 (or 4 for a less speedy setup): opt for the 8GB RAM variant to fit the 7B models.

- SD Card: minimally 16GB; the larger the size, the more models you can fit. Have it already loaded with an appropriate OS such as Raspbian Bookworm or Ubuntu

- An internet connection

Like I mentioned earlier, running Ollama on a Raspberry Pi is already near the extreme end of the hardware spectrum. Essentially, any device more powerful than a Raspberry Pi, provided it runs a Linux distribution and has a similar memory capacity, should theoretically be capable of running Ollama and the models discussed in this post.
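For a rough sense of why memory capacity is the limiting factor, here is a back-of-the-envelope Python sketch. It only counts the model weights (no context cache or OS overhead), and the 4-bit figure is my assumption about how the downloaded models are quantized; check the tag of the model you pull.

```python
def weight_size_gb(params_billions, bits_per_weight):
    """Approximate size of the model weights alone, in gigabytes (10^9 bytes)."""
    total_bits = params_billions * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


# Compare an assumed 4-bit quantized model with unquantized 16-bit weights.
for name, params in [("Phi-2 (2.7B)", 2.7), ("7B model", 7.0)]:
    for bits in (4, 16):
        print(f"{name} at {bits}-bit: ~{weight_size_gb(params, bits):.1f} GB")
```

A 7B model works out to about 3.5 GB at 4 bits per weight and about 14 GB at 16 bits, so only the quantized version leaves headroom on an 8GB board.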

1. Installing Ollama

To install Ollama on a Raspberry Pi, we’ll avoid using Docker to conserve resources.

In the terminal, run

curl https://ollama.ai/install.sh | sh

You should see something similar to the image below after running the command above.

Like the output says, go to 0.0.0.0:11434 to verify that Ollama is running. It’s normal to see the ‘WARNING: No NVIDIA GPU detected. Ollama will run in CPU-only mode.’ since we’re using a Raspberry Pi. But if you’re following these instructions on something that is supposed to have an NVIDIA GPU, something did not go right.
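If you would rather check from a script than a browser, a small Python sketch along these lines should do. It simply tests whether anything answers with HTTP 200 at the address; the function name and the default URL are my own choices, not part of Ollama.

```python
import urllib.request


def is_ollama_running(base_url="http://127.0.0.1:11434", timeout=5):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers refused connections, timeouts and HTTP errors
        # (urllib's URLError and HTTPError are subclasses of OSError).
        return False
```

Running `print(is_ollama_running())` on the Pi should print `True` once the service is up.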

For any issues or updates, refer to the Ollama GitHub repository.

2. Running LLMs through the command line

Take a look at the official Ollama model library for a list of models that can be run using Ollama. On an 8GB Raspberry Pi, models larger than 7B won’t fit. Let’s use Phi-2, a 2.7B LLM from Microsoft, now under the MIT license.

We’ll use the default Phi-2 model, but feel free to use any of the other tags found here. Take a look at the model page for Phi-2 to see how you can interact with it.

In the terminal, run

ollama run phi

If you see something similar to the output below, you already have an LLM running on the Raspberry Pi! It’s that simple.

You can try other models like Mistral, Llama-2, etc.; just make sure there is enough space on the SD card for the model weights.
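A quick way to check the available space before pulling a larger model is sketched below in Python. The path `/` and the 1 GB safety margin are assumptions on my part; point it at whichever filesystem holds your Ollama models.

```python
import shutil


def free_space_gb(path="/"):
    """Free space on the filesystem containing `path`, in gigabytes (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_room_for(model_size_gb, path="/", safety_margin_gb=1.0):
    """True if the model plus a safety margin fits in the free space."""
    return free_space_gb(path) >= model_size_gb + safety_margin_gb
```

For example, `has_room_for(4.1)` tells you whether a download of roughly 4.1 GB would fit with a little room to spare.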

Naturally, the bigger the model, the slower the output would be. On Phi-2 2.7B, I can get around 4 tokens per second. But with a Mistral 7B, the generation speed goes down to around 2 tokens per second. A token is roughly equivalent to a single word.
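To put those speeds in perspective, the Python sketch below turns them into waiting times and shows one way to measure the speed yourself. It assumes the JSON returned by Ollama's `/api/generate` includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds); the sample values are made up for illustration.

```python
def tokens_per_second(result):
    """Generation speed from an Ollama /api/generate response dict.

    Assumes `eval_count` is the number of generated tokens and
    `eval_duration` is the generation time in nanoseconds.
    """
    return result["eval_count"] / (result["eval_duration"] / 1e9)


def seconds_for(tokens, speed):
    """Time needed to generate `tokens` tokens at `speed` tokens per second."""
    return tokens / speed


sample = {"eval_count": 120, "eval_duration": 30_000_000_000}  # made-up numbers
print(tokens_per_second(sample))                     # 4.0
print(seconds_for(200, 4.0), seconds_for(200, 2.0))  # 50.0 100.0
```

So a 200-token answer takes a little under a minute at 4 tokens per second and well over a minute and a half at 2.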

Now we have LLMs running on the Raspberry Pi, but we’re not done yet. The terminal isn’t for everyone. Let’s get Ollama Web UI running as well!

3. Installing and Running Ollama Web UI

We will follow the instructions on the official Ollama Web UI GitHub Repository to install it without Docker. It recommends Node.js >= 20.10 as a minimum, so we will follow that. It also recommends Python to be at least 3.11, but Raspbian OS already has that installed for us.

We have to install Node.js first. In the terminal, run

curl -fsSL https://deb.nodesource.com/setup_20.x | sudo -E bash - &&

sudo apt-get install -y nodejs

Future readers: change the 20.x to a more appropriate version if need be.

Then run the code block below.

git clone https://github.com/ollama-webui/ollama-webui.git

cd ollama-webui/

# Copying required .env file

cp -RPp example.env .env

# Building Frontend Using Node

npm i

npm run build

# Serving Frontend with the Backend

cd ./backend

pip install -r requirements.txt --break-system-packages

sh start.sh

It’s a slight modification of what is provided on GitHub. Do take note that for simplicity and brevity we are not following best practices like using virtual environments, and we are using the --break-system-packages flag. If you encounter an error like uvicorn not being found, restart the terminal session.

If all goes correctly, you should be able to access Ollama Web UI on port 8080 through http://0.0.0.0:8080 on the Raspberry Pi, or through http://<Raspberry Pi’s local address>:8080/ if you are accessing it from another device on the same network.

Once you’ve created an account and logged in, you should see something similar to the image below.

If you had downloaded some model weights earlier, you should see them in the dropdown menu like below. If not, you can go to the settings to download a model.

The entire interface is very clean and intuitive, so I won’t explain much about it. It’s truly a very well-done open-source project.

4. Running VLMs through Ollama Web UI

Like I mentioned at the start of this article, we can also run VLMs. Let’s run LLaVA, a popular open-source VLM which also happens to be supported by Ollama. To do so, download the weights by pulling ‘llava’ through the interface.

Unfortunately, unlike LLMs, it takes quite some time for the setup to interpret the image on the Raspberry Pi. The example below took around 6 minutes to be processed. The bulk of the time is probably because the image side of things is not properly optimised yet, but this will definitely change in the future. The token generation speed is around 2 tokens/second.
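The same kind of image query can also be sent to Ollama directly rather than through the web interface. The Python sketch below only builds the request body; it assumes that `/api/generate` accepts an `images` list of base64-encoded images for multimodal models such as `llava`, and the function name is hypothetical.

```python
import base64
import json


def build_llava_payload(image_bytes, prompt, model="llava"):
    """Build the JSON body for an image question sent to /api/generate."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Assumption: images are passed as a list of base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,
    }
    return json.dumps(payload).encode("utf-8")
```

You would read an image file in binary mode, pass its bytes and a question such as "What is in this picture?" to this function, and POST the result to port 11434 on the Pi. Given the processing time mentioned above, use a timeout of several minutes.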

To wrap it all up

At this point we are pretty much done with the goals of this article. To recap, we’ve managed to use Ollama and Ollama Web UI to run LLMs and VLMs like Phi-2, Mistral, and LLaVA on the Raspberry Pi.

I can definitely imagine quite a few use cases for locally hosted LLMs running on the Raspberry Pi (or another small edge device), especially since 4 tokens/second does seem like an acceptable speed with streaming for some use cases if we are going for models around the size of Phi-2.

The field of ‘small’ LLMs and VLMs, somewhat paradoxically named given their ‘large’ designation, is an active area of research with quite a few model releases recently. Hopefully this emerging trend continues, and more efficient and compact models continue to get released! Definitely something to keep an eye on in the coming months.

Disclaimer: I have no affiliation with Ollama or Ollama Web UI. All views and opinions are my own and do not represent any organisation.