Meet MosaicBERT: A BERT-Fashion Encoder Structure and Coaching Recipe that’s Empirically Optimized for Quick Pretraining

BERT is a language mannequin which was launched by Google in 2018. It’s based mostly on the transformer structure and is thought for its vital enchancment over earlier state-of-the-art fashions. As such, it has been the powerhouse of quite a few pure language processing (NLP) functions since its inception, and even within the age of huge language fashions (LLMs), BERT-style encoder fashions are utilized in duties like vector embeddings and retrieval augmented technology (RAG). Nonetheless, up to now half a decade, many vital developments have been made with different forms of architectures and coaching configurations which have but to be integrated into BERT.

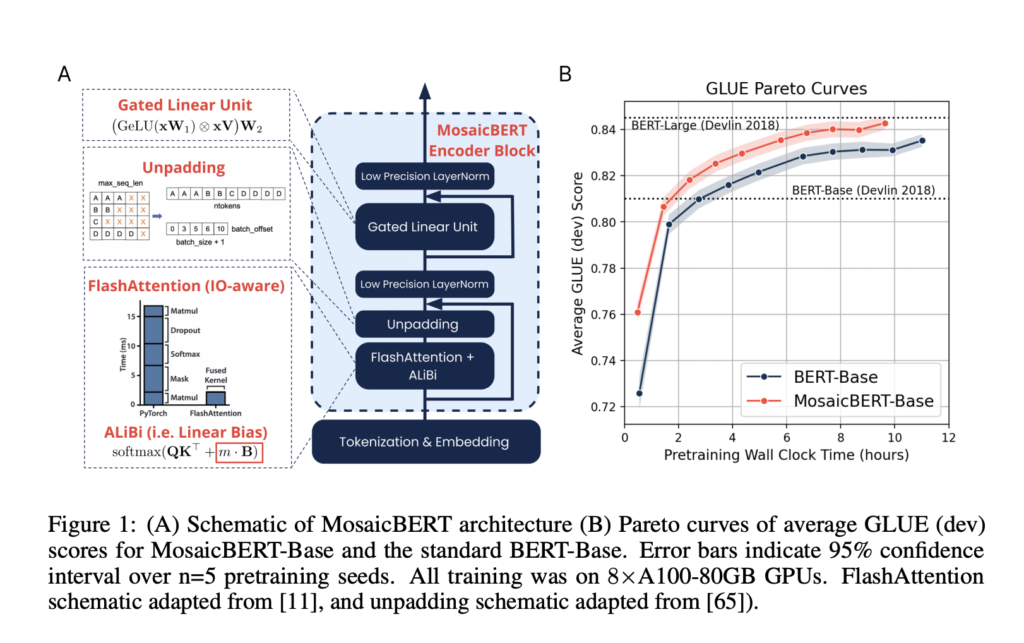

On this analysis paper, the authors have proven that velocity optimizations could be integrated into the BERT structure and coaching recipe. For this, they’ve launched an optimized framework referred to as MosaicBERT that improves the pretraining velocity and accuracy of the basic BERT structure, which has traditionally been computationally costly to coach.

To construct MosaicBERT, the researchers used totally different architectural decisions resembling FlashAttention, ALiBi, coaching with dynamic unpadding, low-precision LayerNorm, and Gated Linear Items.

- The flashAttention layer reduces the variety of learn/write operations between the GPU’s long-term and short-term reminiscence.

- ALiBi encodes place data by way of the eye operation, eliminating the place embeddings and performing as an oblique speedup methodology.

- The researchers modified the LayerNorm modules to run in bfloat16 precision as an alternative of float32, which reduces the quantity of information that must be loaded from reminiscence from 4 bytes per aspect to 2 bytes.

- Lastly, the Gated Linear Items improves the Pareto efficiency throughout all timescales.

The researchers pretrained BERT-Base and MosaicBERT-Base for 70,000 steps of batch measurement 4096 after which finetuned them on the GLUE benchmark suite. BERT-Base reached a median GLUE rating of 83.2% in 11.5 hours, whereas MosaicBERT achieved the identical accuracy in round 4.6 hours on the identical {hardware}, highlighting the numerous speedup. MosaicBERT additionally outperforms the BERT mannequin in 4 out of eight GLUE duties throughout the coaching period.

The massive variant of MosaicBERT additionally had a major speedup over the BERT variant, attaining a median GLUE rating of 83.2 in 15.85 hours in comparison with 23.35 hours taken by BERT-Massive. Each the variants of MosaicBERT are Pareto Optimum relative to the corresponding BERT fashions. The outcomes additionally present that the efficiency of BERT-Massive surpasses the bottom mannequin solely after in depth coaching.

In conclusion, the authors of this analysis paper have improved the pretraining velocity and accuracy of the BERT mannequin utilizing a mix of architectural decisions resembling FlashAttention, ALiBi, low-precision LayerNorm, and Gated Linear Items. Each the mannequin variants had a major speedup in comparison with their BERT counterparts by attaining the identical GLUE rating in much less time on the identical {hardware}. The authors hope their work will assist researchers pre-train BERT fashions quicker and cheaper, in the end enabling them to construct higher fashions.

Try the Paper. All credit score for this analysis goes to the researchers of this undertaking. Additionally, don’t overlook to comply with us on Twitter. Be a part of our 35k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its recognition amongst audiences.