Google DeepMind Researchers Propose Chain of Code (CoC): A Simple Yet Surprisingly Effective Extension that Improves Language Model (LM) Code-Driven Reasoning

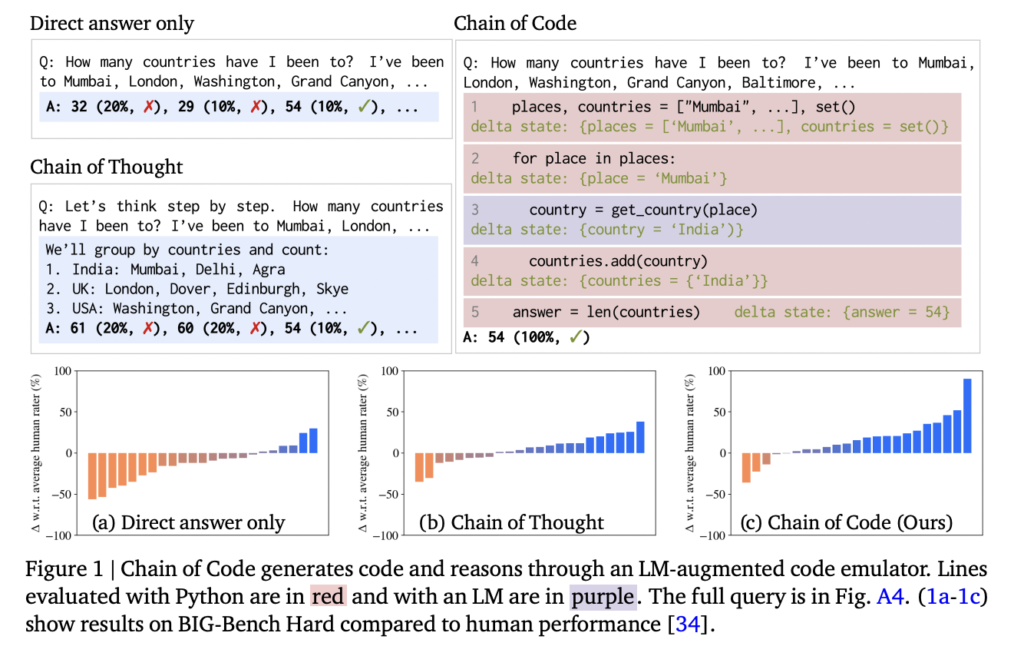

Researchers from Google DeepMind, Stanford University, and the University of California, Berkeley have developed Chain of Code (CoC), a method for improving the code-driven reasoning of language models. Chain of Code encourages LMs to format semantic sub-tasks in a program as flexible pseudocode. The interpreter can then explicitly catch undefined behaviors and hand them off to be simulated by an LM (acting as an “LMulator”). CoC scales well with both large and small models and broadens the range of reasoning questions that LMs can answer correctly by thinking in code.

Prior work such as Chain of Thought, least-to-most prompting, and ScratchPad has used prompting to improve reasoning, either by breaking tasks down into intermediate steps or by maintaining a trace of intermediate results. LMs trained on GitHub have also been prompted to write and execute code, which helps solve complex questions involving numeric or symbolic reasoning.

To solve a given problem, CoC generates reasoning substeps in the structure of code. This code provides the framework for reasoning through the problem and may take the form of explicit code, pseudocode, or natural language. CoC enables the use of code in entirely new regimes by combining the advantages of code with the powerful semantic and commonsense knowledge of LMs, which can easily express rules that are difficult to express in code (e.g., which foods are fruits?).
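As a hypothetical illustration (the question, the variable names, and the `is_fruit` helper are invented here, not taken from the paper), a CoC-style program might combine ordinary counting logic with a semantic sub-task that Python cannot evaluate on its own. Running it with the standard interpreter shows where a hand-off to the LM would happen: execution stops with a `NameError` at the first call to the undefined `is_fruit`, while the variables set before that point remain available.

```python
# Hypothetical question: "I have an apple, a carrot, two bananas, and a peach.
# How many fruits do I have?"
# The program mixes ordinary Python (a dict, a loop, a counter) with a
# semantic sub-task, is_fruit(), that has no Python definition.
program = """
items = {"apple": 1, "carrot": 1, "banana": 2, "peach": 1}
num_fruits = 0
for item, count in items.items():
    if is_fruit(item):  # commonsense check left for the language model
        num_fruits += count
answer = num_fruits
"""

state = {}
try:
    exec(program, state)
except NameError as err:
    # The interpreter stops at the first undefined behavior; everything
    # assigned before that point is still recorded in `state`.
    print("Handing off to the LM:", err)
    print("State so far:", state["items"], state["num_fruits"])
```

In this sketch, `answer` is never assigned by the interpreter; deciding whether each item is a fruit is exactly the part CoC would delegate to the language model.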

A core contribution of CoC is not just the generation of reasoning code but the way it is executed. Once the code is written, a code interpreter attempts to run it; in this work, the researchers use Python, but the approach applies to any interpreter. If the code executes successfully, the program state is updated and execution continues. If the code is not executable or raises an exception, the language model is used instead to simulate the execution. The language model's outputs update the program state, and execution continues.
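The execute-or-simulate loop described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not the authors' implementation: the program is given as a list of single-statement lines, and `fake_lmulator` is a hypothetical stand-in for an LM call. Instead of prompting a model, it fakes commonsense knowledge with a hardcoded `FRUITS` set so the sketch runs offline.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]

# Hypothetical "knowledge" used only by the fake LMulator below.
FRUITS = {"apple", "banana", "peach"}


def fake_lmulator(line: str, state: State) -> State:
    """Hypothetical stand-in for an LM call.

    A real LMulator would prompt a language model with the line and the
    current program state and parse its predicted variable values. Here we
    emulate that by running the line with a hardcoded is_fruit helper.
    """
    scratch = dict(state)
    scratch["is_fruit"] = lambda name: name in FRUITS
    exec(line, scratch)
    # Return only program variables, not the helper or the builtins module.
    return {k: v for k, v in scratch.items() if k not in ("is_fruit", "__builtins__")}


def run_chain_of_code(lines: List[str], lmulator: Callable[[str, State], State]) -> State:
    """Run each line with Python; on any exception, let the LMulator simulate it."""
    state: State = {}
    for line in lines:
        try:
            exec(line, state)  # 1) try the real interpreter
        except Exception:
            state.update(lmulator(line, state))  # 2) fall back to simulation
    state.pop("__builtins__", None)  # drop the entry exec() adds
    return state


program = [
    'items = ["apple", "carrot", "banana", "banana", "peach"]',
    'fruits = [x for x in items if is_fruit(x)]  # semantic: no Python definition',
    'answer = len(fruits)',
]
final_state = run_chain_of_code(program, fake_lmulator)
print(final_state["answer"])  # 4
```

Executing one statement at a time keeps the hand-off simple: whenever `exec` raises, the same line and the current variables go to the LMulator, and its predicted values are merged back into the shared state before the next line runs. Programs with loops or branches that span several lines would need finer-grained control than this sketch provides.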

Overall, CoC outperforms the other methods, exceeding the human baseline both in the number of tasks on which it does so and in the overall margin by which it exceeds the baseline. CoC achieves state-of-the-art performance across several benchmarks. Like Chain of Thought prompting, its performance improves as model size increases. Cross-task prompting leads to a drop in performance for all methods, but CoC still outperforms Chain of Thought and direct prompting at scale, approaching average human performance.

CoC is an approach to reasoning with language models by writing code and executing it, either with an interpreter or, when the code is not executable, with a language model that simulates the execution. CoC can leverage both the expressive structure of code and its powerful tools. Beyond this, by simulating the execution of non-executable code, CoC can be applied to problems nominally outside the scope of code (e.g., semantic reasoning problems).

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.