Construct belief and security for generative AI purposes with Amazon Comprehend and LangChain

We’re witnessing a speedy enhance within the adoption of enormous language fashions (LLM) that energy generative AI purposes throughout industries. LLMs are able to quite a lot of duties, resembling producing inventive content material, answering inquiries through chatbots, producing code, and extra.

Organizations trying to make use of LLMs to energy their purposes are more and more cautious about information privateness to make sure belief and security is maintained inside their generative AI purposes. This contains dealing with prospects’ personally identifiable info (PII) information correctly. It additionally contains stopping abusive and unsafe content material from being propagated to LLMs and checking that information generated by LLMs follows the identical ideas.

On this submit, we focus on new options powered by Amazon Comprehend that allow seamless integration to make sure information privateness, content material security, and immediate security in new and current generative AI purposes.

Amazon Comprehend is a pure language processing (NLP) service that makes use of machine studying (ML) to uncover info in unstructured information and textual content inside paperwork. On this submit, we focus on why belief and security with LLMs matter in your workloads. We additionally delve deeper into how these new moderation capabilities are utilized with the favored generative AI improvement framework LangChain to introduce a customizable belief and security mechanism in your use case.

Why belief and security with LLMs matter

Belief and security are paramount when working with LLMs attributable to their profound influence on a variety of purposes, from buyer help chatbots to content material era. As these fashions course of huge quantities of knowledge and generate humanlike responses, the potential for misuse or unintended outcomes will increase. Guaranteeing that these AI programs function inside moral and dependable boundaries is essential, not only for the status of companies that make the most of them, but additionally for preserving the belief of end-users and prospects.

Furthermore, as LLMs turn into extra built-in into our each day digital experiences, their affect on our perceptions, beliefs, and choices grows. Guaranteeing belief and security with LLMs goes past simply technical measures; it speaks to the broader duty of AI practitioners and organizations to uphold moral requirements. By prioritizing belief and security, organizations not solely shield their customers, but additionally guarantee sustainable and accountable development of AI in society. It might additionally assist to cut back threat of producing dangerous content material, and assist adhere to regulatory necessities.

Within the realm of belief and security, content material moderation is a mechanism that addresses varied facets, together with however not restricted to:

- Privateness – Customers can inadvertently present textual content that accommodates delicate info, jeopardizing their privateness. Detecting and redacting any PII is important.

- Toxicity – Recognizing and filtering out dangerous content material, resembling hate speech, threats, or abuse, is of utmost significance.

- Consumer intention – Figuring out whether or not the consumer enter (immediate) is protected or unsafe is crucial. Unsafe prompts can explicitly or implicitly categorical malicious intent, resembling requesting private or non-public info and producing offensive, discriminatory, or unlawful content material. Prompts might also implicitly categorical or request recommendation on medical, authorized, political, controversial, private, or monetary

Content material moderation with Amazon Comprehend

On this part, we focus on the advantages of content material moderation with Amazon Comprehend.

Addressing privateness

Amazon Comprehend already addresses privateness by its current PII detection and redaction talents through the DetectPIIEntities and ContainsPIIEntities APIs. These two APIs are backed by NLP fashions that may detect numerous PII entities resembling Social Safety numbers (SSNs), bank card numbers, names, addresses, telephone numbers, and so forth. For a full listing of entities, consult with PII universal entity types. DetectPII additionally supplies character-level place of the PII entity inside a textual content; for instance, the beginning character place of the NAME entity (John Doe) within the sentence “My identify is John Doe” is 12, and the top character place is nineteen. These offsets can be utilized to carry out masking or redaction of the values, thereby lowering dangers of personal information propagation into LLMs.

Addressing toxicity and immediate security

Immediately, we’re asserting two new Amazon Comprehend options within the type of APIs: Toxicity detection through the DetectToxicContent API, and immediate security classification through the ClassifyDocument API. Word that DetectToxicContent is a brand new API, whereas ClassifyDocument is an current API that now helps immediate security classification.

Toxicity detection

With Amazon Comprehend toxicity detection, you may determine and flag content material that could be dangerous, offensive, or inappropriate. This functionality is especially precious for platforms the place customers generate content material, resembling social media websites, boards, chatbots, remark sections, and purposes that use LLMs to generate content material. The first purpose is to take care of a constructive and protected surroundings by stopping the dissemination of poisonous content material.

At its core, the toxicity detection mannequin analyzes textual content to find out the chance of it containing hateful content material, threats, obscenities, or different types of dangerous textual content. The mannequin is educated on huge datasets containing examples of each poisonous and unhazardous content material. The toxicity API evaluates a given piece of textual content to offer toxicity classification and confidence rating. Generative AI purposes can then use this info to take acceptable actions, resembling stopping the textual content from propagating to LLMs. As of this writing, the labels detected by the toxicity detection API are HATE_SPEECH, GRAPHIC, HARRASMENT_OR_ABUSE, SEXUAL, VIOLENCE_OR_THREAT, INSULT, and PROFANITY. The next code demonstrates the API name with Python Boto3 for Amazon Comprehend toxicity detection:

Immediate security classification

Immediate security classification with Amazon Comprehend helps classify an enter textual content immediate as protected or unsafe. This functionality is essential for purposes like chatbots, digital assistants, or content material moderation instruments the place understanding the security of a immediate can decide responses, actions, or content material propagation to LLMs.

In essence, immediate security classification analyzes human enter for any specific or implicit malicious intent, resembling requesting private or non-public info and era of offensive, discriminatory, or unlawful content material. It additionally flags prompts in search of recommendation on medical, authorized, political, controversial, private, or monetary topics. Immediate classification returns two lessons, UNSAFE_PROMPT and SAFE_PROMPT, for an related textual content, with an related confidence rating for every. The arrogance rating ranges between 0–1 and mixed will sum as much as 1. As an illustration, in a buyer help chatbot, the textual content “How do I reset my password?” indicators an intent to hunt steering on password reset procedures and is labeled as SAFE_PROMPT. Equally, a press release like “I want one thing dangerous occurs to you” could be flagged for having a probably dangerous intent and labeled as UNSAFE_PROMPT. It’s essential to notice that immediate security classification is primarily targeted on detecting intent from human inputs (prompts), reasonably than machine-generated textual content (LLM outputs). The next code demonstrates methods to entry the immediate security classification function with the ClassifyDocument API:

Word that endpoint_arn within the previous code is an AWS-provided Amazon Resource Number (ARN) of the sample arn:aws:comprehend:<area>:aws:document-classifier-endpoint/prompt-safety, the place <area> is the AWS Area of your alternative the place Amazon Comprehend is available.

To exhibit these capabilities, we constructed a pattern chat utility the place we ask an LLM to extract PII entities resembling deal with, telephone quantity, and SSN from a given piece of textual content. The LLM finds and returns the suitable PII entities, as proven within the picture on the left.

With Amazon Comprehend moderation, we are able to redact the enter to the LLM and output from the LLM. Within the picture on the proper, the SSN worth is allowed to be handed to the LLM with out redaction. Nonetheless, any SSN worth within the LLM’s response is redacted.

The next is an instance of how a immediate containing PII info could be prevented from reaching the LLM altogether. This instance demonstrates a consumer asking a query that accommodates PII info. We use Amazon Comprehend moderation to detect PII entities within the immediate and present an error by interrupting the circulate.

The previous chat examples showcase how Amazon Comprehend moderation applies restrictions on information being despatched to an LLM. Within the following sections, we clarify how this moderation mechanism is applied utilizing LangChain.

Integration with LangChain

With the infinite potentialities of the applying of LLMs into varied use circumstances, it has turn into equally essential to simplify the event of generative AI purposes. LangChain is a well-liked open supply framework that makes it easy to develop generative AI purposes. Amazon Comprehend moderation extends the LangChain framework to supply PII identification and redaction, toxicity detection, and immediate security classification capabilities through AmazonComprehendModerationChain.

AmazonComprehendModerationChain is a customized implementation of the LangChain base chain interface. Because of this purposes can use this chain with their very own LLM chains to use the specified moderation to the enter immediate in addition to to the output textual content from the LLM. Chains could be constructed by merging quite a few chains or by mixing chains with different parts. You should utilize AmazonComprehendModerationChain with different LLM chains to develop advanced AI purposes in a modular and versatile method.

To elucidate it additional, we offer a couple of samples within the following sections. The supply code for the AmazonComprehendModerationChain implementation could be discovered throughout the LangChain open source repository. For full documentation of the API interface, consult with the LangChain API documentation for the Amazon Comprehend moderation chain. Utilizing this moderation chain is so simple as initializing an occasion of the category with default configurations:

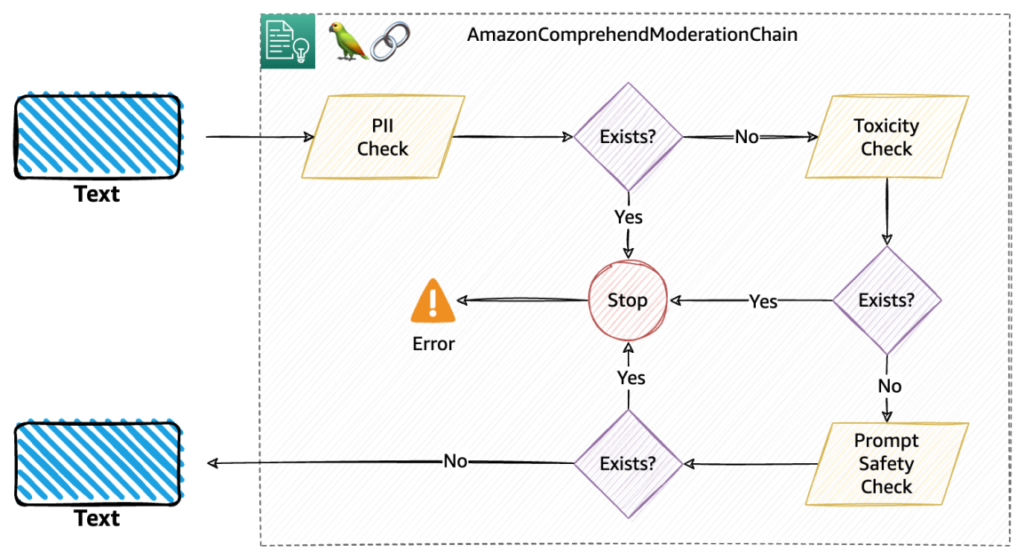

Behind the scenes, the moderation chain performs three consecutive moderation checks, particularly PII, toxicity, and immediate security, as defined within the following diagram. That is the default circulate for the moderation.

The next code snippet reveals a easy instance of utilizing the moderation chain with the Amazon FalconLite LLM (which is a quantized model of the Falcon 40B SFT OASST-TOP1 model) hosted in Hugging Face Hub:

Within the previous instance, we increase our chain with comprehend_moderation for each textual content going into the LLM and textual content generated by the LLM. This can carry out default moderation that may verify PII, toxicity, and immediate security classification in that sequence.

Customise your moderation with filter configurations

You should utilize the AmazonComprehendModerationChain with particular configurations, which provides you the flexibility to regulate what moderations you want to carry out in your generative AI–based mostly utility. On the core of the configuration, you’ve gotten three filter configurations out there.

- ModerationPiiConfig – Used to configure PII filter.

- ModerationToxicityConfig – Used to configure poisonous content material filter.

- ModerationIntentConfig – Used to configure intent filter.

You should utilize every of those filter configurations to customise the habits of how your moderations behave. Every filter’s configurations have a couple of widespread parameters, and a few distinctive parameters, that they are often initialized with. After you outline the configurations, you utilize the BaseModerationConfig class to outline the sequence by which the filters should apply to the textual content. For instance, within the following code, we first outline the three filter configurations, and subsequently specify the order by which they have to apply:

Let’s dive just a little deeper to grasp what this configuration achieves:

- First, for the toxicity filter, we specified a threshold of 0.6. Because of this if the textual content accommodates any of the out there poisonous labels or entities with a rating higher than the edge, the entire chain will probably be interrupted.

- If there is no such thing as a poisonous content material discovered within the textual content, a PII verify is On this case, we’re enthusiastic about checking if the textual content accommodates SSN values. As a result of the

redactparameter is about toTrue, the chain will masks the detected SSN values (if any) the place the SSN entitiy’s confidence rating is larger than or equal to 0.5, with the masks character specified (X). Ifredactis about toFalse, the chain will probably be interrupted for any SSN detected. - Lastly, the chain performs immediate security classification, and can cease the content material from propagating additional down the chain if the content material is classed with

UNSAFE_PROMPTwith a confidence rating of higher than or equal to 0.8.

The next diagram illustrates this workflow.

In case of interruptions to the moderation chain (on this instance, relevant for the toxicity and immediate security classification filters), the chain will elevate a Python exception, primarily stopping the chain in progress and permitting you to catch the exception (in a try-catch block) and carry out any related motion. The three potential exception varieties are:

ModerationPIIErrorModerationToxicityErrorModerationPromptSafetyError

You’ll be able to configure one filter or a couple of filter utilizing BaseModerationConfig. It’s also possible to have the identical sort of filter with totally different configurations throughout the similar chain. For instance, in case your use case is simply involved with PII, you may specify a configuration that should interrupt the chain if in case an SSN is detected; in any other case, it should carry out redaction on age and identify PII entities. A configuration for this may be outlined as follows:

Utilizing callbacks and distinctive identifiers

If you happen to’re aware of the idea of workflows, you might also be aware of callbacks. Callbacks inside workflows are unbiased items of code that run when sure circumstances are met throughout the workflow. A callback can both be blocking or nonblocking to the workflow. LangChain chains are, in essence, workflows for LLMs. AmazonComprehendModerationChain means that you can outline your personal callback features. Initially, the implementation is restricted to asynchronous (nonblocking) callback features solely.

This successfully implies that for those who use callbacks with the moderation chain, they are going to run independently of the chain’s run with out blocking it. For the moderation chain, you get choices to run items of code, with any enterprise logic, after every moderation is run, unbiased of the chain.

It’s also possible to optionally present an arbitrary distinctive identifier string when creating an AmazonComprehendModerationChain to allow logging and analytics later. For instance, for those who’re working a chatbot powered by an LLM, chances are you’ll need to monitor customers who’re constantly abusive or are intentionally or unknowingly exposing private info. In such circumstances, it turns into essential to trace the origin of such prompts and maybe retailer them in a database or log them appropriately for additional motion. You’ll be able to cross a novel ID that distinctly identifies a consumer, resembling their consumer identify or electronic mail, or an utility identify that’s producing the immediate.

The mix of callbacks and distinctive identifiers supplies you with a strong option to implement a moderation chain that matches your use case in a way more cohesive method with much less code that’s simpler to take care of. The callback handler is obtainable through the BaseModerationCallbackHandler, with three out there callbacks: on_after_pii(), on_after_toxicity(), and on_after_prompt_safety(). Every of those callback features known as asynchronously after the respective moderation verify is carried out throughout the chain. These features additionally obtain two default parameters:

- moderation_beacon – A dictionary containing particulars such because the textual content on which the moderation was carried out, the total JSON output of the Amazon Comprehend API, the kind of moderation, and if the provided labels (within the configuration) had been discovered throughout the textual content or not

- unique_id – The distinctive ID that you just assigned whereas initializing an occasion of the

AmazonComprehendModerationChain.

The next is an instance of how an implementation with callback works. On this case, we outlined a single callback that we would like the chain to run after the PII verify is carried out:

We then use the my_callback object whereas initializing the moderation chain and likewise cross a unique_id. You might use callbacks and distinctive identifiers with or with out a configuration. While you subclass BaseModerationCallbackHandler, it’s essential to implement one or all the callback strategies relying on the filters you propose to make use of. For brevity, the next instance reveals a approach to make use of callbacks and unique_id with none configuration:

The next diagram explains how this moderation chain with callbacks and distinctive identifiers works. Particularly, we applied the PII callback that ought to write a JSON file with the information out there within the moderation_beacon and the unique_id handed (the consumer’s electronic mail on this case).

Within the following Python notebook, we’ve got compiled a couple of alternative ways you may configure and use the moderation chain with varied LLMs, resembling LLMs hosted with Amazon SageMaker JumpStart and hosted in Hugging Face Hub. Now we have additionally included the pattern chat utility that we mentioned earlier with the next Python notebook.

Conclusion

The transformative potential of enormous language fashions and generative AI is plain. Nonetheless, their accountable and moral use hinges on addressing considerations of belief and security. By recognizing the challenges and actively implementing measures to mitigate dangers, builders, organizations, and society at giant can harness the advantages of those applied sciences whereas preserving the belief and security that underpin their profitable integration. Use Amazon Comprehend ContentModerationChain so as to add belief and security options to any LLM workflow, together with Retrieval Augmented Technology (RAG) workflows applied in LangChain.

For info on constructing RAG based mostly options utilizing LangChain and Amazon Kendra’s extremely correct, machine studying (ML)-powered intelligent search, see – Quickly build high-accuracy Generative AI applications on enterprise data using Amazon Kendra, LangChain, and large language models. As a subsequent step, consult with the code samples we created for utilizing Amazon Comprehend moderation with LangChain. For full documentation of the Amazon Comprehend moderation chain API, consult with the LangChain API documentation.

In regards to the authors

Wrick Talukdar is a Senior Architect with the Amazon Comprehend Service staff. He works with AWS prospects to assist them undertake machine studying on a big scale. Outdoors of labor, he enjoys studying and pictures.

Wrick Talukdar is a Senior Architect with the Amazon Comprehend Service staff. He works with AWS prospects to assist them undertake machine studying on a big scale. Outdoors of labor, he enjoys studying and pictures.

Anjan Biswas is a Senior AI Providers Options Architect with a give attention to AI/ML and Knowledge Analytics. Anjan is a part of the world-wide AI companies staff and works with prospects to assist them perceive and develop options to enterprise issues with AI and ML. Anjan has over 14 years of expertise working with world provide chain, manufacturing, and retail organizations, and is actively serving to prospects get began and scale on AWS AI companies.

Anjan Biswas is a Senior AI Providers Options Architect with a give attention to AI/ML and Knowledge Analytics. Anjan is a part of the world-wide AI companies staff and works with prospects to assist them perceive and develop options to enterprise issues with AI and ML. Anjan has over 14 years of expertise working with world provide chain, manufacturing, and retail organizations, and is actively serving to prospects get began and scale on AWS AI companies.

Nikhil Jha is a Senior Technical Account Supervisor at Amazon Internet Providers. His focus areas embrace AI/ML, and analytics. In his spare time, he enjoys enjoying badminton together with his daughter and exploring the outside.

Nikhil Jha is a Senior Technical Account Supervisor at Amazon Internet Providers. His focus areas embrace AI/ML, and analytics. In his spare time, he enjoys enjoying badminton together with his daughter and exploring the outside.

Chin Rane is an AI/ML Specialist Options Architect at Amazon Internet Providers. She is enthusiastic about utilized arithmetic and machine studying. She focuses on designing clever doc processing options for AWS prospects. Outdoors of labor, she enjoys salsa and bachata dancing.

Chin Rane is an AI/ML Specialist Options Architect at Amazon Internet Providers. She is enthusiastic about utilized arithmetic and machine studying. She focuses on designing clever doc processing options for AWS prospects. Outdoors of labor, she enjoys salsa and bachata dancing.