Empower your business users to extract insights from company documents using Amazon SageMaker Canvas Generative AI

Enterprises seek to harness the potential of machine learning (ML) to solve complex problems and improve outcomes. Until recently, building and deploying ML models required deep technical and coding skills, including tuning ML models and maintaining operational pipelines. Since its introduction in 2021, Amazon SageMaker Canvas has enabled business analysts to build, deploy, and use a variety of ML models (including tabular, computer vision, and natural language processing models) without writing a line of code. This has accelerated the ability of enterprises to apply ML to use cases such as time-series forecasting, customer churn prediction, sentiment analysis, industrial defect detection, and many others.

As announced on October 5, 2023, SageMaker Canvas expanded its support of models to foundation models (FMs), large language models used to generate and summarize content. With the October 12, 2023 release, SageMaker Canvas lets users ask questions and get responses that are grounded in their enterprise data. This ensures that results are context-specific, opening up additional use cases where no-code ML can be applied to solve business problems. For example, business teams can now formulate responses consistent with an organization’s specific vocabulary and tenets, and can more quickly query lengthy documents to get responses that are specific to, and grounded in, the contents of those documents. All of this is done in a private and secure manner, ensuring that all sensitive data is accessed with proper governance and safeguards.

To get started, a cloud administrator configures and populates Amazon Kendra indexes with enterprise data as data sources for SageMaker Canvas. Canvas users select the index where their documents are, and can ideate, research, and explore knowing that the output will always be backed by their sources of truth. SageMaker Canvas uses state-of-the-art FMs from Amazon Bedrock and Amazon SageMaker JumpStart. Conversations can be started with multiple FMs side by side, comparing the outputs and truly making generative AI accessible to everyone.

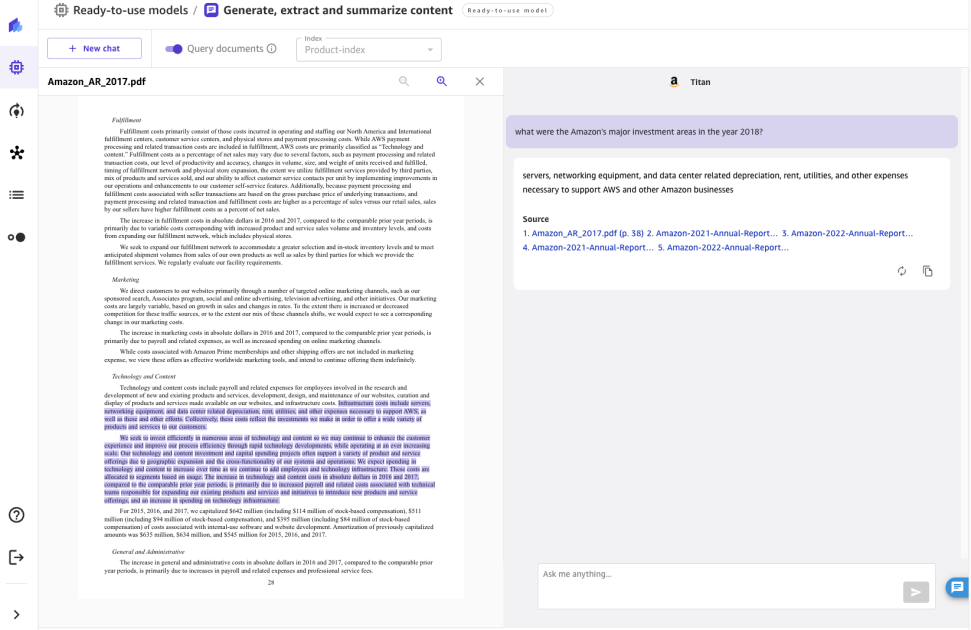

In this post, we review the recently released feature, discuss the architecture, and present a step-by-step guide to enable SageMaker Canvas to query documents from your knowledge base, as shown in the preceding screen capture.

Solution overview

Foundation models can produce hallucinations: responses that are generic, vague, unrelated, or factually incorrect. Retrieval Augmented Generation (RAG) is a frequently used approach to reduce hallucinations. RAG architectures retrieve data from outside of an FM, which is then used to perform in-context learning to answer the user’s query. This ensures that the FM can draw on data from a trusted knowledge base to answer users’ questions, reducing the risk of hallucination.

With RAG, the data external to the FM and used to augment user prompts can come from multiple disparate data sources, such as document repositories, databases, or APIs. The first step is to convert your documents and any user queries into a compatible format to perform a relevancy (semantic) search. To make the formats compatible, a document collection, or knowledge library, and user-submitted queries are converted into numerical representations using embedding models.
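To make the idea of comparing queries and documents as numerical representations concrete, here is a minimal sketch. It assumes a toy bag-of-words vector in place of a real embedding model, so it only matches on shared words rather than on meaning; the document names and texts are hypothetical, and this is not how SageMaker Canvas or Amazon Kendra implement search internally.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query: str, documents: Dict[str, str]) -> List[Tuple[str, float]]:
    """Score every document against the query, most similar first."""
    query_vector = embed(query)
    scored = [
        (name, cosine_similarity(query_vector, embed(text)))
        for name, text in documents.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical knowledge library, for illustration only.
DOCUMENTS = {
    "travel-policy.txt": "Employees must book travel through the approved travel portal.",
    "expense-policy.txt": "Expense reports are due within 30 days of purchase.",
    "security-policy.txt": "Laptops must use full disk encryption at all times.",
}

ranking = rank_documents("When are expense reports due?", DOCUMENTS)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

A real embedding model would map semantically similar text to nearby vectors even when no words are shared, which is what makes the search semantic rather than purely lexical.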

With this release, RAG functionality is provided in a no-code and seamless manner. Enterprises can enrich the chat experience in Canvas with Amazon Kendra as the underlying knowledge management system. The following diagram illustrates the solution architecture.

Connecting SageMaker Canvas to Amazon Kendra requires a one-time setup. We describe the setup process in detail in Setting up Canvas to query documents. If you haven’t already set up your SageMaker Domain, refer to Onboard to Amazon SageMaker Domain.

As part of the domain configuration, a cloud administrator can choose one or more Kendra indices that the business analyst can query when interacting with the FM through SageMaker Canvas.

After the Kendra indices are hydrated and configured, business analysts use them with SageMaker Canvas by starting a new chat and selecting the “Query Documents” toggle. SageMaker Canvas then manages the underlying communication between Amazon Kendra and the FM of choice to perform the following operations:

- Query the Kendra indices with the question coming from the user.

- Retrieve the snippets (and the sources) from Kendra indices.

- Engineer the prompt by combining the snippets with the original query so that the foundation model can generate an answer from the retrieved documents.

- Provide the generated answer to the user, along with references to the pages/documents that were used to formulate the response.
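The four operations above can be sketched as a small retrieve-then-generate loop. This is an illustration under stated assumptions, not Canvas’s actual implementation: the retriever and the FM are passed in as plain callables, the stub functions and sample data are hypothetical, and the prompt template is invented for the example. In a real deployment the retriever would call Amazon Kendra and the generator would call an FM hosted on Amazon Bedrock or SageMaker JumpStart.

```python
from typing import Callable, Dict, List

# Hypothetical snippet shape used only in this sketch.
Snippet = Dict[str, str]  # keys: "title", "uri", "text"


def build_prompt(question: str, snippets: List[Snippet]) -> str:
    """Combine retrieved snippets with the user's question (illustrative template)."""
    context = "\n".join(
        f"[{i}] {snippet['text']}" for i, snippet in enumerate(snippets, start=1)
    )
    return (
        "Answer the question using only the numbered context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer_with_sources(
    question: str,
    retrieve: Callable[[str], List[Snippet]],
    generate: Callable[[str], str],
    top_k: int = 3,
) -> Dict[str, object]:
    """Query the index, build the prompt, call the FM, and return answer plus sources."""
    snippets = retrieve(question)[:top_k]      # steps 1 and 2: query and retrieve
    prompt = build_prompt(question, snippets)  # step 3: engineer the prompt
    answer = generate(prompt)                  # FM generates from the retrieved text
    sources = [{"title": s["title"], "uri": s["uri"]} for s in snippets]
    return {"answer": answer, "sources": sources}  # step 4: answer with references


# Stubs standing in for the search index and the foundation model.
def fake_retrieve(question: str) -> List[Snippet]:
    return [
        {
            "title": "HR handbook",
            "uri": "s3://example-bucket/hr-handbook.pdf",
            "text": "Full-time employees accrue 20 vacation days per year.",
        }
    ]


def fake_generate(prompt: str) -> str:
    return "Full-time employees accrue 20 vacation days per year [1]."


result = answer_with_sources(
    "How many vacation days do I get?", fake_retrieve, fake_generate
)
print(result["answer"])
print(result["sources"])
```

Passing the retriever and generator as arguments keeps the orchestration logic independent of any particular index or model, which mirrors how a user can pick both the index and the FM for a conversation.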

Setting up Canvas to query documents

In this section, we walk you through the steps to set up Canvas to query documents served through Kendra indexes. You should have the following prerequisites:

- SageMaker Domain setup: see Onboard to Amazon SageMaker Domain

- Create a Kendra index (or more than one)

- Set up the Kendra Amazon S3 connector (follow the Amazon S3 Connector documentation) and upload PDF files and other documents to the Amazon S3 bucket associated with the Kendra index

- Set up IAM so that Canvas has the appropriate permissions, including those required for calling Amazon Bedrock and/or SageMaker endpoints: follow the Set-up Canvas Chat documentation
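To give a rough idea of what the IAM prerequisite involves, the sketch below builds an illustrative policy document in Python. It is an assumption-laden example, not the policy that Canvas requires: the selection of actions, the statement IDs, and the placeholder index ARN are all chosen for illustration, and the authoritative list of permissions is the one in the Set-up Canvas Chat documentation.

```python
import json
from typing import Dict, List


def build_illustrative_policy(kendra_index_arns: List[str]) -> Dict:
    """Build an example IAM policy document (illustrative, not the official one)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read access to the specific Kendra indexes used for chat.
                "Sid": "QueryKendraIndexes",
                "Effect": "Allow",
                "Action": ["kendra:Retrieve", "kendra:Query", "kendra:DescribeIndex"],
                "Resource": kendra_index_arns,
            },
            {
                # Listing indexes is not scoped to a single index.
                "Sid": "ListKendraIndexes",
                "Effect": "Allow",
                "Action": ["kendra:ListIndices"],
                "Resource": "*",
            },
            {
                # Assumption: narrow this to specific models/endpoints in practice.
                "Sid": "InvokeFoundationModels",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "sagemaker:InvokeEndpoint"],
                "Resource": "*",
            },
        ],
    }


# Placeholder ARN with a made-up account ID and index ID.
policy = build_illustrative_policy(
    ["arn:aws:kendra:us-east-1:111122223333:index/EXAMPLE-INDEX-ID"]
)
print(json.dumps(policy, indent=2))
```

Scoping the Kendra statement to explicit index ARNs is one way to make sure chat users can only reach the indexes the administrator intends to expose.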

Now you can update the Domain so that it can access the desired indices. On the SageMaker console, for the given Domain, select Edit under the Domain Settings tab. Enable the toggle “Enable query documents with Amazon Kendra”, which can be found at the Canvas Settings step. Once activated, choose one or more Kendra indices that you want to use with Canvas.

That’s all that’s needed to configure the Canvas Query Documents feature. Users can now jump into a chat within Canvas and start using the knowledge bases that have been attached to the Domain through the Kendra indexes. The maintainers of the knowledge base can continue to update the source of truth, and with the syncing capability in Kendra, chat users automatically get the up-to-date information in a seamless manner.

Using the Query Documents feature for chat

As a SageMaker Canvas user, you can access the Query Documents feature from within a chat. To start the chat session, click or search for the “Generate, extract and summarize content” button from the Ready-to-use models tab in SageMaker Canvas.

Once there, you can turn Query Documents on and off with the toggle at the top of the screen. Check out the information prompt to learn more about the feature.

When Query Documents is enabled, you can choose among a list of Kendra indices enabled by the cloud administrator.

You can select an index when starting a new chat. You can then ask a question in the UX, with knowledge being automatically sourced from the selected index. Note that after a conversation has started against a specific index, it is not possible to switch to another index.

For the questions asked, the chat shows the answer generated by the FM along with the source documents that contributed to generating the answer. When you click any of the source documents, Canvas opens a preview of the document, highlighting the excerpt used by the FM.

Conclusion

Conversational AI has immense potential to transform customer and employee experience by providing a human-like assistant with natural and intuitive interactions such as:

- Performing research on a topic, or searching and browsing the organization’s knowledge base

- Summarizing volumes of content to rapidly gather insights

- Searching for entities, sentiments, PII, and other useful data, and increasing the business value of unstructured content

- Generating drafts for documents and business correspondence

- Creating knowledge articles from disparate internal sources (incidents, chat logs, wikis)

The innovative integration of chat interfaces, knowledge retrieval, and FMs enables enterprises to provide accurate, relevant responses to user questions by using their domain knowledge and sources of truth.

By connecting SageMaker Canvas to knowledge bases in Amazon Kendra, organizations can keep their proprietary data within their own environment while still benefiting from the state-of-the-art natural language capabilities of FMs. With the launch of SageMaker Canvas’s Query Documents feature, we are making it easy for any enterprise to use LLMs and their enterprise knowledge as the source of truth to power a secure chat experience. All this functionality is available in a no-code format, allowing businesses to avoid handling repetitive and non-specialized tasks.

To learn more about SageMaker Canvas and how it helps make it easier for everyone to start with machine learning, check out the SageMaker Canvas announcement. Learn more about how SageMaker Canvas helps foster collaboration between data scientists and business analysts by reading the Build, Share & Deploy post. Finally, to learn how to create your own Retrieval Augmented Generation workflow, refer to SageMaker JumpStart RAG.

References

Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., Küttler, H., Lewis, M., Yih, W., Rocktäschel, T., Riedel, S., Kiela, D. (2020). Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Advances in Neural Information Processing Systems, 33, 9459-9474.

About the Authors

Davide Gallitelli is a Senior Specialist Solutions Architect for AI/ML. He is based in Brussels and works closely with customers around the globe that are looking to adopt Low-Code/No-Code Machine Learning technologies and Generative AI. He has been a developer since he was very young, starting to code at the age of 7. He started learning AI/ML at university, and has fallen in love with it since then.

Bilal Alam is an Enterprise Solutions Architect at AWS with a focus on the Financial Services industry. On most days Bilal is helping customers with building, uplifting, and securing their AWS environment to deploy their most critical workloads. He has extensive experience in Telco, networking, and software development. More recently, he has been looking into using AI/ML to solve business problems.

Pashmeen Mistry is a Senior Product Manager at AWS. Outside of work, Pashmeen enjoys adventurous hikes, photography, and spending time with his family.

Dan Sinnreich is a Senior Product Manager at AWS, helping to democratize low-code/no-code machine learning. Prior to AWS, Dan built and commercialized enterprise SaaS platforms and time-series models used by institutional investors to manage risk and construct optimal portfolios. Outside of work, he can be found playing hockey, scuba diving, and reading science fiction.
