Create a Generative AI Gateway to allow secure and compliant consumption of foundation models

In the rapidly evolving world of AI and machine learning (ML), foundation models (FMs) have shown tremendous potential for driving innovation and unlocking new use cases. However, as organizations increasingly harness the power of FMs, concerns surrounding data privacy, security, added cost, and compliance have become paramount. Regulated and compliance-oriented industries, such as financial services, healthcare and life sciences, and government institutes, face unique challenges in ensuring the secure and responsible consumption of these models. To strike a balance between agility, innovation, and adherence to standards, a robust platform becomes essential. We propose the Generative AI Gateway as a platform that gives an enterprise secure access to FMs for rapid innovation.

In this post, we define what a Generative AI Gateway is, its benefits, and how to architect one on AWS. A Generative AI Gateway can help large enterprises control, standardize, and govern FM consumption from services such as Amazon Bedrock, Amazon SageMaker JumpStart, third-party model providers (such as Anthropic and their APIs), and other model providers outside of the AWS ecosystem.

What is a Generative AI Gateway?

For traditional APIs (such as REST or gRPC), API Gateway has established itself as a design pattern that enables enterprises to standardize and control how APIs are externalized and consumed. In addition, API Registries enable centralized governance, control, and discoverability of APIs.

Similarly, Generative AI Gateway is a design pattern that aims to expand on API Gateway and Registry patterns with considerations specific to serving and consuming foundation models in large enterprise settings. For example, handling hallucinations, managing company-specific IP and EULAs (End User License Agreements), and moderating generations are new responsibilities that go beyond the scope of traditional API Gateways.

In addition to requirements specific to generative AI, the technological and regulatory landscape for foundation models is changing fast. This creates unique challenges for organizations trying to balance innovation speed and compliance. For example:

- The state of the art (SOTA) of models, architectures, and best practices is constantly changing. This means companies need loose coupling between app clients (model consumers) and model inference endpoints, which makes it easy to switch among large language model (LLM), vision, or multi-modal endpoints if needed. An abstraction layer over model inference endpoints provides such loose coupling.

- Regulatory uncertainty, especially over IP and data privacy, requires observability, monitoring, and tracing of generations. For example, if Retrieval Augmented Generation (RAG)-based applications accidentally include personally identifiable information (PII) in context, such issues need to be detected in real time. This becomes challenging if large enterprises with multiple data science teams use bespoke, distributed platforms for deploying foundation models.

Generative AI Gateway aims to solve for these new requirements while providing the same benefits as traditional API Gateways and Registries, such as centralized governance and observability, and reuse of common components.
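To make the loose coupling idea concrete, here is a minimal sketch of an abstraction layer in Python. It is an illustration only: the class `ModelAbstraction`, the alias `"summarizer"`, and the two backend functions are hypothetical names, and the backends are stand-ins that return tagged strings rather than calling real inference endpoints.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class GenerationRequest:
    model_alias: str  # logical name used by the client, not a concrete endpoint
    prompt: str


# A backend is any callable that turns a prompt into generated text.
Backend = Callable[[str], str]


def hypothetical_llm_backend(prompt: str) -> str:
    # Stand-in for a call to a real LLM inference endpoint.
    return f"[llm] {prompt}"


def hypothetical_multimodal_backend(prompt: str) -> str:
    # Stand-in for a call to a different (multi-modal) endpoint.
    return f"[multimodal] {prompt}"


class ModelAbstraction:
    """Maps logical aliases to backends so clients never see endpoints."""

    def __init__(self) -> None:
        self._routes: Dict[str, Backend] = {}

    def register(self, alias: str, backend: Backend) -> None:
        # Re-registering an alias swaps the backend without client changes.
        self._routes[alias] = backend

    def generate(self, request: GenerationRequest) -> str:
        backend = self._routes.get(request.model_alias)
        if backend is None:
            raise LookupError(f"no approved model for alias {request.model_alias!r}")
        return backend(request.prompt)


layer = ModelAbstraction()
layer.register("summarizer", hypothetical_llm_backend)
first = layer.generate(GenerationRequest("summarizer", "hello"))

# The platform team swaps the endpoint; the client request stays the same.
layer.register("summarizer", hypothetical_multimodal_backend)
second = layer.generate(GenerationRequest("summarizer", "hello"))
```

The client code that builds `GenerationRequest` is identical before and after the swap, which is the property the abstraction layer is meant to provide.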

Solution overview

Specifically, Generative AI Gateway provides the following key components:

- A model abstraction layer for approved FMs

- An API Gateway for FMs (AI Gateway)

- A playground for FMs for internal model discoverability

The following diagram illustrates the solution architecture.

For added resilience, the recommended answer will be deployed in a Multi-AZ atmosphere. The dotted traces within the previous diagram signify community boundaries, though all the answer will be deployed in a single VPC.

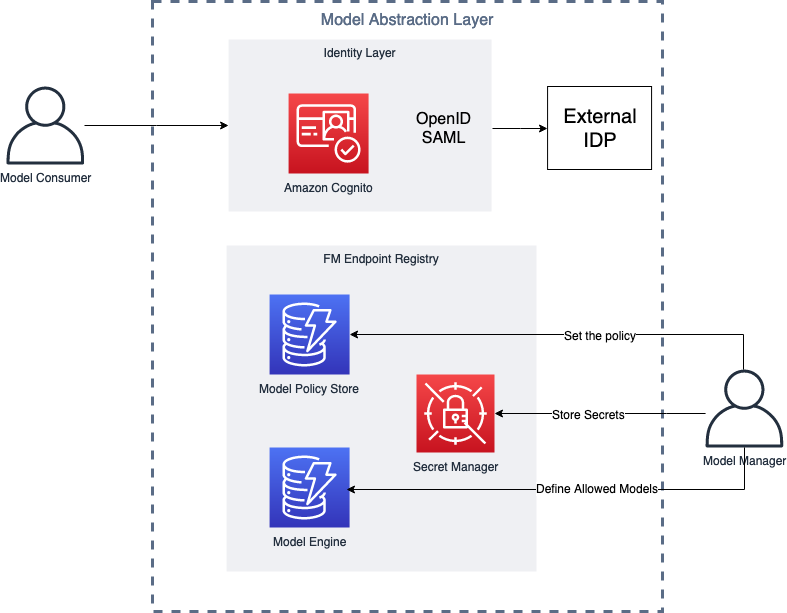

Model abstraction layer

The model abstraction layer serves as the foundation for secure and controlled access to the organization’s pool of FMs. The layer acts as a single source of truth on which models are available to the company, team, and employee, as well as how to access each model, by storing endpoint information for each model.

This layer serves as the cornerstone for secure, compliant, and agile consumption of FMs through the Generative AI Gateway, promoting responsible AI practices within the organization.

The layer itself consists of four main components:

- FM endpoint registry – After the FMs are evaluated, approved, and deployed for use, their endpoints are added to the FM endpoint registry, a centralized repository of all deployed or externally accessible API endpoints. The registry contains metadata about the generative AI service endpoints that an organization consumes, whether it’s an internally deployed FM or an externally provided generative AI API from a vendor. The metadata includes the service endpoint information for each foundation model, its configuration, and access policies (based on role, team, and so on).

- Model policy store and engine – For FMs to be consumed in a compliant manner, the model abstraction layer must track qualitative and quantitative rules for model generations. For example, some generations might be subject to certain regulations such as CCPA (California Consumer Privacy Act), which requires custom generation behavior per geo. Therefore, the policies should be country and geo aware, to ensure compliance as regulatory environments change across locales.

- Identity layer – After the models are available to be consumed, the identity layer plays a pivotal role in access management, ensuring that only authorized users or roles within the organization can interact with specific FMs through the AI Gateway. Role-based access control (RBAC) mechanisms help define granular access permissions, ensuring that users can access models based on their roles and responsibilities.

- Integration with vendor model registries – FMs can be available in different ways, either deployed in organization accounts under VPCs or available as APIs through different vendors. After passing the initial checks mentioned earlier, the endpoint registry holds the necessary information about these models from vendors and their versions exposed via APIs. This abstracts away the underlying complexities from the end-user.

To populate the AI model endpoint registry, the Generative AI Gateway team collaborates with a cross-functional team of domain experts and business line stakeholders to carefully select and onboard FMs to the platform. During this onboarding phase, factors like model performance, cost, ethical alignment, compliance with industry regulations, and the vendor’s reputation are carefully considered. By conducting thorough evaluations, organizations ensure that the selected FMs align with their specific business needs and adhere to security and privacy requirements.
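As a rough illustration of how a registry entry and a role-based check might fit together, the following sketch keeps everything in memory. The field names, model IDs, role names, and URLs are all assumptions made for this example; they are not a prescribed schema.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Iterable, List


@dataclass(frozen=True)
class RegistryEntry:
    model_id: str
    endpoint: str  # placeholder URL; real entries would hold the actual endpoint
    hosting: str   # "internal" (organization account) or "vendor" (external API)
    config: Dict[str, object] = field(default_factory=dict)
    allowed_roles: FrozenSet[str] = frozenset()


# Hypothetical registry contents, added after evaluation and approval.
REGISTRY: List[RegistryEntry] = [
    RegistryEntry(
        model_id="text-general",
        endpoint="https://example.internal/text-general",
        hosting="internal",
        config={"max_tokens": 512},
        allowed_roles=frozenset({"developer", "analyst"}),
    ),
    RegistryEntry(
        model_id="image-gen",
        endpoint="https://vendor.example.com/v1/images",
        hosting="vendor",
        allowed_roles=frozenset({"designer"}),
    ),
]


def models_for_roles(roles: Iterable[str]) -> List[RegistryEntry]:
    """Return only the entries that at least one of the caller's roles may use."""
    role_set = set(roles)
    return [entry for entry in REGISTRY if entry.allowed_roles & role_set]


def resolve_model(model_id: str, roles: Iterable[str]) -> RegistryEntry:
    """Look up one model, refusing access if no role grants it."""
    for entry in models_for_roles(roles):
        if entry.model_id == model_id:
            return entry
    raise PermissionError(f"{model_id!r} is not available to roles {sorted(set(roles))}")
```

For example, `models_for_roles(["developer"])` returns only the `text-general` entry, while `resolve_model("image-gen", ["developer"])` raises `PermissionError`.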

The following diagram illustrates the architecture of this layer.

AWS services can help in building a model abstraction layer (MAL) as follows:

- The generative AI manager creates a registry table using Amazon DynamoDB. This table is populated with information about the FMs either deployed internally in the organization account or accessible via an API from vendors. This table holds the endpoint, metadata, and configuration parameters for the model. It can also record whether a custom AWS Lambda function is needed to invoke the underlying FM with vendor-specific API clients.

- The generative AI manager then determines access for the user, adds limits, adds a policy for what type of generations the user can perform (images, text, multi-modality, and so on), and adds other organization-specific policies, such as responsible AI and content filters, which are stored in a separate policy table in DynamoDB.

- When the user makes a request using the AI Gateway, it’s routed to Amazon Cognito to determine access for the client. A Lambda authorizer helps determine the access from the identity layer, which is managed by the DynamoDB policy table. If the client has access, the relevant credentials, such as the AWS Identity and Access Management (IAM) role or API key for the FM endpoint, are fetched from AWS Secrets Manager. The registry is also searched at this stage to find the relevant endpoint and configuration.

- After all the necessary information related to the request is fetched, such as the endpoint, configuration, access keys, and custom function, it’s handed back to the AI Gateway to be used with the dispatcher Lambda function that calls a specific model endpoint.
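The steps above can be sketched as a single resolution function. This is a simplified, in-memory illustration, not AWS code: the dictionaries `POLICY_TABLE`, `REGISTRY_TABLE`, and `SECRETS` are hypothetical stand-ins for the DynamoDB policy table, the DynamoDB registry table, and AWS Secrets Manager, and all keys and values are invented for the example.

```python
from typing import Any, Dict

# Stand-in for the DynamoDB policy table (per-user access and generation types).
POLICY_TABLE: Dict[str, Dict[str, Any]] = {
    "alice": {"allowed_models": {"text-general"}, "generation_types": {"text"}},
}

# Stand-in for the DynamoDB registry table.
REGISTRY_TABLE: Dict[str, Dict[str, Any]] = {
    "text-general": {
        "endpoint": "https://example.internal/text-general",
        "config": {"max_tokens": 512},
        "generation_type": "text",
        "secret_name": "fm/text-general",
        "custom_function": None,  # name of a vendor-specific invoker, if one is needed
    },
}

# Stand-in for AWS Secrets Manager.
SECRETS: Dict[str, str] = {"fm/text-general": "placeholder-api-key"}


def resolve_request(user_id: str, model_id: str) -> Dict[str, Any]:
    """Authorize the caller, then collect what the dispatcher needs."""
    policy = POLICY_TABLE.get(user_id)
    if policy is None or model_id not in policy["allowed_models"]:
        raise PermissionError(f"{user_id!r} may not use {model_id!r}")

    entry = REGISTRY_TABLE[model_id]
    if entry["generation_type"] not in policy["generation_types"]:
        raise PermissionError(f"{user_id!r} may not request {entry['generation_type']!r}")

    # Bundle endpoint, configuration, credential, and custom function together.
    return {
        "endpoint": entry["endpoint"],
        "config": entry["config"],
        "credential": SECRETS[entry["secret_name"]],
        "custom_function": entry["custom_function"],
    }


context = resolve_request("alice", "text-general")
```

The returned `context` is what would be handed back to the AI Gateway for the dispatcher to use. In a real deployment the three lookups would be network calls with their own error handling, which this sketch omits.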

AI Gateway

The AI Gateway serves as a crucial component that facilitates secure and efficient consumption of FMs within the organization. It operates on top of the model abstraction layer, providing an API-based interface to internal users, including developers, data scientists, and business analysts.

Through this user-friendly interface (programmatic and playground UI-based), internal users can seamlessly access, interact with, and use the organization’s curated models, with relevant models made available based on their identities and responsibilities. An AI Gateway can comprise the following:

- A unified API interface across all FMs – The AI Gateway presents a unified API interface and SDK that abstracts the underlying technical complexities, enabling internal users to interact with the organization’s pool of FMs effortlessly. Users can use the APIs to invoke different models and send in their prompts to get model generations.

- API quota, limits, and usage management – This includes the following:

  - Consumed quota – To enable efficient resource allocation and cost control, the AI Gateway provides users with insights into their consumed quota for each model. This transparency allows users to manage their AI resource usage effectively, ensuring optimal utilization and preventing resource waste.

  - Request for dedicated hosting – Recognizing the importance of resource allocation for critical use cases, the AI Gateway allows users to request dedicated hosting of specific models. Users with high-priority or latency-sensitive applications can use this feature to ensure a consistent and dedicated environment for their model inference needs.

- Access control and model governance – Using the identity layer from the model abstraction layer, the AI Gateway enforces stringent access controls. Each user’s identity and assigned roles determine the models they can access. This granular access control ensures that users are presented with only the models relevant to their domains, maintaining data security and privacy while promoting responsible AI usage.

- Content, privacy, and responsible AI policy enforcement – The AI Gateway preprocesses all inputs to the model and postprocesses all model generations to filter and moderate for toxicity, violence, harmfulness, PII, and any other categories that the model abstraction layer specifies for filtering. Centralizing this function in the AI Gateway ensures enforcement and easy audit.

By integrating the AI Gateway with the model abstraction layer and incorporating features such as identity-based access control, model listing and metadata display, consumed quota monitoring, and dedicated hosting requests, organizations can create a powerful AI consumption platform.
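Consumed quota monitoring can be pictured with a small counter keyed by user and model. The sketch below is an assumption-laden illustration: `QuotaTracker` is a hypothetical class, limits are counted in requests, and the reset at the end of each quota period is left out for brevity.

```python
from collections import defaultdict
from typing import Dict, Tuple


class QuotaTracker:
    """Tracks requests consumed per (user, model) against a per-model limit."""

    def __init__(self, limits: Dict[str, int]) -> None:
        self._limits = limits  # model_id -> allowed requests per period
        self._used: Dict[Tuple[str, str], int] = defaultdict(int)

    def consume(self, user: str, model: str, units: int = 1) -> bool:
        """Record usage and return True, or return False if the quota would be exceeded."""
        key = (user, model)
        if self._used[key] + units > self._limits.get(model, 0):
            return False
        self._used[key] += units
        return True

    def remaining(self, user: str, model: str) -> int:
        """Quota left for this user and model; this is what a user would be shown."""
        return self._limits.get(model, 0) - self._used[(user, model)]


tracker = QuotaTracker({"text-general": 3})
results = [tracker.consume("alice", "text-general") for _ in range(4)]
```

Here the first three calls succeed and the fourth is rejected, and `tracker.remaining("alice", "text-general")` reports `0` while another user still has the full allowance. Models without a configured limit default to a quota of zero, which is a deliberately conservative choice for this example.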

In addition, the AI Gateway provides the standard benefits of API Gateways, such as the following:

- Cost control mechanism – To optimize resource allocation and manage costs effectively, a robust cost control mechanism can be implemented. This mechanism monitors resource usage, model inference costs, and data transfer expenses. It enables organizations to gain insights into generative AI resource expenditure, identify cost-saving opportunities, and make informed decisions on resource allocation.

- Cache – Inference from FMs can become expensive, especially during the testing and development phases of an application. A cache layer can help reduce that cost and even improve speed by maintaining a cache for frequent requests. The cache also offloads some of the inference burden from the endpoint, which makes room for other requests.

- Observability – This plays a crucial role in capturing activities performed on the AI Gateway and the Discovery Playground. Detailed logs record user interactions, model requests, and system responses. These logs provide valuable information for troubleshooting, monitoring user behavior, and reinforcing transparency and accountability.

- Quotas, rate limits, and throttling – The governance aspect of this layer can incorporate the application of quotas, rate limits, and throttling to manage and control AI resource utilization. Quotas define the maximum number of requests a user or team can make within a specific time frame, ensuring fair resource distribution. Rate limits prevent excessive usage of resources by enforcing a maximum request rate. Throttling mitigates the risk of system overload by controlling the frequency of incoming requests, preventing service disruptions.

- Audit trails and usage monitoring – The team assumes responsibility for maintaining detailed audit trails of the entire ecosystem. These logs enable comprehensive usage monitoring, allowing the central team to track user activities, identify potential risks, and maintain transparency in AI consumption.
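As one example from the list above, a cache for frequent requests can be keyed on everything that determines the output: the model, the prompt, and the inference parameters. The following is a minimal sketch with hypothetical names (`InferenceCache`, `get_or_generate`); it has no eviction or expiry, and it assumes that returning a previously generated answer for an identical request is acceptable.

```python
import hashlib
import json
from typing import Any, Callable, Dict


class InferenceCache:
    """In-memory cache keyed by a hash of (model, prompt, parameters)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model_id: str, prompt: str, params: Dict[str, Any]) -> str:
        # sort_keys makes the key independent of parameter ordering.
        payload = json.dumps(
            {"model": model_id, "prompt": prompt, "params": params}, sort_keys=True
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_generate(
        self,
        model_id: str,
        prompt: str,
        params: Dict[str, Any],
        generate: Callable[[], str],
    ) -> str:
        key = self._key(model_id, prompt, params)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: call the (expensive) endpoint once and remember the result.
        self.misses += 1
        result = generate()
        self._store[key] = result
        return result


endpoint_calls = []


def fake_endpoint() -> str:
    # Stand-in for a real inference call; records how often it is invoked.
    endpoint_calls.append(1)
    return "generated text"


cache = InferenceCache()
a = cache.get_or_generate("text-general", "hi", {"temperature": 0, "top_p": 1}, fake_endpoint)
b = cache.get_or_generate("text-general", "hi", {"top_p": 1, "temperature": 0}, fake_endpoint)
```

The second call is served from the cache even though the parameters are passed in a different order, so the stand-in endpoint is invoked only once.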

The following diagram illustrates this architecture.

AWS services can help in building an AI Gateway as follows:

- The user makes the request using Amazon API Gateway, which is routed to the model abstraction layer after the request has been authenticated and authorized.

- The AI Gateway enforces usage limits for each user’s request using usage limit policies returned by the MAL. For easy enforcement, we use the native capability of API Gateway to enforce metering. In addition, we perform standard API Gateway validations on the request using a JSON schema.

- After the usage limits are validated, the endpoint configuration and credentials received from the MAL are used to form the actual inference payload using the native interfaces provided by each of the approved model vendors. The dispatch layer normalizes the differences across vendors’ SDKs and API interfaces to provide a unified interface to the client. Issues such as DNS changes, load balancing, and caching could also be handled by a more sophisticated dispatch service.

- After the response is received from the underlying model endpoints, postprocessing Lambda functions use the policies from the MAL pertaining to content (toxicity, nudity, and so on) as well as compliance (CCPA, GDPR, and so on) to filter or mask generations in whole or in part.

- Throughout the lifecycle of the request, all generations and inference payloads are logged through Amazon CloudWatch Logs, which can be organized via log groups depending on tags as well as policies retrieved from the MAL. For example, logs can be separated per model vendor and geo. This allows for further model improvement and troubleshooting.

- Finally, a retroactive audit is available through AWS CloudTrail.
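The postprocessing step in the flow above can be illustrated with a geo-aware filter chain. This is only a sketch under stated assumptions: the geo keys, the `GEO_POLICIES` mapping, and the single email-masking filter are hypothetical, and a simple regular expression is not a substitute for a real PII detection service.

```python
import re
from typing import Callable, Dict, List

# Deliberately simple email pattern, used only for illustration.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_email(text: str) -> str:
    """Replace anything that looks like an email address with a marker."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


# Filters available to the postprocessor, addressed by name.
FILTERS: Dict[str, Callable[[str], str]] = {"mask_email": mask_email}

# Hypothetical policies as they might be retrieved from the MAL:
# each geo lists the filters to apply to generations served there.
GEO_POLICIES: Dict[str, List[str]] = {
    "geo-a": ["mask_email"],
    "default": [],
}


def postprocess(generation: str, geo: str) -> str:
    """Apply every filter required by the caller's geo, in order."""
    for filter_name in GEO_POLICIES.get(geo, GEO_POLICIES["default"]):
        generation = FILTERS[filter_name](generation)
    return generation


masked = postprocess("Contact jane@example.com today", "geo-a")
unmasked = postprocess("Contact jane@example.com today", "geo-b")
```

With these example policies, the generation served in `geo-a` has the address replaced by `[REDACTED_EMAIL]`, while an unlisted geo falls back to the default policy and is returned unchanged. Keeping the policy as data rather than code is what lets the central team change behavior per locale without redeploying the gateway.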

Discovery Playground

The last component is a Discovery Playground, which presents a user-friendly interface built on top of the model abstraction layer and the AI Gateway, offering a dynamic environment for users to explore, test, and unleash the full potential of available FMs. Beyond providing access to AI capabilities, the playground empowers users to interact with models using a rich UI, provide valuable feedback, and share their discoveries with other users within the organization. It offers the following key features:

- Playground interface – You can effortlessly input prompts and receive model outputs in real time. The UI streamlines the interaction process, making generative AI exploration accessible to users with varying levels of technical expertise.

- Model cards – You can access a comprehensive list of available models along with their corresponding metadata. You can explore detailed information about each model, such as its capabilities, performance metrics, and supported use cases. This feature facilitates informed decision-making, empowering you to select the most suitable model for your specific needs.

- Feedback mechanism – A differentiating aspect of the playground is its feedback mechanism, which allows you to provide insights on model outputs. You can report issues like hallucination (fabricated information), inappropriate language, or any unintended behavior observed during interactions with the models.

- Recommendations for use cases – The Discovery Playground can be designed to facilitate learning and understanding of FMs’ capabilities for different use cases. You can experiment with various prompts and discover which models excel in specific scenarios.

By offering a rich UI, model cards, a feedback mechanism, use case recommendations, and the optional Example Store, the Discovery Playground becomes a powerful platform for generative AI exploration and knowledge sharing within the organization.

Process considerations

While the previous modules of the Generative AI Gateway offer a platform, this layer is more practical, ensuring the responsible and compliant consumption of FMs within the organization. It encompasses additional measures that go beyond the technical aspects, focusing on legal, practical, and regulatory considerations. This layer presents critical responsibilities for the central team to address data security, licenses, organizational regulations, and audit trails, fostering a culture of trust and transparency:

- Data security and privacy – Because FMs can process vast amounts of data, data security and privacy become paramount concerns. The central team is responsible for implementing robust data security measures, including encryption, access controls, and data anonymization. Compliance with data protection regulations, such as GDPR, HIPAA, or other industry-specific standards, is diligently ensured to safeguard sensitive information and user privacy.

- Data monitoring – A comprehensive data monitoring system should be established to track incoming and outgoing information through the AI Gateway and Discovery Playground. This includes monitoring the prompts provided by users and the corresponding model outputs. The data monitoring mechanism enables the organization to observe data patterns, detect anomalies, and ensure that sensitive information remains secure.

- Model licenses and agreements – The central team should take the lead in managing licenses and agreements associated with the usage of models. Vendor-provided models may come with specific usage agreements, usage restrictions, or licensing terms. The team ensures compliance with these agreements and maintains a comprehensive repository of all licenses, ensuring a clear understanding of the rights and limitations pertaining to each model.

- Ethical considerations – As AI systems become increasingly sophisticated, the central team assumes the responsibility of ensuring ethical alignment in AI usage. They assess models for potential biases, harmful outputs, or unethical behavior. Steps are taken to mitigate such issues and foster responsible AI development and deployment within the organization.

- Proactive adaptation – To stay ahead of emerging challenges and ever-changing regulations, the central team takes a proactive approach to governance. They continuously update policies, model standards, and compliance measures to align with the latest industry practices and legal requirements. This ensures the organization’s AI ecosystem remains in compliance and upholds ethical standards.

Conclusion

The Generative AI Gateway enables organizations to use foundation models responsibly and securely. Through the integration of the model abstraction layer, AI Gateway, and Discovery Playground, backed by monitoring, observability, governance, security, compliance, and audit layers, organizations can strike a balance between innovation and compliance. The AI Gateway empowers you with seamless access to curated models, while the Discovery Playground fosters exploration and feedback. Monitoring and governance provide insights for optimized resource allocation and proactive decision-making. With a focus on security, compliance, and ethical AI practices, the Generative AI Gateway opens doors to a future where AI-driven applications thrive responsibly, unlocking new realms of possibilities for organizations.

About the Authors

Talha Chattha is an AI/ML Specialist Solutions Architect at Amazon Web Services, based in Stockholm, serving Nordic enterprises and digital native businesses. Talha holds a deep passion for Generative AI technologies. He works tirelessly to deliver innovative, scalable, and valuable ML solutions in the space of Large Language Models and Foundation Models for his customers. When not shaping the future of AI, he explores the scenic European landscapes and delicious cuisines.


John Hwang is a Generative AI Architect at AWS with a special focus on Large Language Model (LLM) applications, vector databases, and generative AI product strategy. He is passionate about helping companies with AI/ML product development, and the future of LLM agents and co-pilots. Prior to joining AWS, he was a Product Manager at Alexa, where he helped bring conversational AI to mobile devices, as well as a derivatives trader at Morgan Stanley. He holds a B.S. in Computer Science from Stanford University.


Paolo Di Francesco is a Senior Solutions Architect at Amazon Web Services (AWS). He holds a PhD in Telecommunication Engineering and has experience in software engineering. He is passionate about machine learning and is currently focusing on using his experience to help customers reach their goals on AWS, particularly in discussions around MLOps. Outside of work, he enjoys playing soccer and reading.
