Dealing With Noisy Labels in Text Data

Image by Editor

With the rising interest in natural language processing, more and more practitioners are hitting the wall not because they can’t build or fine-tune LLMs, but because their data is messy!

We’ll show simple, yet very effective coding procedures for fixing noisy labels in text data. We’ll deal with 2 common scenarios in real-world text data:

- Having a category that contains mixed examples from a few other categories. I like to call this kind of category a meta category.

- Having 2 or more categories that should be merged into 1 category because the texts belonging to them refer to the same topic.

We’ll use an ITSM (IT Service Management) dataset created for this tutorial (CC0 license). It’s available on Kaggle from the link below:

https://www.kaggle.com/datasets/nikolagreb/small-itsm-dataset

It’s time to start with the import of all the libraries we need and a basic data examination. Brace yourself, code is coming!

import pandas as pd

import numpy as np

import string

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import ComplementNB

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import train_test_split

from sklearn import metrics

df = pd.read_excel("ITSM_data.xlsx")

df.info()

RangeIndex: 118 entries, 0 to 117

Data columns (total 7 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 ID_request 118 non-null int64

1 Text 117 non-null object

2 Category 115 non-null object

3 Solution 115 non-null object

4 Date_request_recieved 118 non-null datetime64[ns]

5 Date_request_solved 118 non-null datetime64[ns]

6 ID_agent 118 non-null int64

dtypes: datetime64[ns](2), int64(2), object(3)

memory usage: 6.6+ KB

Each row represents one entry in the ITSM database. We’ll try to predict the category of the ticket based on the text of the ticket written by a user. Let’s take a closer look at the most important fields for the described business use case.

for text, category in zip(df.Text.sample(3, random_state=2), df.Category.sample(3, random_state=2)):

    print("TEXT:")

    print(text)

    print("CATEGORY:")

    print(category)

    print("-"*100)

TEXT:

I just want to talk to an agent, there are too many issues on my computer to be explained in a single ticket. Please call me when you see this, whoever you are. (talk to agent)

CATEGORY:

Asana

----------------------------------------------------------------------------------------------------

TEXT:

Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.

CATEGORY:

Help Needed

----------------------------------------------------------------------------------------------------

TEXT:

My mail stopped to work after I updated Windows.

CATEGORY:

Outlook

----------------------------------------------------------------------------------------------------

If we take a look at the first two tickets, although one ticket is in German, we can see that the described problems refer to the same software, Asana, but they carry different labels. This is the starting distribution of our categories:

df.Category.value_counts(normalize=True, dropna=False).mul(100).round(1).astype(str) + "%"

Outlook 19.1%

Discord 13.9%

CRM 12.2%

Internet Browser 10.4%

Mail 9.6%

Keyboard 9.6%

Asana 8.7%

Mouse 8.7%

Help Needed 7.8%

Name: Category, dtype: object

The “Help Needed” category looks suspicious, like a category that may contain tickets from multiple other categories. Also, the categories Outlook and Mail sound similar; maybe they should be merged into one category. Before diving deeper into these categories, we’ll get rid of missing values in the columns of interest.

important_columns = ["Text", "Category"]

for cat in important_columns:

    df.drop(df[df[cat].isna()].index, inplace=True)

df.reset_index(inplace=True, drop=True)
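As a side note, the same clean-up can be sketched in one chained call with pandas’ `dropna(subset=...)`. The tiny `toy` frame below is made up for illustration and is not part of the ITSM dataset; this is only a minimal sketch of an alternative, not a replacement for the loop above.

```python
import pandas as pd

# Toy stand-in for the ITSM data (values are invented for illustration).
toy = pd.DataFrame({
    "Text": ["Outlook crashes", None, "Mouse is broken", "Discord is down"],
    "Category": ["Outlook", "Mail", None, "Discord"],
})

important_columns = ["Text", "Category"]

# Drop rows with a missing value in any important column, then renumber the index.
cleaned = toy.dropna(subset=important_columns).reset_index(drop=True)

print(cleaned)
```

Unlike the in-place loop, this version returns a new frame and leaves `toy` untouched, which can make it easier to compare the data before and after cleaning.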

There is no valid substitute for examining data with the naked eye. The fancy function to do so in pandas is .sample(), so we’ll do exactly that once more, now for the suspicious category:

meta = df[df.Category == "Help Needed"]

for text in meta.Text.sample(5, random_state=2):

    print(text)

    print("-"*100)

Discord emojis aren't available to me, I would like to have this option enabled like other team members have.

---------------------------------------------------------------------------

Bitte reparieren Sie mein Hubspot CRM. Seit gestern funktioniert es nicht mehr

---------------------------------------------------------------------------

My headphones aren't working. I would like to order new.

---------------------------------------------------------------------------

Bundled problems with Office since restart:

Messages not sent

Outlook does not connect, mails do not arrive

Error 0x8004deb0 appears when Connection attempt, see attachment

The company account is affected: AB123

Access via Office.com seems to be possible.

---------------------------------------------------------------------------

Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.

---------------------------------------------------------------------------

Obviously, we have tickets talking about Discord, Asana, and CRM. So the category of these tickets should be changed from “Help Needed” to existing, more specific categories. For the first step of the reassignment process, we’ll create a new column, “Keywords”, that records whether the ticket contains a word from the list of categories in the “Text” column.

words_categories = np.unique([word.strip().lower() for word in df.Category]) # list of categories

def keywords(row):

    list_w = []

    for word in row.translate(str.maketrans("", "", string.punctuation)).lower().split():

        if word in words_categories:

            list_w.append(word)

    return list_w

df["Keywords"] = df.Text.apply(keywords)

# since our output is a list, this function will give us a better-looking final output.

def clean_row(row):

    row = str(row)

    row = row.replace("[", "")

    row = row.replace("]", "")

    row = row.replace("'", "")

    row = string.capwords(row)

    return row

df["Keywords"] = df.Keywords.apply(clean_row)

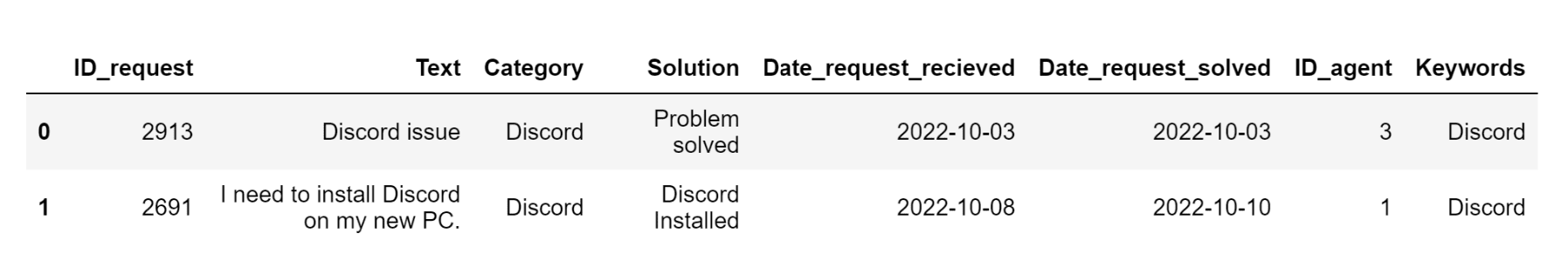

Additionally, notice that utilizing “if phrase in str(words_categories)” as an alternative of “if phrase in words_categories” would catch phrases from classes with greater than 1 phrase (Web Browser in our case), however would additionally require extra information preprocessing. To maintain issues easy and straight to the purpose, we’ll go along with the code for classes made from only one phrase. That is how our dataset seems to be now:

output as picture:
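For readers who do want to handle multi-word categories, here is a minimal sketch of one possible approach: compare whole-word token windows against each category name. The helper `find_category_phrases` and the example sentence are hypothetical and not part of the tutorial code. Whole-word matching is used because the `in` operator on a string is a substring test, so it would also accept fragments of category names.

```python
import string

def find_category_phrases(text, categories):
    """Return the categories whose full name occurs in the text as whole words."""
    table = str.maketrans("", "", string.punctuation)
    tokens = text.translate(table).lower().split()
    found = []
    for category in categories:
        cat_tokens = category.lower().split()
        n = len(cat_tokens)
        # Slide a window of n tokens over the text and compare it to the category name.
        if n and any(tokens[i:i + n] == cat_tokens for i in range(len(tokens) - n + 1)):
            found.append(category)
    return found

categories = ["Internet Browser", "Mail", "CRM"]  # illustrative subset of category names
print(find_category_phrases("My internet browser crashes when I open mail.", categories))
```

With this helper, “email is broken” would not be matched to “Mail”, because “email” is a different token. Whether that behaviour is desirable depends on the data, so treat it as a design choice rather than a rule.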

After extracting the keywords column, we’ll make an assumption about the quality of the tickets. Our hypothesis:

- A ticket with just 1 keyword in the Text field that is the same as the category to which the ticket belongs would be easy to classify.

- A ticket with multiple keywords in the Text field, where at least one of the keywords is the same as the category to which the ticket belongs, would be easy to classify in the majority of cases.

- A ticket that has keywords, but none of them is equal to the name of the category to which the ticket belongs, is probably a noisy label case.

- Other tickets are neutral based on keywords.

cl_list = []

for category, keywords in zip(df.Category, df.Keywords):

    if category.lower() == keywords.lower() and keywords != "":

        cl_list.append("easy_classification")

    elif category.lower() in keywords.lower(): # to deal with multiple keywords in the ticket

        cl_list.append("probably_easy_classification")

    elif category.lower() != keywords.lower() and keywords != "":

        cl_list.append("potential_problem")

    else:

        cl_list.append("neutral")

df["Ease_classification"] = cl_list

df.Ease_classification.value_counts(normalize=True, dropna=False).mul(100).round(1).astype(str) + "%"

neutral 45.6%

easy_classification 37.7%

potential_problem 9.6%

probably_easy_classification 7.0%

Name: Ease_classification, dtype: object

We made our new distribution, and now is the time to examine the tickets classified as a potential problem. In practice, the following step would require much more sampling and looking at larger chunks of data with the naked eye, but the rationale would be the same. You are supposed to find problematic tickets and decide whether you can improve their quality or whether you should drop them from the dataset. When you are facing a large dataset, stay calm, and don’t forget that data examination and data preparation usually take much more time than building ML algorithms!

pp = df[df.Ease_classification == "potential_problem"]

# sample the same number of rows from both columns so that texts and categories stay paired

for text, category in zip(pp.Text.sample(3, random_state=2), pp.Category.sample(3, random_state=2)):

    print("TEXT:")

    print(text)

    print("CATEGORY:")

    print(category)

    print("-"*100)

TEXT:

outlook issue , I did an update Windows and I have no more outlook on my notebook ? Please help !

Outlook

CATEGORY:

Mail

--------------------------------------------------------------------

TEXT:

Please relase blocked attachements from the mail I got from name.surname@company.com. These are data needed for social media marketing campaing.

CATEGORY:

Outlook

--------------------------------------------------------------------

TEXT:

Asana funktionierte nicht mehr, nachdem ich meinen Laptop neu gestartet hatte. Bitte helfen Sie.

CATEGORY:

Help Needed

--------------------------------------------------------------------

We can see that tickets from the Outlook and Mail categories relate to the same problem, so we’ll merge these 2 categories, which should improve the results of our future ML classification algorithm.

mail_categories_to_merge = ["Outlook", "Mail"]

sum_mail_cluster = 0

for x in mail_categories_to_merge:

    sum_mail_cluster += len(df[df["Category"] == x])

print("Number of categories to be merged into new cluster: ", len(mail_categories_to_merge))

print("Expected number of tickets in the new cluster: ", sum_mail_cluster)

def rename_to_mail_cluster(category):

    if category in mail_categories_to_merge:

        category = "Mail_CLUSTER"

    else:

        category = category

    return category

df["Category"] = df["Category"].apply(rename_to_mail_cluster)

df.Category.value_counts()

Number of categories to be merged into new cluster: 2

Expected number of tickets in the new cluster: 33

Mail_CLUSTER 33

Discord 15

CRM 14

Internet Browser 12

Keyboard 11

Asana 10

Mouse 10

Help Needed 9

Name: Category, dtype: int64
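The merge above can also be sketched with a plain mapping and `Series.replace`, which scales to several clusters without writing one function per cluster. The `toy` series and the `merge_map` dictionary below are made up for illustration; only the label names come from the tutorial.

```python
import pandas as pd

# Toy category column (invented values, same label names as in the tutorial).
toy = pd.Series(["Outlook", "Mail", "Discord", "Outlook", "CRM"], name="Category")

# Map every label that should be merged to the name of its new cluster.
merge_map = {"Outlook": "Mail_CLUSTER", "Mail": "Mail_CLUSTER"}

# Count the affected tickets before merging, as a sanity check for the result.
expected = int(toy.isin(list(merge_map)).sum())

merged = toy.replace(merge_map)
counts = merged.value_counts()

print("Expected number of tickets in the new cluster:", expected)
print(counts)
```

Labels that are not keys of `merge_map` pass through unchanged, so the size of the new cluster should equal the `expected` count computed beforehand.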

Last, but not least, we want to relabel some tickets from the meta category “Help Needed” to the proper category.

# True for tickets whose text contains at least one category name

has_keyword = df["Text"].str.lower().str.replace(r"[^\w\s]", "", regex=True).str.split().apply(lambda words: bool(set(words).intersection(words_categories)))

df.loc[(df["Category"] == "Help Needed") & has_keyword, "Category"] = "Change"

def cat_name_change(cat, keywords):

    if cat == "Change":

        cat = keywords

    else:

        cat = cat

    return cat

df["Category"] = df.apply(lambda x: cat_name_change(x.Category, x.Keywords), axis=1)

df["Category"] = df["Category"].replace({"Crm":"CRM"})

df.Category.value_counts(dropna=False)

Mail_CLUSTER 33

Discord 16

CRM 15

Internet Browser 12

Asana 11

Keyboard 11

Mouse 10

Help Needed 6

Name: Category, dtype: int64
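One thing to keep in mind with this kind of relabeling: a ticket that mentions several category names would receive a combined label built from all of its keywords. A minimal sketch of a more conservative variant, which relabels only tickets with exactly one keyword, could look like this. The `toy` frame is made up, and the assumption that multiple keywords are separated by a comma follows from the “Keywords” formatting used earlier.

```python
import pandas as pd

# Toy frame mimicking the "Category" and "Keywords" columns (invented values).
toy = pd.DataFrame({
    "Category": ["Help Needed", "Help Needed", "Help Needed", "Discord"],
    "Keywords": ["Asana", "Asana, Discord", "", "Discord"],
})

is_meta = toy["Category"] == "Help Needed"

# Exactly one keyword: the field is non-empty and contains no comma separator.
single_keyword = (toy["Keywords"] != "") & ~toy["Keywords"].str.contains(",", regex=False)

# Series.mask replaces values where the condition is True and keeps the rest.
toy["Category"] = toy["Category"].mask(is_meta & single_keyword, toy["Keywords"])

print(toy)
```

Tickets with no keyword or with several keywords stay in “Help Needed” and can then be reviewed by hand or dropped.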

We did our data relabeling and cleaning, but we shouldn’t call ourselves data scientists if we don’t do at least one scientific experiment and test the impact of our work on the final classification. We’ll do so by implementing the Complement Naive Bayes classifier in sklearn. Feel free to try other, more complex algorithms. Also, be aware that further data cleaning could be done; for example, we could also drop all tickets left in the “Help Needed” category.

model = make_pipeline(TfidfVectorizer(), ComplementNB())

# old df

df_o = pd.read_excel("ITSM_data.xlsx")

important_categories = ["Text", "Category"]

for cat in important_categories:

    df_o.drop(df_o[df_o[cat].isna()].index, inplace=True)

df_o.name = "dataset just without missing"

df.name = "dataset after deeper cleaning"

for dataframe in [df_o, df]:

    # Split dataset into training set and test set

    X_train, X_test, y_train, y_test = train_test_split(dataframe.Text, dataframe.Category, test_size=0.2, random_state=1)

    # Training the model with train data

    model.fit(X_train, y_train)

    # Predict the response for test dataset

    y_pred = model.predict(X_test)

    print(f"Accuracy of Complement Naive Bayes classifier model on {dataframe.name} is: {round(metrics.accuracy_score(y_test, y_pred),2)}")

Accuracy of Complement Naive Bayes classifier model on dataset just without missing is: 0.48

Accuracy of Complement Naive Bayes classifier model on dataset after deeper cleaning is: 0.65

Pretty impressive, right? The dataset we used is small (on purpose, so you can easily see what happens in each step), so different random seeds might produce different results, but in the vast majority of cases, the model will perform significantly better on the dataset after cleaning compared to the original dataset. We did a good job!
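Since a single train/test split on a small dataset can be lucky or unlucky, one way to check how much the result depends on the seed is to repeat the split several times and look at the mean and spread of the accuracy. The sketch below does this on a handful of made-up tickets; `accuracy_over_seeds` is a hypothetical helper, and the numbers it prints say nothing about the ITSM dataset itself.

```python
import numpy as np
from sklearn import metrics
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import ComplementNB
from sklearn.pipeline import make_pipeline

def accuracy_over_seeds(texts, labels, seeds=range(10), test_size=0.2):
    """Train and evaluate once per seed; return mean and standard deviation of accuracy."""
    scores = []
    for seed in seeds:
        X_train, X_test, y_train, y_test = train_test_split(
            texts, labels, test_size=test_size, random_state=seed
        )
        # A fresh pipeline per seed, so no state leaks between runs.
        model = make_pipeline(TfidfVectorizer(), ComplementNB())
        model.fit(X_train, y_train)
        scores.append(metrics.accuracy_score(y_test, model.predict(X_test)))
    return float(np.mean(scores)), float(np.std(scores))

# Invented toy tickets: 4 categories with 5 texts each.
texts = [
    "Outlook does not open", "Outlook crashes on start", "Cannot send mail in Outlook",
    "Outlook calendar is empty", "Outlook keeps asking for my password",
    "Discord voice chat is broken", "Discord emojis are missing", "Cannot join Discord server",
    "Discord notifications do not work", "Discord screen share fails",
    "Mouse cursor is frozen", "Mouse wheel does not scroll", "Need a new mouse",
    "Wireless mouse keeps disconnecting", "Mouse buttons are stuck",
    "Keyboard keys are stuck", "Keyboard does not type", "Need a new keyboard",
    "Keyboard backlight is off", "Wireless keyboard keeps disconnecting",
]
labels = ["Outlook"] * 5 + ["Discord"] * 5 + ["Mouse"] * 5 + ["Keyboard"] * 5

mean_acc, std_acc = accuracy_over_seeds(texts, labels)
print(f"Mean accuracy over 10 seeds: {mean_acc:.2f} (std: {std_acc:.2f})")
```

Running the same helper on the original and the cleaned dataset would give two mean accuracies to compare, which is a sturdier basis for the conclusion than a single seed.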

Nikola Greb has been coding for more than four years, and for the past two years he has specialized in NLP. Before turning to data science, he was successful in sales, HR, writing and chess.