Improve your Amazon Redshift cloud information warehouse with simpler, less complicated, and quicker machine studying utilizing Amazon SageMaker Canvas

Machine studying (ML) helps organizations to extend income, drive enterprise development, and scale back prices by optimizing core enterprise features akin to provide and demand forecasting, buyer churn prediction, credit score threat scoring, pricing, predicting late shipments, and lots of others.

Typical ML growth cycles take weeks to many months and requires sparse information science understanding and ML growth abilities. Enterprise analysts’ concepts to make use of ML fashions typically sit in extended backlogs due to information engineering and information science crew’s bandwidth and information preparation actions.

On this publish, we dive right into a enterprise use case for a banking establishment. We are going to present you the way a monetary or enterprise analyst at a financial institution can simply predict if a buyer’s mortgage will likely be absolutely paid, charged off, or present utilizing a machine studying mannequin that’s greatest for the enterprise drawback at hand. The analyst can simply pull within the information they want, use pure language to scrub up and fill any lacking information, and at last construct and deploy a machine studying mannequin that may precisely predict the mortgage standing as an output, all without having to change into a machine studying knowledgeable to take action. The analyst can even be capable to shortly create a enterprise intelligence (BI) dashboard utilizing the outcomes from the ML mannequin inside minutes of receiving the predictions. Let’s be taught in regards to the providers we are going to use to make this occur.

Amazon SageMaker Canvas is a web-based visible interface for constructing, testing, and deploying machine studying workflows. It permits information scientists and machine studying engineers to work together with their information and fashions and to visualise and share their work with others with only a few clicks.

SageMaker Canvas has additionally built-in with Data Wrangler, which helps with creating information flows and getting ready and analyzing your information. Constructed into Information Wrangler, is the Chat for data prep possibility, which lets you use pure language to discover, visualize, and rework your information in a conversational interface.

Amazon Redshift is a quick, absolutely managed, petabyte-scale information warehouse service that makes it cost-effective to effectively analyze all of your information utilizing your current enterprise intelligence instruments.

Amazon QuickSight powers data-driven organizations with unified (BI) at hyperscale. With QuickSight, all customers can meet various analytic wants from the identical supply of fact by fashionable interactive dashboards, paginated reviews, embedded analytics, and pure language queries.

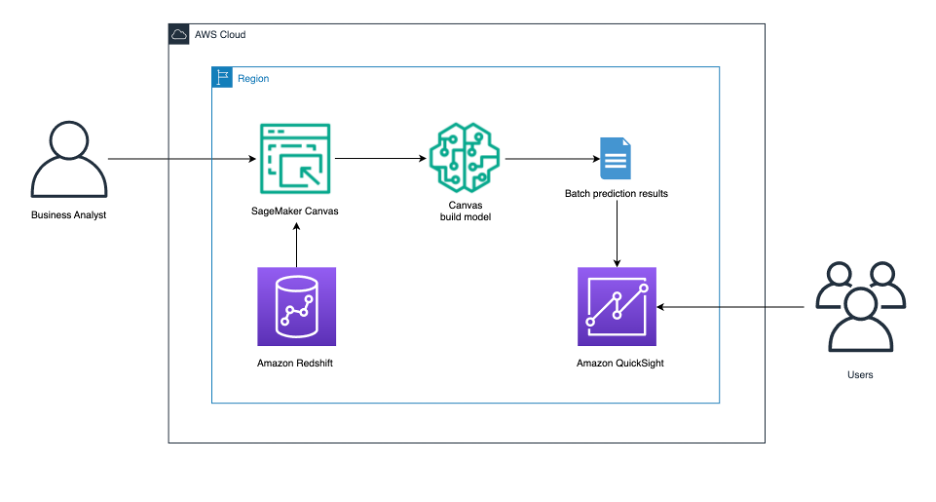

Resolution overview

The answer structure that follows illustrates:

- A enterprise analyst signing in to SageMaker Canvas.

- The enterprise analyst connects to the Amazon Redshift information warehouse and pulls the specified information into SageMaker Canvas to make use of.

- We inform SageMaker Canvas to construct a predictive evaluation ML mannequin.

- After the mannequin has been constructed, get batch prediction outcomes.

- Ship the outcomes to QuickSight for customers to additional analyze.

Stipulations

Earlier than you start, be sure you have the next conditions in place:

- An AWS account and position with the AWS Identity and Access Management (IAM) privileges to deploy the next sources:

- IAM roles.

- A provisioned or serverless Amazon Redshift information warehouse. For this publish we’ll use a provisioned Amazon Redshift cluster.

- A SageMaker area.

- A QuickSight account (optionally available).

- Primary information of a SQL question editor.

Arrange the Amazon Redshift cluster

We’ve created a CloudFormation template to arrange the Amazon Redshift cluster.

- Deploy the Cloudformation template to your account.

- Enter a stack title, then select Subsequent twice and preserve the remainder of parameters as default.

- Within the assessment web page, scroll right down to the Capabilities part, and choose I acknowledge that AWS CloudFormation would possibly create IAM sources.

- Select Create stack.

The stack will run for 10–quarter-hour. After it’s completed, you may view the outputs of the father or mother and nested stacks as proven within the following figures:

Father or mother stack

Nested stack

Pattern information

You’ll use a publicly obtainable dataset that AWS hosts and maintains in our personal S3 bucket as a workshop for financial institution clients and their loans that features buyer demographic information and mortgage phrases.

Implementation steps

Load information to the Amazon Redshift cluster

- Hook up with your Amazon Redshift cluster utilizing Question Editor v2. To navigate to the Amazon Redshift Question v2 editor, please comply with the steps Opening query editor v2.

- Create a desk in your Amazon Redshift cluster utilizing the next SQL command:

- Load information into the

loan_custdesk utilizing the nextCOPYcommand:

- Question the desk to see what the info seems like:

Arrange chat for information

- To make use of the chat for information possibility in Sagemaker Canvas, you could allow it in Amazon Bedrock.

- Open the AWS Administration Console, go to Amazon Bedrock, and select Mannequin entry within the navigation pane.

- Select Allow particular fashions, underneath Anthropic, choose Claude and choose Subsequent.

- Evaluation the choice and click on Submit.

- Open the AWS Administration Console, go to Amazon Bedrock, and select Mannequin entry within the navigation pane.

- Navigate to Amazon SageMaker service from the AWS administration console, choose Canvas and click on on Open Canvas.

- Select Datasets from the navigation pane, then select the Import information dropdown, and choose Tabular.

- For Dataset title, enter

redshift_loandataand select Create.

- On the following web page, select Information Supply and choose Redshift because the supply. Below Redshift, choose + Add Connection.

- Enter the next particulars to ascertain your Amazon Redshift connection :

- Cluster Identifier: Copy the

ProducerClusterNamefrom the CloudFormation nested stack outputs. - You possibly can reference the previous display screen shot for Nested Stack, the place you will see the cluster identifier output.

- Database title: Enter

dev. - Database consumer: Enter

awsuser. - Unload IAM Position ARN: Copy the

RedshiftDataSharingRoleNamefrom the nested stack outputs. - Connection Identify: Enter

MyRedshiftCluster. - Select Add connection.

- Cluster Identifier: Copy the

- After the connection is created, develop the

publicschema, drag theloan_custdesk into the editor, and select Create dataset.

- Select the

redshift_loandatadataset and select Create an information movement.

- Enter

redshift_flowfor the title and select Create.

- After the movement is created, select Chat for information prep.

- Within the textual content field, enter

summarize my informationand select the run arrow.

- The output ought to look one thing like the next:

- Now you need to use pure language to prep the dataset. Enter

Drop ssn and filter for ages over 17and click on on the run arrow. You will notice it was capable of deal with each steps. You may also view the PySpark code that it ran. So as to add these steps as dataset transforms, select Add to steps.

- Rename the step to

drop ssn and filter age > 17, select Replace, after which select Create mannequin.

- Export information and create mannequin: Enter

loan_data_forecast_datasetfor the Dateset title, for Mannequin title, enterloan_data_forecast, for Drawback kind, selectPredictive evaluation, for Goal column, chooseloan_status, and click on Export and create mannequin.

- Confirm the right Goal column and Mannequin kind is chosen and click on on Fast construct.

- Now the mannequin is being created. It normally takes 14–20 minutes relying on the dimensions of your information set.

- After the mannequin has accomplished coaching, you may be routed to the Analyze tab. There, you may see the typical prediction accuracy and the column influence on prediction consequence. Notice that your numbers would possibly differ from those you see within the following determine, due to the stochastic nature of the ML course of.

Use the mannequin to make predictions

- Now let’s use the mannequin to make predictions for the long run standing of loans. Select Predict.

- Below Select the prediction kind, choose Batch prediction, then choose Guide.

- Then choose loan_data_forecast_dataset from the dataset checklist, and click on Generate predictions.

- You’ll see the next after the batch prediction is full. Click on on the breadcrumb menu subsequent to the Prepared standing and click on on Preview to view the outcomes.

- Now you can view the predictions and obtain them as CSV.

- You may also generate single predictions for one row of information at a time. Below Select the prediction kind, choose Single Prediction after which change the values for any of the enter fields that you simply’d like, and select Replace.

Analyze the predictions

We are going to now present you easy methods to use Quicksight to visualise the predictions information from SageMaker canvas to additional acquire insights out of your information. SageMaker Canvas has direct integration with QuickSight, which is a cloud-powered enterprise analytics service that helps staff inside a corporation to construct visualizations, carry out ad-hoc evaluation, and shortly get enterprise insights from their information, anytime, on any machine.

- With the preview web page up, select Ship to Amazon QuickSight.

- Enter a QuickSight consumer title you need to share the outcomes to.

- Select Ship and it’s best to see affirmation saying the outcomes have been despatched efficiently.

- Now, you may create a QuickSight dashboard for predictions.

- Go to the QuickSight console by coming into QuickSight in your console providers search bar and select QuickSight.

- Below Datasets, choose the SageMaker Canvas dataset that was simply created.

- Select Edit Dataset.

- Below the State area, change the info kind to State.

- Select Create with Interactive sheet chosen.

- Below visible sorts, select the Stuffed map

- Choose the State and Likelihood

- Below Subject wells, select Likelihood and alter the Mixture to Common and Present as to %.

- Select Filter and add a filter for loan_status to incorporate absolutely paid loans solely. Select Apply.

- On the high proper within the blue banner, select Share and Publish Dashboard.

- We use the title Common likelihood for absolutely paid mortgage by state, however be happy to make use of your individual.

- Select Publish dashboard and also you’re performed. You’d now be capable to share this dashboard together with your predictions to different analysts and shoppers of this information.

- Go to the QuickSight console by coming into QuickSight in your console providers search bar and select QuickSight.

Clear up

Use the next steps to keep away from any additional value to your account:

- Sign out of SageMaker Canvas

- Within the AWS console, delete the CloudFormation stack you launched earlier within the publish.

Conclusion

We consider integrating your cloud information warehouse (Amazon Redshift) with SageMaker Canvas opens the door to producing many extra strong ML options for your online business at quicker and without having to maneuver information and with no ML expertise.

You now have enterprise analysts producing useful enterprise insights, whereas letting information scientists and ML engineers assist refine, tune, and lengthen fashions as wanted. SageMaker Canvas integration with Amazon Redshift supplies a unified surroundings for constructing and deploying machine studying fashions, permitting you to give attention to creating worth together with your information fairly than specializing in the technical particulars of constructing information pipelines or ML algorithms.

Extra studying:

- SageMaker Canvas Workshop

- re:Invent 2022 – SageMaker Canvas

- Hands-On Course for Business Analysts – Practical Decision Making using No-Code ML on AWS

Concerning the Authors

Suresh Patnam is Principal Gross sales Specialist AI/ML and Generative AI at AWS. He’s captivated with serving to companies of all sizes rework into fast-moving digital organizations specializing in information, AI/ML, and generative AI.

Suresh Patnam is Principal Gross sales Specialist AI/ML and Generative AI at AWS. He’s captivated with serving to companies of all sizes rework into fast-moving digital organizations specializing in information, AI/ML, and generative AI.

Sohaib Katariwala is a Sr. Specialist Options Architect at AWS targeted on Amazon OpenSearch Service. His pursuits are in all issues information and analytics. Extra particularly he loves to assist clients use AI of their information technique to unravel modern-day challenges.

Sohaib Katariwala is a Sr. Specialist Options Architect at AWS targeted on Amazon OpenSearch Service. His pursuits are in all issues information and analytics. Extra particularly he loves to assist clients use AI of their information technique to unravel modern-day challenges.

Michael Hamilton is an Analytics & AI Specialist Options Architect at AWS. He enjoys all issues information associated and serving to clients answer for his or her advanced use instances.

Michael Hamilton is an Analytics & AI Specialist Options Architect at AWS. He enjoys all issues information associated and serving to clients answer for his or her advanced use instances.

Nabil Ezzarhouni is an AI/ML and Generative AI Options Architect at AWS. He’s based mostly in Austin, TX and captivated with Cloud, AI/ML applied sciences, and Product Administration. When he isn’t working, he spends time along with his household, in search of the very best taco in Texas. As a result of…… why not?

Nabil Ezzarhouni is an AI/ML and Generative AI Options Architect at AWS. He’s based mostly in Austin, TX and captivated with Cloud, AI/ML applied sciences, and Product Administration. When he isn’t working, he spends time along with his household, in search of the very best taco in Texas. As a result of…… why not?