Meta AI Releases Meta Spirit LM: An Open Source Multimodal Language Model Mixing Text and Speech

One of the main challenges in developing advanced text-to-speech (TTS) systems is the lack of expressivity when transcribing and generating speech. Traditionally, pipelines built around large language models (LLMs) convert speech to text using automatic speech recognition (ASR), process that text with an LLM, and then convert the output back to speech via TTS. However, this approach often loses expressive quality, because nuances such as tone, emotion, and pitch are stripped away during the ASR step. As a result, the synthesized speech tends to sound monotonic or unnatural and cannot adequately convey emotions like excitement, anger, or surprise.
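To make the failure mode concrete, here is a toy Python sketch of the ASR → LLM → TTS cascade described above. The `Utterance` type and the `asr`, `llm`, and `tts` functions are hypothetical stand-ins, not real models; the only point is that once speech is reduced to a transcript, prosodic cues such as pitch and emotion have no channel through which to reach the output.

```python
from dataclasses import dataclass, field


@dataclass
class Utterance:
    """Toy stand-in for a spoken utterance: the words plus prosodic cues."""
    text: str
    prosody: dict = field(default_factory=dict)  # e.g. {"pitch": "rising", "emotion": "surprise"}


def asr(utt: Utterance) -> str:
    # A transcript keeps only the words; pitch and emotion are dropped here.
    return utt.text


def llm(prompt: str) -> str:
    # Hypothetical text-only LLM: it can only ever see the transcript.
    return f"Reply to: {prompt}"


def tts(text: str) -> Utterance:
    # With no prosody input, this toy TTS falls back to a neutral default.
    return Utterance(text=text, prosody={"pitch": "flat", "emotion": "neutral"})


def cascade(utt: Utterance) -> Utterance:
    return tts(llm(asr(utt)))


if __name__ == "__main__":
    spoken = Utterance("You won the lottery?", {"pitch": "rising", "emotion": "surprise"})
    out = cascade(spoken)
    print(out.text)     # Reply to: You won the lottery?
    print(out.prosody)  # {'pitch': 'flat', 'emotion': 'neutral'}
```

Whatever the input's emotion, the output prosody is the same, because nothing after `asr` ever receives it.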

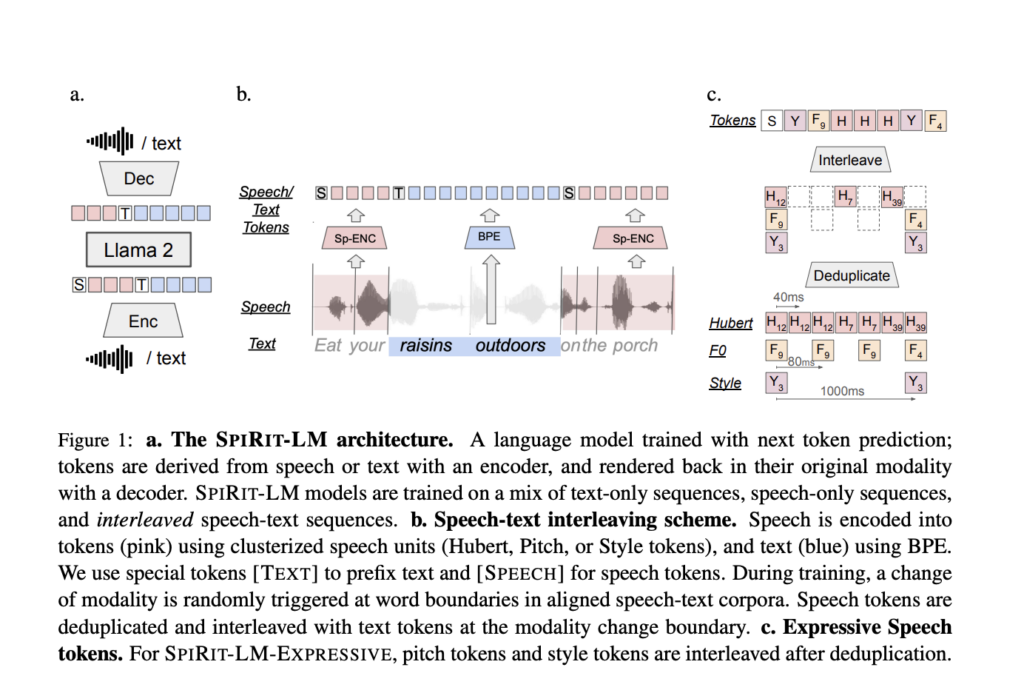

Meta AI recently released Meta Spirit LM, an open-source multimodal language model that can freely mix text and speech. It addresses the limitations of existing TTS pipelines by integrating text and speech at the word level, which lets the model move between modalities more seamlessly. The model was trained on both speech and text datasets using a word-level interleaving method, capturing the expressive characteristics of spoken language while retaining the strong semantic capabilities of text-based models.

Meta Spirit LM comes in two versions: Spirit LM Base and Spirit LM Expressive. Spirit LM Base uses phonetic tokens to encode speech, allowing for an efficient representation of words. Spirit LM Expressive goes a step further by adding pitch and style tokens that capture details of tone, such as excitement or anger, so it can generate expressive speech that reflects those emotions. This makes Meta Spirit LM a powerful tool for combining text and speech to produce coherent, natural-sounding speech.
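One way to picture how an Expressive-style model could carry pitch and style alongside phonetic content is to merge the three token streams into a single sequence ordered by time. The sketch below does exactly that; it is an illustration under stated assumptions, not Spirit LM's actual tokenizer. The token spellings (`[Hu12]`, `[Pi5]`, `[St2]`), the timestamps, and the choice to collapse consecutive repeats in every stream are all hypothetical. Style tokens are simply given sparser timestamps here than pitch or phonetic tokens.

```python
import heapq
from typing import Iterable, List, Tuple

# A token is (timestamp_in_seconds, token_string); the token spellings are hypothetical.
Token = Tuple[float, str]


def dedup(tokens: Iterable[Token]) -> List[Token]:
    """Collapse consecutive repeats of the same token, keeping the earliest timestamp."""
    out: List[Token] = []
    for ts, tok in tokens:
        if not out or out[-1][1] != tok:
            out.append((ts, tok))
    return out


def expressive_sequence(phonetic: List[Token], pitch: List[Token], style: List[Token]) -> List[str]:
    """Merge three time-ordered streams into one sequence sorted by timestamp.

    heapq.merge expects each input to be sorted already; tokens that share a
    timestamp come out in argument order (phonetic, then pitch, then style).
    """
    merged = heapq.merge(dedup(phonetic), dedup(pitch), dedup(style), key=lambda t: t[0])
    return [tok for _, tok in merged]


if __name__ == "__main__":
    phonetic = [(0.00, "[Hu12]"), (0.04, "[Hu12]"), (0.08, "[Hu7]"), (0.12, "[Hu3]")]
    pitch = [(0.00, "[Pi5]"), (0.08, "[Pi9]")]
    style = [(0.00, "[St2]")]
    print(expressive_sequence(phonetic, pitch, style))
    # ['[Hu12]', '[Pi5]', '[St2]', '[Hu7]', '[Pi9]', '[Hu3]']
```

A Base-style sequence would correspond to passing empty pitch and style lists, leaving only the phonetic tokens.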

Meta Spirit LM uses a word-level interleaving method to train on a mix of text and speech datasets. The model’s architecture is designed to transition freely between text and speech by encoding both modalities into a shared set of tokens. Spirit LM Base uses phonetic tokens derived from speech representations, while Spirit LM Expressive adds pitch and style tokens that layer in expressive information such as tone and emotional nuance.
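The interleaving idea can be sketched as follows, assuming a corpus in which each written word is already aligned with the speech tokens that realize it. A training sequence starts in a random modality and may switch only at word boundaries, with a marker token announcing each switch. The `AlignedWord` type, the `[TEXT]`/`[SPEECH]` marker spellings, and the per-word `switch_prob` are assumptions made for illustration, not Meta's released training code.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class AlignedWord:
    text: str                # the word as written
    speech_units: List[str]  # speech tokens aligned to this word (hypothetical format)


def interleave(words: List[AlignedWord], switch_prob: float, rng: random.Random) -> List[str]:
    """Build one training sequence that changes modality only at word boundaries."""
    if not 0.0 <= switch_prob <= 1.0:
        raise ValueError("switch_prob must be in [0, 1]")
    modality = rng.choice(["TEXT", "SPEECH"])
    seq: List[str] = [f"[{modality}]"]
    for i, word in enumerate(words):
        # Possibly flip modality before this word (never in the middle of a word).
        if i > 0 and rng.random() < switch_prob:
            modality = "SPEECH" if modality == "TEXT" else "TEXT"
            seq.append(f"[{modality}]")
        if modality == "TEXT":
            seq.append(word.text)
        else:
            seq.extend(word.speech_units)
    return seq


if __name__ == "__main__":
    words = [
        AlignedWord("the", ["[Hu1]", "[Hu2]"]),
        AlignedWord("cat", ["[Hu3]"]),
        AlignedWord("sat", ["[Hu4]", "[Hu5]"]),
    ]
    print(interleave(words, switch_prob=0.5, rng=random.Random(0)))
```

Because every switch happens between words, the model sees text and speech for the same content in one context, which is what lets it continue a sequence in either modality.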

This architecture allows Meta Spirit LM to generate more natural and contextually rich speech. The model is capable of few-shot learning for tasks across modalities, such as automatic speech recognition (ASR), text-to-speech (TTS), and speech classification. This versatility sets Meta Spirit LM apart from traditional multimodal AI models, which typically handle each modality in isolation. Because it learns representations that span text and speech, the model can also support more complex applications, including expressive storytelling, emotion-driven virtual assistants, and richer interactive dialogue systems.
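Few-shot use in a model like this amounts to prompting with a handful of source→target demonstrations and leaving the last target open for the model to complete. The helper below only builds such a prompt string. The bracketed modality markers, the newline separator, and the `[Hu…]` unit spellings are hypothetical. This is not the spiritlm package's API, and no model is called.

```python
from typing import List, Tuple


def few_shot_prompt(pairs: List[Tuple[str, str]], query: str, src: str, tgt: str) -> str:
    """Build a few-shot prompt: src->tgt demonstrations, then an open-ended query.

    For ASR use src="SPEECH", tgt="TEXT"; for TTS swap them. The bracketed
    modality markers and the newline separator are illustrative assumptions.
    """
    lines = [f"[{src}]{source}[{tgt}]{target}" for source, target in pairs]
    lines.append(f"[{src}]{query}[{tgt}]")  # the model would continue after this marker
    return "\n".join(lines)


if __name__ == "__main__":
    demos = [("[Hu1][Hu2]", "hello"), ("[Hu8][Hu4]", "good morning")]
    print(few_shot_prompt(demos, "[Hu3][Hu9]", src="SPEECH", tgt="TEXT"))
    # [SPEECH][Hu1][Hu2][TEXT]hello
    # [SPEECH][Hu8][Hu4][TEXT]good morning
    # [SPEECH][Hu3][Hu9][TEXT]
```

The same helper covers TTS by swapping `src` and `tgt`. A classification task could follow the same pattern, with a label in place of the target.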

The importance of Meta Spirit LM lies in its ability to move freely between speech and text, which significantly improves the multimodal AI experience. The Expressive version (Spirit LM Expressive) goes beyond standard speech models by preserving sentiment and tone across modalities. Evaluation results on the Speech-Text Sentiment Preservation (STSP) benchmark indicate that Spirit LM Expressive effectively retains emotional intent, producing more natural and emotive outputs than cascades that pair a standard LLM with ASR and TTS.
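A benchmark like STSP asks whether a continuation keeps the sentiment of its prompt, including when prompt and continuation are in different modalities. The sketch below shows only the scoring step, in simplified form: given each prompt's sentiment label and the model's continuation, it reports the fraction whose classified sentiment matches. The keyword classifier used in the example is a toy placeholder. The benchmark's real classifiers, data, and protocol are defined by its authors and are not reproduced here.

```python
from typing import Callable, List, Tuple

Sentiment = str  # e.g. "positive", "negative", "neutral"


def preservation_accuracy(
    pairs: List[Tuple[Sentiment, str]],
    classify: Callable[[str], Sentiment],
) -> float:
    """Fraction of continuations whose predicted sentiment matches the prompt's label."""
    if not pairs:
        raise ValueError("need at least one (label, continuation) pair")
    hits = sum(1 for label, continuation in pairs if classify(continuation) == label)
    return hits / len(pairs)


def toy_classifier(text: str) -> Sentiment:
    # Placeholder keyword rule; a real evaluation would use trained text/speech classifiers.
    if "great" in text:
        return "positive"
    if "awful" in text:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    results = [
        ("positive", "what a great day"),
        ("negative", "this is awful"),
        ("positive", "this is awful"),  # sentiment not preserved
    ]
    print(preservation_accuracy(results, toy_classifier))  # 0.6666666666666666
```

For a speech continuation, `classify` would be a speech sentiment classifier rather than a text one. The scoring logic itself stays the same.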

Another key aspect of Meta Spirit LM’s contribution is its few-shot learning capability across modalities. The model has demonstrated the ability to handle cross-modal tasks, such as converting text to expressive speech, with competitive accuracy, showing a generalized understanding that spans modalities. This makes Meta Spirit LM a significant step forward for conversational agents, accessible communication tools for people with disabilities, and educational technologies that require natural, expressive dialogue. The open-source release also invites the broader research community to explore and improve its multimodal capabilities.

Meta Spirit LM represents a groundbreaking step toward integrating speech and text in AI systems without sacrificing expressivity. By training on interleaved speech and text data, Spirit LM Base and Spirit LM Expressive demonstrate a powerful combination of semantic understanding and expressive speech generation. Whether it powers emotive virtual assistants or improves conversational AI, Meta Spirit LM’s open-source approach opens the door to more innovative and expressive uses of multimodal AI. Meta AI’s work on this model is expected to inspire further research and development at the intersection of text and speech, ultimately leading to more natural and capable AI communication systems.

Check out the GitHub and Details. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.