No Free Lunch in LLM Watermarking: Trade-offs in Watermarking Design Choices – Machine Learning Blog | ML@CMU

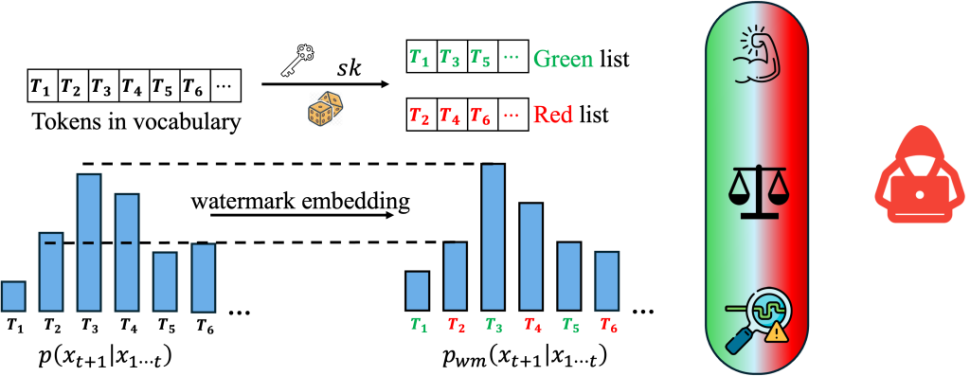

Advances in generative models have made it possible for AI-generated text, code, and images to mirror human-generated content in many applications. Watermarking, a technique that embeds information in the output of a model to verify its source, aims to mitigate the misuse of such AI-generated content. Current state-of-the-art watermarking schemes embed watermarks by slightly perturbing the probabilities of the LLM’s output tokens, which can be detected via statistical testing during verification.

Unfortunately, our work shows that common design choices in LLM watermarking schemes make the resulting systems surprisingly susceptible to watermark removal or spoofing attacks, leading to fundamental trade-offs in robustness, utility, and usability. To navigate these trade-offs, we rigorously study a set of simple yet effective attacks on common watermarking systems and propose guidelines and defenses for LLM watermarking in practice.

| Prompt | Alan Turing was born in … |
|---|---|
| Unwatermarked (Z-Score: 0.16 ↓, PPL: 3.19) | Alan Turing was born in 1912 and died in 1954. He was an English mathematician, logician, cryptanalyst, and computer scientist. In 1938, Turing joined the Government Code and Cypher School (GC&CS), where he contributed to the design of the bombe, a machine that was used to decipher the Enigma-enciphered messages… |
| Watermarked (Z-Score: 5.98 ↑, PPL: 4.46) | Alan Turing was born in 1912 and died in 1954, at the age of 41. He was the brilliant British scientist and mathematician who is largely credited with being the father of modern computer science. He is known for his contributions to mathematical biology and chemistry. He was also one of the pioneers of computer science… |
| (a) Piggyback spoofing attack, exploiting robustness (Z-Score: 5.98 ↑) | Alan Turing was born in 1950 and died in 1994, at the age of 43. He was the brilliant American scientist and mathematician who is largely credited with being the father of modern computer science. He is known for his contributions to mathematical biology and musicology. He was also one of the pioneers of computer science… |
| (b) Watermark-removal attack, exploiting multiple keys (Z-Score: 2.40 ↓, PPL: 4.05) | Alan Turing was born in 1912 and died in 1954. He was a mathematician, logician, cryptologist and theoretical computer scientist. He is famous for his work on code-breaking and artificial intelligence, and his contribution to the Allied victory in World War II. Turing was born in London. He showed an interest in mathematics… |
| (c) Watermark-removal attack, exploiting public detection API (Z-Score: 1.47 ↓, PPL: 4.57) | Alan Turing was born in 1912 and died in 1954. He was an English mathematician, computer scientist, cryptanalyst and philosopher. Turing was a leading mathematician and cryptanalyst. He was one of the key players in cracking the German Enigma Code during World War II. He also came up with the Turing Machine… |

What is LLM Watermarking?

Similar to image watermarks, LLM watermarking embeds invisible secret patterns into the text. Here, we briefly introduce LLMs and LLM watermarks. We use \(\textbf{x}\) to denote a sequence of tokens, \(x_i \in \mathcal{V}\) represents the \(i\)-th token in the sequence, and \(\mathcal{V}\) is the vocabulary. \(M_{\text{orig}}\) denotes the original model without a watermark, \(M_{\text{wm}}\) is the watermarked model, and \(sk \in \mathcal{S}\) is the watermark secret key sampled from \(\mathcal{S}\).

Language Model. State-of-the-art (SOTA) LLMs are auto-regressive models, which predict the next token based on the prior tokens. We define language models more formally below:

Definition 1 (Language Model). A language model (LM) without a watermark is a mapping:

$$ M_{\text{orig}}: \mathcal{V}^* \rightarrow \mathcal{V}, $$

where the input is a sequence \(\textbf{x}\) of \(t\) tokens. \(M_{\text{orig}}(\textbf{x})\) first returns the probability distribution for the next token, then \(x_{t+1}\) is sampled from this distribution.

Watermarks for LLMs. We focus on SOTA decoding-based robust watermarking schemes including KGW, Unigram, and Exp. In each of these methods, the watermarks are embedded by perturbing the output distribution of the original LLM. The perturbation is determined by secret watermark keys held by the LLM owner. Formally, we define the watermarking scheme:

Definition 2 (Watermarked LLMs). The watermarked LLM takes a token sequence \(\textbf{x} \in \mathcal{V}^*\) and a secret key \(sk \in \mathcal{S}\) as input, and outputs a perturbed probability distribution for the next token. The perturbation is determined by \(sk\):

$$M_{\text{wm}} : \mathcal{V}^* \times \mathcal{S} \rightarrow \mathcal{V}$$

The watermark detection outputs the statistical testing score for the null hypothesis that the input token sequence is independent of the watermark secret key: $$f_{\text{detection}} : \mathcal{V}^* \times \mathcal{S} \rightarrow \mathbb{R}$$ The output score reflects the confidence of the watermark’s existence in the input.
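To make the detection function more concrete, the following is a minimal Python sketch of a KGW-style "green list" z-test. The hashing rule in `is_green`, the green fraction `gamma = 0.5`, the greedy embedder `greedy_watermarked`, and all helper names are illustrative assumptions; they are not the exact constructions used by KGW, Unigram, or Exp.

```python
import hashlib
import math

def is_green(prev_token: int, token: int, sk: bytes, gamma: float = 0.5) -> bool:
    """Assumed rule: hash (key, previous token, token) to decide green-list membership."""
    msg = sk + prev_token.to_bytes(4, "big") + token.to_bytes(4, "big")
    digest = hashlib.sha256(msg).digest()
    # Map the first 8 bytes of the digest to [0, 1) and compare against gamma.
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma

def z_score(tokens: list[int], sk: bytes, gamma: float = 0.5) -> float:
    """Toy f_detection: one-sided z-statistic against the null 'tokens are independent of sk'."""
    n = len(tokens) - 1  # number of scored (previous, current) token pairs
    if n <= 0:
        return 0.0
    green = sum(is_green(p, t, sk, gamma) for p, t in zip(tokens, tokens[1:]))
    return (green - gamma * n) / math.sqrt(gamma * (1 - gamma) * n)

def greedy_watermarked(length: int, sk: bytes, vocab_size: int = 1000, start: int = 0) -> list[int]:
    """Hypothetical 'hard' embedder: always emit the first green token (ignores text quality)."""
    tokens = [start]
    while len(tokens) < length + 1:
        prev = tokens[-1]
        tokens.append(next(t for t in range(vocab_size) if is_green(prev, t, sk)))
    return tokens
```

With every scored token green, the statistic equals \(\sqrt{n}\) for `gamma = 0.5`, so even short sequences clear a threshold such as 4; text produced without knowledge of `sk` is expected to score near 0.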

Common Design Choices of LLM Watermarks

There are a number of common design choices in existing LLM watermarking schemes, including robustness, the use of multiple keys, and public detection APIs, that have clear benefits for watermark security and usability. We describe these key design choices briefly below, and then explain how an attacker can easily take advantage of them to launch watermark removal or spoofing attacks.

Robustness. The goal of developing a watermark that is robust to output perturbations is to defend against watermark removal, which may be used to circumvent detection schemes for applications such as phishing or fake news generation. Robust watermark designs have been the topic of many recent works. A more robust watermark can better defend against watermark-removal attacks. However, our work shows that robustness can also enable piggyback spoofing attacks.

Multiple Keys. Many works have explored the possibility of launching watermark stealing attacks to infer the secret pattern of the watermark, which can then boost the performance of spoofing and removal attacks. A natural and effective defense against watermark stealing is using multiple watermark keys during embedding, which can improve the unbiasedness property of the watermark (called distortion-free in the Exp watermark). Rotating multiple secret keys is a common practice in cryptography and is also suggested by prior watermarks. The more keys are used during embedding, the better the watermark unbiasedness, and thus the harder it becomes for attackers to infer the watermark pattern. However, we show that using multiple keys can also introduce new watermark-removal vulnerabilities.

Public Detection API. It is still an open question whether watermark detection APIs should be made publicly available to users. Although this makes it easier to detect watermarked text, it is commonly acknowledged that it will make the system vulnerable to attacks. We study this statement more precisely by examining the specific risk trade-offs that exist, as well as introducing a novel defense that may make the public detection API more feasible in practice.

Attacks, Defenses, and Guidelines

Although the above design choices are beneficial for enhancing the security and usability of watermarking systems, they also introduce new vulnerabilities. Our work studies a set of simple yet effective attacks on common watermarking systems and proposes guidelines and defenses for LLM watermarking in practice.

In particular, we study two types of attacks: watermark-removal attacks and (piggyback or general) spoofing attacks. In a watermark-removal attack, the attacker aims to generate a high-quality response from the LLM without an embedded watermark. In a spoofing attack, the goal is to generate a harmful or incorrect output that has the victim organization’s watermark embedded.

Piggyback Spoofing Attack Exploiting Robustness

More robust watermarks can better defend against editing attacks, but this seemingly desirable property can also be easily misused by malicious users to launch simple piggyback spoofing attacks. In piggyback spoofing attacks, a small portion of toxic or incorrect content is inserted into the watermarked material, making it seem like it was generated by a specific watermarked LLM. The toxic content will still be detected as watermarked, potentially damaging the reputation of the LLM service provider.

Attack Procedure. (i) The attacker queries the target watermarked LLM to receive a high-entropy watermarked sentence \(x_{wm}\). (ii) The attacker edits \(x_{wm}\) to form a new piece of text \(x'\) and claims that \(x'\) was generated by the target LLM. The editing method can be defined by the attacker. Simple strategies could include inserting toxic tokens into the watermarked sentence \(x_{wm}\), or editing specific tokens to make the output inaccurate. As we show, editing can be done at scale by querying another LLM like GPT4 to generate fluent output.

Results. We show that piggyback spoofing can generate fluent, watermarked, but inaccurate results at scale. Specifically, we edit the watermarked sentence by querying GPT4 using the prompt "Modify less than 3 words in the following sentence and make it inaccurate or have opposite meanings."

Figure 3 shows that we can successfully generate fluent results, with a slightly higher PPL. 94.17% of the piggyback results have a z-score higher than the detection threshold of 4. We randomly sampled 100 piggyback results and manually checked that most of them (92%) are fluent and have inaccurate or opposite content compared with the original watermarked content. We present a spoofing sentence below. For more results, please check our manuscript.

Watermarked content, z-score: 4.93, PPL: 4.61

Earth has a history of 4.5 billion years and humans have been around for 200,000 years. Yet humans have been using computers for just over 70 years and even then the term was first used in 1945. In the age of technology, we are still just getting started. The first computer, ENIAC (Electronic Numerical Integrator And Calculator), was built at the University of Pennsylvania between 1943 and 1946. The ENIAC took up 1800 sq ft and had 18,000 vacuum tube and mechanical parts. The ENIAC was used for mathematical calculations, ballistics, and code breaking. The ENIAC was 1000 times faster than any other calculator of the time. The first computer to run a program was the Z3, built by Konrad Zuse at his home.

Piggyback attack, z-score: 4.36, PPL: 5.68

Earth has a history of 4.5 billion years and humans have been around for 200,000 years. Yet humans have been using computers for just over 700 years and even then the term was first used in 1445. In the age of technology, we are still just getting started. The first computer, ENIAC (Electronic Numerical Integrator And Calculator), was built at the University of Pennsylvania between 1943 and 1946. The ENIAC took up 1800 sq ft and had 18,000 vacuum tube and mechanical parts. The ENIAC was used for mathematical calculations, ballistics, and code breaking. The ENIAC was 1000 times slower than any other calculator of the time. The first computer to run a program was the Z3, built by Konrad Zuse at his home.
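The example above loses only a small part of its z-score (4.93 to 4.36) after a handful of edits. A rough worked calculation suggests why. It assumes a KGW-style test that counts "green" tokens with green fraction `gamma = 0.5`, and it assumes each edited token can flip at most two scored positions (itself and its successor); the token counts below are hypothetical and are not taken from our experiments.

```python
import math

def z_from_counts(green: int, n: int, gamma: float = 0.5) -> float:
    """z-statistic for `green` green tokens out of `n` scored tokens."""
    return (green - gamma * n) / math.sqrt(gamma * (1 - gamma) * n)

def worst_case_z_after_edits(green: int, n: int, k: int,
                             gamma: float = 0.5, pairs_per_edit: int = 2) -> float:
    """Lower bound on z if each of k edited tokens turns `pairs_per_edit` green hits red."""
    lost = min(green, k * pairs_per_edit)
    return z_from_counts(green - lost, n, gamma)

# Hypothetical numbers: 200 scored tokens, 150 of them green.
before = z_from_counts(150, 200)               # about 7.07
after = worst_case_z_after_edits(150, 200, 3)  # about 6.22, still above a threshold of 4
```

Under these assumptions, three edits remove at most six green hits out of 150, so the detector still flags the modified text even though its meaning may have been reversed.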

Discussion. Piggyback spoofing attacks are easy to execute in practice. Robust LLM watermarks typically do not consider such attacks during design and deployment, and existing robust watermarks are inherently vulnerable to them. We consider this attack challenging to defend against, especially considering the examples presented above, where editing only a single token makes the entire content incorrect. It is hard, if not impossible, to detect whether a particular token is from the attacker by using robust watermark detection algorithms. Recently, researchers proposed publicly detectable watermarks that plant a cryptographic signature into the generated sentence [Fairoze et al. 2024]. They mitigate such piggyback spoofing attacks at the cost of sacrificing robustness. Thus, practitioners should weigh the risks of removal vs. piggyback spoofing attacks for the model at hand.

Guideline #1. Robust watermarks are vulnerable to spoofing attacks and are not suitable as proof of content authenticity alone. To mitigate spoofing while preserving robustness, it may be necessary to combine additional measures such as signature-based fragile watermarks.

Watermark-Removal Attack Exploiting Multiple Keys

SOTA watermarking schemes aim to ensure that the watermarked text retains its high quality and that the private watermark patterns are not easily distinguished by maintaining an unbiasedness property: $$|\mathbb{E}_{sk \in \mathcal{S}}[M_{\text{wm}}(\textbf{x}, sk)] - M_{\text{orig}}(\textbf{x})| = O(\epsilon),$$ i.e., the expected distribution of watermarked output over the watermark key space \(sk \in \mathcal{S}\) is close to the output distribution without a watermark, differing by a distance of \(\epsilon\). Exp is rigorously unbiased, and KGW and Unigram slightly shift the watermarked distributions.

We consider the setting in which multiple keys are used during watermark embedding to defend against watermark stealing attacks. The insight of our proposed watermark-removal attack is that, given the “unbiased” nature of watermarks, malicious users can estimate the output distribution without any watermark by repeatedly querying the watermarked LLM using the same prompt. As this attack estimates the original, unwatermarked distribution, the quality of the generated content is preserved.

Attack Procedure. (i) An attacker queries a watermarked model with an input \(\textbf{x}\) multiple times, observing \(n\) subsequent tokens \(x_{t+1}\). (ii) The attacker then creates a frequency histogram of these tokens and samples according to the frequency. This sampled token matches the result of sampling from an unwatermarked output distribution with a nontrivial probability. Consequently, the attacker can progressively eliminate watermarks while maintaining a high quality of the synthesized content.
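The two steps above can be sketched in a few lines of Python. The toy provider `make_toy_watermarked_model` is a hypothetical stand-in for a multi-key watermarked LLM: each key is assumed to be a set of "green" tokens whose probability is multiplied by `boost` before renormalizing. The attacker-side functions only assume that the same prompt can be queried repeatedly.

```python
import random
from collections import Counter
from typing import Callable, Hashable

def make_toy_watermarked_model(p_orig: dict, keys: list, rng: random.Random,
                               boost: float = 2.0) -> Callable[[], Hashable]:
    """Hypothetical provider: picks one key per query and up-weights that key's green tokens."""
    tokens = list(p_orig)
    def query():
        green = rng.choice(keys)  # one key (green set) is drawn for each query
        weights = [p_orig[t] * (boost if t in green else 1.0) for t in tokens]
        return rng.choices(tokens, weights=weights, k=1)[0]
    return query

def estimate_next_token_distribution(query_model: Callable[[], Hashable], n: int) -> dict:
    """Step (i): query n times on the same prompt and normalize the token counts."""
    counts = Counter(query_model() for _ in range(n))
    return {tok: c / n for tok, c in counts.items()}

def sample_from_histogram(hist: dict, rng: random.Random):
    """Step (ii): sample the next token according to the observed frequencies."""
    tokens = list(hist)
    return rng.choices(tokens, weights=[hist[t] for t in tokens], k=1)[0]

def total_variation(p: dict, q: dict) -> float:
    """Total variation distance between two distributions given as dicts."""
    return 0.5 * sum(abs(p.get(t, 0.0) - q.get(t, 0.0)) for t in set(p) | set(q))
```

In this toy setting, averaging over keys largely cancels the per-key bias, so the histogram tends to land much closer to `p_orig` than any single-key distribution does. A real attack would repeat the procedure token by token, which is why the query cost grows with the output length.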

Results. We study the trade-off between resistance against watermark stealing and watermark-removal attacks by evaluating a recent watermark stealing attack [Jovanović et al. 2024], where we query the watermarked LLM to obtain 2.2 million tokens to “steal” the watermark pattern and then launch spoofing attacks using the estimated watermark pattern. In our watermark-removal attack, we consider that the attacker has observations with different keys.

As shown in Figure 4a, using multiple keys can effectively defend against watermark stealing attacks. With a single key, the attack success rate (ASR) is 91%. We observe that using three keys can effectively reduce the ASR to 13%, and with more than 7 keys, the ASR of watermark stealing is close to zero. However, using more keys also makes the system vulnerable to our watermark-removal attacks, as shown in Figure 4b. When we use more than 7 keys, the detection scores of the content produced by our watermark-removal attacks closely resemble those of unwatermarked content and are much lower than the detection thresholds, with ASRs higher than 97%. Figure 4c suggests that using more keys improves the quality of the output content. This is because, with a greater number of keys, there is a higher probability for an attacker to accurately estimate the unwatermarked distribution.

Discussion. Many prior works have suggested using multiple keys to defend against watermark stealing attacks. However, we reveal that a conflict exists between improving resistance to watermark stealing and the feasibility of removing watermarks. Our results show that finding a “sweet spot” in the number of keys that mitigates both the watermark stealing and the watermark-removal attacks is not trivial. Given this trade-off, we suggest that LLM service providers consider “defense-in-depth” techniques such as anomaly detection, query rate limiting, and user identification verification.

Guideline #2. Using a larger number of watermarking keys can defend against watermark stealing attacks, but increases vulnerability to watermark-removal attacks. Limiting users’ query rates can help to mitigate both attacks.

Attacks Exploiting Public Detection APIs

Finally, we show that publicly available detection APIs can enable both spoofing and removal attacks. The insight is that by querying the detection API, the attacker can gain knowledge about whether a specific token carries the watermark. Thus, the attacker can select tokens based on the detection result to launch spoofing and removal attacks.

Attack Procedure. (i) An attacker feeds a prompt into the watermarked LLM (removal attack) or into a local LLM (spoofing attack), which generates the response in an auto-regressive manner. For the token \(x_i\), the attacker generates a list of possible replacements for \(x_i\). (ii) The attacker queries the detection API using these replacements and samples a token based on their probabilities and detection scores to remove or spoof the watermark while preserving high output quality. The replacement list can be generated by querying the watermarked LLM, by querying a local model, or simply returned by the watermarked LLM (e.g., enabled by OpenAI’s API top_logprobs=5).
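One way to picture step (ii) is the Python sketch below. The weighting rule (token probability times an exponential of the detection score), the temperature-like parameter `beta`, and the `detect` callable are all assumptions made for illustration; they are not the exact sampling rule from our manuscript, and `detect` stands in for whatever public detection endpoint a provider exposes.

```python
import math
import random
from typing import Callable, Sequence

def pick_token(prefix: Sequence[str],
               candidates: Sequence[tuple[str, float]],
               detect: Callable[[list[str]], float],
               rng: random.Random,
               mode: str = "remove",
               beta: float = 1.0) -> str:
    """Sample one candidate, trading off model probability against the detection score.

    candidates: (token, probability) pairs, e.g. the top-k next tokens.
    detect:     hypothetical detection API returning a score for a token sequence.
    mode:       "remove" prefers low scores, "spoof" prefers high scores.
    """
    sign = -1.0 if mode == "remove" else 1.0
    scores = [detect(list(prefix) + [tok]) for tok, _ in candidates]
    base = max(sign * s for s in scores)  # subtract the max for numerical stability
    weights = [p * math.exp(beta * (sign * s - base))
               for (_, p), s in zip(candidates, scores)]
    return rng.choices([tok for tok, _ in candidates], weights=weights, k=1)[0]
```

A small `beta` keeps the choice close to the model's own probabilities (better fluency), while a large `beta` follows the detector almost greedily. Each generated token costs one detection query per candidate, which is why query rate limiting matters as a countermeasure.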

Results. We evaluate the detection scores for both the watermark-removal and the spoofing attacks. Furthermore, for the watermark-removal attack, where the attackers care more about the output quality, we report the output PPL.

As shown in Figure 5a and Figure 5b, watermark-removal attacks exploiting the detection API significantly reduce detection confidence while maintaining high output quality. For instance, for the KGW watermark on the LLAMA-2-7B model, we achieve a median z-score of 1.43, which is much lower than the threshold of 4. The PPL is also close to that of the watermarked outputs (6.17 vs. 6.28). We observe that the Exp watermark has higher PPL than the other two watermarks. A possible reason is that the Exp watermark is deterministic, while the other watermarks enable random sampling during inference. Our attack also employs sampling based on the token probabilities and detection scores, thus we can improve the output quality for the Exp watermark. The spoofing attacks also significantly boost the detection confidence even though the content is not from the watermarked LLM, as depicted in Figure 5c.

Defending Detection with Differential Privacy. In light of the issues above, we propose an effective defense using ideas from differential privacy (DP) to counteract the detection-API-based spoofing attacks. DP adds random noise to function results evaluated on private datasets such that the results from neighboring datasets are indistinguishable. Similarly, we consider adding Gaussian noise to the distance score in the watermark detection, making the detection \((\epsilon, \delta)\)-DP and ensuring that attackers cannot tell the difference between two queries that differ by a single replaced token, thus making the attacks harder to launch.

As shown in Figure 6, with a noise scale of \(\sigma=4\), the DP detection’s accuracy drops from the original 98.2% to 97.2% on KGW and LLAMA-2-7B, while the spoofing ASR becomes 0%. The results are consistent for other watermarks and models.
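A minimal Python sketch of the noisy detection idea follows. It assumes the detection score is a z-score thresholded at 4 and that replacing one token changes at most two green-token hits (`hits_changed = 2`); these specifics, the function names, and the example length of 200 tokens are illustrative assumptions rather than the configuration used in our experiments.

```python
import math
import random

def noisy_detection(z: float, sigma: float, rng: random.Random,
                    threshold: float = 4.0) -> tuple[float, bool]:
    """Add Gaussian noise to the detection score before thresholding."""
    noisy = z + rng.gauss(0.0, sigma)
    return noisy, noisy > threshold

def single_token_shift(n: int, gamma: float = 0.5, hits_changed: int = 2) -> float:
    """Approximate change in z when one token is replaced in a text of n scored tokens."""
    return hits_changed / math.sqrt(gamma * (1 - gamma) * n)

# For a hypothetical 200-token text, one replacement moves z by about 0.28,
# far below a noise scale of sigma = 4.
shift = single_token_shift(200)
```

Because the per-token signal is much smaller than the noise, an attacker would need many repeated queries per candidate to average the noise out, whereas genuinely watermarked text whose score sits well above the threshold is still likely to be flagged.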

Discussion. The detection API, available to the public, aids users in differentiating between AI and human-created materials. However, it can be exploited by attackers to gradually remove watermarks or launch spoofing attacks. We propose a defense utilizing ideas from differential privacy. Even though the attacker can still obtain useful information by increasing the detection sensitivity, our defense significantly increases the difficulty of spoofing attacks. However, this method is less effective against watermark-removal attacks that exploit the detection API, because the attackers’ actions will be close to random sampling, which, albeit with lower success rates, remains an effective way of removing watermarks. Therefore, we leave developing a more powerful defense mechanism against watermark-removal attacks exploiting the detection API as future work. We recommend that companies providing detection services detect and curb malicious behavior by limiting query rates from potential attackers, and also verify the identity of users to protect against Sybil attacks.

Guideline #3. Public detection APIs can enable both spoofing and removal attacks. To defend against these attacks, we propose a DP-inspired defense, which, combined with techniques such as anomaly detection, query rate limiting, and user identification verification, can help to make public detection more feasible in practice.

Conclusion

Though LLM watermarking is a promising software for auditing the utilization of LLM-generated textual content, basic trade-offs exist within the robustness, usability, and utility of current approaches. Specifically, our work exhibits that it’s straightforward to make the most of frequent design decisions in LLM watermarks to launch assaults that may simply take away the watermark or generate falsely watermarked textual content. Our examine finds that these vulnerabilities are frequent to current LLM watermarks and offers warning for the sector in deploying present options in apply with out fastidiously contemplating the affect and trade-offs of watermarking design decisions. To ascertain extra dependable future LLM watermarking programs, we additionally counsel tips for designing and deploying LLM watermarks together with attainable defenses motivated by the theoretical and empirical analyses of our assaults. For extra outcomes and discussions, please see our manuscript.