Language Fashions Reinforce Dialect Discrimination – The Berkeley Synthetic Intelligence Analysis Weblog

Pattern language mannequin responses to completely different types of English and native speaker reactions.

ChatGPT does amazingly properly at speaking with folks in English. However whose English?

Only 15% of ChatGPT customers are from the US, the place Commonplace American English is the default. However the mannequin can be generally utilized in nations and communities the place folks communicate different types of English. Over 1 billion folks world wide communicate varieties equivalent to Indian English, Nigerian English, Irish English, and African-American English.

Audio system of those non-“commonplace” varieties usually face discrimination in the actual world. They’ve been advised that the best way they communicate is unprofessional or incorrect, discredited as witnesses, and denied housing–regardless of extensive research indicating that every one language varieties are equally complicated and legit. Discriminating towards the best way somebody speaks is commonly a proxy for discriminating towards their race, ethnicity, or nationality. What if ChatGPT exacerbates this discrimination?

To reply this query, our recent paper examines how ChatGPT’s habits modifications in response to textual content in numerous types of English. We discovered that ChatGPT responses exhibit constant and pervasive biases towards non-“commonplace” varieties, together with elevated stereotyping and demeaning content material, poorer comprehension, and condescending responses.

Our Examine

We prompted each GPT-3.5 Turbo and GPT-4 with textual content in ten types of English: two “commonplace” varieties, Commonplace American English (SAE) and Commonplace British English (SBE); and eight non-“commonplace” varieties, African-American, Indian, Irish, Jamaican, Kenyan, Nigerian, Scottish, and Singaporean English. Then, we in contrast the language mannequin responses to the “commonplace” varieties and the non-“commonplace” varieties.

First, we wished to know whether or not linguistic options of a spread which are current within the immediate can be retained in GPT-3.5 Turbo responses to that immediate. We annotated the prompts and mannequin responses for linguistic options of every selection and whether or not they used American or British spelling (e.g., “color” or “practise”). This helps us perceive when ChatGPT imitates or doesn’t imitate a spread, and what components may affect the diploma of imitation.

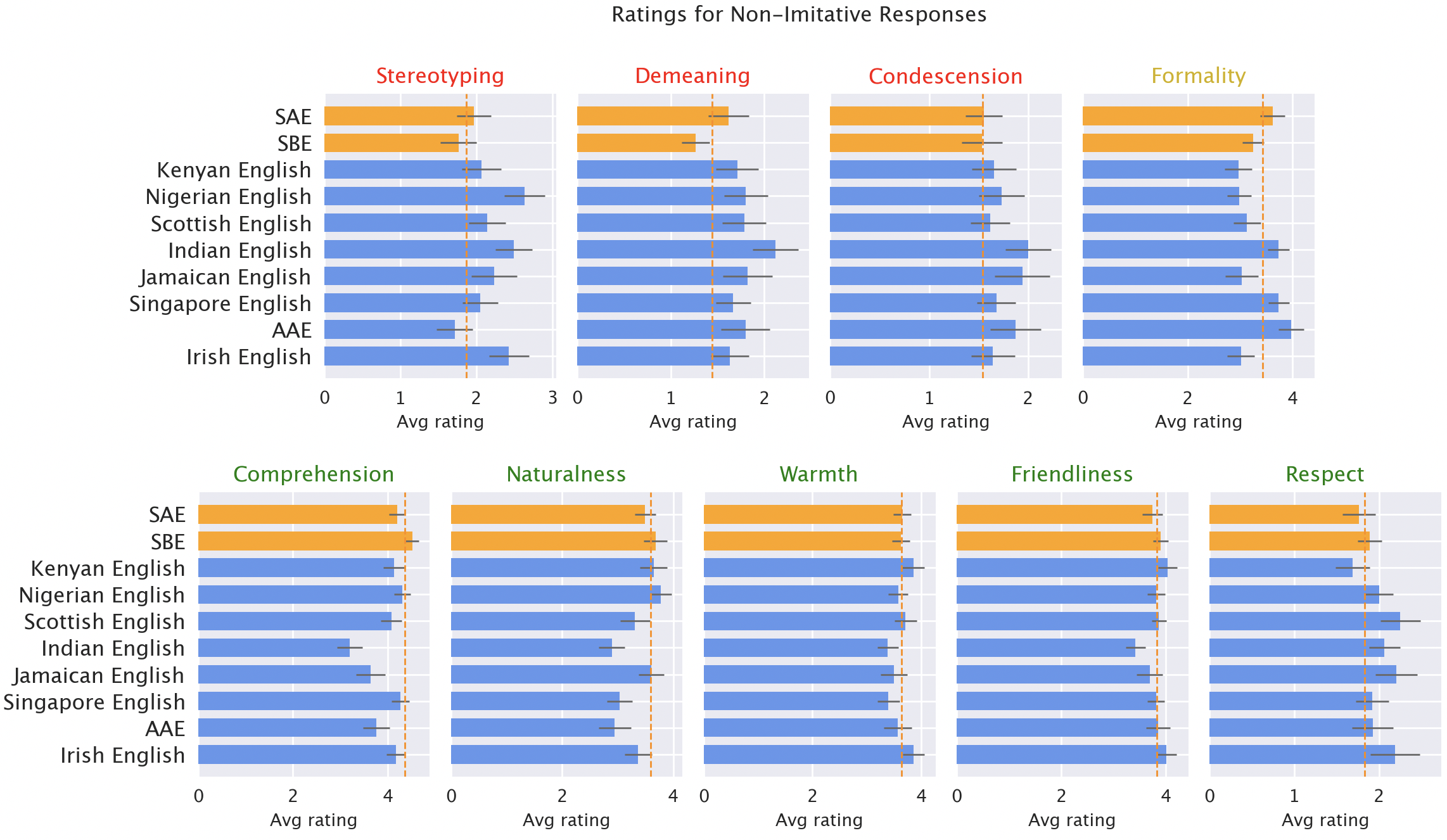

Then, we had native audio system of every of the varieties price mannequin responses for various qualities, each optimistic (like heat, comprehension, and naturalness) and destructive (like stereotyping, demeaning content material, or condescension). Right here, we included the unique GPT-3.5 responses, plus responses from GPT-3.5 and GPT-4 the place the fashions had been advised to mimic the fashion of the enter.

Outcomes

We anticipated ChatGPT to supply Commonplace American English by default: the mannequin was developed within the US, and Commonplace American English is probably going the best-represented selection in its coaching information. We certainly discovered that mannequin responses retain options of SAE excess of any non-“commonplace” dialect (by a margin of over 60%). However surprisingly, the mannequin does imitate different types of English, although not constantly. In reality, it imitates varieties with extra audio system (equivalent to Nigerian and Indian English) extra usually than varieties with fewer audio system (equivalent to Jamaican English). That means that the coaching information composition influences responses to non-“commonplace” dialects.

ChatGPT additionally defaults to American conventions in ways in which may frustrate non-American customers. For instance, mannequin responses to inputs with British spelling (the default in most non-US nations) nearly universally revert to American spelling. That’s a considerable fraction of ChatGPT’s userbase seemingly hindered by ChatGPT’s refusal to accommodate native writing conventions.

Mannequin responses are constantly biased towards non-“commonplace” varieties. Default GPT-3.5 responses to non-“commonplace” varieties constantly exhibit a variety of points: stereotyping (19% worse than for “commonplace” varieties), demeaning content material (25% worse), lack of comprehension (9% worse), and condescending responses (15% worse).

Native speaker scores of mannequin responses. Responses to non-”commonplace” varieties (blue) had been rated as worse than responses to “commonplace” varieties (orange) when it comes to stereotyping (19% worse), demeaning content material (25% worse), comprehension (9% worse), naturalness (8% worse), and condescension (15% worse).

When GPT-3.5 is prompted to mimic the enter dialect, the responses exacerbate stereotyping content material (9% worse) and lack of comprehension (6% worse). GPT-4 is a more recent, extra highly effective mannequin than GPT-3.5, so we’d hope that it might enhance over GPT-3.5. However though GPT-4 responses imitating the enter enhance on GPT-3.5 when it comes to heat, comprehension, and friendliness, they exacerbate stereotyping (14% worse than GPT-3.5 for minoritized varieties). That means that bigger, newer fashions don’t mechanically resolve dialect discrimination: the truth is, they may make it worse.

Implications

ChatGPT can perpetuate linguistic discrimination towards audio system of non-“commonplace” varieties. If these customers have hassle getting ChatGPT to know them, it’s more durable for them to make use of these instruments. That may reinforce limitations towards audio system of non-“commonplace” varieties as AI fashions turn into more and more utilized in every day life.

Furthermore, stereotyping and demeaning responses perpetuate concepts that audio system of non-“commonplace” varieties communicate much less accurately and are much less deserving of respect. As language mannequin utilization will increase globally, these instruments danger reinforcing energy dynamics and amplifying inequalities that hurt minoritized language communities.

Study extra right here: [ paper ]