How healthcare payers and plans can empower members with generative AI

In this post, we discuss how generative artificial intelligence (AI) can help health insurance plan members get the information they need. Many health insurance plan beneficiaries find it challenging to navigate the complex member portals provided by their insurance plans. These portals often require multiple clicks, filters, and searches to find specific information about benefits, deductibles, claim history, and other important details. This can lead to dissatisfaction, confusion, and increased calls to customer service, resulting in a suboptimal experience for both members and providers.

The problem arises from the inability of traditional UIs to understand and respond to natural language queries effectively. Members are forced to learn and adapt to the system’s structure and terminology, rather than the system being designed to understand their natural language questions and provide relevant information seamlessly. Generative AI technology, such as conversational AI assistants, can potentially solve this problem by allowing members to ask questions in their own words and receive accurate, personalized responses. By integrating generative AI powered by Amazon Bedrock and purpose-built AWS data services such as Amazon Relational Database Service (Amazon RDS) into member portals, healthcare payers and plans can empower their members to find the information they need quickly and effortlessly, without navigating through multiple pages or relying heavily on customer service representatives. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a unified API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

The solution presented in this post not only enhances the member experience by providing a more intuitive and user-friendly interface, but also has the potential to reduce call volumes and operational costs for healthcare payers and plans. By addressing this pain point, healthcare organizations can improve member satisfaction, reduce churn, and streamline their operations, ultimately leading to increased efficiency and cost savings.

Solution overview

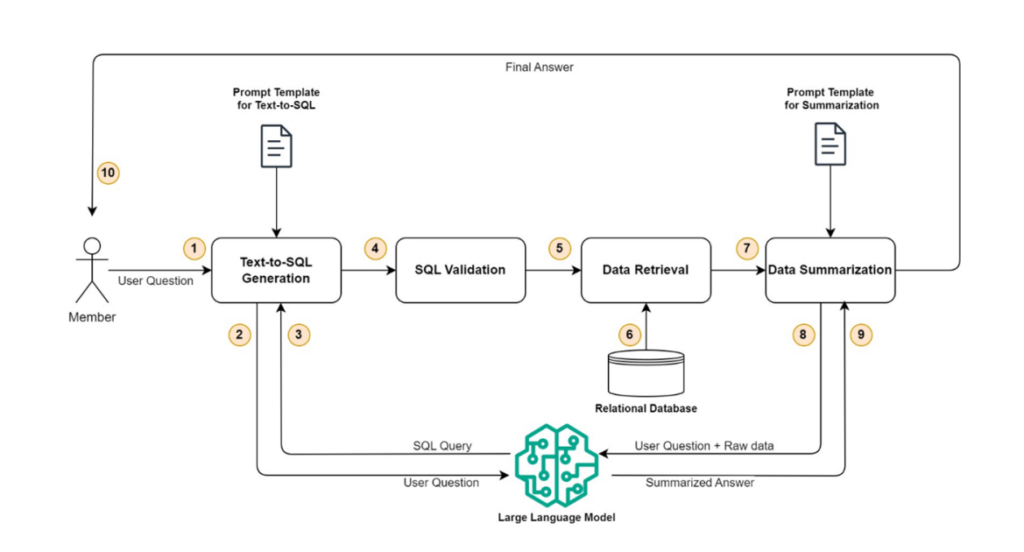

In this section, we dive deep to show how you can use generative AI and large language models (LLMs) to enhance the member experience by transitioning from a traditional filter-based claim search to a prompt-based search, which allows members to ask questions in natural language and get the desired claims or benefit details. From a broad perspective, the entire solution can be divided into four distinct steps: text-to-SQL generation, SQL validation, data retrieval, and data summarization. The following diagram illustrates this workflow.

Let’s dive deep into each step one by one.

Text-to-SQL generation

This step takes the user’s question as input and converts it into a SQL query that can be used to retrieve the claim- or benefit-related information from a relational database. A pre-configured prompt template is used to call the LLM and generate a valid SQL query. The prompt template contains the user question, instructions, and the database schema along with key data elements, such as member ID and plan ID, which are necessary to limit the query’s result set.

SQL validation

This step validates the SQL query generated in the previous step and makes sure it’s complete and safe to run on a relational database. Some of the checks that are performed include:

- No delete, drop, update, or insert operations are present in the generated query

- The query starts with select

- A WHERE clause is present

- Key conditions are present in the WHERE clause (for example, member-id = “78687576501” or member-id like “786875765%%”)

- Query length (string length) is in the expected range (for example, no more than 250 characters)

- Original user question length is in the expected range (for example, no more than 200 characters)

If a check fails, the query isn’t run; instead, the user receives a user-friendly message suggesting that they contact customer service.
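The checks listed above can be sketched in a few lines of Python. This is a minimal illustration, not the validation code used by the solution: the column name `member_id`, the failure labels, and the fallback message are assumptions, and only the equality form of the member filter is checked (the `like` form mentioned above is left out for brevity).

```python
import re

MAX_QUERY_CHARS = 250     # example limit from the text
MAX_QUESTION_CHARS = 200  # example limit from the text

# Hypothetical fallback message shown when any check fails.
FALLBACK_MESSAGE = "We could not answer that question. Please contact customer service."

# Data-modifying keywords that must never appear in a generated query.
FORBIDDEN = re.compile(r"\b(delete|drop|update|insert)\b", re.IGNORECASE)


def validate_sql(sql: str, question: str, member_id: str) -> list[str]:
    """Return the labels of failed checks; an empty list means the query may run."""
    failures = []
    text = sql.strip()

    if FORBIDDEN.search(text):
        failures.append("forbidden_operation")
    if not text.lower().startswith("select"):
        failures.append("not_select")

    # Everything after the first WHERE keyword is treated as the filter clause.
    where = re.search(r"\bwhere\b(.*)", text, re.IGNORECASE | re.DOTALL)
    member_filter = re.compile(
        rf"\bmember_id\s*=\s*'{re.escape(member_id)}'", re.IGNORECASE
    )
    if where is None:
        failures.append("missing_where")
    elif not member_filter.search(where.group(1)):
        failures.append("missing_member_filter")

    if len(text) > MAX_QUERY_CHARS:
        failures.append("query_too_long")
    if len(question) > MAX_QUESTION_CHARS:
        failures.append("question_too_long")
    return failures
```

A caller would run the query only when `validate_sql(...)` returns an empty list and return `FALLBACK_MESSAGE` otherwise. The keyword check is deliberately conservative: it also rejects a query whose string literal happens to contain a word such as "update".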

Data retrieval

After the query has been validated, it’s used to retrieve the claims or benefits data from a relational database. The retrieved data is converted into a JSON object, which is used in the next step to create the final answer using an LLM. This step also checks whether the query returns no data or too many rows. In both cases, a user-friendly message is sent to the user, suggesting they provide more details.
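The retrieval step described above could look roughly like the following sketch. It assumes a Python DB-API connection (for example, one opened with a PostgreSQL driver against Amazon RDS); the row limit, the message text, and the function name are hypothetical.

```python
import json

MAX_ROWS = 20  # hypothetical threshold for "too many rows"

# Hypothetical message asking the member to narrow the question.
NEED_MORE_DETAIL = "Please add more details to your question, such as a date range or claim type."


def retrieve_as_json(conn, sql, max_rows=MAX_ROWS):
    """Run a validated query and return (json_text, None) or (None, message)."""
    cur = conn.cursor()
    try:
        cur.execute(sql)
        columns = [col[0] for col in cur.description]
        # Fetch one row more than the limit so an oversized result is detectable.
        rows = cur.fetchmany(max_rows + 1)
    finally:
        cur.close()

    if not rows or len(rows) > max_rows:
        return None, NEED_MORE_DETAIL

    records = [dict(zip(columns, row)) for row in rows]
    # default=str makes dates and decimals serializable.
    return json.dumps(records, default=str), None
```

Fetching `max_rows + 1` rows avoids pulling an unbounded result set into memory just to discover that it is too large.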

Data summarization

Finally, the JSON object retrieved in the data retrieval step, along with the user’s question, is sent to the LLM to get the summarized response. A pre-configured prompt template is used to call the LLM and generate a user-friendly summarized response to the original question.

Architecture

The solution uses Amazon API Gateway, AWS Lambda, Amazon RDS, Amazon Bedrock, and Anthropic Claude 3 Sonnet on Amazon Bedrock to implement the backend of the application. The backend can be integrated with an existing web application or portal, but for the purpose of this post, we use a single page application (SPA) hosted on Amazon Simple Storage Service (Amazon S3) for the frontend and Amazon Cognito for authentication and authorization. The following diagram illustrates the solution architecture.
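Taken together, the four steps form a short pipeline with two early exits. The sketch below shows one possible way to chain them; each step is passed in as a callable, and all names and the message text are illustrative assumptions rather than the solution's actual code.

```python
from typing import Callable, Optional, Tuple

# Hypothetical message returned when validation fails.
CONTACT_SUPPORT = "We could not process that question. Please contact customer service."


def answer_question(
    question: str,
    member_id: str,
    generate_sql: Callable[[str, str], str],
    is_valid_sql: Callable[[str, str, str], bool],
    retrieve: Callable[[str], Tuple[Optional[str], Optional[str]]],
    summarize: Callable[[str, str, str], str],
) -> str:
    """Run the four steps in order and return the text shown to the member."""
    # Step 1: text-to-SQL generation (an LLM call in the real solution).
    sql = generate_sql(member_id, question)

    # Step 2: SQL validation; a failed check means the query is never run.
    if not is_valid_sql(sql, question, member_id):
        return CONTACT_SUPPORT

    # Step 3: data retrieval; returns (json_text, None) or (None, message).
    data_json, message = retrieve(sql)
    if data_json is None:
        return message or CONTACT_SUPPORT

    # Step 4: data summarization (a second LLM call in the real solution).
    return summarize(question, sql, data_json)
```

Passing the steps in as callables keeps the control flow easy to test with stubs, without a database or a model endpoint.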

The workflow consists of the following steps:

- A single page application (SPA) is hosted using Amazon S3 and loaded into the end-user’s browser using Amazon CloudFront.

- User authentication and authorization is done using Amazon Cognito.

- After a successful authentication, a REST API hosted on API Gateway is invoked.

- The Lambda function, exposed as a REST API using API Gateway, orchestrates the logic to perform the functional steps: text-to-SQL generation, SQL validation, data retrieval, and data summarization. The Amazon Bedrock API endpoint is used to invoke the Anthropic Claude 3 Sonnet LLM. Claim and benefit data is stored in a PostgreSQL database hosted on Amazon RDS. Another S3 bucket is used for storing prompt templates that will be used for SQL generation and data summarization. This solution uses two distinct prompt templates:

  - The text-to-SQL prompt template contains the user question, instructions, and the database schema along with key data elements, such as member ID and plan ID, which are necessary to limit the query’s result set.

  - The data summarization prompt template contains the user question, raw data retrieved from the relational database, and instructions to generate a user-friendly summarized response to the original question.

- Finally, the summarized response generated by the LLM is sent back to the web application running in the user’s browser using API Gateway.
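As an illustration of the Amazon Bedrock call made from the Lambda function in the workflow above, the following sketch builds a request in the Anthropic Messages format and reads the generated text from the response. The function names and the `max_tokens` default are assumptions; the client is passed in so the example does not depend on AWS credentials. In a Lambda function it would typically be created with `boto3.client("bedrock-runtime")`.

```python
import json

# Model ID for Anthropic Claude 3 Sonnet on Amazon Bedrock.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_request_body(prompt: str, max_tokens: int = 512) -> str:
    """Serialize a single-turn request in the Anthropic Messages format."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,  # assumed default, tune for your use case
            "messages": [
                {"role": "user", "content": [{"type": "text", "text": prompt}]}
            ],
        }
    )


def invoke_claude(bedrock_runtime, prompt: str) -> str:
    """Call InvokeModel on the given bedrock-runtime client and return the text."""
    response = bedrock_runtime.invoke_model(
        modelId=MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=build_request_body(prompt),
    )
    # The response body is a stream containing a JSON document.
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]
```

The same helper can serve both LLM calls in the workflow, because text-to-SQL generation and data summarization differ only in the prompt that is sent.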

Sample prompt templates

In this section, we present some sample prompt templates.

The following is an example of a text-to-SQL prompt template:

The {text1} and {text2} data items will be replaced programmatically to populate the ID of the logged-in member and the user question. Also, more examples can be added to help the LLM generate appropriate SQL queries.

The following is an example of a data summarization prompt template:

The {text1}, {text2}, and {text3} data items will be replaced programmatically to populate the user question, the SQL query generated in the previous step, and the data retrieved from Amazon RDS and formatted as JSON.
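To make the placeholder substitution concrete, here is a hypothetical text-to-SQL template and a helper that fills it. The template wording, the `claims` schema, and the function names are invented for illustration and are not the template used by the solution; only the `{text1}` and `{text2}` placeholders come from the description above.

```python
import re

# Hypothetical template: {text1} is the member ID, {text2} is the user question.
TEXT_TO_SQL_TEMPLATE = """You write SQL for a health plan member portal.

Schema (illustrative):
claims(claim_id, member_id, plan_id, service_date, status, billed_amount)

Rules:
- Return one PostgreSQL SELECT statement and nothing else.
- Always filter with member_id = '{text1}'.

Example:
Question: Which of my claims are pending?
SQL: SELECT claim_id, service_date FROM claims WHERE member_id = '{text1}' AND status = 'PENDING'

Question: {text2}
SQL:"""


def fill_template(template: str, values: dict) -> str:
    """Replace {text1}, {text2}, ... in a single pass over the template."""
    # A single pass means text inserted for one placeholder is never rescanned.
    return re.sub(r"\{(text\d+)\}", lambda m: values[m.group(1)], template)


def build_text_to_sql_prompt(member_id: str, question: str) -> str:
    return fill_template(
        TEXT_TO_SQL_TEMPLATE, {"text1": member_id, "text2": question.strip()}
    )
```

Substituting in one pass, rather than with chained `str.replace` calls, keeps a question that happens to contain the literal text `{text1}` from being rewritten.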
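A data summarization prompt can be filled the same way. The template text below is a hypothetical stand-in, not the one used by the solution; the three placeholders follow the order described above (user question, generated SQL query, JSON data).

```python
import re

# Hypothetical template: {text1} = question, {text2} = SQL query, {text3} = JSON rows.
SUMMARY_TEMPLATE = """You are a helpful assistant for health plan members.

The member asked: {text1}

This SQL query was run to find the answer:
{text2}

The query returned this data in JSON format:
{text3}

Answer the member's question in plain, friendly language using only the data above.
Do not mention SQL or JSON in the answer."""


def build_summary_prompt(question: str, sql: str, data_json: str) -> str:
    """Fill the three placeholders in a single pass over the template."""
    values = {"text1": question, "text2": sql, "text3": data_json}
    return re.sub(r"\{(text\d+)\}", lambda m: values[m.group(1)], SUMMARY_TEMPLATE)
```

Instructing the model to rely only on the supplied data is one common way to reduce the chance that the summary contains details that are not in the member's records.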

Security

Amazon Bedrock is in scope for common compliance standards such as Service and Organization Control (SOC), International Organization for Standardization (ISO), and Health Insurance Portability and Accountability Act (HIPAA) eligibility, and you can use Amazon Bedrock in compliance with the General Data Protection Regulation (GDPR). The service enables you to deploy and use LLMs in a secured and controlled environment. The Amazon Bedrock VPC endpoints powered by AWS PrivateLink allow you to establish a private connection between the virtual private cloud (VPC) in your account and the Amazon Bedrock service account. This connection enables VPC instances to communicate with service resources without the need for public IP addresses. We define the different accounts as follows:

- Customer account – This is the account owned by the customer, where they manage their AWS resources such as RDS instances and Lambda functions, and interact with the Amazon Bedrock hosted LLMs securely using Amazon Bedrock VPC endpoints. You should manage access to Amazon RDS resources and databases by following the security best practices for Amazon RDS.

- Amazon Bedrock service accounts – This set of accounts is owned and operated by the Amazon Bedrock service team, which hosts the various service APIs and related service infrastructure.

- Model deployment accounts – The LLMs offered by various vendors are hosted and operated by AWS in separate accounts dedicated to model deployment. Amazon Bedrock maintains complete control and ownership of model deployment accounts, making sure no LLM vendor has access to these accounts.

When a customer interacts with Amazon Bedrock, their requests are routed through a secured network connection to the Amazon Bedrock service account. Amazon Bedrock then determines which model deployment account hosts the LLM requested by the customer, finds the corresponding endpoint, and routes the request securely to the model endpoint hosted in that account. The LLMs are used for inference tasks, such as generating text or answering questions.

No customer data is stored within Amazon Bedrock accounts, nor is it ever shared with LLM providers or used for tuning the models. Communications and data transfers occur over private network connections using TLS 1.2+, minimizing the risk of data exposure or unauthorized access.

By implementing this multi-account architecture and private connectivity, Amazon Bedrock provides a secure environment, making sure customer data remains isolated and secure within the customer’s own account, while still allowing them to use the power of LLMs provided by third-party providers.

Conclusion

Empowering health insurance plan members with generative AI technology can revolutionize the way they interact with their insurance plans and access essential information. By integrating conversational AI assistants powered by Amazon Bedrock and using purpose-built AWS data services such as Amazon RDS, healthcare payers and insurance plans can provide a seamless, intuitive experience for their members. This solution not only enhances member satisfaction, but can also reduce operational costs by streamlining customer service operations. Embracing innovative technologies like generative AI is becoming crucial for organizations to stay competitive and deliver exceptional member experiences.

To learn more about how generative AI can accelerate health innovations and improve patient experiences, refer to Payors on AWS and Transforming Patient Care: Generative AI Innovations in Healthcare and Life Sciences (Part 1). For more information about using generative AI with AWS services, refer to Build generative AI applications with Amazon Aurora and Knowledge Bases for Amazon Bedrock and the Generative AI category on the AWS Database Blog.

About the Authors

Sachin Jain is a Senior Solutions Architect at Amazon Web Services (AWS) with a focus on helping Healthcare and Life-Sciences customers in their cloud journey. He has over 20 years of experience in the technology, healthcare, and engineering domains.

Sanjoy Thanneer is a Sr. Technical Account Manager with AWS based out of New York. He has over 20 years of experience working in the database and analytics domains. He is passionate about helping enterprise customers build scalable, resilient, and cost-efficient applications.

Sukhomoy Basak is a Sr. Solutions Architect at Amazon Web Services, with a passion for Data, Analytics, and GenAI solutions. Sukhomoy works with enterprise customers to help them architect, build, and scale applications to achieve their business outcomes.
