Assessing the Capability of Large Language Models to Generate Innovative Research Ideas: Insights from a Study with Over 100 NLP Experts

Research idea generation methods have evolved through techniques such as iterative novelty boosting, multi-agent collaboration, and multi-module retrieval. These approaches aim to improve idea quality and novelty in research contexts. Previous studies focused primarily on improving generation methods over basic prompting, without comparing the results against human expert baselines. Large language models (LLMs) have also been applied to other research tasks, including experiment execution, automatic review generation, and related work curation. However, these applications differ from the creative, open-ended task of research ideation addressed in this paper.

The field of computational creativity examines AI's ability to produce novel and diverse outputs. Previous studies indicated that AI-generated writing tends to be less creative than that of professional writers. In contrast, this paper finds that LLM-generated ideas can be more novel than those of human experts in research ideation. Human evaluations have also been conducted to assess the impact of AI exposure or human-AI collaboration on novelty and diversity, with mixed results. This study includes a human evaluation of idea novelty, focusing on comparing human experts and LLMs on the challenging task of research ideation.

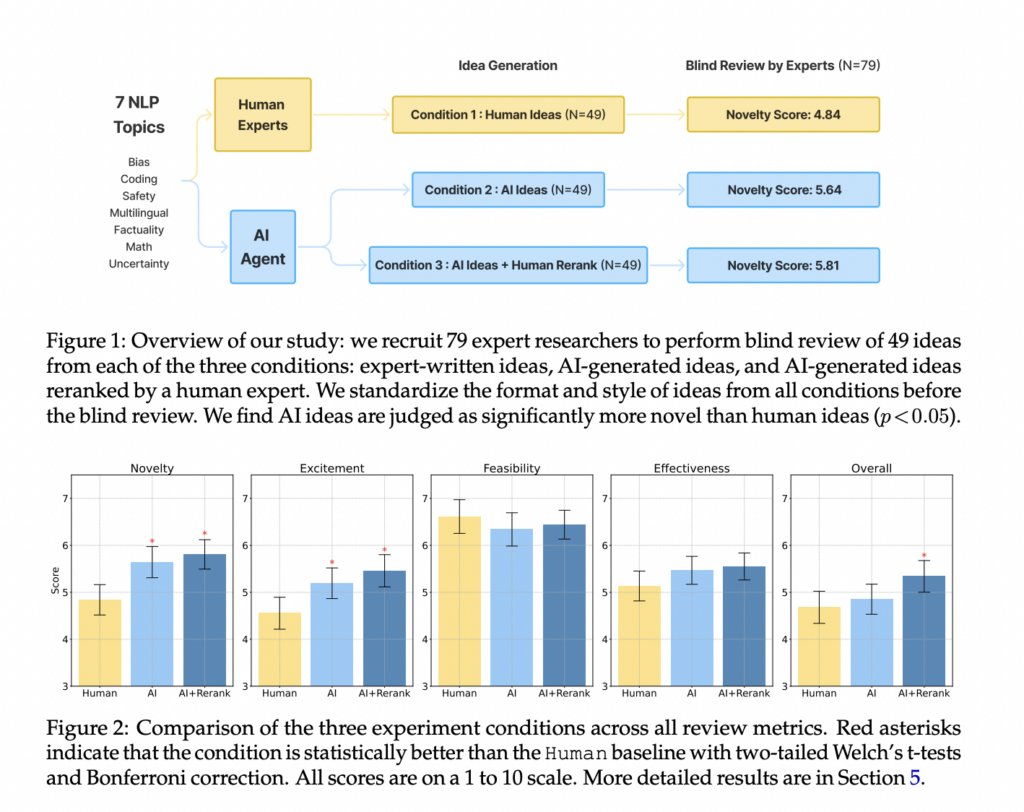

Recent advances in LLMs have sparked interest in developing research agents for autonomous idea generation. This study addresses the lack of comprehensive evaluations by rigorously assessing LLM capabilities in producing novel, expert-level research ideas. The experimental design compares an LLM ideation agent with expert NLP researchers, recruiting over 100 participants for idea generation and blind reviews. The findings show that LLM-generated ideas are judged more novel but slightly less feasible than human-generated ones. The study identifies open problems in building and evaluating research agents, acknowledges the challenges in human judgments of novelty, and proposes a comprehensive design for future research in which ideas are executed as full projects.

Researchers from Stanford University have introduced Quantum Superposition Prompting (QSP), a novel framework designed to explore and quantify uncertainty in language model outputs. QSP generates a "superposition" of possible interpretations for a given query, assigning a complex amplitude to each interpretation. The method uses "measurement" prompts to collapse this superposition along different bases, yielding probability distributions over outcomes. QSP's effectiveness will be evaluated on tasks involving multiple valid perspectives or ambiguous interpretations, including ethical dilemmas, creative writing prompts, and open-ended analytical questions.
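To make the superposition-and-measurement idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names (`normalize`, `measure`, `change_basis`), the hard-coded amplitudes, and the example basis are all hypothetical, and in a real system the amplitudes would presumably come from prompting a model rather than being written by hand.

```python
import math

def normalize(amplitudes):
    """Scale complex amplitudes so their squared magnitudes sum to 1."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in amplitudes.values()))
    if norm == 0:
        raise ValueError("at least one amplitude must be non-zero")
    return {label: a / norm for label, a in amplitudes.items()}

def measure(amplitudes):
    """Born-rule style readout: probability = |amplitude|^2 after normalizing."""
    state = normalize(amplitudes)
    return {label: abs(a) ** 2 for label, a in state.items()}

def change_basis(amplitudes, basis):
    """Project the state onto a new set of basis vectors.

    `basis` maps an outcome label to {interpretation: coefficient}.
    The basis is assumed (not checked) to be orthonormal.
    """
    state = normalize(amplitudes)
    return {
        outcome: sum(complex(coeffs.get(k, 0)).conjugate() * a
                     for k, a in state.items())
        for outcome, coeffs in basis.items()
    }

# Hypothetical amplitudes for two readings of an ambiguous query.
superposition = {"literal": 3 + 0j, "figurative": 0 + 4j}

# Measuring in the original basis: roughly 0.36 literal, 0.64 figurative.
direct = measure(superposition)

# Measuring in a rotated (hypothetical) basis gives a different distribution.
s = 1 / math.sqrt(2)
rotated_basis = {
    "plus": {"literal": s, "figurative": s},
    "minus": {"literal": s, "figurative": -s},
}
rotated = measure(change_basis(superposition, rotated_basis))
```

The point of the sketch is that the same set of amplitudes can produce different outcome distributions depending on the basis chosen for measurement, which is the property the description above attributes to the "measurement" prompts.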

The study also presents Fractal Uncertainty Decomposition (FUD), a technique that recursively breaks queries down into hierarchical structures of sub-queries, assessing uncertainty at each level. FUD decomposes the initial query, estimates confidence for each sub-component, and recursively applies the process to low-confidence components. The resulting tree of nested confidence estimates is aggregated using statistical methods and prompted meta-analysis. Evaluation metrics for these methods include the diversity and coherence of generated superpositions, the ability to capture human-judged ambiguities, and improvements in uncertainty calibration compared to classical methods.
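The recursive procedure described for FUD can be sketched in a few lines of Python. This is a toy under explicit assumptions: `decompose` and `estimate` are hypothetical stand-ins for model prompts, the 0.7 threshold and depth limit are arbitrary, and averaging child scores is only one possible aggregation rule (the description mentions statistical methods and prompted meta-analysis without specifying them).

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List

@dataclass
class Node:
    query: str
    confidence: float
    children: List["Node"] = field(default_factory=list)

def build_tree(query: str,
               decompose: Callable[[str], List[str]],
               estimate: Callable[[str], float],
               threshold: float = 0.7,
               max_depth: int = 3,
               depth: int = 0) -> Node:
    """Estimate confidence, then recurse only into low-confidence queries."""
    node = Node(query, estimate(query))
    if node.confidence < threshold and depth < max_depth:
        for sub in decompose(query):
            node.children.append(
                build_tree(sub, decompose, estimate,
                           threshold, max_depth, depth + 1))
    return node

def aggregate(node: Node) -> float:
    """Assumed aggregation rule: a parent's score is the mean of its children."""
    if not node.children:
        return node.confidence
    return mean(aggregate(child) for child in node.children)

# Hypothetical lookup tables standing in for model calls.
CONFIDENCE: Dict[str, float] = {
    "root": 0.4, "a": 0.9, "b": 0.5, "b1": 0.8, "b2": 0.6,
}
SUBQUERIES: Dict[str, List[str]] = {"root": ["a", "b"], "b": ["b1", "b2"]}

tree = build_tree(
    "root",
    decompose=lambda q: SUBQUERIES.get(q, []),
    estimate=lambda q: CONFIDENCE[q],
)
overall = aggregate(tree)
```

In this toy run, the confident sub-query `a` is left as a leaf, while `b` is expanded because its confidence falls below the threshold. The depth limit keeps the recursion bounded even if every estimate stays low.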

The study shows that LLMs can generate research ideas judged as more novel than those of human experts, with statistical significance (p < 0.05). However, LLM-generated ideas were rated slightly lower on feasibility. Over 100 NLP researchers participated in generating and blindly reviewing ideas from both sources. The evaluation used metrics including novelty, feasibility, and overall effectiveness. Open problems identified include issues with LLM self-evaluation and a lack of idea diversity. The research proposes an end-to-end study design for future work, in which generated ideas are executed as full projects to assess how novelty and feasibility judgments affect research outcomes.
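For readers unfamiliar with what a threshold such as p < 0.05 means when comparing two sets of review scores, the sketch below runs an exact two-sided permutation test on the difference in means. The article does not say which statistical test the study used, so the permutation test is a substituted, standard choice. The function name `permutation_p_value` is hypothetical, and the scores are made-up placeholders, not data from the study.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in group means.

    Enumerates every way to split the pooled scores into groups of the
    original sizes, so it is only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)
    extreme = 0
    count = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        # Small tolerance guards against floating-point ties.
        if diff >= observed - 1e-12:
            extreme += 1
        count += 1
    return extreme / count

# Placeholder novelty ratings (illustrative only, NOT from the study).
human_scores = [4, 5, 5, 6, 4, 5, 6, 4]
llm_scores = [6, 5, 7, 6, 5, 6, 7, 6]

p = permutation_p_value(human_scores, llm_scores)
significant = p < 0.05  # the conventional threshold quoted above
```

The p-value is the fraction of label shufflings that produce a gap at least as large as the observed one. A small value indicates that the observed difference would be unlikely if the group labels made no difference.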

In conclusion, this study provides the first rigorous comparison between LLMs and expert NLP researchers in generating research ideas. LLM-generated ideas were judged more novel but slightly less feasible than human-generated ones. The research identifies open problems in LLM self-evaluation and idea diversity, highlighting the challenges in developing effective research agents. Acknowledging the complexities of human judgments of novelty, the authors propose an end-to-end study design for future research. This approach involves executing generated ideas as full projects to investigate how differences in novelty and feasibility judgments translate into meaningful research outcomes, addressing the gap between idea generation and practical application.

Check out the Paper. All credit for this research goes to the researchers of this project.

Shoaib Nazir is a consulting intern at MarktechPost and has completed his M.Tech dual degree at the Indian Institute of Technology (IIT), Kharagpur. With a strong passion for data science, he is particularly interested in the diverse applications of artificial intelligence across various domains. Shoaib is driven by a desire to explore the latest technological advancements and their practical implications in everyday life. His enthusiasm for innovation and real-world problem-solving fuels his continuous learning and contribution to the field of AI.