Implementing superior immediate engineering with Amazon Bedrock

Regardless of the power of generative artificial intelligence (AI) to imitate human habits, it usually requires detailed directions to generate high-quality and related content material. Immediate engineering is the method of crafting these inputs, known as prompts, that information basis fashions (FMs) and enormous language fashions (LLMs) to supply desired outputs. Immediate templates can be used as a construction to assemble prompts. By fastidiously formulating these prompts and templates, builders can harness the facility of FMs, fostering pure and contextually applicable exchanges that improve the general consumer expertise. The immediate engineering course of can be a fragile steadiness between creativity and a deep understanding of the mannequin’s capabilities and limitations. Crafting prompts that elicit clear and desired responses from these FMs is each an artwork and a science.

This publish gives invaluable insights and sensible examples to assist steadiness and optimize the immediate engineering workflow. We particularly concentrate on superior immediate strategies and finest practices for the fashions offered in Amazon Bedrock, a completely managed service that gives a alternative of high-performing FMs from main AI firms reminiscent of Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon by way of a single API. With these prompting strategies, builders and researchers can harness the complete capabilities of Amazon Bedrock, offering clear and concise communication whereas mitigating potential dangers or undesirable outputs.

Overview of superior immediate engineering

Immediate engineering is an efficient strategy to harness the facility of FMs. You may move directions inside the context window of the FM, permitting you to move particular context into the immediate. By interacting with an FM by way of a collection of questions, statements, or detailed directions, you’ll be able to modify FM output habits based mostly on the particular context of the output you need to obtain.

By crafting well-designed prompts, you may as well improve the mannequin’s security, ensuring it generates outputs that align along with your desired targets and moral requirements. Moreover, immediate engineering lets you increase the mannequin’s capabilities with domain-specific data and exterior instruments with out the necessity for resource-intensive processes like fine-tuning or retraining the mannequin’s parameters. Whether or not in search of to reinforce buyer engagement, streamline content material technology, or develop modern AI-powered options, harnessing the talents of immediate engineering may give generative AI functions a aggressive edge.

To be taught extra in regards to the fundamentals of immediate engineering, discuss with What is Prompt Engineering?

COSTAR prompting framework

COSTAR is a structured methodology that guides you thru crafting efficient prompts for FMs. By following its step-by-step strategy, you’ll be able to design prompts tailor-made to generate the varieties of responses you want from the FM. The magnificence of COSTAR lies in its versatility—it gives a sturdy basis for immediate engineering, whatever the particular approach or strategy you use. Whether or not you’re utilizing few-shot studying, chain-of-thought prompting, or one other methodology (lined later on this publish), the COSTAR framework equips you with a scientific strategy to formulate prompts that unlock the complete potential of FMs.

COSTAR stands for the next:

- Context – Offering background data helps the FM perceive the particular state of affairs and supply related responses

- Goal – Clearly defining the duty directs the FM’s focus to fulfill that particular objective

- Fashion – Specifying the specified writing type, reminiscent of emulating a well-known character or skilled professional, guides the FM to align its response along with your wants

- Tone – Setting the tone makes positive the response resonates with the required sentiment, whether or not it’s formal, humorous, or empathetic

- Viewers – Figuring out the supposed viewers tailors the FM’s response to be applicable and comprehensible for particular teams, reminiscent of specialists or newbies

- Response – Offering the response format, like an inventory or JSON, makes positive the FM outputs within the required construction for downstream duties

By breaking down the immediate creation course of into distinct phases, COSTAR empowers you to methodically refine and optimize your prompts, ensuring each facet is fastidiously thought of and aligned along with your particular targets. This degree of rigor and deliberation finally interprets into extra correct, coherent, and invaluable outputs from the FM.

Chain-of-thought prompting

Chain-of-thought (CoT) prompting is an strategy that improves the reasoning talents of FMs by breaking down complicated questions or duties into smaller, extra manageable steps. It mimics how people purpose and clear up issues by systematically breaking down the decision-making course of. With conventional prompting, a language mannequin makes an attempt to supply a ultimate reply immediately based mostly on the immediate. Nevertheless, in lots of circumstances, this may occasionally result in suboptimal or incorrect responses, particularly for duties that require multistep reasoning or logical deductions.

CoT prompting addresses this challenge by guiding the language mannequin to explicitly lay out its step-by-step thought course of, often called a reasoning chain, earlier than arriving on the ultimate reply. This strategy makes the mannequin’s reasoning course of extra clear and interpretable. This method has been proven to considerably enhance efficiency on duties that require multistep reasoning, logical deductions, or complicated problem-solving. Total, CoT prompting is a robust approach that makes use of the strengths of FMs whereas mitigating their weaknesses in complicated reasoning duties, finally resulting in extra dependable and well-reasoned outputs.

Let’s have a look at some examples of CoT prompting with its totally different variants.

CoT with zero-shot prompting

The primary instance is a zero-shot CoT immediate. Zero-shot prompting is a way that doesn’t embody a desired output instance within the preliminary immediate.

The next instance makes use of Anthropic’s Claude in Amazon Bedrock. XML tags are used to supply additional context within the immediate. Though Anthropic Claude can perceive the immediate in a wide range of codecs, it was educated utilizing XML tags. On this case, there are sometimes higher high quality and latency outcomes if we use this tagging construction so as to add additional directions within the immediate. For extra data on learn how to present extra context or directions, discuss with the related documentation for the FM you might be utilizing.

You should use Amazon Bedrock to ship Anthropic Claude Text Completions API or Anthropic Claude Messages API inference requests, as seen within the following examples. See the complete documentation at Anthropic Claude models.

We enter the next immediate:

As you’ll be able to see within the instance, the FM offered reasoning utilizing the <pondering></pondering> tags to supply the ultimate reply. This extra context permits us to carry out additional experimentation by tweaking the immediate directions.

CoT with few-shot prompting

Few-shot prompting is a way that features a desired output instance within the preliminary immediate. The next instance features a easy CoT pattern response to assist the mannequin reply the follow-up query. Few-shot prompting examples may be outlined in a immediate catalog or template, which is mentioned later on this publish.

The next is our normal few-shot immediate (not CoT prompting):

We get the next response:

Though this response is appropriate, we could need to know the variety of goldfish and rainbow fish which can be left. Due to this fact, we have to be extra particular in how we need to construction the output. We will do that by including a thought course of we wish the FM to reflect in our instance reply.

The next is our CoT immediate (few-shot):

We get the next appropriate response:

Self-consistency prompting

To additional enhance your CoT prompting talents, you’ll be able to generate a number of responses which can be aggregated and choose the commonest output. This is called self-consistency prompting. Self-consistency prompting requires sampling a number of, various reasoning paths by way of few-shot CoT. It then makes use of the generations to pick probably the most constant reply. Self-consistency with CoT is confirmed to outperform normal CoT as a result of choosing from a number of responses often results in a extra constant answer.

If there’s uncertainty within the response or if the outcomes disagree considerably, both a human or an overarching FM (see the immediate chaining part on this publish) can evaluation every final result and choose probably the most logical alternative.

For additional particulars on self-consistency prompting with Amazon Bedrock, see Enhance performance of generative language models with self-consistency prompting on Amazon Bedrock.

Tree of Ideas prompting

Tree of Thoughts (ToT) prompting is a way used to enhance FM reasoning capabilities by breaking down bigger drawback statements right into a treelike format, the place every drawback is split into smaller subproblems. Consider this as a tree construction: the tree begins with a strong trunk (representing the primary subject) after which separates into smaller branches (smaller questions or subjects).

This strategy permits the FMs to self-evaluate. The mannequin is prompted to purpose by way of every subtopic and mix the options to reach on the ultimate reply. The ToT outputs are then mixed with search algorithms, reminiscent of breadth-first search (BFS) and depth-first search (DFS), which lets you traverse ahead and backward by way of every subject within the tree. In accordance with Tree of Thoughts: Deliberate Problem Solving with Large Language Models, ToT considerably outperforms different prompting strategies.

One methodology of utilizing ToT is to ask the LMM to guage whether or not every thought within the tree is logical, potential, or inconceivable in case you’re fixing a posh drawback. You can even apply ToT prompting in different use circumstances. For instance, in case you ask an FM, “What are the results of local weather change?” you should use ToT to assist break this subject down into subtopics reminiscent of “checklist the environmental results” and “checklist the social results.”

The next instance makes use of the ToT prompting approach to permit Claude 3 Sonnet to resolve the place the ball is hidden. The FM can take the ToT output (subproblems 1–5) and formulate a ultimate reply.

We use the next immediate:

We get the next response:

Utilizing the ToT prompting approach, the FM has damaged down the issue of, “The place is the ball?” right into a set of subproblems which can be easier to reply. We sometimes see extra logical outcomes with this prompting strategy in comparison with a zero-shot direct query reminiscent of, “The place is the ball?”

Variations between CoT and ToT

The next desk summarizes the important thing variations between ToT and CoT prompting.

| CoT | ToT | |

| Construction | CoT prompting follows a linear chain of reasoning steps. | ToT prompting has a hierarchical, treelike construction with branching subproblems. |

| Depth | CoT can use the self-consistency methodology for elevated understanding. | ToT prompting encourages the FM to purpose extra deeply by breaking down subproblems into smaller ones, permitting for extra granular reasoning. |

| Complexity | CoT is a less complicated strategy, requiring much less effort than ToT. | ToT prompting is best fitted to dealing with extra complicated issues that require reasoning at a number of ranges or contemplating a number of interrelated components. |

| Visualization | CoT is straightforward to visualise as a result of it follows a linear trajectory. If utilizing self-consistency, it could require a number of reruns. | The treelike construction of ToT prompting may be visually represented in a tree construction, making it simple to grasp and analyze the reasoning course of. |

The next diagram visualizes the mentioned strategies.

Immediate chaining

Constructing on the mentioned prompting strategies, we now discover immediate chaining strategies, that are helpful in dealing with extra superior issues. In immediate chaining, the output of an FM is handed as enter to a different FM in a predefined sequence of N fashions, with immediate engineering between every step. This lets you break down complicated duties and questions into subtopics, every as a unique enter immediate to a mannequin. You should use ToT, CoT, and different prompting strategies with immediate chaining.

Amazon Bedrock Prompt Flows can orchestrate the end-to-end immediate chaining workflow, permitting customers to enter prompts in a logical sequence. These options are designed to speed up the event, testing, and deployment of generative AI functions so builders and enterprise customers can create extra environment friendly and efficient options which can be easy to take care of. You should use immediate administration and flows graphically within the Amazon Bedrock console or Amazon Bedrock Studio or programmatically by way of the Amazon Bedrock AWS SDK APIs.

Different choices for immediate chaining embody utilizing third-party LangChain libraries or LangGraph, which might handle the end-to-end orchestration. These are third-party frameworks designed to simplify the creation of functions utilizing FMs.

The next diagram showcases how a immediate chaining move can work:

The next instance makes use of immediate chaining to carry out a authorized case evaluation.

Immediate 1:

Response 1:

We then present a follow-up immediate and query.

Immediate 2:

Response 2:

The next is a ultimate immediate and query.

Immediate 3:

Response 3 (ultimate output):

To get began with hands-on examples of immediate chaining, discuss with the GitHub repo.

Immediate catalogs

A immediate catalog, also called a immediate library, is a group of prewritten prompts and immediate templates that you should use as a place to begin for numerous pure language processing (NLP) duties, reminiscent of textual content technology, query answering, or information evaluation. Through the use of a immediate catalog, it can save you effort and time crafting prompts from scratch and as a substitute concentrate on fine-tuning or adapting the present prompts to your particular use circumstances. This strategy additionally assists with consistency and re-usability, because the template may be shared throughout groups inside a corporation.

Prompt Management for Amazon Bedrock consists of a immediate builder, a immediate library (catalog), versioning, and testing strategies for immediate templates. For extra data on learn how to orchestrate the immediate move through the use of Immediate Administration for Amazon Bedrock, discuss with Advanced prompts in Amazon Bedrock.

The next instance makes use of a immediate template to construction the FM response.

Immediate template:

Pattern immediate:

Mannequin response:

For additional examples of prompting templates, discuss with the next assets:

Immediate misuses

When constructing and designing a generative AI software, it’s essential to grasp FM vulnerabilities concerning immediate engineering. This part covers a few of the commonest varieties of immediate misuses so you’ll be able to undertake safety within the design from the start.

FMs out there by way of Amazon Bedrock already present built-in protections to forestall the technology of dangerous responses. Nevertheless, it’s finest apply so as to add extra, customized immediate safety measures, reminiscent of with Guardrails for Amazon Bedrock. Discuss with the immediate protection strategies part on this publish to be taught extra about dealing with these use circumstances.

Immediate injection

Immediate injection assaults contain injecting malicious or unintended prompts into the system, probably resulting in the technology of dangerous, biased, or unauthorized outputs from the FM. On this case, an unauthorized consumer crafts a immediate to trick the FM into operating unintended actions or revealing delicate data. For instance, an unauthorized consumer may inject a immediate that instructs the FM to disregard or bypass safety filters reminiscent of XML tags, permitting the technology of offensive or unlawful content material. For examples, discuss with Hugging Face prompt-injections.

The next is an instance attacker immediate:

Immediate leaking

Immediate leaking may be thought of a type of immediate injection. Immediate leaking happens when an unauthorized consumer goals to leak the main points or directions from the unique immediate. This assault can expose behind-the-scenes immediate information or directions within the response again to the consumer. For instance:

Jailbreaking

Jailbreaking, within the context of immediate engineering safety, refers to an unauthorized consumer making an attempt to bypass the moral and security constraints imposed on the FM. This could lead it to generate unintended responses. For instance:

Alternating languages and particular characters

Alternating languages within the enter immediate will increase the possibility of complicated the FM with conflicting directions or bypassing sure FM guardrails (see extra on FM guardrails within the immediate protection strategies part). This additionally applies to the usage of particular characters in a immediate, reminiscent of , +, → or !—, which is an try to get the FM to neglect its authentic directions.

The next is an instance of a immediate misuse. The textual content within the brackets represents a language aside from English:

For extra data on immediate misuses, discuss with Common prompt injection attacks.

Immediate protection strategies

This part discusses learn how to assist stop these misuses of FM responses by placing safety mechanisms in place.

Guardrails for Amazon Bedrock

FM guardrails assist to uphold information privateness and supply secure and dependable mannequin outputs by stopping the technology of dangerous or biased content material. Guardrails for Amazon Bedrock evaluates consumer inputs and FM responses based mostly on use case–particular insurance policies and gives a further layer of safeguards whatever the underlying FM. You may apply guardrails throughout FMs on Amazon Bedrock, together with fine-tuned fashions. This extra layer of safety detects dangerous directions in an incoming immediate and catches it earlier than the occasion reaches the FM. You may customise your guardrails based mostly in your inner AI insurance policies.

For examples of the variations between responses with or with out guardrails in place, refer this Comparison table. For extra data, see How Guardrails for Amazon Bedrock works.

Use distinctive delimiters to wrap immediate directions

As highlighted in a few of the examples, immediate engineering strategies can use delimiters (reminiscent of XML tags) of their template. Some immediate injection assaults attempt to make the most of this construction by wrapping malicious directions in frequent delimiters, main the mannequin to imagine that the instruction was a part of its authentic template. Through the use of a novel delimiter worth (for instance, <tagname-abcde12345>), you may make positive the FM will solely contemplate directions which can be inside these tags. For extra data, discuss with Best practices to avoid prompt injection attacks.

Detect threats by offering particular directions

You can even embody directions that designate frequent menace patterns to show the FM learn how to detect malicious occasions. The directions concentrate on the consumer enter question. They instruct the FM to establish the presence of key menace patterns and return “Immediate Assault Detected” if it discovers a sample. These directions function a shortcut for the FM to cope with frequent threats. This shortcut is generally related when the template makes use of delimiters, such because the <pondering></pondering> and <reply></reply> tags.

For extra data, see Prompt engineering best practices to avoid prompt injection attacks on modern LLMs.

Immediate engineering finest practices

On this part, we summarize immediate engineering finest practices.

Clearly outline prompts utilizing COSTAR framework

Craft prompts in a means that leaves minimal room for misinterpretation through the use of the mentioned COSTAR framework. It’s essential to explicitly state the kind of response anticipated, reminiscent of a abstract, evaluation, or checklist. For instance, in case you ask for a novel abstract, it’s essential to clearly point out that you really want a concise overview of the plot, characters, and themes reasonably than an in depth evaluation.

Enough immediate context

Ensure that there’s adequate context inside the immediate and, if potential, embody an instance output response (few-shot approach) to information the FM towards the specified format and construction. As an example, if you would like an inventory of the most well-liked films from the Nineties offered in a desk format, it’s essential to explicitly state the variety of films to checklist and specify that the output needs to be in a desk. This degree of element helps the FM perceive and meet your expectations.

Stability simplicity and complexity

Keep in mind that immediate engineering is an artwork and a science. It’s essential to steadiness simplicity and complexity in your prompts to keep away from obscure, unrelated, or surprising responses. Overly easy prompts could lack the required context, whereas excessively complicated prompts can confuse the FM. That is notably essential when coping with complicated subjects or domain-specific language which may be much less acquainted to the LM. Use plain language and delimiters (reminiscent of XML tags in case your FM helps them) and break down complicated subjects utilizing the strategies mentioned to reinforce FM understanding.

Iterative experimentation

Immediate engineering is an iterative course of that requires experimentation and refinement. You might have to strive a number of prompts or totally different FMs to optimize for accuracy and relevance. Repeatedly check, analyze, and refine your prompts, lowering their dimension or complexity as wanted. You can even experiment with adjusting the FM temperature setting. There aren’t any mounted guidelines for the way FMs generate output, so flexibility and adaptableness are important for attaining the specified outcomes.

Immediate size

Fashions are higher at utilizing data that happens on the very starting or finish of its immediate context. Efficiency can degrade when fashions should entry and use data situated in the course of its immediate context. If the immediate enter may be very giant or complicated, it needs to be damaged down utilizing the mentioned strategies. For extra particulars, discuss with Lost in the Middle: How Language Models Use Long Contexts.

Tying all of it collectively

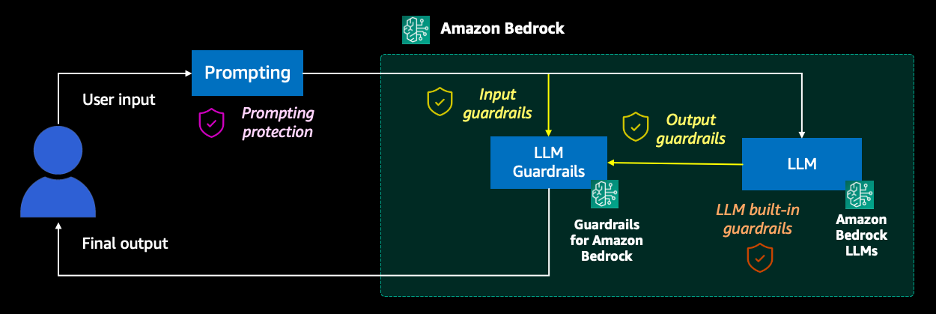

Let’s carry the general strategies we’ve mentioned collectively right into a high-level structure to showcase a full end-to-end prompting workflow. The general workflow could look just like the next diagram.

The workflow consists of the next steps:

- Prompting – The consumer decides which immediate engineering strategies they need to undertake. They then ship the immediate request to the generative AI software and look forward to a response. A immediate catalog can be used throughout this step.

- Enter guardrails (Amazon Bedrock) – A guardrail combines a single coverage or a number of insurance policies configured for prompts, together with content material filters, denied subjects, delicate data filters, and phrase filters. The immediate enter is evaluated in opposition to the configured insurance policies specified within the guardrail. If the enter analysis ends in a guardrail intervention, a configured blocked message response is returned, and the FM inference is discarded.

- FM and LLM built-in guardrails – Most fashionable FM suppliers are educated with safety protocols and have built-in guardrails to forestall inappropriate use. It’s best apply to additionally create and set up a further safety layer utilizing Guardrails for Amazon Bedrock.

- Output guardrails (Amazon Bedrock) – If the response ends in a guardrail intervention or violation, it is going to be overridden with preconfigured blocked messaging or masking of the delicate data. If the response’s analysis succeeds, the response is returned to the applying with out modifications.

- Ultimate output – The response is returned to the consumer.

Cleanup

Working the lab within the GitHub repo referenced within the conclusion is topic to Amazon Bedrock inference prices. For extra details about pricing, see Amazon Bedrock Pricing.

Conclusion

Able to get hands-on with these prompting strategies? As a subsequent step, discuss with our GitHub repo. This workshop accommodates examples of the prompting strategies mentioned on this publish utilizing FMs in Amazon Bedrock in addition to deep-dive explanations.

We encourage you to implement the mentioned prompting strategies and finest practices when growing a generative AI software. For extra details about superior prompting strategies, see Prompt engineering guidelines.

Glad prompting!

Concerning the Authors

Jonah Craig is a Startup Options Architect based mostly in Dublin, Eire. He works with startup clients throughout the UK and Eire and focuses on growing AI and machine studying (AI/ML) and generative AI options. Jonah has a grasp’s diploma in pc science and frequently speaks on stage at AWS conferences, such because the annual AWS London Summit and the AWS Dublin Cloud Day. In his spare time, he enjoys creating music and releasing it on Spotify.

Manish Chugh is a Principal Options Architect at AWS based mostly in San Francisco, CA. He makes a speciality of machine studying and generative AI. He works with organizations starting from giant enterprises to early-stage startups on issues associated to machine studying. His position entails serving to these organizations architect scalable, safe, and cost-effective machine studying workloads on AWS. He frequently presents at AWS conferences and different accomplice occasions. Exterior of labor, he enjoys mountaineering on East Bay trails, street biking, and watching (and taking part in) cricket.

Doron Bleiberg is a Senior Startup Options Architect at AWS, based mostly in Tel Aviv, Israel. In his position, Doron gives FinTech startups with technical steering and help utilizing AWS Cloud companies. With the appearance of generative AI, Doron has helped quite a few startups construct and deploy generative AI workloads within the AWS Cloud, reminiscent of monetary chat assistants, automated help brokers, and customized advice techniques.