Amazon Researchers Propose a New Method to Measure the Task-Specific Accuracy of Retrieval-Augmented Large Language Models (RAG)

Large Language Models (LLMs) have become extremely popular in recent years. However, evaluating LLMs across a wide range of tasks can be very difficult. Public benchmarks do not always reflect an LLM's general abilities accurately, especially for highly specialized client tasks that require domain-specific knowledge. Different evaluation metrics capture different aspects of an LLM's performance, but no single metric is sufficient to capture all of them.

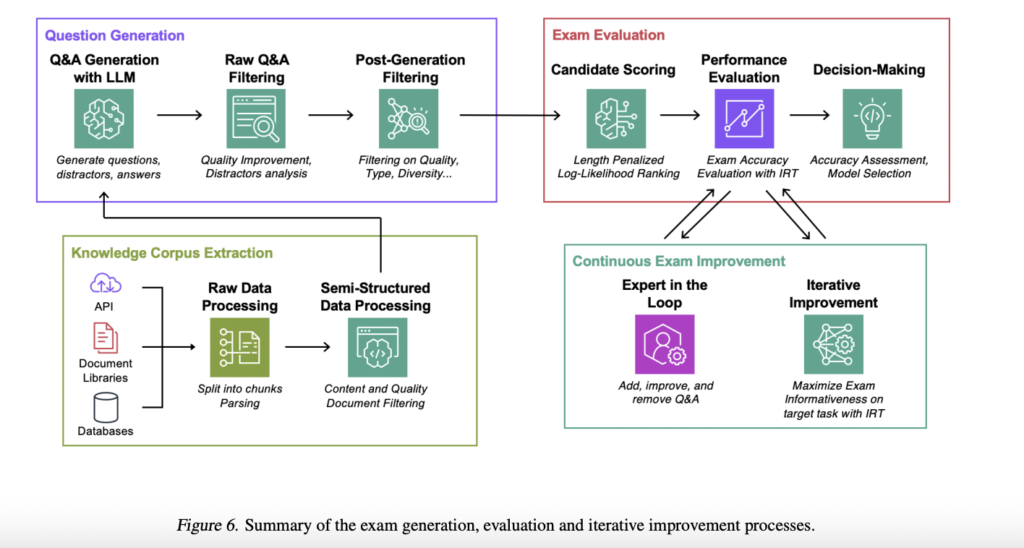

To assess the correctness of Retrieval-Augmented Generation (RAG) systems on specific tasks, a team of researchers from Amazon has proposed an exam-based evaluation approach powered by LLMs. The procedure is fully automated and does not require a pre-annotated ground-truth dataset. The measurements focus on factual accuracy: the system's ability to retrieve and use the correct information in order to answer a user's query precisely. Besides helping users choose the best combination of components for their RAG systems, the method gives them insight into the factors that influence RAG performance, including model size, retrieval mechanism, prompting technique, and fine-tuning procedure.

The team has introduced a fully automated, quantitative, exam-based evaluation method that can be scaled up or down. This contrasts with typical human-in-the-loop evaluations, which can be costly because they require an expert or annotator. In this method, an LLM generates exams from the corpus of documents associated with the task at hand. The candidate RAG systems are then assessed on how well they answer the multiple-choice questions in these exams.
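As a rough illustration of the scoring step, here is a minimal sketch that assumes each candidate RAG pipeline can be wrapped as a function that returns the index of the choice it selects. `ExamQuestion`, `RagSystem`, `exam_accuracy`, and `rank_systems` are hypothetical names rather than the authors' code, and the LLM-driven exam generation itself is not shown.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple


@dataclass(frozen=True)
class ExamQuestion:
    """One multiple-choice item (hypothetical structure); in the described
    method an LLM would generate these from the task's document corpus."""
    question: str
    choices: Sequence[str]
    answer_index: int  # position of the correct choice in `choices`


# Hypothetical interface: a candidate RAG pipeline receives a question and its
# choices and returns the index of the choice it selects.
RagSystem = Callable[[str, Sequence[str]], int]


def exam_accuracy(system: RagSystem, exam: List[ExamQuestion]) -> float:
    """Fraction of exam questions the system answers correctly."""
    if not exam:
        raise ValueError("exam must contain at least one question")
    correct = sum(system(q.question, q.choices) == q.answer_index for q in exam)
    return correct / len(exam)


def rank_systems(systems: Dict[str, RagSystem],
                 exam: List[ExamQuestion]) -> List[Tuple[str, float]]:
    """Score each candidate configuration on the same exam, best first."""
    scores = [(name, exam_accuracy(fn, exam)) for name, fn in systems.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Toy exam and trivial stand-in "systems"; real candidates would differ in
    # model size, retriever, prompting technique, or fine-tuning.
    exam = [
        ExamQuestion("Q1", ["a", "b", "c"], 2),
        ExamQuestion("Q2", ["a", "b"], 0),
        ExamQuestion("Q3", ["a", "b", "c", "d"], 3),
    ]
    candidates = {
        "always_first": lambda question, choices: 0,
        "always_last": lambda question, choices: len(choices) - 1,
    }
    for name, score in rank_systems(candidates, exam):
        print(f"{name}: {score:.2f}")
```

Because every candidate is scored against the same exam, the resulting accuracies are directly comparable, which is what makes the exam usable for picking a component combination.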

This approach evaluates factual knowledge effectively and consistently by balancing how representative the evaluation is against how simple it is to score. Analyzing exam results reveals where a system needs to improve, and the same analysis supports ongoing, feedback-driven improvements to the exam corpus.

The team has also introduced a methodological improvement to the automated exam-generation process. Specifically, the generated exams are optimized using Item Response Theory (IRT) to make them more informative about task-specific model performance. The team demonstrated and evaluated the method on open-ended question-answering tasks over four distinct knowledge corpora: AWS DevOps troubleshooting manuals, arXiv abstracts, StackExchange questions, and SEC filings. This wide range of topics demonstrates the adaptability and effectiveness of the evaluation process.
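To make the IRT idea concrete, the sketch below shows one plausible form of an "informativeness" pass over an exam. It assumes a standard three-parameter logistic (3PL) model whose item parameters (discrimination `a`, difficulty `b`, guessing floor `c`) have already been estimated from the candidate systems' answers; the fitting step is not shown. It then keeps the questions with the highest Fisher information over the ability range of interest. `Item`, `p_correct`, `item_information`, and `prune_exam` are hypothetical names, and this is not presented as the authors' exact procedure.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Item:
    """Assumed 3PL parameters for one exam question: discrimination `a`,
    difficulty `b`, and guessing floor `c` (0 <= c < 1)."""
    name: str
    a: float
    b: float
    c: float


def p_correct(item: Item, theta: float) -> float:
    """3PL probability that a system with ability `theta` answers correctly."""
    return item.c + (1.0 - item.c) / (1.0 + math.exp(-item.a * (theta - item.b)))


def item_information(item: Item, theta: float) -> float:
    """Fisher information of a 3PL item at ability `theta`."""
    p = p_correct(item, theta)
    return item.a ** 2 * ((p - item.c) / (1.0 - item.c)) ** 2 * (1.0 - p) / p


def prune_exam(items: List[Item], thetas: Sequence[float],
               keep_fraction: float) -> List[Item]:
    """Keep the questions with the highest mean information over the ability
    levels of interest (e.g. the abilities of the candidate RAG systems)."""
    def mean_info(item: Item) -> float:
        return sum(item_information(item, t) for t in thetas) / len(thetas)

    k = max(1, round(keep_fraction * len(items)))
    return sorted(items, key=mean_info, reverse=True)[:k]


if __name__ == "__main__":
    items = [
        Item("sharp", a=2.0, b=0.0, c=0.25),      # discriminates well near theta = 0
        Item("flat", a=0.2, b=0.0, c=0.25),       # barely separates strong from weak systems
        Item("too_easy", a=1.5, b=-4.0, c=0.25),  # nearly every system in range answers it
    ]
    kept = prune_exam(items, thetas=[-1.0, 0.0, 1.0], keep_fraction=1 / 3)
    print([item.name for item in kept])
```

In an iterative setting, one would presumably refit the IRT model after pruning (or after generating replacement questions) and repeat; this sketch shows only a single selection pass.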

The team has summarized its main contributions as follows.

- A comprehensive approach to the automated evaluation of Retrieval-Augmented Generation (RAG) LLM pipelines has been introduced. The methodology relies on synthetic exams that are task-specific and tailored to the unique requirements of each task.

- Item Response Theory (IRT) has been used to create reliable and interpretable evaluation metrics. These metrics help quantify and explain the factors that affect model effectiveness, giving a deeper understanding of model performance.

- A systematic, fully automated approach to exam generation has been proposed. It uses an iterative refinement process to maximize the informativeness of the exams, so that they measure the model's capabilities accurately.

- By creating four distinct tasks, the team has provided benchmark datasets for evaluating RAG systems. Because these tasks are built on publicly available datasets from various domains, they offer a broad range of evaluation scenarios.
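One standard way IRT turns raw right/wrong answers into an interpretable score is to estimate each system's latent ability θ by maximum likelihood. The sketch below assumes a 3PL model whose item parameters are already known and uses a plain grid search for clarity; it is an illustration of the general technique, not necessarily the estimator the authors use. `p_correct`, `log_likelihood`, and `estimate_ability` are hypothetical names, and the example answer patterns are made up.

```python
import math
from typing import List, Sequence, Tuple

# Assumed, already-fitted 3PL parameters (a, b, c) for each exam question.
Item = Tuple[float, float, float]


def p_correct(item: Item, theta: float) -> float:
    """3PL probability of a correct answer at ability `theta`."""
    a, b, c = item
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def log_likelihood(items: Sequence[Item], responses: Sequence[bool],
                   theta: float) -> float:
    """Log-likelihood of one system's right/wrong answer pattern."""
    total = 0.0
    for item, correct in zip(items, responses):
        p = p_correct(item, theta)
        total += math.log(p) if correct else math.log(1.0 - p)
    return total


def estimate_ability(items: Sequence[Item], responses: Sequence[bool],
                     lo: float = -4.0, hi: float = 4.0,
                     steps: int = 801) -> float:
    """Maximum-likelihood ability estimate via a simple grid search on [lo, hi]."""
    grid = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda theta: log_likelihood(items, responses, theta))


if __name__ == "__main__":
    exam: List[Item] = [(1.0, -1.0, 0.25), (1.2, 0.0, 0.25), (1.5, 1.0, 0.25)]
    # Hypothetical answer patterns from two candidate RAG configurations.
    print(estimate_ability(exam, [True, True, False]))
    print(estimate_ability(exam, [True, False, False]))
```

Unlike raw accuracy, θ places systems on the same scale as item difficulty `b`, which is what makes it easier to explain why one configuration outperforms another. Note that a perfect or all-wrong answer pattern pushes the estimate to the edge of the search range.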

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with strong analytical and critical thinking skills, along with a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.