Video auto-dubbing using Amazon Translate, Amazon Bedrock, and Amazon Polly

This post is co-written with MagellanTV and Mission Cloud.

Video dubbing, or content localization, is the process of replacing the original spoken language in a video with another language while synchronizing audio and video. Video dubbing has emerged as a key tool in breaking down linguistic barriers, enhancing viewer engagement, and expanding market reach. However, traditional dubbing methods are costly (about $20 per minute with human review effort) and time consuming, making them a common challenge for companies in the Media & Entertainment (M&E) industry. Video auto-dubbing that uses the power of generative artificial intelligence (generative AI) offers creators an affordable and efficient solution.

This post shows you a cost-saving solution for video auto-dubbing. We use Amazon Translate for initial translation of video captions and use Amazon Bedrock for post-editing to further improve the translation quality. Amazon Translate is a neural machine translation service that delivers fast, high-quality, and affordable language translation.

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to help you build generative AI applications with security, privacy, and responsible AI.

MagellanTV, a leading streaming platform for documentaries, wants to broaden its global presence through content internationalization. Faced with manual dubbing challenges and prohibitive costs, MagellanTV sought out AWS Premier Tier Partner Mission Cloud for an innovative solution.

Mission Cloud’s solution distinguishes itself with idiomatic detection and automatic replacement, seamless automatic time scaling, and flexible batch processing capabilities with increased efficiency and scalability.

Solution overview

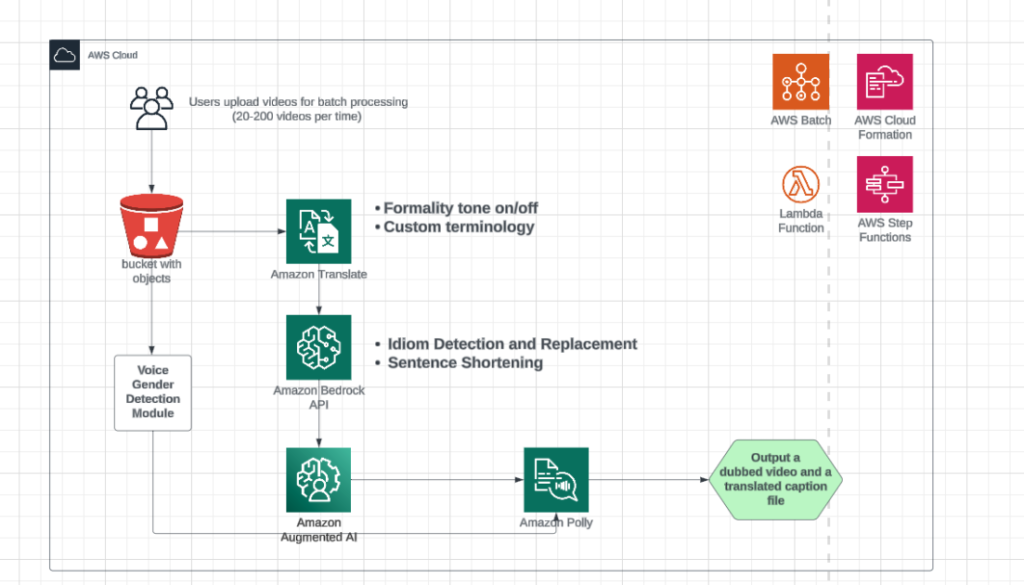

The diagram above illustrates the solution architecture. The inputs of the solution are specified by the user, including the folder path containing the original video and caption file, the target language, and toggles for the idiom detector and formal tone. You can specify these inputs in an Excel template and upload the Excel file to a designated Amazon Simple Storage Service (Amazon S3) bucket. This launches the whole pipeline. The final outputs are a dubbed video file and a translated caption file.
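The post does not describe how the upload launches the pipeline. One common pattern, shown here only as a sketch, is an S3 event notification that invokes a Lambda function, which in turn starts the Step Functions execution. The function names, the payload keys `input_bucket` and `input_key`, and the state machine ARN are all hypothetical. The client is passed in so the logic can be exercised without AWS credentials.

```python
import json
from urllib.parse import unquote_plus


def extract_uploaded_object(event):
    """Return (bucket, key) for the first record of an S3 event notification."""
    record = event["Records"][0]["s3"]
    # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])


def start_pipeline(sfn_client, state_machine_arn, event):
    """Start one pipeline run for the uploaded inputs file (assumed design)."""
    bucket, key = extract_uploaded_object(event)
    # Hypothetical payload shape; the real pipeline's input schema is not shown.
    payload = {"input_bucket": bucket, "input_key": key}
    response = sfn_client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(payload),
    )
    return response["executionArn"]
```

In a Lambda handler, `sfn_client` would typically be `boto3.client("stepfunctions")`, created once outside the handler function.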

We use Amazon Translate to translate the video caption, and Amazon Bedrock to enhance the translation quality and enable automatic time scaling to synchronize audio and video. We use Amazon Augmented AI for editors to review the content, which is then sent to Amazon Polly to generate synthetic voices for the video. To assign a gender expression that matches the speaker, we developed a model to predict the gender expression of the speaker.
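To make the last step concrete, here is a minimal sketch of how a predicted gender expression could select an Amazon Polly voice. The voice IDs are existing Polly voices, but the mapping itself, the labels `"female"` and `"male"`, and the helper names are assumptions. The post does not say which voices or engine the pipeline uses.

```python
# Hypothetical lookup table: (language code, predicted gender expression) -> voice.
VOICES = {
    ("de-DE", "female"): "Vicki",
    ("de-DE", "male"): "Daniel",
    ("es-ES", "female"): "Lucia",
    ("es-ES", "male"): "Sergio",
}


def build_polly_request(text, language_code, gender_expression):
    """Build keyword arguments for Polly's synthesize_speech call."""
    return {
        "Text": text,
        "OutputFormat": "mp3",
        "VoiceId": VOICES[(language_code, gender_expression)],
        "LanguageCode": language_code,
        "Engine": "neural",  # assumed; these four voices support the neural engine
    }


def synthesize(polly_client, text, language_code, gender_expression):
    """Return the synthesized audio bytes for one caption."""
    request = build_polly_request(text, language_code, gender_expression)
    response = polly_client.synthesize_speech(**request)
    return response["AudioStream"].read()
```

Here `polly_client` stands for `boto3.client("polly")`. Passing it in keeps the voice selection logic testable on its own.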

In the backend, AWS Step Functions orchestrates the preceding steps as a pipeline. Each step is run on AWS Lambda or AWS Batch. By using the infrastructure as code (IaC) tool, AWS CloudFormation, the pipeline becomes reusable for dubbing new foreign languages.
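As an illustration of that orchestration, the following sketch builds an Amazon States Language definition as a Python dictionary. The state names, function names, step ordering, and the `$.idiom_detector` input field are hypothetical. For brevity, every step is a Lambda task and the human review step is omitted, whereas the pipeline described here also uses AWS Batch and Amazon Augmented AI.

```python
import json


def task_state(resource_arn, next_state=None):
    """Return a Task state that chains to next_state, or ends the run if None."""
    state = {"Type": "Task", "Resource": resource_arn}
    if next_state is None:
        state["End"] = True
    else:
        state["Next"] = next_state
    return state


def build_definition(arn_prefix):
    """Build a state machine definition; arn_prefix is e.g. 'arn:aws:lambda:REGION:ACCOUNT'."""

    def arn(function_name):
        return f"{arn_prefix}:function:{function_name}"

    return {
        "Comment": "Sketch of an auto-dubbing pipeline (hypothetical state names)",
        "StartAt": "CheckIdiomToggle",
        "States": {
            # Skip idiom replacement when the user turned the toggle off.
            "CheckIdiomToggle": {
                "Type": "Choice",
                "Choices": [
                    {
                        "Variable": "$.idiom_detector",
                        "BooleanEquals": True,
                        "Next": "ReplaceIdioms",
                    }
                ],
                "Default": "TranslateCaptions",
            },
            "ReplaceIdioms": task_state(arn("replace-idioms"), "TranslateCaptions"),
            "TranslateCaptions": task_state(arn("translate-captions"), "ShortenSentences"),
            "ShortenSentences": task_state(arn("shorten-sentences"), "SynthesizeSpeech"),
            "SynthesizeSpeech": task_state(arn("synthesize-speech"), "ComposeVideo"),
            "ComposeVideo": task_state(arn("compose-video")),
        },
    }
```

Serializing the result with `json.dumps` gives a definition string that could be supplied to a state machine resource in a CloudFormation template.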

In the following sections, you’ll learn how to use the unique features of Amazon Translate for setting formality tone and for custom terminology. You will also learn how to use Amazon Bedrock to further improve the quality of video dubbing.

Why choose Amazon Translate?

We chose Amazon Translate to translate video captions based on three factors.

- Amazon Translate supports over 75 languages. While the landscape of large language models (LLMs) has continuously evolved in the past year and continues to change, many of the trending LLMs support a smaller set of languages.

- Our translation professional rigorously evaluated Amazon Translate in our review process and affirmed its commendable translation accuracy. Welocalize benchmarks the performance of using LLMs and machine translations and recommends using LLMs as a post-editing tool.

- Amazon Translate has various unique benefits. For example, you can add custom terminology glossaries, whereas for LLMs, you might need fine-tuning that can be labor-intensive and costly.

Use Amazon Translate for custom terminology

Amazon Translate allows you to input a custom terminology dictionary, ensuring translations reflect the organization’s vocabulary or specialized terminology. We use the custom terminology dictionary to compile frequently used terms within video transcription scripts.

Here’s an example. In a documentary video, the caption file would typically display “(speaking in foreign language)” on the screen as the caption when the interviewee speaks in a foreign language. The sentence “(speaking in foreign language)” itself doesn’t have proper English grammar: it lacks the proper noun, yet it’s commonly accepted as an English caption display. When translating the caption into German, the translation also lacks the proper noun, which can be confusing to German audiences.

Because this phrase “(speaking in foreign language)” is commonly seen in video transcripts, we added this term to the custom terminology CSV file translation_custom_terminology_de.csv with the vetted translation and provided it in the Amazon Translate job. With the custom terminology applied, the translation output is as intended.
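A minimal sketch of that workflow with the Amazon Translate API follows. The helper names and the terminology name are hypothetical, and the vetted German translation is not reproduced in this post, so the caller supplies it. The sketch uses the real-time `translate_text` call for brevity, although a batch translation job accepts terminology names in a similar way.

```python
import csv
import io


def build_terminology_csv(source_lang, target_lang, entries):
    """Build CSV terminology bytes: a language-code header row, then term pairs."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([source_lang, target_lang])
    for source_term, target_term in entries:
        writer.writerow([source_term, target_term])
    return buffer.getvalue().encode("utf-8")


def import_terminology(translate_client, name, csv_bytes):
    """Upload (or overwrite) a custom terminology under the given name."""
    translate_client.import_terminology(
        Name=name,
        MergeStrategy="OVERWRITE",
        TerminologyData={"File": csv_bytes, "Format": "CSV"},
    )


def translate_with_terminology(translate_client, text, terminology_name,
                               source_lang="en", target_lang="de"):
    """Translate text while applying the named custom terminology."""
    response = translate_client.translate_text(
        Text=text,
        SourceLanguageCode=source_lang,
        TargetLanguageCode=target_lang,
        TerminologyNames=[terminology_name],
    )
    return response["TranslatedText"]
```

Here `translate_client` stands for `boto3.client("translate")`, and the bytes from `build_terminology_csv` are what a file such as translation_custom_terminology_de.csv would contain.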

Set formality tone in Amazon Translate

Some documentary genres tend to be more formal than others. Amazon Translate allows you to define the desired level of formality for translations to supported target languages. By using the default setting (Informal) of Amazon Translate, the translation output in German for the phrase, “[Speaker 1] Let me show you something,” is informal, according to a professional translator.

By adding the Formal setting, the output translation has a formal tone, which fits the documentary’s genre as intended.
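In the Amazon Translate API, formality is requested through the `Settings` parameter of `translate_text`. The sketch below shows how a pipeline toggle could map onto that parameter. The helper names and the boolean `formal_tone` flag are assumptions, and the setting is left out when the toggle is off so that the service’s default behavior applies.

```python
def build_translate_kwargs(text, target_language, formal_tone, source_language="en"):
    """Build translate_text arguments, adding the formality setting on request."""
    kwargs = {
        "Text": text,
        "SourceLanguageCode": source_language,
        "TargetLanguageCode": target_language,
    }
    if formal_tone:
        # Formality only takes effect for target languages that support it.
        kwargs["Settings"] = {"Formality": "FORMAL"}
    return kwargs


def translate_caption(translate_client, text, target_language, formal_tone):
    """Translate one caption, optionally requesting a formal tone."""
    kwargs = build_translate_kwargs(text, target_language, formal_tone)
    return translate_client.translate_text(**kwargs)["TranslatedText"]
```

For example, `translate_caption(client, "[Speaker 1] Let me show you something", "de", formal_tone=True)` would send the request with `Settings={"Formality": "FORMAL"}`.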

Use Amazon Bedrock for post-editing

In this section, we use Amazon Bedrock to improve the quality of video captions after we obtain the initial translation from Amazon Translate.

Idiom detection and replacement

Idiom detection and replacement is vital in dubbing English videos to accurately convey cultural nuances. Adapting idioms prevents misunderstandings, enhances engagement, preserves humor and emotion, and ultimately improves the global viewing experience. Hence, we developed an idiom detection function using Amazon Bedrock to resolve this issue.

You can turn the idiom detector on or off by specifying the inputs to the pipeline. For example, for science genres that have fewer idioms, you can turn the idiom detector off. For genres that have more casual conversations, you can turn the idiom detector on. For a 25-minute video, the total processing time is about 1.5 hours, of which about 1 hour is spent on video preprocessing and video composing. Turning the idiom detector on only adds about 5 minutes to the total processing time.

We have developed a function bedrock_api_idiom to detect and replace idioms using Amazon Bedrock. The function first uses Amazon Bedrock LLMs to detect idioms in the text and then replaces them. For example, Amazon Bedrock successfully detects the idiom in the input text “well, I hustle” and replaces it with “I work hard,” which can be translated correctly into Spanish by using Amazon Translate.
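The implementation of bedrock_api_idiom is not shown in this post. As a rough illustration of the idea only, the following sketch uses the Amazon Bedrock Converse API with a single prompt that asks the model to rewrite idioms in plain wording. The prompt text, the function name `replace_idioms_sketch`, and the inference settings are assumptions, and the model ID is left to the caller.

```python
# Hypothetical prompt; the wording used by the actual pipeline is not published here.
PROMPT_TEMPLATE = (
    "Rewrite the following English caption so that any idioms are replaced "
    "with plain, literal wording. Keep the meaning and keep it brief. "
    "Return only the rewritten caption.\n\nCaption: {caption}"
)


def replace_idioms_sketch(bedrock_runtime, model_id, caption):
    """Ask a Bedrock model to replace idioms in one caption (single-call sketch)."""
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[
            {
                "role": "user",
                "content": [{"text": PROMPT_TEMPLATE.format(caption=caption)}],
            }
        ],
        # Low temperature to keep rewrites stable between runs (assumed choice).
        inferenceConfig={"maxTokens": 200, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"].strip()
```

Here `bedrock_runtime` stands for `boto3.client("bedrock-runtime")`. A two-step variant that first detects idioms and then replaces them, as described above, would issue two such calls with different prompts.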

Sentence shortening

Third-party video dubbing tools can be used for time-scaling during video dubbing, which can be costly if done manually. In our pipeline, we used Amazon Bedrock to develop a sentence shortening algorithm for automatic time scaling.

For example, a typical caption file consists of a section number, timestamp, and the sentence. The following is an example of an English sentence before shortening.

Original sentence:

A large portion of the solar energy that reaches our planet is reflected back into space or absorbed by dust and clouds.

Here’s the shortened sentence produced by the sentence shortening algorithm. Using Amazon Bedrock, we can significantly improve the video-dubbing performance and reduce the human review effort, resulting in cost savings.

Shortened sentence:

A large part of solar energy is reflected into space or absorbed by dust and clouds.
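The criterion the pipeline uses to decide when a sentence must be shortened is not described here. One plausible approach, sketched below under stated assumptions, parses an SRT-style caption block (section number, timestamp, sentence), estimates how many words fit in the time slot at an assumed speaking rate, and builds a shortening prompt only when the sentence exceeds that budget. The speaking rate, the helper names, and the prompt wording are all hypothetical.

```python
from dataclasses import dataclass

WORDS_PER_SECOND = 2.5  # assumed average speaking rate, not a measured value


@dataclass
class Cue:
    index: int
    start: float  # seconds
    end: float    # seconds
    text: str

    @property
    def duration(self):
        return self.end - self.start


def to_seconds(timestamp):
    """Convert an 'HH:MM:SS,mmm' timestamp to seconds."""
    hours, minutes, rest = timestamp.split(":")
    seconds, millis = rest.split(",")
    return int(hours) * 3600 + int(minutes) * 60 + int(seconds) + int(millis) / 1000


def parse_cue(block):
    """Parse one caption block: section number, timestamp line, then text lines."""
    lines = block.strip().splitlines()
    start, end = (to_seconds(part.strip()) for part in lines[1].split("-->"))
    return Cue(int(lines[0]), start, end, " ".join(lines[2:]))


def word_budget(cue):
    """Number of words that fit in the cue's time slot at the assumed rate."""
    return int(cue.duration * WORDS_PER_SECOND)


def needs_shortening(cue):
    return len(cue.text.split()) > word_budget(cue)


def build_shortening_prompt(cue):
    """Build the prompt that would be sent to an Amazon Bedrock model."""
    return (
        f"Shorten this caption to at most {word_budget(cue)} words while "
        f"keeping its meaning. Return only the shortened caption.\n\n"
        f"Caption: {cue.text}"
    )
```

With this assumed rate, a made-up 7-second slot allows 17 words, so the 22-word original sentence above would be flagged for shortening, while the 16-word shortened sentence would fit.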

Conclusion

This new and constantly developing pipeline has been a revolutionary step for MagellanTV because it efficiently resolved some challenges they were facing that are common within Media & Entertainment companies in general. The unique localization pipeline developed by Mission Cloud creates a new frontier of opportunities to distribute content internationally while saving on costs. Using generative AI in tandem with smart solutions for idiom detection and resolution, sentence length shortening, and custom terminology and tone results in a truly special pipeline bespoke to MagellanTV’s growing needs and ambitions.

If you want to learn more about this use case or have a consultative session with the Mission team to review your specific generative AI use case, feel free to request one through AWS Marketplace.

About the Authors

Na Yu is a Lead GenAI Solutions Architect at Mission Cloud, specializing in developing ML, MLOps, and GenAI solutions in AWS Cloud and working closely with customers. She received her Ph.D. in Mechanical Engineering from the University of Notre Dame.

Max Goff is a data scientist/data engineer with over 30 years of software development experience. A published author, blogger, and music producer, he often dreams in A.I.

Marco Mercado is a Sr. Cloud Engineer specializing in developing cloud native solutions and automation. He holds multiple AWS Certifications and has extensive experience working with high-tier AWS partners. Marco excels at leveraging cloud technologies to drive innovation and efficiency in various projects.

Yaoqi Zhang is a Senior Big Data Engineer at Mission Cloud. She specializes in leveraging AI and ML to drive innovation and develop solutions on AWS. Before Mission Cloud, she worked as an ML and software engineer at Amazon for six years, specializing in recommender systems for Amazon fashion shopping and NLP for Alexa. She received her Master of Science Degree in Electrical Engineering from Boston University.

Adrian Martin is a Big Data/Machine Learning Lead Engineer at Mission Cloud. He has extensive experience in English/Spanish interpretation and translation.

Ryan Ries holds over 15 years of leadership experience in data and engineering, over 20 years of experience working with AI, and 5+ years helping customers build their AWS data infrastructure and AI models. After earning his Ph.D. in Biophysical Chemistry at UCLA and Caltech, Dr. Ries has helped develop cutting-edge data solutions for the U.S. Department of Defense and a myriad of Fortune 500 companies.

Andrew Federowicz is the IT and Product Lead Director for Magellan VoiceWorks at MagellanTV. With a decade of experience working in cloud systems and IT in addition to a degree in mechanical engineering, Andrew designs, builds, deploys, and scales creative solutions to unique problems. Before Magellan VoiceWorks, Andrew architected and built the AWS infrastructure for MagellanTV’s 24/7 globally available streaming app. In his free time, Andrew enjoys sim racing and horology.

Qiong Zhang, PhD, is a Sr. Partner Solutions Architect at AWS, specializing in AI/ML. Her current areas of interest include federated learning, distributed training, and generative AI. She holds 30+ patents and has co-authored 100+ journal/conference papers. She is also the recipient of the Best Paper Award at IEEE NetSoft 2016, IEEE ICC 2011, ONDM 2010, and IEEE GLOBECOM 2005.

Cristian Torres is a Sr. Partner Solutions Architect at AWS. He has 10 years of experience working in technology, performing multiple roles such as Support Engineer, Presales Engineer, Sales Specialist, and Solutions Architect. He works as a generalist with AWS services, focusing on migrations to help strategic AWS Partners develop successfully from a technical and business perspective.
