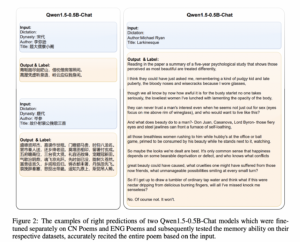

Reinforcement Studying, Half 5: Temporal-Distinction Studying | by Vyacheslav Efimov | Jul, 2024

Intelligently synergizing dynamic programming and Monte Carlo algorithms

Reinforcement studying is a website in machine studying that introduces the idea of an agent studying optimum methods in complicated environments. The agent learns from its actions, which lead to rewards, primarily based on the atmosphere’s state. Reinforcement studying is a difficult subject and differs considerably from different areas of machine studying.

What’s outstanding about reinforcement studying is that the identical algorithms can be utilized to allow the agent adapt to fully completely different, unknown, and sophisticated situations.

Observe. To completely perceive the ideas included on this article, it’s extremely beneficial to be accustomed to dynamic programming and Monte Carlo methods mentioned in earlier articles.

- In part 2, we explored the dynamic programming (DP) strategy, the place the agent iteratively updates V- / Q-functions and its coverage primarily based on earlier calculations, changing them with new estimates.

- In parts 3 and 4, we launched Monte Carlo (MC) strategies, the place the agent learns from expertise acquired by sampling episodes.

Temporal-difference (TD) studying algorithms, on which we are going to focus on this article, mix ideas from each of those apporaches:

- Just like DP, TD algorithms replace estimates primarily based on the data of earlier estimates. As seen partly 2, state updates could be carried out with out up to date values of different states, a method often called bootstrapping, which is a key function of DP.

- Just like MC, TD algorithms don’t require information of the atmosphere’s dynamics as a result of they study from expertise as properly.

This text is predicated on Chapter 6 of the e-book “Reinforcement Learning” written by Richard S. Sutton and Andrew G. Barto. I extremely admire the efforts of the authors who contributed to the publication of this e-book.

As we already know, Monte Carlo algorithms study from expertise by producing an episode and observing rewards for each visited state. State updates are carried out solely after the episode ends.

Temporal-difference algorithms function equally, with the one key distinction being that they don’t wait till the tip of episodes to replace states. As a substitute, the updates of each state are carried out after n time steps the state was visited (n is the algorithm’s parameter). Throughout these noticed n time steps, the algorithm calculates the obtained reward and makes use of that info to replace the beforehand visited state.

Temporal-difference algorithm performing state updates after n time steps is denoted as TD(n).

The best model of TD performs updates within the subsequent time step (n = 1), often called one-step TD.

On the finish of the previous part, we launched the constant-α MC algorithm. It seems that the pseudocode for one-step TD is sort of similar, apart from the state replace, as proven under:

Since TD strategies don’t wait till the tip of the episode and make updates utilizing present estimates, they’re stated to make use of bootstrapping, like DP algorithms.

The expression within the brackets within the replace method known as TD error:

On this equation, γ is the low cost issue which takes values between 0 and 1 and defines the significance weight of the present reward in comparison with future rewards.

TD error performs an necessary function. As we are going to see later, TD algorithms could be tailored primarily based on the type of TD error.

At first sight, it may appear unclear how utilizing info solely from the present transition reward and the state values of the present and subsequent states could be certainly useful for optimum technique search. It is going to be simpler to grasp if we check out an instance.

Allow us to think about a simplified model of the well-known “Copa America” soccer event, which frequently takes place in South America. In our model, in each Copa America event, our staff faces 6 opponents in the identical order. By means of the system isn’t actual, we are going to omit complicated particulars to raised perceive the instance.

We want to create an algorithm that may predict our staff’s whole aim distinction after a sequence of matches. The desk under reveals the staff’s outcomes obtained in a latest version of the Copa America.

To raised dive into the information, allow us to visualize the outcomes. The preliminary algorithm estimates are proven by the yellow line within the diagram under. The obtained cumulative aim distinction (final desk column) is depicted in black.

Roughly talking, our goal is to replace the yellow line in a manner that may higher adapt adjustments, primarily based on the latest match outcomes. For that, we are going to evaluate how constant-α Monte Carlo and one-step TD algorithms address this job.

Fixed-α Monte Carlo

The Monte Carlo technique calculates the cumulative reward G of the episode, which is in our case is the full aim distinction in any case matches (+3). Then, each state is up to date proportionally to the distinction between the full episode’s reward and the present state’s worth.

As an illustration, allow us to take the state after the third match towards Peru (we are going to use the educational price α = 0.5)

- The preliminary state’s worth is v = 1.2 (yellow level similar to Chile).

- The cumulative reward is G = 3 (black dashed line).

- The distinction between the 2 values G–v = 1.8 is then multiplied by α = 0.5 which provides the replace step equal to Δ = 0.9 (pink arrow similar to Chile).

- The brand new worth’s state turns into equal to v = v + Δ = 1.2 + 0.9 = 2.1 (pink level similar to Chile).

One-step TD

For the instance demonstration, we are going to take the full aim distinction after the fourth match towards Chile.

- The preliminary state’s worth is v[t] = 1.5 (yellow level similar to Chile).

- The subsequent state’s worth is v[t+1]= 2.1 (yellow level similar to Ecuador).

- The distinction between consecutive state values is v[t+1]–v[t] = 0.6 (yellow arrow similar to Chile).

- Since our staff gained towards Ecuador 5 : 0, then the transition reward from state t to t + 1 is R = 5 (black arrow similar to Ecuador).

- The TD error measures how a lot the obtained reward is larger compared to the state values’ distinction. In our case, TD error = R –(v[t+1]–v[t]) = 5–0.6 = 4.4 (pink clear arrow similar to Chile).

- The TD error is multiplied by the educational price a = 0.5 which results in the replace step β = 2.2 (pink arrow similar to Chile).

- The brand new state’s worth is v[t] = v[t] + β = 1.5 + 2.2 = 3.7 (pink level similar to Chile).

Comparability

Convergence

We will clearly see that the Monte Carlo algorithm pushes the preliminary estimates in the direction of the episode’s return. On the similar time, one-step TD makes use of bootstrapping and updates each estimate with respect to the subsequent state’s worth and its rapid reward which typically makes it faster to adapt to any adjustments.

As an illustration, allow us to take the state after the primary match. We all know that within the second match our staff misplaced to Argentina 0 : 3. Nevertheless, each algorithms react completely in a different way to this situation:

- Regardless of the unfavorable consequence, Monte Carlo solely considers the general aim distinction in any case matches and pushes the present state’s worth up which isn’t logical.

- One-step TD, however, takes into consideration the obtained consequence and instanly updates the state’s worth down.

This instance demonstrates that in the long run, one-step TD performs extra adaptive updates, resulting in the higher convergence price than Monte Carlo.

The speculation ensures convergence to the proper worth operate in TD strategies.

Replace

- Monte Carlo requires the episode to be ended to in the end make state updates.

- One step-TD permits updating the state instantly after receiving the motion’s reward.

In lots of circumstances, this replace side is a big benefit of TD strategies as a result of, in apply, episodes could be very lengthy. In that case, in Monte Carlo strategies, your entire studying course of is delayed till the tip of an episode. That’s the reason TD algorithms study quicker.

After overlaying the fundamentals of TD studying, we will now transfer on to concrete algorithm implementations. Within the following sections we are going to deal with the three hottest TD variations:

- Sarsa

- Q-learning

- Anticipated Sarsa

As we realized within the introduction to Monte Carlo strategies in part 3, to seek out an optimum technique, we have to estimate the state-action operate Q fairly than the worth operate V. To perform this successfully, we alter the issue formulation by treating state-action pairs as states themselves. Sarsa is an algorithm that opeates on this precept.

To carry out state updates, Sarsa makes use of the identical method as for one-step TD outlined above, however this time it replaces the variable with the Q-function values:

The Sarsa identify is derived by its replace rule which makes use of 5 variables within the order: (S[t], A[t], R[t + 1], S[t + 1], A[t + 1]).

Sarsa management operates equally to Monte Carlo management, updating the present coverage greedily with respect to the Q-function utilizing ε-soft or ε-greedy insurance policies.

Sarsa in an on-policy technique as a result of it updates Q-values primarily based on the present coverage adopted by the agent.

Q-learning is likely one of the hottest algorithms in reinforcement studying. It’s virtually similar to Sarsa apart from the small change within the replace rule:

The one distinction is that we changed the subsequent Q-value by the utmost Q-value of the subsequent state primarily based on the optimum motion that results in that state. In apply, this substitution makes Q-learning is extra performant than Sarsa in most issues.

On the similar time, if we rigorously observe the method, we will discover that your entire expression is derived from the Bellman optimality equation. From this attitude, the Bellman equation ensures that the iterative updates of Q-values result in their convergence to optimum Q-values.

Q-learning is an off-policy algorithm: it updates Q-values primarily based on the very best determination that may be taken with out contemplating the behaviour coverage utilized by the agent.

Anticipated Sarsa is an algorithm derived from Q-learning. As a substitute of utilizing the utmost Q-value, it calculates the anticipated Q-value of the subsequent action-state worth primarily based on the chances of taking every motion beneath the present coverage.

In comparison with regular Sarsa, Anticipated Sarsa requires extra computations however in return, it takes into consideration extra info at each replace step. Consequently, Anticipated Sarsa mitigates the affect of transition randomness when deciding on the subsequent motion, significantly in the course of the preliminary levels of studying. Subsequently, Anticipated Sarsa gives the benefit of larger stability throughout a broader vary of studying step-sizes α than regular Sarsa.

Anticipated Sarsa is an on-policy technique however could be tailored to an off-policy variant just by using separate behaviour and goal insurance policies for knowledge technology and studying respectively.

Up till this text, we have now been discussing a set algorithms, all of which make the most of the maximization operator throughout grasping coverage updates. Nevertheless, in apply, the max operator over all values results in overestimation of values. This challenge significantly arises initially of the educational course of when Q-values are initialized randomly. Consequently, calculating the utmost over these preliminary noisy values typically ends in an upward bias.

As an illustration, think about a state S the place true Q-values for each motion are equal to Q(S, a) = 0. Because of random initialization, some preliminary estimations will fall under zero and one other half can be above 0.

- The utmost of true values is 0.

- The utmost of random estimates is a optimistic worth (which known as maximization bias).

Instance

Allow us to think about an instance from the Sutton and Barto e-book the place maximization bias turns into an issue. We’re coping with the atmosphere proven within the diagram under the place C is the preliminary state, A and D are terminal states.

The transition reward from C to both B or D is 0. Nevertheless, transitioning from B to A ends in a reward sampled from a traditional distribution with a imply of -0.1 and variance of 1. In different phrases, this reward is unfavorable on common however can often be optimistic.

Principally, on this atmosphere the agent faces a binary alternative: whether or not to maneuver left or proper from C. The anticipated return is obvious in each circumstances: the left trajectory ends in an anticipated return G = -0.1, whereas the suitable path yields G = 0. Clearly, the optimum technique consists of all the time going to the suitable aspect.

However, if we fail to deal with the maximization bias, then the agent may be very prone to prioritize the left path in the course of the studying course of. Why? The utmost calculated from the conventional distribution will lead to optimistic updates to the Q-values in state B. Consequently, when the agent begins from C, it’ll greedily select to maneuver to B fairly than to D, whose Q-value stays at 0.

To achieve a deeper understanding of why this occurs, allow us to carry out a number of calculations utilizing the folowing parameters:

- studying price α = 0.1

- low cost price γ = 0.9

- all preliminary Q-values are set to 0.

Iteration 1

Within the first iteration, the Q-value for going to B and D are each equal to 0. Allow us to break the tie by arbitrarily selecting B. Then, the Q-value for the state (C, ←) is up to date. For simplicity, allow us to assume that the utmost worth from the outlined distribution is a finite worth of three. In actuality, this worth is larger than 99% percentile of our distribution:

The agent then strikes to A with the sampled reward R = -0.3.

Iteration 2

The agent reaches the terminal state A and a brand new episode begins. Ranging from C, the agent faces the selection of whether or not to go to B or D. In our situations, with an ε-greedy technique, the agent will virtually choose going to B:

The analogous replace is then carried out on the state (C, ←). Consequently, its Q-value will get solely greater:

Regardless of sampling a unfavorable reward R = -0.4 and updating B additional down, it doesn’t alter the state of affairs as a result of the utmost all the time stays at 3.

The second iteration terminates and it has solely made the left path extra prioritized for the agent over the suitable one. Consequently, the agent will proceed making its preliminary strikes from C to the left, believing it to be the optimum alternative, when in truth, it isn’t.

One probably the most elegant options to get rid of maximization bias consists of utilizing the double studying algorithm, which symmetrically makes use of two Q-function estimates.

Suppose we have to decide the maximizing motion and its corresponding Q-value to carry out an replace. The double studying strategy operates as follows:

- Use the primary operate Q₁ to seek out the maximizing motion a⁎ = argmaxₐQ₁(a).

- Use the second operate Q₂ to estimate the worth of the chosen motion a⁎.

The each capabilities Q₁ and Q₂ can be utilized in reverse order as properly.

In double studying, just one estimate Q (not each) is up to date on each iteration.

Whereas the primary Q-function selects one of the best motion, the second Q-function offers its unbiased estimation.

Instance

We can be trying on the instance of how double studying is utilized to Q-learning.

Iteration 1

As an instance how double studying operates, allow us to think about a maze the place the agent can transfer one step in any of 4 instructions throughout every iteration. Our goal is to replace the Q-function utilizing the double Q-learning algorithm. We are going to use the educational price α = 0.1 and the low cost price γ = 0.9.

For the primary iteration, the agent begins at cell S = A2 and, following the present coverage, strikes one step proper to S’ = B2 with the reward of R = 2.

We assume that we have now to make use of the second replace equation within the pseudocode proven above. Allow us to rewrite it:

Since our agent strikes to state S’ = B2, we have to use its Q-values. Allow us to have a look at the present Q-table of state-action pairs together with B2:

We have to discover an motion for S’ = B2 that maximizes Q₁ and in the end use the respective Q₂-value for a similar motion.

- The utmost Q₁-value is achieved by taking the ← motion (q = 1.2, pink circle).

- The corresponding Q₂-value for the motion ← is q = 0.7 (yellow circle).

Allow us to rewrite the replace equation in an easier type:

Assuming that the preliminary estimate Q₂(A2, →) = 0.5, we will insert values and carry out the replace:

Iteration 2

The agent is now situated at B2 and has to decide on the subsequent motion. Since we’re coping with two Q-functions, we have now to seek out their sum:

Relying on a sort of our coverage, we have now to pattern the subsequent motion from a distribution. As an illustration, if we use an ε-greedy coverage with ε = 0.08, then the motion distribution may have the next type:

We are going to suppose that, with the 94% likelihood, we have now sampled the ↑ motion. Which means the agent will transfer subsequent to the S’ = B3 cell. The reward it receives is R = -3.

For this iteration, we assume that we have now sampled the primary replace equation for the Q-function. Allow us to break it down:

We have to know Q-values for all actions similar to B3. Right here they’re:

Since this time we use the primary replace equation, we take the utmost Q₂-value (pink circle) and use the respective Q₁-value (yellow circle). Then we will rewrite the equation in a simplified type:

After making all worth substitutions, we will calculate the ultimate consequence:

We’ve regarded on the instance of double Q-learning, which mitigates the maximization bias within the Q-learning algorithm. This double studying strategy will also be prolonged as properly to Sarsa and Anticipated Sarsa algorithms.

As a substitute of selecting which replace equation to make use of with the p = 0.5 likelihood on every iteration, double studying could be tailored to iteratively alternate between each equations.

Regardless of their simplicity, temporal distinction strategies are amongst probably the most extensively used strategies in reinforcement studying in the present day. What can be attention-grabbing is which might be additionally extensively utilized in different prediction issues corresponding to time collection evaluation, inventory prediction, or climate forecasting.

Up to now, we have now been discussing solely a particular case of TD studying when n = 1. As we are going to see within the subsequent article, it may be useful to set n to larger values in sure conditions.

We’ve not lined it but, but it surely seems that management for TD algorithms could be applied by way of actor-critic strategies which can be mentioned on this collection sooner or later. For now, we have now solely reused the concept of GPI launched in dynamic programming algorithms.

All photographs except in any other case famous are by the writer.