How BRIA AI used distributed training in Amazon SageMaker to train latent diffusion foundation models for commercial use

This post is co-written with Bar Fingerman from BRIA AI.

This post explains how BRIA AI trained BRIA AI 2.0, a high-resolution (1024×1024) text-to-image diffusion model, quickly and economically on a dataset comprising petabytes of licensed images. Amazon SageMaker training jobs and Amazon SageMaker distributed training libraries took on the undifferentiated heavy lifting associated with infrastructure management. SageMaker helps you build, train, and deploy machine learning (ML) models for your use cases with fully managed infrastructure, tools, and workflows.

BRIA AI is a pioneering platform specializing in responsible and open generative artificial intelligence (AI) for developers, offering advanced models exclusively trained on licensed data from partners such as Getty Images, DepositPhotos, and Alamy. BRIA AI caters to major brands, animation and gaming studios, and marketing agencies with its multimodal suite of generative models. Emphasizing ethical sourcing and commercial readiness, BRIA AI’s models are source-available, secure, and optimized for integration with various tech stacks. By addressing foundational challenges in data procurement, continuous model training, and seamless technology integration, BRIA AI aims to be the go-to platform for creative AI application developers.

You can also find the BRIA AI 2.0 model for image generation on AWS Marketplace.

This blog post discusses how BRIA AI worked with AWS to address the following key challenges:

- Achieving out-of-the-box operational excellence for large model training

- Reducing time-to-train by using data parallelism

- Maximizing GPU utilization with efficient data loading

- Reducing model training cost (by paying only for net training time)

Importantly, BRIA AI was able to use SageMaker while keeping its original HuggingFace Accelerate (Accelerate) software stack intact. Thus, transitioning to SageMaker training didn’t require changes to BRIA AI’s model implementation or training code. Later, BRIA AI was able to seamlessly evolve their software stack on SageMaker along with their model training.

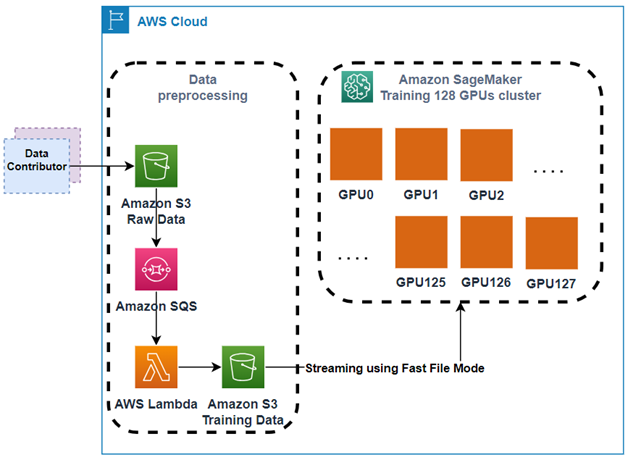

Training pipeline architecture

BRIA AI’s training pipeline consists of two main components:

Data preprocessing:

- Data contributors upload licensed raw image files to BRIA AI’s Amazon Simple Storage Service (Amazon S3) bucket.

- An image pre-processing pipeline using Amazon Simple Queue Service (Amazon SQS) and AWS Lambda functions generates missing image metadata and packages training data into large webdataset files, which later allow efficient data streaming directly from an S3 bucket and data sharding across GPUs. See the Challenge 3 section. Webdataset is a PyTorch implementation, so it fits well with Accelerate.
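As a rough illustration of the packaging step, the sketch below writes a few small samples into a single TAR shard and reads them back. It assumes the common WebDataset convention that files sharing a basename (for example `000001.jpg` and `000001.txt`) form one sample. The function names, keys, and toy payloads are hypothetical, and only the Python standard library is used, so this is not BRIA AI’s actual pipeline code.

```python
import io
import tarfile


def write_shard(samples, fileobj):
    """Write (key, image_bytes, caption) samples into one TAR shard.

    Files that share a basename (key.jpg, key.txt) belong to the same sample,
    which is the grouping convention WebDataset-style readers rely on.
    """
    with tarfile.open(fileobj=fileobj, mode="w") as tar:
        for key, image_bytes, caption in samples:
            members = (("jpg", image_bytes), ("txt", caption.encode("utf-8")))
            for ext, payload in members:
                info = tarfile.TarInfo(name=f"{key}.{ext}")
                info.size = len(payload)
                tar.addfile(info, io.BytesIO(payload))


def read_shard(fileobj):
    """Read a shard sequentially and regroup its members into samples."""
    samples = {}
    with tarfile.open(fileobj=fileobj, mode="r") as tar:
        for member in tar:
            key, ext = member.name.rsplit(".", 1)
            samples.setdefault(key, {})[ext] = tar.extractfile(member).read()
    return samples


# Hypothetical toy data standing in for real images and metadata.
toy_samples = [
    ("000001", b"\xff\xd8fake-jpeg-1", "a panda pouring milk"),
    ("000002", b"\xff\xd8fake-jpeg-2", "a wooden tray of pastries"),
]
buffer = io.BytesIO()
write_shard(toy_samples, buffer)
buffer.seek(0)
restored = read_shard(buffer)
```

Because a shard is read front to back, one large object can be streamed with a single sequential read instead of many small object fetches.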

Model training:

- SageMaker distributed training jobs manage the training cluster and run the training itself.

- Data is streamed from S3 to the training instances using SageMaker’s FastFile mode.

Pre-training challenges and solutions

Pre-training foundation models is a challenging task. Challenges include cost, performance, orchestration, monitoring, and the engineering expertise needed throughout the weeks-long training process.

The four challenges we faced were:

Challenge 1: Achieving out-of-the-box operational excellence for large model training

To orchestrate the training cluster and recover from failures, BRIA AI relies on SageMaker Training Jobs’ resiliency features. These include cluster health checks, built-in retries, and job resiliency. Before your job starts, SageMaker runs GPU health checks and verifies NVIDIA Collective Communications Library (NCCL) communication on GPU instances, replacing faulty instances (if necessary) to make sure your training script starts running on a healthy cluster of instances. You can also configure SageMaker to automatically retry training jobs that fail with a SageMaker internal server error (ISE). As part of retrying a job, SageMaker replaces instances that encountered unrecoverable GPU errors with fresh instances, reboots the healthy instances, and starts the job again. This results in faster restarts and workload completion. By using AWS Deep Learning Containers, the BRIA AI workload benefited from the SageMaker SDK automatically setting the necessary environment variables to tune NVIDIA NCCL AWS Elastic Fabric Adapter (EFA) networking based on well-known best practices. This helps maximize the workload throughput.

To monitor the training cluster, BRIA AI used the built-in SageMaker integration with Amazon CloudWatch logs (application logs) and CloudWatch metrics (CPU, GPU, and networking metrics).
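To make the retry configuration mentioned above more concrete, here is a minimal sketch of how the retry setting could be attached to a training job request. `RetryStrategy` and `MaximumRetryAttempts` are field names from the SageMaker `CreateTrainingJob` API. The helper function, the job name, and the chosen retry count are hypothetical, and the dictionary is only built locally, not sent to AWS.

```python
def with_retry_strategy(training_job_request, max_attempts):
    """Return a copy of a CreateTrainingJob-style request with retries enabled."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    request = dict(training_job_request)
    # SageMaker reads the retry budget from RetryStrategy.MaximumRetryAttempts.
    request["RetryStrategy"] = {"MaximumRetryAttempts": max_attempts}
    return request


# Hypothetical, heavily abbreviated request; a real one needs many more fields.
base_request = {"TrainingJobName": "example-pretraining-job"}
request = with_retry_strategy(base_request, max_attempts=3)
```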

Challenge 2: Reducing time-to-train by using data parallelism

BRIA AI needed to train a stable-diffusion 2.0 model from scratch on a petabyte-scale licensed image dataset. Training on a single GPU could take a few months to complete. To meet deadline requirements, BRIA AI used data parallelism by running SageMaker training on 16 p4de.24xlarge instances, reducing the total training time to under two weeks. Distributed data parallel training allows for much faster training of large models by splitting data across many devices that train in parallel, while syncing gradients regularly to keep a consistent shared model. It uses the combined computing power of many devices. BRIA AI used a cluster of 4 p4de.24xlarge instances (8xA100 80GB NVIDIA GPUs) to achieve a throughput of 1.8 iterations per second for an effective batch size of 2048 (batch=8, bf16, accumulate=2).
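One way to read the batch figures is that the effective batch size is the per-GPU batch multiplied by the gradient accumulation steps and the number of GPUs. The post does not spell out this formula, so treat it as an assumption. Under that reading, batch=8 and accumulate=2 yield an effective batch size of 2048 on 128 GPUs, which is 16 instances with 8 GPUs each. The helper name below is hypothetical.

```python
def effective_batch_size(per_gpu_batch, grad_accum_steps, num_instances, gpus_per_instance=8):
    """Samples contributing to one optimizer step across the whole cluster."""
    num_gpus = num_instances * gpus_per_instance
    return per_gpu_batch * grad_accum_steps * num_gpus


# Assumed reading of "batch=8, accumulate=2" on a 16-instance, 128-GPU cluster.
full_cluster_batch = effective_batch_size(
    per_gpu_batch=8, grad_accum_steps=2, num_instances=16
)
```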

p4de.24xlarge instances include 600 GB per second peer-to-peer GPU communication with NVIDIA NVSwitch and 400 gigabits per second (Gbps) instance networking with support for EFA and NVIDIA GPUDirect RDMA (remote direct memory access).

Note: Currently you can use p5.48xlarge instances (8xH100 80GB GPUs) with 3200 Gbps networking between instances using EFA 2.0 (not used in this pre-training by BRIA AI).

Accelerate is a library that enables the same PyTorch code to be run across a distributed configuration with minimal code adjustments.

BRIA AI used Accelerate for small scale training off the cloud. When it was time to scale out training in the cloud, BRIA AI was able to continue using Accelerate, thanks to its built-in integration with SageMaker and the Amazon SageMaker distributed data parallel library (SMDDP). SMDDP is purpose built for the AWS infrastructure, reducing communications overhead in two ways:

- The library performs AllReduce, a key operation during distributed training that’s responsible for a large portion of communication overhead (optimal GPU utilization with efficient AllReduce overlapping with a backward pass).

- The library performs optimized node-to-node communication by fully utilizing the AWS network infrastructure and Amazon Elastic Compute Cloud (Amazon EC2) instance topology (optimal bandwidth use with balanced fusion buffer).
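To show what AllReduce contributes to data parallel training, the following toy simulation averages per-worker gradients so that every replica applies the same update. It is a plain-Python sketch on a one-parameter linear model with hypothetical function names. It does not model SMDDP’s optimizations (overlap with the backward pass, fusion buffers, topology-aware communication), only the gradient-averaging arithmetic.

```python
def local_gradient(w, shard):
    """Gradient of mean squared error for y = w * x over one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values):
    """Stand-in for AllReduce: every worker receives the same averaged value."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def data_parallel_step(w, shards, lr):
    """One synchronous step: local gradients, AllReduce, identical updates."""
    grads = [local_gradient(w, shard) for shard in shards]
    synced = all_reduce_mean(grads)
    return [w - lr * g for g in synced]


# Toy dataset for y = 3x, split evenly across 4 simulated workers.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i::4] for i in range(4)]

replica_weights = data_parallel_step(0.0, shards, lr=0.01)
single_device_weight = 0.0 - 0.01 * local_gradient(0.0, data)
```

With equally sized shards, the averaged gradient matches the full-batch gradient, so the replicas stay identical to what a single device would compute on the whole batch.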

Note that SageMaker training supports many open source distributed training libraries, for example Fully Sharded Data Parallel (FSDP) and DeepSpeed. BRIA AI used FSDP in SageMaker in other training workloads. In this case, by using the ShardingStrategy.SHARD_GRAD_OP feature, BRIA AI was able to achieve an optimal batch size and accelerate their training process.

Challenge 3: Achieving efficient data loading

The BRIA AI dataset included hundreds of millions of images that needed to be delivered from storage onto GPUs for processing. Efficiently accessing this large amount of data across a training cluster presents several challenges:

- The data might not fit into the storage of a single instance.

- Downloading the multi-terabyte dataset to each training instance is time consuming while the GPUs sit idle.

- Copying millions of small image files from Amazon S3 can become a bottleneck because of the accumulated roundtrip time of fetching objects from S3.

- The data needs to be split correctly between instances.

BRIA AI addressed these challenges by using SageMaker fast file input mode, which provided the following out-of-the-box features:

- Streaming: Instead of copying data when training starts, or using an additional distributed file system, we chose to stream data directly from Amazon S3 to the training instances using SageMaker fast file mode. This allows training to start immediately without waiting for downloads. Streaming also reduces the need to fit datasets into instance storage.

- Data distribution: Fast file mode was configured to shard the dataset files between multiple instances using S3DataDistributionType=ShardedByS3Key.

- Local file access: Fast file mode provides a local POSIX filesystem interface to data in Amazon S3. This allowed BRIA AI’s data loader to access remote data as if it were local.

- Packaging files into large containers: Using millions of small image and metadata files adds overhead when streaming data from object storage like Amazon S3. To reduce this overhead, BRIA AI compacted multiple files into large TAR file containers (2–5 GB), which can be efficiently streamed from S3 to the instances using fast file mode. Specifically, BRIA AI used WebDataset for efficient local data loading and used a policy whereby there is no data loading synchronization between instances and each GPU loads random batches through a fixed seed. This policy helps eliminate bottlenecks and maintains fast and deterministic data loading performance.
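The settings above can be sketched as a training input channel plus a seeded, coordination-free shard ordering per GPU. The channel field names (`InputMode`, `S3DataSource`, `S3DataDistributionType`) follow the SageMaker `CreateTrainingJob` API. The bucket URI, shard file names, helper names, and the way the seed is combined with rank and epoch are hypothetical and only illustrate the policy, not BRIA AI’s actual data loader.

```python
import random


def fast_file_channel(s3_uri, channel_name="train"):
    """Input channel that streams from S3 and shards objects across instances."""
    return {
        "ChannelName": channel_name,
        "InputMode": "FastFile",
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,
                "S3DataDistributionType": "ShardedByS3Key",
            }
        },
    }


def shard_order_for_gpu(local_shards, base_seed, global_rank, epoch):
    """Deterministic shard order for one GPU, with no cross-instance sync.

    Each GPU derives its own random generator from a fixed seed, its rank,
    and the epoch, so the order is reproducible without any communication.
    """
    rng = random.Random(f"{base_seed}-{global_rank}-{epoch}")
    order = sorted(local_shards)
    rng.shuffle(order)
    return order


# Hypothetical bucket and shard names.
channel = fast_file_channel("s3://example-bucket/webdataset/")
shards_on_instance = [f"shard-{i:05d}.tar" for i in range(8)]
order_a = shard_order_for_gpu(shards_on_instance, base_seed=42, global_rank=3, epoch=0)
order_b = shard_order_for_gpu(shards_on_instance, base_seed=42, global_rank=3, epoch=0)
```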

For more on data loading considerations, see the Choose the best data source for your Amazon SageMaker training job blog post.

Challenge 4: Paying only for net training time

Pre-training large language models is not continuous. The model training often requires intermittent stops for evaluation and adjustments. For instance, the model might stop converging and need adjustments, or you might want to pause training to test the model, refine data, or troubleshoot issues. These pauses result in extended periods where the GPU cluster is idle. With SageMaker training jobs, BRIA AI was able to pay only for the duration of their active training time. This allowed BRIA AI to train models at a lower cost and with greater efficiency.

BRIA AI’s training strategy consists of three steps of increasing resolution for optimal model convergence:

- Initial training at 256×256 resolution on a 32-GPU cluster

- Progressive refinement at 512×512 resolution on a 64-GPU cluster

- Final training at 1024×1024 resolution on a 128-GPU cluster

In each step, the computing required was different due to applied tradeoffs, such as the batch size per resolution and the upper limit of the GPU and gradient accumulation. The tradeoff is between cost-saving and model coverage.

BRIA AI’s cost calculations were facilitated by maintaining a consistent iterations-per-second rate, which allowed for accurate estimation of training time. This enabled precise determination of the required number of iterations and calculation of the training compute cost per hour.
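With a steady iterations-per-second rate, the estimate reduces to simple arithmetic: training hours are the planned iterations divided by the rate, and cost is hours multiplied by the number of instances and the hourly instance price. The sketch below assumes that simple model. The iteration count and hourly price are made-up placeholder values, not BRIA AI’s figures or AWS pricing.

```python
def estimate_training_cost(total_iterations, iterations_per_second,
                           num_instances, price_per_instance_hour):
    """Return (training_hours, total_cost) for a constant-throughput run."""
    training_hours = total_iterations / (iterations_per_second * 3600.0)
    total_cost = training_hours * num_instances * price_per_instance_hour
    return training_hours, total_cost


# Placeholder inputs: only the 1.8 iterations per second rate comes from the post.
hours, cost = estimate_training_cost(
    total_iterations=648_000,
    iterations_per_second=1.8,
    num_instances=16,
    price_per_instance_hour=10.0,  # hypothetical price, not an AWS quote
)
```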

BRIA AI training GPU utilization and average batch size time:

- GPU utilization: The average is over 98 percent, indicating that the GPUs were kept busy for the whole training cycle and that our data loader streams data efficiently at a high rate.

- Iterations per second: The training strategy consists of three steps (initial training at 256×256, progressive refinement to 512×512, and final training at 1024×1024 resolution) for optimal model convergence. For each step, the amount of computing varies because there are tradeoffs that we can apply with different batch sizes per resolution while considering the upper limit of the GPU and gradient accumulation, where the tension is cost-saving against model coverage.

Result examples

Prompts used for generating the images

Prompt 1, upper left image: A stylish man sitting casually on outdoor steps, wearing a green hoodie, matching green pants, black shoes, and sunglasses. He’s smiling and has neatly groomed hair and a short beard. A brown leather bag is placed beside him. The background features a brick wall and a window with white frames.

Prompt 2, upper right image: A vibrant Indian wedding ceremony. The smiling bride in a magenta saree with gold embroidery and henna-adorned hands sits adorned in traditional gold jewelry. The groom, sitting in front of her, in a golden sherwani and white dhoti, pours water into a ceremonial vessel. They’re surrounded by flowers, candles, and leaves in a colorful, festive atmosphere filled with traditional objects.

Prompt 3, lower left image: A wooden tray filled with a variety of delicious pastries. The tray includes a croissant dusted with powdered sugar, a chocolate-filled croissant, a partially eaten croissant, a Danish pastry and a muffin next to a small jar of chocolate sauce, and a bowl of coffee beans, all arranged on a beige cloth.

Prompt 4, lower right image: A panda pouring milk into a white cup on a table with coffee beans, flowers, and a coffee press. The background features a black-and-white picture and a decorative wall piece.

Conclusion

In this post, we saw how Amazon SageMaker enabled BRIA AI to train a diffusion model efficiently, without needing to manually provision and configure infrastructure. By using SageMaker training, BRIA AI was able to reduce costs and accelerate iteration speed, reducing training time with distributed training while maintaining 98 percent GPU utilization and maximizing value per cost. By taking on the undifferentiated heavy lifting, SageMaker empowered BRIA AI’s team to be more productive and deliver innovations faster. The ease of use and automation offered by SageMaker training jobs make it an attractive option for any team looking to efficiently train large, state-of-the-art models.

To learn more about how SageMaker can help you train large AI models efficiently and cost-effectively, explore the Amazon SageMaker page. You can also reach out to your AWS account team to discover how to unlock the full potential of your large-scale AI projects.

About the Authors

Bar Fingerman, Head Of Engineering AI/ML at BRIA AI.

Doron Bleiberg, Senior Startup Solutions Architect.

Gili Nachum, Principal Gen AI/ML Specialist Solutions Architect.

Erez Zarum, Startup Solutions Architect.
