Understanding and Implementing Medprompt | by Anand Subramanian | Jul, 2024

We now perform choice-shuffling ensembling by shuffling the order of answer choices for each test question, creating multiple variants of the same question. The LLM is then prompted with these variants, along with the corresponding few-shot exemplars, to generate reasoning steps and an answer for each variant. Finally, we perform a majority vote over the predictions from all variants and select the final prediction.

The code related to this implementation can be found at this GitHub repo link.

We use the MedQA [6] dataset for implementing and evaluating Medprompt. We first define helper functions for reading and writing the JSONL files.

import json

def write_jsonl_file(file_path, dict_list):
    """
    Write a list of dictionaries to a JSON Lines file.

    Args:
    - file_path (str): The path to the file where the data will be written.
    - dict_list (list): A list of dictionaries to write to the file.
    """
    with open(file_path, 'w', encoding="utf-8") as file:
        for dictionary in dict_list:
            # One JSON object per line, terminated by a newline.
            json_line = json.dumps(dictionary)
            file.write(json_line + '\n')

def read_jsonl_file(file_path):
    """
    Parses a JSONL (JSON Lines) file and returns a list of dictionaries.

    Args:
        file_path (str): The path to the JSONL file to be read.

    Returns:
        list of dict: A list where each element is a dictionary representing
            a JSON object from the file.
    """
    jsonl_lines = []
    with open(file_path, 'r', encoding="utf-8") as file:
        for line in file:
            json_object = json.loads(line)
            jsonl_lines.append(json_object)
    return jsonl_lines
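A quick way to check this kind of helper is a round trip through a temporary file. The sketch below restates the two functions compactly under hypothetical names (`write_jsonl`, `read_jsonl`) so that it runs on its own; the sample record is made up for illustration and only mimics the `question` / `options` / `answer` fields used later in this article.

```python
import json
import os
import tempfile

def write_jsonl(path, records):
    # One JSON object per line, newline-terminated.
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")

def read_jsonl(path):
    # Skip blank lines so a trailing newline does not break parsing.
    with open(path, "r", encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

# Hypothetical MedQA-style record, for illustration only.
records = [
    {"question": "Which vitamin deficiency causes scurvy?",
     "options": {"A": "Vitamin A", "B": "Vitamin C"},
     "answer": "Vitamin C"},
]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "sample.jsonl")
    write_jsonl(path, records)
    round_tripped = read_jsonl(path)
```

If the round trip preserves the records exactly, the same pair of functions can be used to cache intermediate results between pipeline stages.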

Implementing Self-Generated CoT

For our implementation, we use the training set from MedQA. We implement a zero-shot CoT prompt and process all the training questions. We use GPT-4o in our implementation. For each question, we generate the CoT and the corresponding answer. We define a prompt based on the template provided in the Medprompt paper.

system_prompt = """You are an expert medical professional. You are provided with a medical question with multiple answer choices.
Your goal is to think through the question carefully and explain your reasoning step by step before selecting the final answer.
Respond only with the reasoning steps and answer as specified below.
Below is the format for each question and answer:

Input:
## Question: {{question}}
{{answer_choices}}

Output:
## Answer
(model generated chain of thought explanation)
Therefore, the answer is [final model answer (e.g. A,B,C,D)]"""

def build_few_shot_prompt(system_prompt, question, examples, include_cot=True):
    """
    Builds the few-shot prompt.

    Args:
        system_prompt (str): Task instruction for the LLM.
        question (dict): The question for which to create a query, formatted as
            required by `create_query`.
        examples (list of dict): Few-shot exemplars; each needs an "answer_idx"
            entry and, when include_cot is True, a "cot" entry.
        include_cot (bool): Whether the exemplar answers include the CoT reasoning.

    Returns:
        list of dict: A list of messages, including a system message defining
            the task, the exemplar turns, and a user message with the input question.
    """
    # create_query and format_answer are helpers that are not shown in this section.
    messages = [{"role": "system", "content": system_prompt}]
    for elem in examples:
        messages.append({"role": "user", "content": create_query(elem)})
        if include_cot:
            messages.append({"role": "assistant", "content": format_answer(elem["cot"], elem["answer_idx"])})
        else:
            answer_string = f"""## Answer\nTherefore, the answer is {elem["answer_idx"]}"""
            messages.append({"role": "assistant", "content": answer_string})
    messages.append({"role": "user", "content": create_query(question)})
    return messages

from openai import OpenAI

# Assumes the openai>=1.0 Python client; OpenAI() reads OPENAI_API_KEY from the environment.
client = OpenAI()

def get_response(messages, model_name, temperature = 0.0, max_tokens = 10):
    """
    Obtains the responses/answers of the model through the chat-completions API.

    Args:
        messages (list of dict): The built messages provided to the API.
        model_name (str): Name of the model to access through the API.
        temperature (float): Sampling temperature (0 to 2 for the OpenAI API) that
            controls the randomness of the output. A temperature value of 0 ideally
            makes the model pick the most likely token, making the outputs
            close to deterministic.
        max_tokens (int): Maximum number of tokens that the model should generate.

    Returns:
        str: The response message content from the model.
    """
    response = client.chat.completions.create(
        model=model_name,
        messages=messages,
        temperature=temperature,
        max_tokens=max_tokens
    )
    return response.choices[0].message.content
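The code in this section calls `create_query`, `format_answer`, and `build_zero_shot_prompt` without showing them. The following is a minimal sketch of what such helpers might look like, assuming each question is a dict with a `question` string and an `options` dict keyed by letter. These bodies are assumptions made for illustration, not the author's implementations, which may format the text differently.

```python
def create_query(item):
    """Hypothetical helper: renders a question dict as the 'Input' part of the prompt."""
    # Sort option labels so the choices are always listed as A, B, C, ...
    choices = "\n".join(f"{label}. {text}" for label, text in sorted(item["options"].items()))
    return f"## Question: {item['question']}\n{choices}"

def format_answer(cot, answer_idx):
    """Hypothetical helper: renders reasoning plus final answer in the 'Output' format."""
    return f"## Answer\n{cot}\nTherefore, the answer is {answer_idx}"

def build_zero_shot_prompt(system_prompt, question):
    """Hypothetical helper: a system message followed by a single user query."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": create_query(question)},
    ]

# Made-up sample question, for illustration only.
sample = {
    "question": "Which vitamin deficiency causes scurvy?",
    "options": {"A": "Vitamin A", "B": "Vitamin C"},
}
messages = build_zero_shot_prompt("You are an expert medical professional.", sample)
formatted = format_answer("Scurvy results from a lack of ascorbic acid.", "B")
```

Whatever the exact formatting, the exemplar answers and the expected model output should share one layout, since the parsing step relies on the first and last lines of the response.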

We also define helper functions for parsing the reasoning and the final answer option from the LLM response.

import re

def matches_ans_option(s):
    """
    Checks if the string starts with the specific pattern 'Therefore, the answer is [A-Z]'.

    Args:
        s (str): The string to be checked.

    Returns:
        bool: True if the string matches the pattern, False otherwise.
    """
    return bool(re.match(r'^Therefore, the answer is [A-Z]', s))

def extract_ans_option(s):
    """
    Extracts the answer option (a single capital letter) from the start of the string.

    Args:
        s (str): The string containing the answer pattern.

    Returns:
        str or None: The captured answer option if the pattern is found, otherwise None.
    """
    match = re.search(r'^Therefore, the answer is ([A-Z])', s)
    if match:
        return match.group(1)  # Returns the captured letter
    return None

def matches_answer_start(s):
    """
    Checks if the string starts with the markdown header '## Answer'.

    Args:
        s (str): The string to be checked.

    Returns:
        bool: True if the string starts with '## Answer', False otherwise.
    """
    return s.startswith("## Answer")

def validate_response(s):
    """
    Validates that a multi-line string response starts with '## Answer' and ends with the answer pattern.

    Args:
        s (str): The multi-line string response to be validated.

    Returns:
        bool: True if the response is valid, False otherwise.
    """
    file_content = s.split("\n")
    return matches_ans_option(file_content[-1]) and matches_answer_start(s)

def parse_answer(response):
    """
    Parses a response that starts with '## Answer', extracting the reasoning and the answer choice.

    Args:
        response (str): The multi-line string response containing the answer and reasoning.

    Returns:
        tuple: A tuple containing the extracted CoT reasoning and the answer choice.
    """
    split_response = response.split("\n")
    assert split_response[0] == "## Answer"
    # Everything between the header and the final line is the reasoning.
    cot_reasoning = "\n".join(split_response[1:-1]).strip()
    ans_choice = extract_ans_option(split_response[-1])
    return cot_reasoning, ans_choice
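To make the accepted format concrete, here is a compact, self-contained restatement of the validate-then-parse logic applied to one well-formed and one malformed response. The function name `parse_response` and the sample strings are hypothetical. Unlike the helpers above, this sketch strips surrounding whitespace first, so a trailing newline does not cause a rejection; that is a design choice of the sketch, not something the article states.

```python
import re

ANSWER_HEADER = "## Answer"
ANSWER_PATTERN = re.compile(r"^Therefore, the answer is ([A-Z])")

def parse_response(response):
    """Return (reasoning, answer_letter), or None if the format is not followed."""
    lines = response.strip().split("\n")
    match = ANSWER_PATTERN.match(lines[-1])
    if lines[0] != ANSWER_HEADER or match is None:
        return None
    # Everything between the header and the final line is the reasoning.
    reasoning = "\n".join(lines[1:-1]).strip()
    return reasoning, match.group(1)

# Made-up responses, for illustration only.
good = "## Answer\nScurvy results from a lack of ascorbic acid.\nTherefore, the answer is B"
bad = "The answer is probably B."

parsed_good = parse_response(good)
parsed_bad = parse_response(bad)
```

Returning `None` for malformed output keeps the caller's filtering step to a single check.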

We now process the questions in the training set of MedQA. We obtain CoT responses and answers for all questions and store them in a folder.

import os
from tqdm import tqdm

train_data = read_jsonl_file("data/phrases_no_exclude_train.jsonl")

cot_responses = []
os.makedirs("cot_responses", exist_ok=True)
existing_files = os.listdir("cot_responses/")

for idx, item in enumerate(tqdm(train_data)):
    # Skip questions that already have a saved response, so the loop can be resumed.
    if str(idx) + ".txt" in existing_files:
        continue
    prompt = build_zero_shot_prompt(system_prompt, item)
    try:
        response = get_response(prompt, model_name="gpt-4o", max_tokens=500)
        cot_responses.append(response)
        with open(os.path.join("cot_responses", str(idx) + ".txt"), "w", encoding="utf-8") as f:
            f.write(response)
    except Exception as e:
        print(str(e))
        cot_responses.append("")

We now iterate over all the generated responses to check whether they are valid and adhere to the prediction format defined in the prompt. We discard responses that do not conform to the required format. After that, we check the predicted answers against the ground truth for each question and retain only the questions for which the predicted answer matches the ground truth.

questions_dict = []
for idx, question in enumerate(tqdm(train_data)):
    response_path = os.path.join("cot_responses", str(idx) + ".txt")
    # No file is written when the API call failed, so skip those questions.
    if not os.path.exists(response_path):
        continue
    with open(response_path, encoding="utf-8") as f:
        file = f.read()
    if not validate_response(file):
        continue
    cot, pred_ans = parse_answer(file)
    dict_elem = {}
    dict_elem["idx"] = idx
    dict_elem["question"] = question["question"]
    dict_elem["answer"] = question["answer"]
    dict_elem["options"] = question["options"]
    dict_elem["cot"] = cot
    dict_elem["pred_ans"] = pred_ans
    questions_dict.append(dict_elem)

filtered_questions_dict = []
for item in tqdm(questions_dict):
    # Map the predicted letter back to its option text; an unknown letter maps to None.
    pred_ans = item["options"].get(item["pred_ans"])
    if pred_ans == item["answer"]:
        filtered_questions_dict.append(item)

Implementing the KNN model

Having processed the training set and obtained the CoT responses for all these questions, we now embed all questions using the text-embedding-ada-002 model from OpenAI.

def get_embedding(text, model="text-embedding-ada-002"):
    return client.embeddings.create(input=[text], model=model).data[0].embedding

for item in tqdm(filtered_questions_dict):
    item["embedding"] = get_embedding(item["question"])
    # Invert the options map (text -> letter) to recover the letter of the correct answer.
    inv_options_map = {v: k for k, v in item["options"].items()}
    item["answer_idx"] = inv_options_map[item["answer"]]

We now train a KNN model using these question embeddings. This acts as a retriever at inference time, as it helps us retrieve the datapoints from the training set that are most similar to a question from the test set.

import numpy as np
from sklearn.neighbors import NearestNeighbors

embeddings = np.array([d["embedding"] for d in filtered_questions_dict])
indices = list(range(len(filtered_questions_dict)))

# Cosine distance is used to compare the question embeddings.
knn = NearestNeighbors(n_neighbors=5, algorithm='auto', metric='cosine').fit(embeddings)
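To illustrate what retrieval under the cosine metric computes, here is a plain-Python sketch that ranks a handful of toy 2-D vectors by cosine distance to a query. It is a brute-force stand-in for intuition only, not a description of how scikit-learn implements the search; the function names and the vectors are hypothetical.

```python
import math

def cosine_distance(u, v):
    """Cosine distance: 1 - (u . v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)

def top_k_neighbors(query, embeddings, k):
    """Indices of the k stored embeddings closest to the query, nearest first."""
    distances = [cosine_distance(query, e) for e in embeddings]
    return sorted(range(len(embeddings)), key=lambda i: distances[i])[:k]

# Toy 2-D "embeddings" (hypothetical values, for illustration only).
train_embeddings = [
    [1.0, 0.0],   # index 0
    [0.0, 1.0],   # index 1
    [0.9, 0.1],   # index 2
    [-1.0, 0.0],  # index 3
]
query_embedding = [1.0, 0.05]

nearest = top_k_neighbors(query_embedding, train_embeddings, k=2)
```

Here the two vectors pointing in nearly the same direction as the query (indices 0 and 2) are returned, which is the behaviour the retriever relies on when picking exemplars for a test question.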

Implementing the Dynamic Few-Shot and Choice Shuffling Ensemble Logic

We can now run inference. We subsample 500 questions from the MedQA test set for our evaluation. For each question, we retrieve the 5 most similar questions from the train set using the KNN module, along with their respective CoT reasoning steps and predicted answers. We construct a few-shot prompt using these examples.

For each question, we also shuffle the order of the options 5 times to create different variants. We then use the constructed few-shot prompt to get the predicted answer for each of the variants with shuffled options.

import random

def shuffle_option_labels(answer_options):
    """
    Shuffles the options of the question.

    Parameters:
        answer_options (dict): A dictionary with the options.

    Returns:
        dict: A new dictionary with the shuffled options.
    """
    options = list(answer_options.values())
    random.shuffle(options)
    # Reassign the labels A, B, C, ... to the shuffled option texts.
    labels = [chr(i) for i in range(ord('A'), ord('A') + len(options))]
    shuffled_options_dict = {label: option for label, option in zip(labels, options)}
    return shuffled_options_dict
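The sketch below shows the shuffling step on a made-up question and how the correct answer's label can be tracked across variants by inverting the shuffled map. It is self-contained, so it restates the shuffle under a hypothetical name (`shuffle_options`) and takes a seeded `random.Random` instance purely to make the illustration reproducible.

```python
import random

def shuffle_options(options, rng):
    """Reassign the option texts to labels A, B, C, ... in a random order."""
    texts = list(options.values())
    rng.shuffle(texts)
    labels = [chr(ord("A") + i) for i in range(len(texts))]
    return dict(zip(labels, texts))

# Hypothetical question, for illustration only.
options = {"A": "Vitamin A", "B": "Vitamin C", "C": "Vitamin D", "D": "Vitamin K"}
answer_text = "Vitamin C"

rng = random.Random(0)  # seeded only to make the sketch reproducible
variants = []
for _ in range(5):
    shuffled = shuffle_options(options, rng)
    # Invert the map (text -> label) to find where the correct answer landed.
    label_of = {text: label for label, text in shuffled.items()}
    variants.append((shuffled, label_of[answer_text]))
```

Because predictions are later compared by option text rather than by letter, votes from differently shuffled variants remain comparable.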

test_samples = read_jsonl_file("final_processed_test_set_responses_medprompt.jsonl")

for question in tqdm(test_samples, colour="green"):
    question_variants = []
    prompt_variants = []
    cot_responses = []
    # Retrieve the 5 most similar training questions to use as few-shot exemplars.
    question_embedding = get_embedding(question["question"])
    distances, top_k_indices = knn.kneighbors([question_embedding], n_neighbors=5)
    top_k_dicts = [filtered_questions_dict[i] for i in top_k_indices[0]]
    question["outputs"] = []

    # Create 5 variants of the question with shuffled answer options.
    for idx in range(5):
        question_copy = question.copy()
        shuffled_options = shuffle_option_labels(question["options"])
        inv_map = {v: k for k, v in shuffled_options.items()}
        question_copy["options"] = shuffled_options
        question_copy["answer_idx"] = inv_map[question_copy["answer"]]
        question_variants.append(question_copy)
        prompt = build_few_shot_prompt(system_prompt, question_copy, top_k_dicts)
        prompt_variants.append(prompt)

    for prompt in tqdm(prompt_variants):
        response = get_response(prompt, model_name="gpt-4o", max_tokens=500)
        cot_responses.append(response)

    for question_sample, answer in zip(question_variants, cot_responses):
        if validate_response(answer):
            cot, pred_ans = parse_answer(answer)
        else:
            cot = ""
            pred_ans = ""
        # Store the prediction as option text so that variants with different labels can be compared.
        question["outputs"].append({"question": question_sample["question"], "options": question_sample["options"], "cot": cot, "pred_ans": question_sample["options"].get(pred_ans, "")})

We now evaluate the results of Medprompt over the test set. For each question, we have five predictions generated through the ensemble logic. We take the mode, or most frequently occurring prediction, for each question as the final prediction and evaluate the performance. Two edge cases are possible here:

- Two different answer options are predicted two times each, with no clear winner.

- There is an error with the response generated, meaning that we don't have a predicted answer option.

For both of these edge cases, we consider the question to be wrongly answered by the LLM.

from collections import Counter

def find_mode_string_list(string_list):
    """
    Finds the most frequently occurring strings.

    Parameters:
        string_list (list of str): A list of strings.

    Returns:
        list of str or None: A list containing the most frequent string(s) from the input list.
            Returns None if the input list is empty.
    """
    if not string_list:
        return None
    string_counts = Counter(string_list)
    max_freq = max(string_counts.values())
    mode_strings = [string for string, count in string_counts.items() if count == max_freq]
    return mode_strings

ctr = 0
for item in test_samples:
    pred_ans = [x["pred_ans"] for x in item["outputs"]]
    freq_ans = find_mode_string_list(pred_ans)
    # A tie between several answers counts as a wrong prediction.
    if len(freq_ans) > 1:
        final_prediction = ""
    else:
        final_prediction = freq_ans[0]
    if final_prediction == item["answer"]:
        ctr += 1

print(ctr / len(test_samples))
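The voting rule and both edge cases can be checked on toy inputs. The sketch below folds the mode computation and the tie handling into a single hypothetical function, `majority_vote`, and runs it on three made-up sets of five predictions: a clear winner, a two-way tie, and a case where every response failed validation (represented by empty strings).

```python
from collections import Counter

def majority_vote(predictions):
    """Return the single most frequent prediction, or "" on a tie or empty input."""
    if not predictions:
        return ""
    counts = Counter(predictions)
    max_freq = max(counts.values())
    modes = [p for p, c in counts.items() if c == max_freq]
    return modes[0] if len(modes) == 1 else ""

# Hypothetical ensemble outputs; "" marks a response that failed validation.
clear_winner = ["Vitamin C", "Vitamin C", "Vitamin D", "Vitamin C", ""]
tie = ["Vitamin C", "Vitamin C", "Vitamin D", "Vitamin D", "Vitamin A"]
all_failed = ["", "", "", "", ""]

results = [majority_vote(p) for p in (clear_winner, tie, all_failed)]
```

Only the first case yields a usable prediction; the tie and the all-failed case both produce an empty string, which can never equal a ground-truth answer and is therefore scored as wrong.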

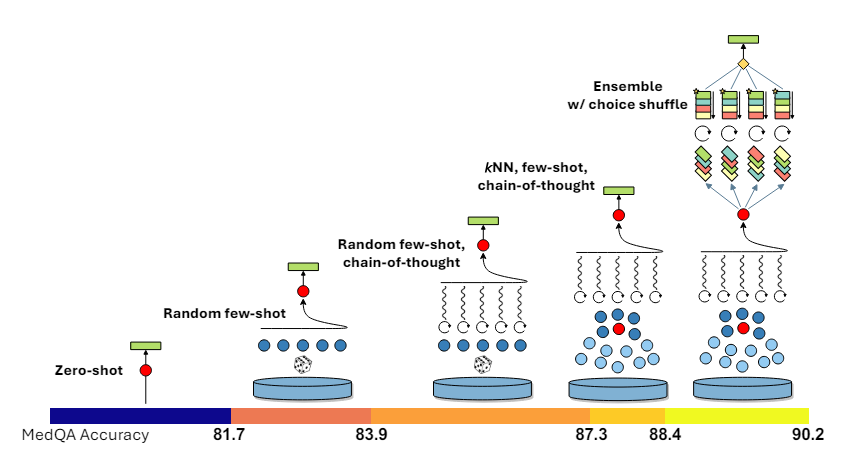

We evaluate the performance of Medprompt with GPT-4o in terms of accuracy on the MedQA test subset. Additionally, we benchmark the performance of zero-shot prompting, random few-shot prompting, and random few-shot with CoT prompting.

We observe that Medprompt and random few-shot CoT prompting outperform the zero-shot and few-shot prompting baselines. Surprisingly, however, random few-shot CoT outperforms our Medprompt implementation. This could be due to a couple of reasons:

- The original Medprompt paper benchmarked the performance of GPT-4. We observe that GPT-4o significantly outperforms GPT-4T and GPT-4 on various text benchmarks (https://openai.com/index/hello-gpt-4o/), indicating that Medprompt may have a smaller effect on a stronger model like GPT-4o.

- We restrict our evaluation to 500 questions subsampled from MedQA. The Medprompt paper evaluates other medical MCQA datasets and the full version of MedQA. Evaluating GPT-4o on the complete versions of the datasets could give a better picture of the overall performance.

Medprompt is an interesting framework for creating sophisticated prompting pipelines, particularly for adapting a generalist LLM to a specific domain without the need for fine-tuning. It also highlights the considerations involved in deciding between prompting and fine-tuning for various use cases. Exploring how far prompting can be pushed to enhance LLM performance is important, as it offers a resource- and cost-efficient alternative to fine-tuning.

[1] Nori, H., Lee, Y. T., Zhang, S., Carignan, D., Edgar, R., Fusi, N., … & Horvitz, E. (2023). Can generalist foundation models outcompete special-purpose tuning? Case study in medicine. arXiv preprint arXiv:2311.16452. (https://arxiv.org/abs/2311.16452)

[2] Wei, J., Wang, X., Schuurmans, D., Bosma, M., Xia, F., Chi, E., … & Zhou, D. (2022). Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824–24837. (https://openreview.net/pdf?id=_VjQlMeSB_J)

[3] Gekhman, Z., Yona, G., Aharoni, R., Eyal, M., Feder, A., Reichart, R., & Herzig, J. (2024). Does Fine-Tuning LLMs on New Knowledge Encourage Hallucinations?. arXiv preprint arXiv:2405.05904. (https://arxiv.org/abs/2405.05904)

[4] Singhal, K., Azizi, S., Tu, T., Mahdavi, S. S., Wei, J., Chung, H. W., … & Natarajan, V. (2023). Large language models encode clinical knowledge. Nature, 620(7972), 172–180. (https://www.nature.com/articles/s41586-023-06291-2)

[5] Singhal, K., Tu, T., Gottweis, J., Sayres, R., Wulczyn, E., Hou, L., … & Natarajan, V. (2023). Towards expert-level medical question answering with large language models. arXiv preprint arXiv:2305.09617. (https://arxiv.org/abs/2305.09617)

[6] Jin, D., Pan, E., Oufattole, N., Weng, W. H., Fang, H., & Szolovits, P. (2021). What disease does this patient have? A large-scale open domain question answering dataset from medical exams. Applied Sciences, 11(14), 6421. (https://arxiv.org/abs/2009.13081) (Original dataset is released under an MIT License)