Vitech uses Amazon Bedrock to revolutionize information access with AI-powered chatbot

This post is co-written with Murthy Palla and Madesh Subbanna from Vitech.

Vitech is a global provider of cloud-centric benefit and investment administration software. Vitech helps group insurance, pension fund administration, and investment clients expand their offerings and capabilities, streamline their operations, and gain analytical insights. To serve its customers, Vitech maintains a repository of information that includes product documentation (user guides, standard operating procedures, runbooks), which is currently scattered across multiple internal platforms (for example, Confluence sites and SharePoint folders). The lack of a centralized and easily navigable knowledge system led to several issues, including:

- Low productivity due to the lack of an efficient retrieval system, which often led to information overload

- Inconsistent information access, because there was no single, unified source of truth

To address these challenges, Vitech used generative artificial intelligence (AI) with Amazon Bedrock to build VitechIQ, an AI-powered chatbot that gives Vitech employees access to an internal repository of documentation.

For customers who want to build an AI-driven chatbot that interacts with an internal repository of documents, AWS offers a fully managed capability, Knowledge Bases for Amazon Bedrock, that can implement the entire Retrieval Augmented Generation (RAG) workflow, from ingestion to retrieval and prompt augmentation, without having to build custom integrations to data sources or manage data flows. Alternatively, open source technologies like LangChain can be used to orchestrate the end-to-end flow.

In this post, we walk through the architectural components, the evaluation criteria for the components Vitech selected, and the process flow of user interaction within VitechIQ.

Technical components and evaluation criteria

In this section, we discuss the key technical components and the evaluation criteria for the components involved in building the solution.

Hosting large language models

Vitech explored the option of hosting large language models (LLMs) using Amazon SageMaker. Vitech needed a fully managed and secure experience to host LLMs and eliminate the undifferentiated heavy lifting associated with hosting third-party (3P) models. Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI startups and Amazon available through an API, so you can choose from a wide range of FMs to find the model that is best suited for your use case. With the serverless experience of Amazon Bedrock, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into applications using AWS tools without having to manage any infrastructure. Vitech therefore selected Amazon Bedrock to host LLMs and integrate seamlessly with its existing infrastructure.
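To make the API-based access concrete, here is a minimal sketch of calling a Claude model through the Amazon Bedrock runtime with boto3's `invoke_model`. The model ID, region, and `max_tokens` value are illustrative assumptions, not Vitech's configuration; the request body follows the Anthropic Messages format that Claude 3 models on Bedrock accept.

```python
"""Minimal sketch: calling an Anthropic Claude model on Amazon Bedrock.

Assumptions (not from the post): model ID, region, and max_tokens are placeholders.
"""
import json

# Hypothetical choice of model; use whichever Claude model is enabled in your account.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_claude_request(question: str, max_tokens: int = 512) -> str:
    """Build a JSON body in the Anthropic Messages format used by Claude on Bedrock."""
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": question}],
    }
    return json.dumps(body)


def ask_claude(question: str, region: str = "us-east-1") -> str:
    """Send the request through the Bedrock runtime API and return the answer text."""
    import boto3  # imported lazily so the request builder works without AWS credentials

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_request(question),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # The Messages API returns a list of content blocks; take the first text block.
    return payload["content"][0]["text"]
```

Because Bedrock is serverless, there is no endpoint to provision: the only infrastructure-facing detail in the sketch is the boto3 client.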

Retrieval Augmented Generation vs. fine-tuning

Off-the-shelf LLMs have no understanding of Vitech’s processes and flows, making it necessary to augment them with Vitech’s knowledge base. Fine-tuning would allow Vitech to train the model on a small sample set so that it responds using Vitech’s vocabulary. However, for this use case, the complexity and cost associated with fine-tuning were not warranted. Instead, Vitech opted for Retrieval Augmented Generation (RAG), in which a semantic search over vector embeddings retrieves relevant passages and supplies them to the LLM as context, so the chatbot can give users more relevant answers.

Data store

Vitech’s product documentation is largely available in .pdf format, making it the standard format used by VitechIQ. Where a document is available in another format, users preprocess it and convert it to .pdf. These documents are uploaded to and stored in Amazon Simple Storage Service (Amazon S3), which serves as the centralized data store.

Data chunking

Chunking is the process of breaking down large text documents into smaller, more manageable segments (such as paragraphs or sections). Vitech chose a recursive chunking method that divides text dynamically based on its inherent structure, such as chapters and sections, which yields a more natural division of the text. A chunk size of 1,000 tokens with a 200-token overlap provided the best results.
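The following is a simplified, dependency-free sketch of recursive chunking with overlap, in the spirit of the LangChain `RecursiveCharacterTextSplitter` that VitechIQ uses. It assumes whitespace-separated words as a stand-in for tokens; note that the LangChain splitter measures length in characters by default unless a token-based length function is supplied. Unlike LangChain, this version rejoins pieces with single spaces rather than preserving the original separators.

```python
from typing import Callable, List

# Mirrors LangChain's default separator order (minus the final character-level split).
SEPARATORS = ["\n\n", "\n", " "]


def word_count(text: str) -> int:
    """Rough stand-in for a tokenizer; real token counts depend on the model."""
    return len(text.split())


def split_recursive(text: str, chunk_size: int, separators: List[str] = SEPARATORS,
                    length: Callable[[str], int] = word_count) -> List[str]:
    """Split on the coarsest separator first, recursing only into pieces still too long."""
    if length(text) <= chunk_size or not separators:
        return [text]
    sep, finer = separators[0], separators[1:]
    pieces: List[str] = []
    for part in text.split(sep):
        if not part.strip():
            continue  # skip empty fragments between consecutive separators
        if length(part) <= chunk_size:
            pieces.append(part)
        else:
            pieces.extend(split_recursive(part, chunk_size, finer, length))
    return pieces


def merge_with_overlap(pieces: List[str], chunk_size: int, overlap: int,
                       length: Callable[[str], int] = word_count) -> List[str]:
    """Greedily pack pieces into chunks, carrying up to `overlap` units into the next chunk."""
    chunks: List[str] = []
    window: List[str] = []
    for piece in pieces:
        total = sum(length(p) for p in window)
        if window and total + length(piece) > chunk_size:
            chunks.append(" ".join(window))
            # Drop leading pieces until the remainder fits the overlap budget
            # and leaves room for the incoming piece.
            while window and (total > overlap or total + length(piece) > chunk_size):
                total -= length(window.pop(0))
        window.append(piece)
    if window:
        chunks.append(" ".join(window))
    return chunks


def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> List[str]:
    """Recursive split followed by overlapping merge (defaults match the post's 1,000/200)."""
    return merge_with_overlap(split_recursive(text, chunk_size), chunk_size, overlap)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.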

Large language models

VitechIQ uses two key models to address the business challenge of providing efficient and accurate information retrieval:

- Vector embedding – This process converts the documents into a numerical representation that captures semantic relationships (similar documents are represented by vectors that are closer to each other), allowing for an efficient search. Vitech explored several vector embedding models and selected the Amazon Titan Embeddings text model offered by Amazon Bedrock.

- Question answering – The core functionality of VitechIQ is to provide concise and trustworthy answers to user queries based on the retrieved context. Vitech chose the Anthropic Claude model, available from Amazon Bedrock, for this purpose. Its high token limit of 200,000 (approximately 150,000 words) allows the model to process extensive context and maintain awareness of the ongoing conversation, enabling it to provide more accurate and relevant responses. Additionally, VitechIQ includes metadata from the vector database (for example, document URLs) in the model’s output, providing users with source attribution and enhancing trust in the generated answers.
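To illustrate what "closer to each other" means, here is a sketch that requests an embedding from a Titan Embeddings text model through Bedrock and compares vectors with cosine similarity. The model ID `amazon.titan-embed-text-v1` and the region are assumptions; the request body uses the `inputText` field and the response carries an `embedding` list.

```python
import json
import math
from typing import List

TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"  # assumed model version


def build_titan_embedding_request(text: str) -> str:
    """Titan Embeddings text models take a JSON body with an inputText field."""
    return json.dumps({"inputText": text})


def embed(text: str, region: str = "us-east-1") -> List[float]:
    """Return the embedding vector for `text` (requires AWS credentials)."""
    import boto3  # lazy import: only needed when actually calling Bedrock

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=TITAN_EMBED_MODEL_ID,
        body=build_titan_embedding_request(text),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """1.0 means same direction; values near 0 mean the vectors are unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0
```

Two paraphrases of the same question should score noticeably higher against each other than against an unrelated passage, which is what makes semantic search work.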

Prompt engineering

Prompt engineering is crucial for the knowledge retrieval system. The prompt guides the LLM on how to respond and interact based on the user’s question, and it also helps ground the model. As part of prompt engineering, VitechIQ configured the prompt with a set of instructions that tell the LLM to keep conversations relevant and avoid discriminatory remarks, and that guide it on how to respond to open-ended conversations. The following is an example of a prompt used in VitechIQ:
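The prompt text itself is not reproduced in this post. As a purely hypothetical illustration of the kinds of instructions described above (stay on topic, avoid discriminatory remarks, handle open-ended questions, answer only from the retrieved context), a template might look like the following; none of this wording comes from VitechIQ. The sketch uses `string.Template` to stay dependency-free; LangChain’s `PromptTemplate` expresses the same idea with `{curly}` placeholders.

```python
from string import Template

# Hypothetical prompt wording written for illustration; not VitechIQ's actual prompt.
QA_PROMPT = Template(
    "You are an assistant that answers questions about internal product documentation.\n"
    "Answer only from the context below. If the context does not contain the answer, "
    "say that you don't know instead of guessing.\n"
    "Keep the conversation on topic, stay professional, and do not make "
    "discriminatory remarks.\n"
    "If the question is open-ended, ask a clarifying question.\n\n"
    "Conversation so far:\n$history\n\n"
    "Context:\n$context\n\n"
    "Question: $question\n"
    "Answer:"
)


def render_prompt(question: str, context: str, history: str = "") -> str:
    """Fill the template; substitute() raises KeyError if a placeholder is left unfilled."""
    return QA_PROMPT.substitute(
        question=question, context=context, history=history or "(none)"
    )
```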

Vector store

Vitech explored vector stores like OpenSearch and Redis. However, Vitech has expertise in handling and managing Amazon Aurora PostgreSQL-Compatible Edition databases for its enterprise applications. Amazon Aurora PostgreSQL supports the open source pgvector extension for processing vector embeddings, and Amazon Aurora Optimized Reads offers a cost-effective and performant option. These factors led to the selection of Amazon Aurora PostgreSQL as the store for vector embeddings.
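For readers unfamiliar with pgvector, the following sketch shows the SQL it adds, held in Python strings for use with a psycopg-style driver (`%s` placeholders). The `doc_chunks` table and its columns are hypothetical, not VitechIQ’s schema; LangChain’s `PGVector` class creates and manages its own tables. The 1,536-dimension column assumes the Titan Embeddings G1 - Text model.

```python
from typing import List, Tuple

# Hypothetical table and column names; the post does not describe VitechIQ's schema.
SCHEMA_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS doc_chunks (
    id         bigserial PRIMARY KEY,
    content    text NOT NULL,
    source_url text,           -- kept for source attribution in answers
    embedding  vector(1536)    -- dimension assumes Titan Embeddings G1 - Text
);
"""

# <=> is pgvector's cosine-distance operator (0 means identical direction).
SEARCH_SQL = """
SELECT content, source_url, embedding <=> %s::vector AS distance
FROM doc_chunks
ORDER BY distance
LIMIT %s;
"""


def to_pgvector_literal(embedding: List[float]) -> str:
    """pgvector accepts vectors as text of the form '[0.1,0.2,0.3]'."""
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


def search_params(query_embedding: List[float], k: int = 10) -> Tuple[str, int]:
    """Parameters for SEARCH_SQL, e.g. cursor.execute(SEARCH_SQL, search_params(vec))."""
    return (to_pgvector_literal(query_embedding), k)
```

Keeping vectors next to ordinary columns such as `source_url` is part of the appeal of pgvector for a team already running PostgreSQL: metadata and embeddings live in one table and one backup strategy.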

Processing framework

LangChain offered seamless machine learning (ML) model integration, allowing Vitech to build custom automated AI components and stay model agnostic. LangChain’s out-of-the-box chain and agent libraries let Vitech adopt features like prompt templates and memory management, accelerating the overall development process. Vitech used Python virtual environments to freeze a stable version of the LangChain dependencies and move it seamlessly from development to production environments. With the LangChain ConversationBufferMemory library, VitechIQ stores conversation information in a stateful session to keep the conversation relevant. The state is deleted after a configurable idle timeout elapses.
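The post does not show how the idle timeout is implemented; ConversationBufferMemory itself simply keeps the running transcript. The following plain-Python sketch (an assumption, not Vitech’s code) shows one way to keep per-session buffers and evict them after a period of inactivity. `SessionStore`, its 30-minute default, and the injectable clock are hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Session:
    turns: List[Tuple[str, str]] = field(default_factory=list)  # (question, answer)
    last_active: float = 0.0


class SessionStore:
    """Per-user conversation buffers that expire after `idle_timeout` seconds of inactivity."""

    def __init__(self, idle_timeout: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic):
        self.idle_timeout = idle_timeout
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._sessions: Dict[str, Session] = {}

    def _evict_idle(self) -> None:
        now = self.clock()
        expired = [sid for sid, s in self._sessions.items()
                   if now - s.last_active > self.idle_timeout]
        for sid in expired:
            del self._sessions[sid]

    def add_turn(self, session_id: str, question: str, answer: str) -> None:
        """Record one exchange and mark the session as active."""
        self._evict_idle()
        session = self._sessions.setdefault(session_id, Session())
        session.turns.append((question, answer))
        session.last_active = self.clock()

    def history(self, session_id: str) -> str:
        """Return the buffered conversation as text, or '' if the session expired."""
        self._evict_idle()
        session = self._sessions.get(session_id)
        if session is None:
            return ""
        return "\n".join(f"Human: {q}\nAI: {a}" for q, a in session.turns)
```

The string returned by `history()` is what would be placed in the prompt’s conversation slot on the next turn.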

Several LangChain libraries were used across VitechIQ; the following are a few notable libraries and their usage:

- langchain.llms (Bedrock) – Interact with LLMs provided by Amazon Bedrock

- langchain.embeddings (BedrockEmbeddings) – Create embeddings

- langchain.chains.question_answering (load_qa_chain) – Perform Q&A

- langchain.prompts (PromptTemplate) – Create prompt templates

- langchain.vectorstores.pgvector (PGVector) – Create vector embeddings and perform semantic search

- langchain.text_splitter (RecursiveCharacterTextSplitter) – Split documents into chunks

- langchain.memory (ConversationBufferMemory) – Manage conversational memory

Vitech used the following versions:

User interface

The VitechIQ user interface is built using Streamlit. Streamlit, a Python library already used widely at Vitech, makes it quick to build interactive, easily deployable applications. The Streamlit app is hosted on Amazon Elastic Compute Cloud (Amazon EC2) behind Elastic Load Balancing (ELB), allowing Vitech to scale as traffic increases.

Optimizing search results

To reduce hallucination and optimize token usage and search results, VitechIQ performs semantic search using the value k in the search function (similarity_search_with_score). VitechIQ limits the embedding search to the top 10 results and then keeps only records with a score lower than 0.48 (a lower score indicates a closer match), thereby identifying the most relevant content and eliminating noise.
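A small, library-free sketch of that two-stage filter follows. It assumes results arrive as `(document, score)` pairs, as returned by LangChain’s `similarity_search_with_score(query, k=10)`, and that the score is a distance (lower is closer), which is how the PGVector store reports it. The function name is hypothetical.

```python
from typing import List, Sequence, Tuple, TypeVar

Doc = TypeVar("Doc")


def filter_results(results: Sequence[Tuple[Doc, float]], k: int = 10,
                   max_distance: float = 0.48) -> List[Tuple[Doc, float]]:
    """Keep the k closest hits, then drop any whose distance is not below max_distance."""
    top_k = sorted(results, key=lambda r: r[1])[:k]
    return [(doc, score) for doc, score in top_k if score < max_distance]
```

In practice this would be applied as `filter_results(vectorstore.similarity_search_with_score(question, k=10))`; the threshold trades recall for precision, so fewer but more relevant chunks reach the prompt.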

Amazon Bedrock VPC interface endpoints

Vitech wanted to make sure that all communication stays private and doesn’t traverse the public internet. VitechIQ uses an Amazon Bedrock VPC interface endpoint so that connectivity is secured end to end.

Monitoring

VitechIQ application logs are sent to Amazon CloudWatch, which gives Vitech management insight into current usage and topic trends. Additionally, Vitech uses Amazon Bedrock runtime metrics to measure latency, performance, and the number of tokens.
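Amazon Bedrock publishes runtime metrics to CloudWatch under the `AWS/Bedrock` namespace, including `InvocationLatency`, `InputTokenCount`, and `OutputTokenCount`, broken down by the `ModelId` dimension. The following sketch builds a `GetMetricStatistics` query for one of them; the time window, period, and statistics are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List


def bedrock_metric_query(metric_name: str, model_id: str, hours: int = 24,
                         period_seconds: int = 3600) -> Dict[str, Any]:
    """Build arguments for CloudWatch GetMetricStatistics on an Amazon Bedrock runtime metric."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Bedrock",
        "MetricName": metric_name,  # e.g. InvocationLatency, InputTokenCount, OutputTokenCount
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": period_seconds,
        "Statistics": ["Average", "Maximum", "Sum"],
    }


def fetch_metric(metric_name: str, model_id: str, region: str = "us-east-1") -> List[Dict[str, Any]]:
    """Return the datapoints for the query (requires AWS credentials)."""
    import boto3  # lazy import so the query builder works without AWS

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    return cloudwatch.get_metric_statistics(
        **bedrock_metric_query(metric_name, model_id)
    )["Datapoints"]
```

`Sum` is the useful statistic for token counts (total tokens per period), while `Average` and `Maximum` suit latency.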

“We noted that the combination of Amazon Bedrock and Claude not only matched, but in some cases surpassed, in performance and quality and it conforms to Vitech security standards compared to what we saw with a competing generative AI solution.”

– Madesh Subbanna, VP Databases & Analytics at Vitech

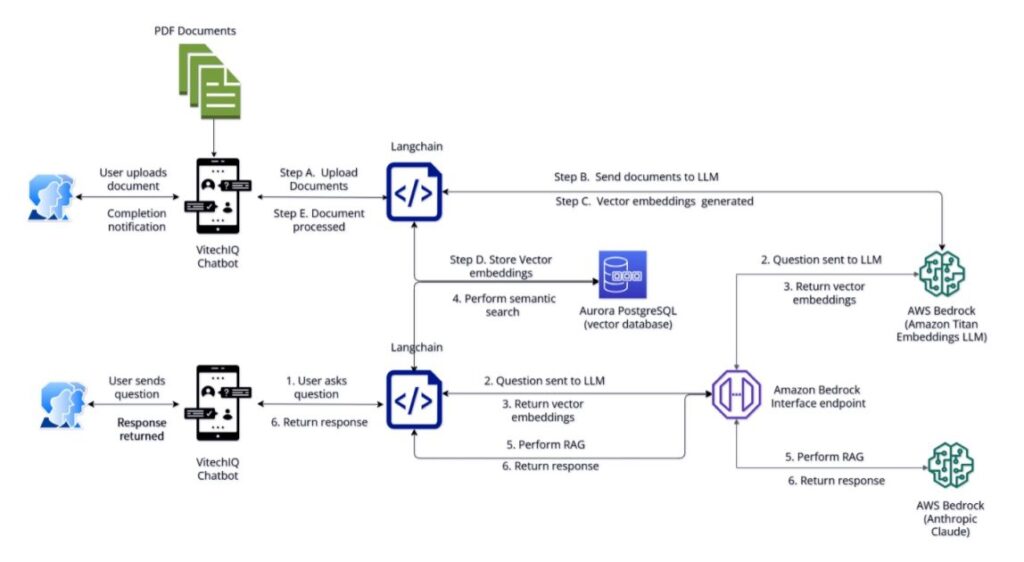

Solution overview

Let’s look at how all these components come together to create the end-user experience. The following diagram shows the solution architecture.

The VitechIQ user experience can be split into two process flows: document repository and knowledge retrieval.

Document repository flow

This step involves the curation and collection of the documents that will make up the knowledge base. Internally, Vitech stakeholders conduct due diligence to review and approve a document before it is uploaded to VitechIQ. For each document uploaded to VitechIQ, the user provides an internal reference link (Confluence or SharePoint) so that future revisions can be tracked and the most up-to-date information is available in VitechIQ. As new document versions become available, VitechIQ updates the embeddings so the recommendations remain relevant and up to date.

Each week, Vitech stakeholders manually review the documents and revisions that have been requested for upload. As a result, documents have a 1-week turnaround before they are available in VitechIQ for users.

The following screenshot illustrates the VitechIQ interface for uploading documents.

The upload process consists of the following steps:

- The domain stakeholder uploads the documents to VitechIQ.

- LangChain uses recursive chunking to parse the document and sends the chunks to the Amazon Titan Embeddings model.

- The Amazon Titan Embeddings model generates vector embeddings.

- These vector embeddings are stored in an Aurora PostgreSQL database.

- The user receives a notification of the success (or failure) of the upload.
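The upload steps above can be sketched as a single function with the chunker, embedder, and database writer passed in, so the same flow can be exercised without AWS. `ingest_document`, `ChunkRecord`, and the status messages are hypothetical; in VitechIQ these roles are filled by LangChain’s splitter, the Titan Embeddings model, and Aurora PostgreSQL. Storing the reference link alongside every chunk is what later lets an answer cite its source.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class ChunkRecord:
    content: str
    source_url: str          # internal Confluence/SharePoint link supplied at upload time
    embedding: List[float]


def ingest_document(text: str, source_url: str,
                    split: Callable[[str], List[str]],
                    embed: Callable[[str], List[float]],
                    store: Callable[[Sequence[ChunkRecord]], None]) -> str:
    """Chunk -> embed -> store, returning a status message for the uploader."""
    try:
        chunks = [c for c in split(text) if c.strip()]
        if not chunks:
            return "Upload failed: no extractable text"
        records = [ChunkRecord(c, source_url, embed(c)) for c in chunks]
        store(records)  # write all chunks only after every embedding succeeded
        return f"Upload succeeded: {len(records)} chunks indexed"
    except Exception as exc:  # report any step's failure back to the user
        return f"Upload failed: {exc}"
```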

Knowledge retrieval flow

In this flow, the user interacts with the VitechIQ chatbot, which provides a summarized and accurate response to their question. VitechIQ also provides source document attribution in its response (using the URL of the document uploaded in the previous process flow).

The following screenshot illustrates a user interaction with VitechIQ.

The process consists of the following steps:

- The user interacts with VitechIQ by asking a question in natural language.

- The question is sent through the Amazon Bedrock interface endpoint to the Amazon Titan Embeddings model.

- The Amazon Titan Embeddings model converts the question into vector embeddings.

- The vector embeddings are sent to Amazon Aurora PostgreSQL to perform a semantic search on the knowledge base documents.

- Using RAG, the prompt is enhanced with context and relevant documents, and then sent to Amazon Bedrock (Anthropic Claude) for summarization.

- Amazon Bedrock generates a summarized response according to the prompt instructions and sends the response back to the user.

As the user asks more questions, the conversation context is passed back into the prompt, making the model aware of the ongoing conversation.
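Putting the retrieval steps together, the following is a minimal sketch of one question-answering turn with each external service injected as a function. The names, the prompt layout, and the reuse of the k=10 / 0.48 filter described earlier are assumptions for illustration, not VitechIQ’s implementation.

```python
from typing import Callable, List, Sequence, Tuple

Hit = Tuple[str, str, float]  # (chunk text, source URL, distance)


def answer_question(question: str, history: str,
                    embed: Callable[[str], List[float]],
                    search: Callable[[List[float], int], Sequence[Hit]],
                    generate: Callable[[str], str],
                    k: int = 10, max_distance: float = 0.48) -> Tuple[str, List[str]]:
    """Embed the question, retrieve context, augment the prompt, and generate an answer."""
    ranked = sorted(search(embed(question), k), key=lambda h: h[2])[:k]
    hits = [h for h in ranked if h[2] < max_distance]
    context = "\n\n".join(text for text, _, _ in hits)
    prompt = (f"Conversation so far:\n{history or '(none)'}\n\n"
              f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")
    answer = generate(prompt)
    # De-duplicate source URLs while keeping retrieval order, for attribution.
    sources = list(dict.fromkeys(url for _, url, _ in hits))
    return answer, sources
```

After each turn, the caller would append the question and answer to the session history so that the next prompt carries the ongoing conversation.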

Benefits offered by VitechIQ

By using the power of generative AI, VitechIQ has successfully addressed the critical challenges of information fragmentation and inaccessibility. The following are the key achievements and innovative impact of VitechIQ:

- Centralized knowledge hub – VitechIQ streamlines information retrieval, resulting in a reduction of over 50% in inquiries to product teams.

- Enhanced productivity and efficiency – Users get quick and accurate access to information. VitechIQ is used by 50 users daily on average, which amounts to approximately 2,000 queries per month.

- Continuous evolution and learning – Vitech is able to expand its knowledge base to new domains. Vitech’s API documentation (spanning 35,000 documents with a document size of up to 3 GB) was uploaded to VitechIQ, enabling development teams to search the documentation seamlessly.

Conclusion

VitechIQ stands as a testament to the company’s commitment to harnessing the power of AI for operational excellence, and to the capabilities offered by Amazon Bedrock. As Vitech iterates on the solution, top-priority roadmap items include adopting the LangChain Expression Language (LCEL), modernizing the Streamlit application to run on Docker, and automating the document upload process. Additionally, Vitech is exploring opportunities to build a similar capability for its external customers. The success of VitechIQ is a stepping stone for further technological advancements, setting a new standard for how technology can augment human capabilities in the corporate world. Vitech continues to innovate by partnering with AWS on programs like the Generative AI Innovation Center and identifying more customer-facing implementations. To learn more, visit Amazon Bedrock.

About the Authors

Samit Kumbhani is an AWS Senior Solutions Architect in the New York City area with over 18 years of experience. He currently collaborates with Independent Software Vendors (ISVs) to build highly scalable, innovative, and secure cloud solutions. Outside of work, Samit enjoys playing cricket, traveling, and biking.

Murthy Palla is a Technical Manager at Vitech with 9 years of extensive experience in data architecture and engineering. Holding certifications as an AWS Solutions Architect and an AI/ML Engineer from the University of Texas at Austin, he specializes in advanced Python, databases like Oracle and PostgreSQL, and Snowflake. In his current role, Murthy leads R&D initiatives to develop innovative data lake and warehousing solutions. His expertise extends to applying generative AI in business applications, driving technological advancement and operational excellence within Vitech.

Madesh Subbanna is the Vice President at Vitech, where he leads the database team and has been a foundational figure since the early stages of the company. With two decades of technical and leadership experience, he has significantly contributed to the evolution of Vitech’s architecture, performance, and product design. Madesh has been instrumental in integrating advanced database solutions, DataInsight, AI, and ML technologies into the V3locity platform. His role goes beyond technical contributions to encompass project management and strategic planning with senior management to ensure seamless project delivery and innovation. Madesh’s career at Vitech, marked by a series of progressive leadership positions, reflects his deep commitment to technological excellence and client success.

Ameer Hakme is an AWS Solutions Architect based in Pennsylvania. He collaborates with Independent Software Vendors (ISVs) in the Northeast region, helping them design and build scalable and modern platforms on the AWS Cloud. An expert in AI/ML and generative AI, Ameer helps customers unlock the potential of these cutting-edge technologies. In his leisure time, he enjoys riding his bike and spending quality time with his family.
