Implementing Knowledge Bases for Amazon Bedrock in support of GDPR (right to be forgotten) requests

The General Data Protection Regulation (GDPR) right to be forgotten, also known as the right to erasure, gives individuals the right to request the deletion of their personally identifiable information (PII) data held by organizations. This means that individuals can ask companies to erase their personal data from their systems and from the systems of any third parties with whom the data was shared.

Amazon Bedrock is a fully managed service that makes foundational models (FMs) from leading artificial intelligence (AI) companies and Amazon available through an API, so you can choose from a wide range of FMs to find the model that’s best suited for your use case. With the Amazon Bedrock serverless experience, you can get started quickly, privately customize FMs with your own data, and integrate and deploy them into your applications using the Amazon Web Services (AWS) tools without having to manage infrastructure.

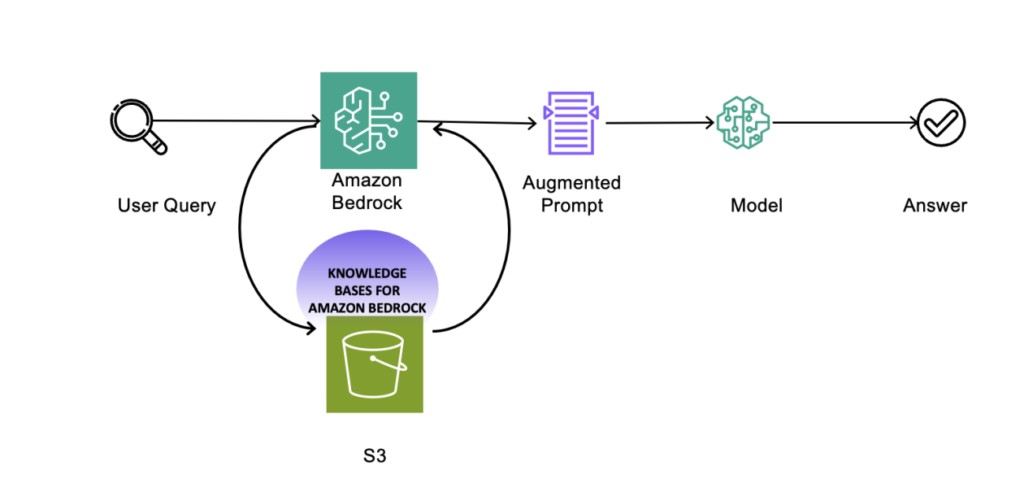

FMs are trained on vast quantities of data, allowing them to be used to answer questions on a variety of subjects. However, if you want to use an FM to answer questions about your private data that you have stored in your Amazon Simple Storage Service (Amazon S3) bucket, you need to use a technique known as Retrieval Augmented Generation (RAG) to provide relevant answers for your customers.

Knowledge Bases for Amazon Bedrock is a fully managed RAG capability that allows you to customize FM responses with contextual and relevant company data. Knowledge Bases for Amazon Bedrock automates the end-to-end RAG workflow, including ingestion, retrieval, prompt augmentation, and citations, so you don’t have to write custom code to integrate data sources and manage queries.

Many organizations are building generative AI applications and powering them with RAG-based architectures to help avoid hallucinations and respond to requests based on their company-owned proprietary data, including personally identifiable information (PII) data.

In this post, we discuss the challenges associated with RAG architectures in responding to GDPR right to be forgotten requests, build a GDPR compliant RAG architecture pattern using Knowledge Bases for Amazon Bedrock, and share actionable best practices for organizations to respond to the right to be forgotten request requirements of the GDPR for data stored in vector datastores.

Who does GDPR apply to?

The GDPR applies to all organizations established in the EU and to organizations, whether or not established in the EU, that process the personal data of EU individuals in connection with either the offering of goods or services to data subjects in the EU or the monitoring of behavior that takes place within the EU.

The following are key terms used when discussing the GDPR:

- Data subject – An identifiable living person and resident in the EU or UK, on whom personal data is held by a business, organization, or service provider.

- Processor – The entity that processes the data on the instructions of the controller (for example, AWS).

- Controller – The entity that determines the purposes and means of processing personal data (for example, an AWS customer).

- Personal data – Information relating to an identified or identifiable person, including names, email addresses, and phone numbers.

Challenges and considerations with RAG architectures

A typical RAG architecture at a high level involves three stages:

- Source data pre-processing

- Generating embeddings using an embedding LLM

- Storing the embeddings in a vector store.

Challenges associated with these stages involve not knowing all touchpoints where data is persisted, maintaining a data pre-processing pipeline for document chunking, choosing a chunking strategy, vector database, and indexing strategy, generating embeddings, and any manual steps to purge data from vector stores and keep it in sync with source data. The following diagram depicts a high-level RAG architecture.

Because Knowledge Bases for Amazon Bedrock is a fully managed RAG solution, no customer data is stored within the Amazon Bedrock service account permanently, and request details without prompts or responses are logged in AWS CloudTrail. Model providers can’t access customer data in the deployment account. Crucially, if you delete data from the source S3 bucket, it’s automatically removed from the underlying vector store after syncing the knowledge base.

However, be aware that the service account keeps the data for eight days; after that, it will be purged from the service account. This data is maintained securely with server-side encryption (SSE) using a service key, and optionally using a customer-provided key. If the data needs to be purged immediately from the service account, you can contact the AWS team to do so. This streamlined approach simplifies GDPR right to be forgotten compliance for generative AI applications.

When you call knowledge bases using the RetrieveAndGenerate API, Knowledge Bases for Amazon Bedrock takes care of managing sessions and memory on your behalf. This data is SSE encrypted by default, and optionally encrypted using a customer-managed key (CMK). Data to manage sessions is automatically purged after 24 hours.

The following solution discusses a reference architecture pattern using Knowledge Bases for Amazon Bedrock and best practices to support your data subject’s right to be forgotten request in your organization.

Solution approach: Simplified RAG implementation using Knowledge Bases for Amazon Bedrock

With a knowledge base, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for RAG. Access to additional data helps the model generate more relevant, context-specific, and accurate responses without continuously retraining the FM. Information retrieved from the knowledge base comes with source attribution to improve transparency and minimize hallucinations.

Knowledge Bases for Amazon Bedrock manages the end-to-end RAG workflow for you. You specify the location of your data, select an embedding model to convert the data into vector embeddings, and have Knowledge Bases for Amazon Bedrock create a vector store in your account to store the vector data. When you select this option (available only in the console), Knowledge Bases for Amazon Bedrock creates a vector index in Amazon OpenSearch Serverless in your account, removing the need to do so yourself.

Vector embeddings include the numeric representations of text data within your documents. Each embedding aims to capture the semantic or contextual meaning of the data. Amazon Bedrock takes care of creating, storing, managing, and updating your embeddings in the vector store, and it verifies that your data is in sync with your vector store. The following diagram depicts a simplified architecture using Knowledge Bases for Amazon Bedrock:

Prerequisites to create a knowledge base

Before you can create a knowledge base, you must complete the following prerequisites.

Data preparation

Before creating a knowledge base using Knowledge Bases for Amazon Bedrock, it’s essential to prepare the data to augment the FM in a RAG implementation. In this example, we used a simple curated .csv file that contains customer PII information that needs to be deleted to respond to a GDPR right to be forgotten request by the data subject.

Configure an S3 bucket

You’ll need to create an S3 bucket and make it private. Amazon S3 provides several encryption options for securing the data at rest and in transit. Optionally, you can enable bucket versioning as a mechanism to check multiple versions of the same file. For this example, we created a bucket with versioning enabled with the name bedrock-kb-demo-gdpr. After you create the bucket, upload the .csv file to the bucket. The following screenshot shows what the upload looks like when it’s complete.
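The same bucket setup can be scripted. The following is a minimal sketch, assuming an S3 client created with `boto3.client("s3")` is passed in; the helper name `configure_private_bucket` is hypothetical and not part of the original walkthrough. It creates the bucket, blocks public access, and enables versioning.

```python
def configure_private_bucket(s3_client, bucket_name, region=None):
    """Create a private, versioned bucket.

    s3_client is expected to be an S3 client such as boto3.client("s3").
    The function name and signature are illustrative assumptions.
    """
    create_args = {"Bucket": bucket_name}
    # Outside us-east-1, S3 requires an explicit location constraint.
    if region and region != "us-east-1":
        create_args["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**create_args)

    # Block every form of public access so the bucket stays private.
    s3_client.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration={
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    )

    # Optional in the post: keep multiple versions of the same file.
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )
```

After the bucket exists, the .csv file could be uploaded with the client's `upload_file` method, for example `s3_client.upload_file("customers.csv", "bedrock-kb-demo-gdpr", "customers.csv")`, where the file name is a placeholder.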

Select the uploaded file and, from the Actions dropdown, choose the Query with S3 Select option to query the .csv data using SQL and check that the data was loaded correctly.

The query in the following screenshot displays the first five records from the .csv file. In this demonstration, let’s assume that you need to remove the data related to a particular customer, for example, the customer information pertaining to the email address artwork@venere.org.

Steps to create a knowledge base

With the prerequisites in place, the next step is to use Knowledge Bases for Amazon Bedrock to create a knowledge base.

- On the Amazon Bedrock console, select Knowledge Base under Orchestration in the left navigation pane.

- Choose Create Knowledge base.

- For Knowledge base name, enter a name.

- For Runtime role, select Create and use a new service role, enter a service role name, and choose Next.

- In the next stage, to configure the data source, enter a data source name and point to the S3 bucket created in the prerequisites.

- Expand the Advanced settings section and select Use default KMS key and then select Default chunking from Chunking strategy. Choose Next.

- Choose the embeddings model in the next screen. In this example we chose Titan Embeddings G1 - Text v1.2.

- For Vector database, choose Quick create a new vector store – Recommended to set up an OpenSearch Serverless vector store on your behalf. Leave all the other options as default.

- Choose Review and Create and select Create knowledge base in the next screen, which completes the knowledge base setup.

- Review the summary page, select the Data source, and choose Sync. This begins the process of converting the data stored in the S3 bucket into vector embeddings in your OpenSearch Serverless vector collection.

- Note: The syncing operation can take minutes to hours to complete, based on the size of the dataset stored in your S3 bucket. During the sync operation, Amazon Bedrock downloads documents in your S3 bucket, divides them into chunks (we opted for the default strategy in this post), generates the vector embedding, and stores the embedding in your OpenSearch Serverless vector collection. When the initial sync is complete, the data source status will change to Ready.

- Now you can use your knowledge base. We use the Test knowledge base feature of Amazon Bedrock, choose the Anthropic Claude 2.1 model, and ask it a question about a sample customer.
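The Sync step in the list above can also be started outside the console. The following is a minimal sketch, assuming a client created with `boto3.client("bedrock-agent")` is passed in; the helper name `sync_data_source`, the polling interval, and the poll limit are illustrative assumptions. It starts an ingestion job and polls until the job reaches a terminal status.

```python
import time


def sync_data_source(agent_client, knowledge_base_id, data_source_id,
                     poll_seconds=10, max_polls=360):
    """Start a knowledge base sync (ingestion job) and wait for it to finish.

    agent_client is expected to be boto3.client("bedrock-agent").
    The helper name and polling defaults are assumptions for illustration.
    """
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )["ingestionJob"]

    for _ in range(max_polls):
        # Stop polling once the job is no longer starting or in progress.
        if job["status"] in ("COMPLETE", "FAILED", "STOPPED"):
            return job
        time.sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]

    raise TimeoutError("Ingestion job did not finish within the polling window")
```

The knowledge base ID and data source ID are shown on the knowledge base details page in the console. A job that returns with status `FAILED` should be investigated before the knowledge base is treated as in sync with the bucket.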

We’ve demonstrated how to use Knowledge Bases for Amazon Bedrock and conversationally query the data using the knowledge base test feature. The query operation can also be performed programmatically through the knowledge base API and AWS SDK integrations from within a generative AI application.
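As one illustration of the programmatic route, the following sketch calls the RetrieveAndGenerate API through a client created with `boto3.client("bedrock-agent-runtime")`. The helper name `ask_knowledge_base` is hypothetical, and the knowledge base ID and model ARN are values you would supply from your own account.

```python
def ask_knowledge_base(runtime_client, knowledge_base_id, model_arn, question):
    """Query a knowledge base and return the answer with its S3 source URIs.

    runtime_client is expected to be boto3.client("bedrock-agent-runtime").
    The helper name is an assumption for illustration.
    """
    response = runtime_client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    # Collect the S3 objects cited as sources for the generated answer.
    sources = [
        ref["location"]["s3Location"]["uri"]
        for citation in response.get("citations", [])
        for ref in citation.get("retrievedReferences", [])
        if "s3Location" in ref.get("location", {})
    ]
    return response["output"]["text"], sources
```

Returning the cited source URIs alongside the answer makes it easier to trace which file in the bucket a piece of personal data came from.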

Delete customer information

In the sample prompt, we were able to retrieve the customer’s PII information, which was stored as part of the source dataset, using the email address. To respond to GDPR right to be forgotten requests, the next sequence of steps demonstrates how customer data deletion at source deletes the information from the generative AI application powered by Knowledge Bases for Amazon Bedrock.

- Delete the customer information from the source .csv file and re-upload the file to the S3 bucket. The following snapshot of querying the .csv file using S3 Select shows that the customer information associated with the email attribute artwork@venere.org was not returned in the results.

- Re-sync the knowledge base data source from the Amazon Bedrock console.

- After the sync operation is complete and the data source status is Ready, test the knowledge base again using the prompt used earlier to verify whether the customer PII information is returned in the response.

We were able to successfully demonstrate that after the customer PII information was removed from the source in the S3 bucket, the related entries from the knowledge base are automatically deleted after the sync operation. We can also confirm that the associated vector embeddings stored in the OpenSearch Serverless collection were cleared by querying from the OpenSearch dashboard using dev tools.
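The first deletion step, removing one customer's rows from the source file before re-uploading it, can be sketched in plain Python. This is an illustration only: the helper name `remove_subject_rows` and the column name `email` are assumptions, since the post does not show the .csv header, and the sketch expects a well-formed file in which every row has the same columns as the header.

```python
import csv
import io


def remove_subject_rows(csv_text, email, email_column="email"):
    """Return (cleaned_csv_text, removed_count) with the subject's rows dropped.

    The column name "email" is an assumption; adjust it to the real header.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    if email_column not in (reader.fieldnames or []):
        raise ValueError(f"Column {email_column!r} not found in CSV header")

    target = email.strip().lower()
    kept, removed = [], 0
    for row in reader:
        # Compare case-insensitively so differently cased copies are caught.
        if (row[email_column] or "").strip().lower() == target:
            removed += 1
        else:
            kept.append(row)

    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(kept)
    return out.getvalue(), removed
```

Recording the returned `removed` count gives a simple check that the request matched at least one row. Because the example bucket has versioning enabled, earlier object versions still contain the removed rows until those versions are deleted or expire, so they need to be part of the erasure plan as well.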

Note: In some RAG-based architectures, session history will be persisted in an external database such as Amazon DynamoDB. It’s important to evaluate whether this session history contains PII data and develop a plan to remove the data if necessary.

Audit tracking

To support GDPR compliance efforts, organizations should consider implementing an audit control framework to record right to be forgotten requests. This will help with your audit requests and provide the ability to roll back in case of accidental deletions observed during the quality assurance process. It’s important to maintain the list of users and systems that might be impacted during this process to maintain effective communication. Also consider storing the metadata of the files being loaded in your knowledge bases for effective tracking. Example columns include Knowledge base name, File Name, Date of sync, Modified User, PII Check, Delete requested by, and so on. Amazon Bedrock will write API actions to AWS CloudTrail, which can also be used for audit tracking.
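One way to picture the tracking metadata described above is a small record type whose fields mirror the example columns. This is a sketch under stated assumptions: the class name `ErasureAuditRecord`, the helper `append_audit_record`, and the choice of CSV as the storage format are all hypothetical, and a real audit store would more likely be a database table.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class ErasureAuditRecord:
    """One tracked file load or erasure event (field names follow the example columns)."""
    knowledge_base_name: str
    file_name: str
    date_of_sync: str          # ISO 8601 timestamp of the sync
    modified_user: str
    pii_check: bool            # whether the file was checked for PII
    delete_requested_by: str   # for example a request or ticket ID


def append_audit_record(stream, record, write_header=False):
    """Write the record as one CSV row to a text stream."""
    writer = csv.DictWriter(
        stream,
        fieldnames=[f.name for f in fields(ErasureAuditRecord)],
        lineterminator="\n",
    )
    if write_header:
        writer.writeheader()
    writer.writerow(asdict(record))


# Usage: write a header and one record to an in-memory buffer.
buffer = io.StringIO()
append_audit_record(
    buffer,
    ErasureAuditRecord(
        knowledge_base_name="kb-demo",
        file_name="customers.csv",
        date_of_sync="2024-01-01T00:00:00+00:00",
        modified_user="data-steward",
        pii_check=True,
        delete_requested_by="request-0001",
    ),
    write_header=True,
)
```

Referring to the request by an ID rather than by the data subject's email keeps the audit trail itself from becoming another store of the personal data that was erased.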

Some customers might need to persist the Amazon CloudWatch Logs to support their internal policies. By default, request details without prompts or responses are logged in CloudTrail and Amazon CloudWatch. However, customers can enable model invocation logs, which can store PII information. You can help safeguard sensitive data that’s ingested by CloudWatch Logs by using log group data protection policies. These policies let you audit and mask sensitive data that appears in log events ingested by the log groups in your account. When you create a data protection policy, sensitive data that matches the data identifiers (for example, PII) you’ve selected is masked at egress points, including CloudWatch Logs Insights, metric filters, and subscription filters. Only users who have the logs:Unmask IAM permission can view unmasked data. You can also use custom data identifiers to create data identifiers tailored to your specific use case. There are many methods customers can employ to detect and purge such data. Full implementation details are beyond the scope of this post.
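To make the data protection policy idea more concrete, the following sketch builds a policy document with one audit statement and one masking statement, then applies it to a log group. It assumes a client created with `boto3.client("logs")`; the helper names are hypothetical, and the document layout reflects our understanding of the CloudWatch Logs policy syntax, so it should be checked against the current CloudWatch Logs documentation before use.

```python
import json


def build_data_protection_policy(identifier_names, name="mask-pii"):
    """Build a log group data protection policy as a dict.

    identifier_names are managed data identifier names such as "EmailAddress".
    The layout is an assumption to verify against the CloudWatch Logs docs.
    """
    arns = [
        f"arn:aws:dataprotection::aws:data-identifier/{identifier}"
        for identifier in identifier_names
    ]
    return {
        "Name": name,
        "Description": "Audit and mask PII in model invocation logs",
        "Version": "2021-06-01",
        "Statement": [
            # Audit statement: report findings for matching data.
            {
                "Sid": "audit",
                "DataIdentifier": arns,
                "Operation": {"Audit": {"FindingsDestination": {}}},
            },
            # De-identify statement: mask matching data at egress points.
            {
                "Sid": "redact",
                "DataIdentifier": arns,
                "Operation": {"Deidentify": {"MaskConfig": {}}},
            },
        ],
    }


def apply_data_protection_policy(logs_client, log_group_name, policy):
    """Attach the policy to a log group using a CloudWatch Logs client."""
    return logs_client.put_data_protection_policy(
        logGroupIdentifier=log_group_name,
        policyDocument=json.dumps(policy),
    )
```

A call such as `apply_data_protection_policy(logs_client, "my-invocation-log-group", build_data_protection_policy(["EmailAddress"]))` would mask email addresses for readers who lack the logs:Unmask permission; the log group name here is a placeholder.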

Data discovery and findability

Findability is an important step of the process. Organizations need mechanisms to find the data under consideration efficiently and quickly so they can respond on time. You can refer to the FAIR blog and 5 Actionable steps to GDPR Compliance. In this example, you can use Amazon Macie to identify the PII data in S3.

Backup and restore

Data from underlying vector stores can be transferred, exported, or copied to different AWS services or outside of the AWS cloud. Organizations should have an effective governance process to detect and remove data to align with the GDPR compliance requirement. However, this is beyond the scope of this post. It’s the responsibility of the customer to remove the data from the underlying backups. It’s good practice to keep the retention period at 29 days (if applicable) so that the backups are cleared after 30 days. Organizations can also set the backup schedule to a certain date (for example, the first of every month). If the policy requires you to remove the data from the backup immediately, you can take a snapshot of the vector store after the deletion of required PII data and then purge the existing backup.

Communication

It’s important to communicate with the users and processes that might be impacted by this deletion. For example, if the application is powered by single sign-on (SSO) using an identity store such as AWS IAM Identity Center or an Okta user profile, then that information can be used for managing the stakeholder communications.

Security controls

Maintaining security is of great importance in GDPR compliance. By implementing robust security measures, organizations can help protect personal data from unauthorized access, inadvertent access, and misuse, thereby helping maintain the privacy rights of individuals. AWS offers a comprehensive suite of services and features that can help support GDPR compliance and enhance security measures. To learn more about the shared responsibility between AWS and customers for security and compliance, see the AWS shared responsibility model. The shared responsibility model is a useful approach to illustrate the different responsibilities of AWS (as a data processor or sub processor) and its customers (as either data controllers or data processors) under the GDPR.

AWS offers a GDPR-compliant AWS Data Processing Addendum (AWS DPA), which enables you to comply with GDPR contractual obligations. The AWS DPA is incorporated into the AWS Service Terms.

Article 32 of the GDPR requires that organizations must “…implement appropriate technical and organizational measures to ensure a level of security appropriate to the risk, including …the pseudonymization and encryption of personal data[…].” In addition, organizations must “safeguard against the unauthorized disclosure of or access to personal data.” See the Navigating GDPR Compliance on AWS whitepaper for more details.

Conclusion

We encourage you to take charge of your data privacy today. Prioritizing GDPR compliance and data privacy not only strengthens trust, but can also build customer loyalty and safeguard personal information in the digital era. If you need assistance or guidance, reach out to an AWS representative. AWS has teams of Enterprise Support Representatives, Professional Services Consultants, and other staff to help with GDPR questions. You can contact us with questions. To learn more about GDPR compliance when using AWS services, see the General Data Protection Regulation (GDPR) Center.

Disclaimer: The information provided above is not legal advice. It is intended to showcase commonly followed best practices. It is important to consult with your organization’s privacy officer or legal counsel and determine appropriate solutions.

About the Authors

Yadukishore Tatavarthi is a Senior Partner Solutions Architect supporting Healthcare and life science customers at Amazon Web Services. He has been helping customers over the last 20 years in building enterprise data strategies, advising customers on Generative AI, cloud implementations, migrations, reference architecture creation, data modeling best practices, and data lake/warehouse architectures.

Krishna Prasad is a Senior Solutions Architect in the Strategic Accounts Solutions Architecture team at AWS. He works with customers to help solve their unique business and technical challenges, providing guidance in different focus areas like distributed compute, security, containers, serverless, artificial intelligence (AI), and machine learning (ML).

Rajakumar Sampathkumar is a Principal Technical Account Manager at AWS, providing customer guidance on business-technology alignment and supporting the reinvention of their cloud operation models and processes. He is passionate about cloud and machine learning. Raj is also a machine learning specialist and works with AWS customers to design, deploy, and manage their AWS workloads and architectures.
