Going beyond AI assistants: Examples from Amazon.com reinventing industries with generative AI

Generative AI is transforming business operations through a range of applications, including conversational assistants such as Amazon's Rufus and Amazon Seller Assistant. Some of the most impactful generative AI applications, however, run autonomously behind the scenes. This capability lets businesses transform their operations, data processing, and content creation at scale. These non-conversational implementations often take the form of agentic workflows powered by large language models (LLMs). They carry out specific business objectives across industries without direct user interaction.

Non-conversational applications have distinct advantages, such as higher latency tolerance, batch processing, and caching. Because they operate autonomously, they also need stronger guardrails and more thorough quality assurance than conversational applications, which benefit from real-time user feedback and supervision.

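This post examines four diverse Amazon.com examples of such generative AI applications:

- Creating high-quality product listings on Amazon.com
- Generative AI-powered prescription processing in Amazon Pharmacy
- Generative AI-powered customer review highlights
- Amazon Ads AI-powered creative image and video generation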

Each case study highlights different aspects of implementing non-conversational generative AI applications, from technical architecture to operational considerations. Throughout these examples, you will learn how the AWS suite of services, including Amazon Bedrock and Amazon SageMaker, is key to their success. Finally, we list key learnings shared across these use cases.

Creating high-quality product listings on Amazon.com

Product listings with complete details help customers make informed purchase decisions. Traditionally, selling partners entered dozens of attributes per product by hand. The new generative AI solution, launched in 2024, changes this process. It proactively gathers product information from brand websites and other sources to improve the customer experience across many product categories.

Generative AI simplifies the selling partner experience. Partners can provide information in various formats, such as URLs, product images, or spreadsheets, and the system automatically translates it into the required structure and format. Over 900,000 selling partners have used it, and nearly 80% of generated listing drafts were accepted with minimal edits. AI-generated content provides comprehensive product details that improve clarity and accuracy, which can contribute to product discoverability in customer searches.

For new listings, the workflow starts with selling partners providing initial information. The system then draws on multiple information sources to generate complete listings, including titles, descriptions, and detailed attributes. Generated listings are shared with selling partners for approval or editing.

For existing listings, the system identifies products that can be enriched with additional data.

Data integration and processing for a large variety of outputs

The Amazon team built robust connectors for internal and external sources with LLM-friendly APIs. These connectors use Amazon Bedrock and other AWS services to integrate seamlessly with Amazon.com backend systems.

A key challenge is synthesizing diverse data into cohesive listings across more than 50 attributes, both textual and numerical. LLMs might not perform well with such complex, varied data, so they need specific control mechanisms and instructions to interpret ecommerce concepts accurately. For example, an LLM might interpret "capacity" for a knife block as its dimensions rather than its number of slots, or read "Fit Wear" as a style description instead of a brand name. The team relied extensively on prompt engineering and fine-tuning to address these cases.
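One lightweight control mechanism is to spell out, inside the prompt, what each ambiguous attribute means for a given product type. The following Python sketch assumes a hypothetical glossary (`ATTRIBUTE_GLOSSARY`) and brand list (`KNOWN_BRANDS`). It only assembles prompt text and does not call any model or AWS API.

```python
from typing import Dict

# Hypothetical glossary: per product type, define what an ambiguous
# attribute name means so the model does not have to guess.
ATTRIBUTE_GLOSSARY: Dict[str, Dict[str, str]] = {
    "knife_block": {
        "capacity": "number of knife slots (integer), NOT physical dimensions",
    },
}

# Hypothetical brand list, used to stop the model from reading a brand
# name such as "Fit Wear" as a style description.
KNOWN_BRANDS = {"Fit Wear"}


def build_extraction_prompt(product_type: str, source_text: str) -> str:
    """Assemble an attribute-extraction prompt with explicit definitions."""
    lines = [
        f"Extract catalog attributes for a product of type '{product_type}'.",
        "Use these attribute definitions exactly:",
    ]
    for name, definition in ATTRIBUTE_GLOSSARY.get(product_type, {}).items():
        lines.append(f"- {name}: {definition}")
    # Only mention brands that actually appear in the source text.
    brands_in_text = sorted(b for b in KNOWN_BRANDS if b in source_text)
    if brands_in_text:
        lines.append(
            "Treat these phrases as brand names, not style descriptions: "
            + ", ".join(brands_in_text)
        )
    lines.append("Return JSON only. Source text:")
    lines.append(source_text)
    return "\n".join(lines)


prompt = build_extraction_prompt("knife_block", "Fit Wear 15-slot knife block")
```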

Generation and validation with LLMs

Generated product listings must be complete and correct. To support this, the solution uses a multistep workflow in which LLMs both generate and validate attributes. This dual-LLM approach helps prevent hallucinations, which matters most for safety hazards and technical specifications. The team also developed self-reflection techniques so that the generation and validation steps complement each other.
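The generate-then-validate pattern with feedback can be sketched as a bounded loop. The validator's critique becomes input to the next generation attempt. In this minimal Python sketch, `generate` and `validate` are plain callables standing in for two LLM calls, such as two separate Amazon Bedrock invocations. The function names, the three-round limit, and the stub models are illustrative assumptions, not the team's implementation.

```python
from typing import Callable, Dict, List, Optional

Attributes = Dict[str, str]
# Stand-ins for two LLM calls: one generates attributes, one critiques them.
Generator = Callable[[Attributes, List[str]], Attributes]
Validator = Callable[[Attributes, Attributes], List[str]]


def generate_with_validation(source: Attributes, generate: Generator,
                             validate: Validator,
                             max_rounds: int = 3) -> Optional[Attributes]:
    """Generate a draft, have a validator critique it, retry with the critique."""
    feedback: List[str] = []
    for _ in range(max_rounds):
        draft = generate(source, feedback)
        issues = validate(source, draft)
        if not issues:
            return draft      # validator found nothing to fix
        feedback = issues     # self-reflection: feed the critique back in
    return None               # unresolved after max_rounds: escalate to a human


# Hypothetical stub "models" for illustration only.
def stub_generate(source: Attributes, feedback: List[str]) -> Attributes:
    capacity = "12 inches"  # first guess confuses capacity with dimensions
    if any("capacity" in issue for issue in feedback):
        capacity = f"{source['slots']} slots"
    return {"title": source["title"], "capacity": capacity}


def stub_validate(source: Attributes, draft: Attributes) -> List[str]:
    if draft["capacity"] != f"{source['slots']} slots":
        return ["capacity must equal the number of slots in the source data"]
    return []


result = generate_with_validation({"title": "Knife block", "slots": "15"},
                                  stub_generate, stub_validate)
```

Returning `None` instead of the last draft is a deliberate choice in this sketch. An unvalidated draft goes to review rather than to publication.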

The following figure illustrates the generation and validation processes, both performed by LLMs.

Figure 1. Product listing creation workflow

Multi-layer quality assurance with human feedback

Human feedback is central to the solution's quality assurance. Amazon.com experts perform the initial evaluation, and selling partners then accept or edit the listings. This keeps output quality high and supports ongoing improvement of the AI models.

The quality assurance process includes automated testing that combines ML-based, algorithmic, and LLM-based evaluations. Listings that fail are regenerated, and listings that pass move on to further testing. Causal inference models identify the underlying features that affect listing performance and reveal opportunities for enrichment. Listings are published only after they pass quality checks and the selling partner accepts them, so customers receive accurate and complete product information.
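The gating logic described above can be expressed as a small decision function: automated checks run first, failures go back for regeneration, and publication happens only after selling partner acceptance. The checks below are hypothetical rule-based examples. In the described system they could be ML-based, algorithmic, or LLM-based evaluators, and the causal inference analysis is not modeled here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Listing = Dict[str, str]
Check = Callable[[Listing], bool]


@dataclass
class Decision:
    status: str  # "needs_regeneration", "rejected_by_partner", or "published"
    failed_checks: List[str] = field(default_factory=list)


def review_listing(listing: Listing, checks: Dict[str, Check],
                   partner_accepts: Callable[[Listing], bool]) -> Decision:
    # Layer 1: automated checks; any failure sends the listing back.
    failed = [name for name, check in checks.items() if not check(listing)]
    if failed:
        return Decision("needs_regeneration", failed)
    # Layer 2: the selling partner accepts or rejects the draft.
    if not partner_accepts(listing):
        return Decision("rejected_by_partner")
    return Decision("published")


# Hypothetical rule-based checks for illustration.
CHECKS: Dict[str, Check] = {
    "has_title": lambda item: bool(item.get("title")),
    "title_under_200_chars": lambda item: len(item.get("title", "")) < 200,
    "capacity_is_numeric": lambda item: item.get("capacity", "").isdigit(),
}
```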

The following figure illustrates the path to production for product listing generation, including testing, evaluation, and monitoring.

Figure 2. Product listing testing and human-in-the-loop workflow

Application-level system optimization for accuracy and cost

Given the high standards for accuracy and completeness, the team took a systematic experimentation approach backed by an automated optimization system. This system explores combinations of LLMs, prompts, playbooks, workflows, and AI tools, iterating toward better business metrics, including cost. Through continuous evaluation and automated testing, the product listing generator balances performance, cost, and efficiency while staying adaptable to new AI developments. As a result, customers get high-quality product information, and selling partners get modern tools for creating listings efficiently.
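As a simplified illustration of searching over configurations, the following sketch scores every (model, prompt) pair as quality minus weighted cost and keeps the best one. The model labels, prompt variants, and evaluation numbers are made up for illustration. Exhaustive grid search is also an assumption: the actual optimization system may use a different search strategy and more dimensions, such as playbooks, workflows, and tools.

```python
from itertools import product
from typing import Callable, Iterable, Optional, Tuple

Config = Tuple[str, str]  # (model label, prompt variant label), both hypothetical


def best_config(models: Iterable[str], prompts: Iterable[str],
                evaluate: Callable[[str, str], Tuple[float, float]],
                cost_weight: float = 1.0) -> Tuple[Optional[Config], float]:
    """Score each (model, prompt) pair as quality - cost_weight * cost."""
    best, best_score = None, float("-inf")
    for model, prompt in product(models, prompts):
        quality, cost = evaluate(model, prompt)
        score = quality - cost_weight * cost
        if score > best_score:
            best, best_score = (model, prompt), score
    return best, best_score


# Hypothetical offline evaluation results: (quality score, normalized cost).
RESULTS = {
    ("large-model", "prompt-a"): (0.92, 0.30),
    ("large-model", "prompt-b"): (0.93, 0.32),
    ("small-model", "prompt-a"): (0.85, 0.05),
    ("small-model", "prompt-b"): (0.90, 0.06),
}


def lookup(model: str, prompt: str) -> Tuple[float, float]:
    return RESULTS[(model, prompt)]


best, score = best_config(["large-model", "small-model"],
                          ["prompt-a", "prompt-b"], lookup)
```

Setting `cost_weight=0.0` in this example selects the larger model instead. The weight is what encodes the business trade-off between accuracy and cost.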

Generative AI-powered prescription processing in Amazon Pharmacy

Amazon Pharmacy builds on the human-AI hybrid workflows from the seller listing example and shows how the same principles apply in an industry regulated by the Health Insurance Portability and Accountability Act (HIPAA). We previously covered a conversational assistant for patient care specialists in the post Learn how Amazon Pharmacy created their LLM-based chat-bot using Amazon SageMaker. Here we focus on automated prescription processing, which is also described in The life of a prescription at Amazon Pharmacy and in a research paper published in Nature Magazine.

At Amazon Pharmacy, we built an AI system on Amazon Bedrock and SageMaker that helps pharmacy technicians process medication directions more accurately and efficiently. The solution pairs human experts with LLMs in generation and validation roles to make medication instructions for our patients more precise.

Agentic workflow design for healthcare accuracy

The prescription processing system combines human expertise (data entry technicians and pharmacists) with AI support that provides direction suggestions and feedback. The workflow is shown in the following diagram. First, a preprocessor grounded in pharmacy knowledge standardizes the raw prescription text in Amazon DynamoDB. Then fine-tuned small language models (SLMs) on SageMaker identify critical components such as dosage and frequency.

Figure 3. (a) Data entry technician and pharmacist workflow with two GenAI modules, (b) suggestion module workflow, and (c) flagging module workflow

The system integrates experts such as data entry technicians and pharmacists, and generative AI makes the overall workflow faster and more accurate so we can better serve our patients. Through the suggestion module, a direction assembly system with safety guardrails generates suggested directions that data entry technicians use to create their typed directions. The flagging module then flags or corrects errors and enforces additional safety measures, returning this as feedback to the data entry technician. The technician finalizes accurate, safe typed directions for pharmacists, who either provide feedback or release the directions to the downstream service.
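To make the decomposition concrete, the following sketch chains three small steps. A preprocessor expands common prescription shorthand, an extractor pulls out dose and frequency, and a flagging check compares a technician's typed direction with the prescribed components. The abbreviation table and regular expressions are hypothetical rule-based stand-ins for the pharmacy knowledge base and the fine-tuned SLMs. This is an illustration of the pattern, not a clinical tool.

```python
import re
from typing import Dict, List, Optional

# Hypothetical shorthand table standing in for the pharmacy-knowledge preprocessor.
SIG_ABBREVIATIONS = {"tab": "tablet", "tabs": "tablets", "po": "by mouth",
                     "qd": "once daily", "bid": "twice daily",
                     "tid": "three times daily"}
FREQUENCIES = ("once daily", "twice daily", "three times daily")
DOSE_RE = re.compile(r"\b(\d+(?:\.\d+)?)\s+(?:tablet|capsule)s?\b")


def standardize(raw: str) -> str:
    """Step 1: expand sig abbreviations into plain-language text."""
    tokens = raw.lower().replace(",", " ").split()
    return " ".join(SIG_ABBREVIATIONS.get(tok, tok) for tok in tokens)


def extract_components(text: str) -> Dict[str, Optional[str]]:
    """Step 2: rule-based stand-in for an SLM that extracts dose and frequency."""
    dose = DOSE_RE.search(text)
    freq = next((f for f in FREQUENCIES if f in text), None)
    return {"dose": dose.group(1) if dose else None, "frequency": freq}


def flag_direction(prescribed: Dict[str, Optional[str]], typed: str) -> List[str]:
    """Step 3: flag disagreements between the typed direction and the prescription."""
    typed_parts = extract_components(typed.lower())
    flags = []
    for key in ("dose", "frequency"):
        if typed_parts[key] is None:
            flags.append(f"missing {key}")
        elif typed_parts[key] != prescribed[key]:
            flags.append(f"{key} mismatch: typed {typed_parts[key]}, "
                         f"prescribed {prescribed[key]}")
    return flags


prescribed = extract_components(standardize("Take 1 tab PO BID"))
```

Keeping each step small makes it possible to validate each one separately, which is the property the decomposed design relies on.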

A key feature of the solution is task decomposition. Engineers and scientists split the overall process into many steps, each handled by an individual module made of substeps. The team made extensive use of fine-tuned SLMs. The process also employs traditional ML techniques, such as named entity recognition (NER) and regression models that estimate final confidence. Confining SLMs and traditional ML to contained, well-defined steps significantly improved processing speed. Guardrails placed on specific steps kept safety standards rigorous.
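Final confidence estimation with a regression model can be illustrated with a logistic score over a few per-direction features. The feature names, coefficients, and 0.8 threshold below are hypothetical; a real model would be fit on historical outcomes rather than set by hand. In this sketch, the score only decides whether a suggestion is shown directly or flagged for extra attention. It does not replace the technician and pharmacist review described above.

```python
import math
from typing import Dict

# Hypothetical coefficients for illustration only; in practice these would be
# learned, for example from whether a pharmacist later edited the direction.
INTERCEPT = -1.0
COEFFICIENTS = {"extractor_agreement": 3.0, "num_flags": -1.5, "known_drug": 1.0}


def confidence(features: Dict[str, float]) -> float:
    """Logistic regression: sigmoid of intercept plus weighted feature sum."""
    z = INTERCEPT + sum(COEFFICIENTS[name] * value
                        for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def route(features: Dict[str, float], threshold: float = 0.8) -> str:
    """Show high-confidence suggestions; flag low-confidence ones for scrutiny."""
    return "show_suggestion" if confidence(features) >= threshold else "flag_for_review"
```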

The system consists of multiple well-defined substeps. Each subprocess is a specialized component that works semi-autonomously yet collaboratively toward the overall objective. With specific validations at each stage, this decomposed approach proved more effective than end-to-end solutions, and it also made fine-tuned SLMs practical. The team used AWS Fargate to orchestrate the workflow because it was already integrated with existing backend systems.

During product development, the team turned to Amazon Bedrock, which offered high-performing LLMs with easy-to-use features tailored to generative AI applications. SageMaker added a wider choice of LLMs, deeper customization, and support for traditional ML methods. To learn more about this technique, see How task decomposition and smaller LLMs can make AI more affordable and read the Amazon Pharmacy business case study.

Building a reliable application with guardrails and HITL

To comply with HIPAA standards and protect patient privacy, we implemented strict data governance practices. Alongside them, we used a hybrid approach that combines fine-tuned LLMs accessed through Amazon Bedrock APIs with Retrieval Augmented Generation (RAG) using Amazon OpenSearch Service. This combination enables efficient knowledge retrieval while keeping accuracy high for specific subtasks.
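The retrieval half of a RAG setup can be illustrated with a tiny keyword-overlap ranker that picks context snippets to prepend to the prompt. This is a deliberately simplified stand-in: Amazon OpenSearch Service would typically provide lexical (BM25) or vector search. The guideline snippets and function names are placeholders.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by shared-token count; drop those with no overlap."""
    q = Counter(tokenize(query))
    scored = []
    for doc in documents:
        d = Counter(tokenize(doc))
        overlap = sum(min(q[t], d[t]) for t in q)
        scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
    return [doc for score, doc in scored[:k] if score > 0]


def build_rag_prompt(query: str, documents: List[str]) -> str:
    """Prepend the retrieved snippets to the task as grounding context."""
    context = "\n".join(f"- {c}" for c in retrieve(query, documents))
    return f"Context:\n{context}\n\nTask: {query}"


# Placeholder knowledge snippets.
DOCS = [
    "Guideline A: abbreviation BID means twice daily.",
    "Guideline B: abbreviation TID means three times daily.",
    "Guideline C: store in a cool, dry place.",
]
```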

Managing LLM hallucinations is critical in healthcare, and it took more than fine-tuning on large datasets. Our solution adds domain-specific guardrails built on Amazon Bedrock Guardrails, complemented by human-in-the-loop (HITL) oversight, to make the system reliable.

The Amazon Pharmacy team continues to improve this system with real-time pharmacist feedback and support for more prescription formats. This balance of innovation, domain expertise, advanced AI services, and human oversight improves operational efficiency. It also keeps the AI system in its proper role of supporting healthcare professionals as they deliver optimal patient care.

Generative AI-powered customer review highlights

The previous example showed how Amazon Pharmacy integrates LLMs into real-time workflows for prescription processing. This next use case shows how similar techniques (SLMs, traditional ML, and careful workflow design) apply to offline batch inference at massive scale.

Amazon introduced AI-generated customer review highlights to process over 200 million product reviews and ratings each year. The feature distills common customer opinions into concise paragraphs that summarize positive, neutral, and negative feedback about products and their features. Shoppers can quickly grasp the consensus, and transparency is preserved because related customer reviews are linked and the original reviews remain available.

The system supports shopping decisions through an interface where customers can explore review highlights by selecting specific features, such as picture quality, remote functionality, or ease of installation for a Fire TV. Features are color-coded: green check marks for positive sentiment, orange minus signs for negative, and gray for neutral. Shoppers can therefore quickly identify product strengths and weaknesses based on verified purchase reviews. The following screenshot shows review highlights about noise level for a product.

Figure 4. An example of review highlights for a product.

A recipe for cost-effective use of LLMs in offline use cases

The team developed a cost-effective hybrid architecture that combines traditional ML methods with specialized SLMs. Traditional ML handles sentiment analysis and keyword extraction, while optimized SLMs handle complex text generation, improving both accuracy and processing efficiency. The following diagram shows how traditional ML and LLMs work together in the overall workflow.

Figure 5. Use of traditional ML and LLMs in a workflow.
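The division of labor in this workflow can be sketched as follows. A cheap per-sentence sentiment and keyword step aggregates opinions per feature, and only the compact aggregate is passed to the language model for text generation. Here the sentiment step is a hypothetical word-list classifier standing in for trained traditional ML models. The lexicons, feature names, and prompt wording are illustrative assumptions, and the SLM call itself is not shown.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Hypothetical lexicons standing in for trained sentiment and keyword models.
POSITIVE = {"great", "quiet", "easy", "sharp"}
NEGATIVE = {"loud", "noisy", "hard", "blurry"}
FEATURES = {"noise": {"quiet", "loud", "noisy", "noise"},
            "setup": {"setup", "install", "easy", "hard"}}


def analyze(review: str) -> Dict[str, str]:
    """Label each feature mentioned in a sentence with that sentence's sentiment."""
    results: Dict[str, str] = {}
    for sentence in re.split(r"[.!?]+", review.lower()):
        words = set(re.findall(r"[a-z]+", sentence))
        score = len(words & POSITIVE) - len(words & NEGATIVE)
        label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
        for feature, keywords in FEATURES.items():
            if words & keywords:
                results[feature] = label
    return results


def aggregate(reviews: List[str]) -> Dict[str, Dict[str, int]]:
    counts: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"positive": 0, "negative": 0, "neutral": 0})
    for review in reviews:
        for feature, label in analyze(review).items():
            counts[feature][label] += 1
    return dict(counts)


def build_summary_prompt(counts: Dict[str, Dict[str, int]]) -> str:
    """Only these compact statistics, not the raw reviews, reach the SLM."""
    lines = [f"{f}: {c['positive']} positive, {c['negative']} negative, "
             f"{c['neutral']} neutral" for f, c in sorted(counts.items())]
    return "Write a short, balanced highlight from these statistics:\n" + "\n".join(lines)


counts = aggregate(["Very quiet fan. Setup was easy.", "Too loud at night.",
                    "Setup was hard but it is quiet."])
```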

The feature uses SageMaker batch transform for asynchronous processing, which costs significantly less than real-time endpoints. To deliver a near-zero-latency experience, the solution caches extracted insights alongside existing reviews. This reduces wait times and lets many customers read the insights at the same time without additional computation. The system processes new reviews incrementally, updating insights without reprocessing the complete dataset. For performance and cost-effectiveness, the batch transform jobs run on Amazon Elastic Compute Cloud (Amazon EC2) Inf2 instances, which offer up to 40% better price-performance than alternatives.

With this approach, the team kept costs under control at the massive scale of reviews and products, and the solution remained both efficient and scalable.
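Incremental processing and caching can be sketched with a per-product state that remembers which reviews were already analyzed. In this hypothetical example, only new reviews trigger a model call during `ingest`, and reads through `get` are served from the cached highlight without computation. The class and method names are illustrative, and SageMaker batch transform itself is not modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class ProductInsights:
    seen_review_ids: Set[str] = field(default_factory=set)
    positive: int = 0
    negative: int = 0
    cached_highlight: str = ""


class IncrementalHighlights:
    def __init__(self, classify: Callable[[str], str]):
        self.classify = classify  # review text -> "positive" or "negative"
        self.store: Dict[str, ProductInsights] = {}
        self.model_calls = 0      # reviews actually sent through the model

    def ingest(self, product_id: str, reviews: Dict[str, str]) -> None:
        """Process only unseen reviews, then refresh the cached highlight."""
        state = self.store.setdefault(product_id, ProductInsights())
        new_ids = [rid for rid in reviews if rid not in state.seen_review_ids]
        for rid in new_ids:
            if self.classify(reviews[rid]) == "positive":
                state.positive += 1
            else:
                state.negative += 1
            state.seen_review_ids.add(rid)
            self.model_calls += 1
        if new_ids:
            state.cached_highlight = f"{state.positive} positive / {state.negative} negative"

    def get(self, product_id: str) -> str:
        """Serve the cached highlight; no model call at read time."""
        state = self.store.get(product_id)
        return state.cached_highlight if state else ""


cache = IncrementalHighlights(lambda text: "positive" if "good" in text else "negative")
cache.ingest("p1", {"r1": "good sound", "r2": "bad remote"})
cache.ingest("p1", {"r1": "good sound", "r2": "bad remote", "r3": "good value"})
```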

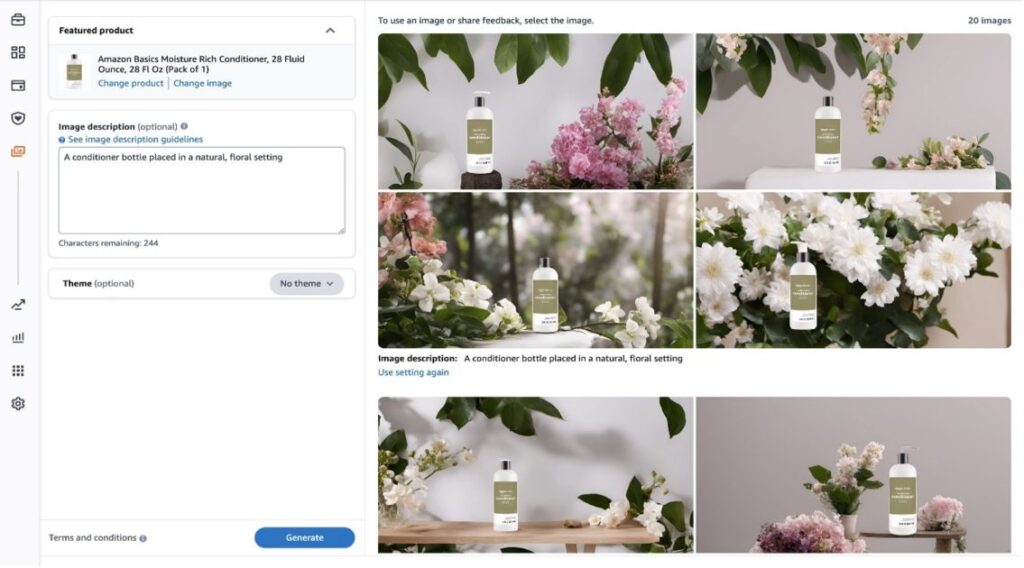

Amazon Ads AI-powered creative image and video generation

The previous examples were mostly text-centric. We now turn to multimodal generative AI with Amazon Ads creative content generation for sponsored ads. The solution can generate both images and videos, and this section shares details of each. Both capabilities use Amazon Nova creative content generation models at their core.

Working backward from customer needs, a March 2023 Amazon survey found that nearly 75% of advertisers who struggled with campaign success cited creative content generation as their main challenge. Many advertisers, particularly those without in-house capabilities or agency support, face significant barriers because producing quality visuals takes expertise and money. The Amazon Ads solution democratizes visual content creation and makes it accessible and efficient for advertisers of all sizes. The impact has been substantial. Advertisers using AI-generated images in Sponsored Brands campaigns saw click-through rates (CTR) of nearly 8% and submitted 88% more campaigns than non-users.

Last year, the AWS Machine Learning Blog published a post detailing the image generation solution. Since then, Amazon has adopted Amazon Nova Canvas as its foundation for creative image generation. Nova Canvas creates professional-grade images from text or image prompts and supports text-based editing along with controls for color scheme and layout.

In September 2024, the Amazon Ads team expanded the solution to create short-form video ads from product images. This feature uses foundation models available on Amazon Bedrock to give customers natural language control over visual style, pacing, camera motion, rotation, and zooming. It relies on an agentic workflow that first describes a video storyboard and then generates the content for the story. The following screenshot shows an example of creative image generation for product backgrounds on Amazon Ads.

Figure 6. Ads image generation example for a product.
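The two-stage agentic flow (first describe a storyboard, then generate content for each part of the story) can be sketched with a planning function and a rendering callback. Both are hypothetical stand-ins. `plan_storyboard` uses simple keyword rules where the real system would call a foundation model on Amazon Bedrock, and `render_clip` would be a video generation call.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Shot:
    description: str
    camera_motion: str  # e.g. "rotate", "zoom_in", "static"
    seconds: float


def plan_storyboard(product_name: str, style_request: str) -> List[Shot]:
    """Stage 1 (planning): turn a natural-language request into a shot list."""
    request = style_request.lower()
    motion = "rotate" if "rotate" in request else "static"
    shots = [Shot(f"{product_name} hero shot", motion, 2.0)]
    if "zoom" in request:
        shots.append(Shot(f"Close-up of {product_name} details", "zoom_in", 1.5))
    shots.append(Shot(f"{product_name} on a clean background", "static", 1.5))
    return shots


def generate_video(shots: List[Shot], render_clip: Callable[[Shot], str]) -> List[str]:
    """Stage 2 (generation): render each planned shot in order."""
    return [render_clip(shot) for shot in shots]


shots = plan_storyboard("Water bottle", "Slowly rotate, then zoom in")
clips = generate_video(shots, lambda s: f"clip:{s.camera_motion}")
```

Separating planning from rendering lets the storyboard be inspected or checked before any expensive generation call runs.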

As discussed in the original post, responsible AI is at the center of the solution. Amazon Nova creative models include built-in controls, such as watermarking and content moderation, that support safe and responsible AI use.

The solution uses AWS Step Functions with AWS Lambda functions for serverless orchestration of both image and video generation. Generated content is stored in Amazon Simple Storage Service (Amazon S3), with metadata in DynamoDB, and Amazon API Gateway gives customers access to the generation capabilities. The solution now uses Amazon Bedrock Guardrails and keeps its Amazon Rekognition and Amazon Comprehend integrations at various steps for additional safety checks. The following screenshot shows AI-generated creative videos in the Amazon Ads campaign builder.

Figure 7. Ads video generation for a product
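As an illustration of the Step Functions orchestration, the following sketch builds an Amazon States Language (ASL) definition in Python. It contains a Task state that generates a creative, a Task state that runs safety checks, a Choice state on the result, and either a storage Task or a Fail state. The Lambda ARNs, state names, and the `$.safe` field are hypothetical placeholders. The `check_transitions` helper is a local sanity check, not part of any AWS SDK.

```python
import json
from typing import Dict, List

# Placeholder ARN template: region, account ID, and function names are hypothetical.
LAMBDA_ARN = "arn:aws:lambda:us-east-1:123456789012:function:{}"

definition: Dict = {
    "Comment": "Sketch: generate an ad creative, run safety checks, then store it",
    "StartAt": "GenerateCreative",
    "States": {
        "GenerateCreative": {"Type": "Task",
                             "Resource": LAMBDA_ARN.format("generate-creative"),
                             "Next": "SafetyCheck"},
        "SafetyCheck": {"Type": "Task",
                        "Resource": LAMBDA_ARN.format("safety-check"),
                        "Next": "IsSafe"},
        "IsSafe": {"Type": "Choice",
                   "Choices": [{"Variable": "$.safe", "BooleanEquals": True,
                                "Next": "StoreCreative"}],
                   "Default": "RejectCreative"},
        "StoreCreative": {"Type": "Task",
                          "Resource": LAMBDA_ARN.format("store-creative"),
                          "End": True},
        "RejectCreative": {"Type": "Fail", "Error": "UnsafeCreative",
                           "Cause": "Safety check failed"},
    },
}


def check_transitions(defn: Dict) -> List[str]:
    """Return any transition targets that do not name an existing state."""
    states = defn["States"]
    targets = [defn["StartAt"]]
    for state in states.values():
        if "Next" in state:
            targets.append(state["Next"])
        targets.extend(choice["Next"] for choice in state.get("Choices", []))
        if "Default" in state:
            targets.append(state["Default"])
    return [t for t in targets if t not in states]


asl_json = json.dumps(definition, indent=2)  # JSON document for Step Functions
```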

Creating high-quality ad creatives at scale posed complex challenges. The generative AI model had to produce appealing, brand-appropriate images across diverse product categories and advertising contexts, while staying accessible to advertisers regardless of technical expertise. Quality assurance and improvement are fundamental to both the image and video generation capabilities. The system improves continually through extensive HITL processes enabled by Amazon SageMaker Ground Truth. The result is a powerful tool that transforms advertisers' creative process and makes high-quality visual content creation more accessible across product categories and contexts.

This is just the beginning of how Amazon Ads uses generative AI to help advertising customers create the content they need to meet their advertising goals. The solution shows that lowering creative barriers directly increases advertising activity while maintaining high standards for responsible AI use.

Key technical learnings and discussion

Non-conversational applications benefit from higher latency tolerance, which enables batch processing and caching. Because they run autonomously, they also need robust validation mechanisms and stronger guardrails. The following insights apply to both non-conversational and conversational AI implementations:

- Task decomposition and agentic workflows – Breaking complex problems into smaller components proved valuable across implementations. When domain experts decompose a problem deliberately, each subtask can use a specialized model. Amazon Pharmacy prescription processing shows this, with fine-tuned SLMs handling discrete tasks such as dosage identification. The strategy yields specialized agents with clear validation steps, which improves reliability and simplifies maintenance. The Amazon seller listing use case follows the same pattern with its multistep workflow of separate generation and validation processes. The review highlights use case, in turn, kept LLM use cost-effective and controlled by assigning preprocessing, and parts of the task that might otherwise fall to an LLM, to traditional ML.

- Hybrid architectures and model selection – Combining traditional ML with LLMs gives better control and cost-effectiveness than pure LLM approaches. Traditional ML excels at well-defined tasks, as the review highlights system shows with sentiment analysis and information extraction. Amazon teams chose large or small language models based on requirements, and they combined RAG with fine-tuning for domain-specific applications such as the Amazon Pharmacy implementation.

- Cost optimization strategies – Amazon teams achieved efficiency through batch processing, caching for high-volume operations, specialized hardware such as AWS Inferentia and AWS Trainium, and careful model selection. Review highlights shows how incremental processing reduces compute needs, and Amazon Ads used Amazon Nova foundation models (FMs) to create creative content cost-effectively.

- Quality assurance and control mechanisms – Quality control relies on domain-specific guardrails through Amazon Bedrock Guardrails and on multilayered validation that combines automated testing with human evaluation. In Amazon seller listings, dual-LLM generation and validation help prevent hallucinations, and self-reflection techniques improve accuracy. Amazon Nova creative FMs provide built-in responsible AI controls, complemented by continual A/B testing and performance measurement.

- HITL implementation – The HITL approach spans multiple layers, from expert evaluation by pharmacists to end-user feedback from selling partners. Amazon teams set up structured improvement workflows that balance automation and human oversight according to each domain's requirements and risk profile.

- Responsible AI and compliance – Responsible AI practices include content ingestion guardrails for regulated environments and compliance with regulations such as HIPAA. Amazon teams integrated content moderation into user-facing applications, kept review highlights transparent by linking to source reviews, and implemented data governance with monitoring to support quality and compliance.

These patterns enable scalable, reliable, and cost-effective generative AI solutions that maintain quality and responsibility standards. The implementations show that effective solutions need more than sophisticated models. They also need careful attention to architecture, operations, and governance, supported by AWS services and established practices.

Next steps

The Amazon.com examples in this post show how generative AI can create value beyond traditional conversational assistants. We invite you to follow these examples, or build your own solution, to discover how generative AI can reinvent your business or even your industry. To start the ideation process, visit the AWS generative AI use cases page.

These examples show that effective generative AI implementations often benefit from combining different types of models and workflows. To see which FMs AWS services support, refer to Supported foundation models in Amazon Bedrock and Amazon SageMaker JumpStart Foundation Models. We also suggest exploring Amazon Bedrock Flows, which can make it easier to build workflows. Trainium and Inferentia accelerators can also provide significant cost savings in these applications.

As our examples illustrate, agentic workflows have proven particularly valuable. We recommend exploring Amazon Bedrock Agents to build agentic workflows quickly.

Successful generative AI implementation goes beyond model selection. It is a complete software development process, from experimentation to application monitoring. To start building your foundation across these essential services, we invite you to explore Amazon QuickStart.

Conclusion

These examples show how generative AI extends beyond conversational assistants to drive innovation and efficiency across industries. Success comes from combining AWS services with strong engineering practices and business understanding. Ultimately, effective generative AI solutions focus on solving real business problems while maintaining high standards of quality and responsibility.

To learn more about how Amazon uses AI, refer to Artificial Intelligence in Amazon News.

About the Authors

Burak Gozluklu is a Principal AI/ML Specialist Solutions Architect and lead GenAI Scientist Architect for Amazon.com on AWS, based in Boston, MA. He helps strategic customers adopt AWS technologies, specifically generative AI solutions, to achieve their business goals. Burak has a PhD in Aerospace Engineering from METU, an MS in Systems Engineering, and a post-doc in system dynamics from MIT in Cambridge, MA. He maintains his connection to academia as a research affiliate at MIT. Outside of work, Burak is a yoga enthusiast.

Emilio Maldonado is a Senior leader at Amazon responsible for Product Information. His focus is building systems that scale e-commerce catalog metadata, organize all product attributes, and use GenAI to infer precise information that guides Sellers and Buyers as they interact with products. He is passionate about developing dynamic teams and forming partnerships. He holds a Bachelor of Science in C.S. from Tecnologico de Monterrey (ITESM) and an MBA from Wharton, University of Pennsylvania.

Wenchao Tong is a Sr. Principal Technologist at Amazon Ads in Palo Alto, CA, where he leads the development of GenAI applications for creative building and performance optimization. His work uses innovative AI technologies to improve creative performance and quality, helping customers build product and brand awareness and drive sales. Wenchao holds a Master's degree in Computer Science from Tongji University. Outside of work, he enjoys hiking, board games, and spending time with his family.

Alexandre Alves is a Sr. Principal Engineer at Amazon Health Services, specializing in ML, optimization, and distributed systems. He helps deliver wellness-forward health experiences.

Puneet Sahni is a Sr. Principal Engineer at Amazon. He works on improving the data quality of all products available in the Amazon catalog. He is passionate about using product data to improve our customer experiences. He has a Master's degree in Electrical Engineering from the Indian Institute of Technology (IIT) Bombay. Outside of work, he enjoys spending time with his young kids and traveling.

Vaughn Schermerhorn is a Director at Amazon, where he leads Buying Discovery and Analysis, spanning Customer Reviews, content moderation, and site navigation across Amazon's global marketplaces. He manages a multidisciplinary team of applied scientists, engineers, and product leaders focused on surfacing trustworthy customer insights through scalable ML models, multimodal information retrieval, and real-time system architecture. His team develops and operates large-scale distributed systems that power billions of shopping decisions daily. Vaughn holds degrees from Georgetown University and San Diego State University and has lived and worked in the U.S., Germany, and Argentina. Outside of work, he enjoys reading, travel, and time with his family.

Tarik Arici is a Principal Applied Scientist at Amazon Selection and Catalog Systems (ASCS), working on Catalog Quality Enhancement using GenAI workflows. He has a PhD in Electrical and Computer Engineering from Georgia Tech. Outside of work, Tarik enjoys swimming and biking.
