Demand Forecasting with Darts: A Tutorial | by Sandra E.G. | Jan, 2025

Have you gathered all the relevant data?

Let’s assume your company has provided you with a transactional database with sales of different products and different sale locations. This data is called panel data, which means that you will be working with many time series simultaneously.

The transactional database will probably have the following format: the date of the sale, the location identifier where the sale took place, the product identifier, the quantity, and probably the monetary cost. Depending on how this data is collected, it will be aggregated differently, by time (daily, weekly, monthly) and by group (by customer or by location and product).
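To make the aggregation step concrete, here is a minimal sketch with pandas. The table and its column names (`date`, `location_id`, `product_id`, `quantity`, `revenue`) are made up for illustration and are not the dataset used later in this tutorial.

```python
import pandas as pd

# Hypothetical transaction log: one row per sale (all names are illustrative).
transactions = pd.DataFrame({
    "date": pd.to_datetime(
        ["2024-01-03", "2024-01-17", "2024-01-20", "2024-02-02", "2024-02-09"]
    ),
    "location_id": ["A", "A", "B", "A", "B"],
    "product_id": ["P1", "P1", "P1", "P1", "P1"],
    "quantity": [5, 3, 2, 4, 6],
    "revenue": [10.0, 6.0, 4.0, 8.0, 12.0],
})

# Aggregate by time (first day of the month) and by group (location and product).
monthly = (
    transactions
    .assign(month=transactions["date"].dt.to_period("M").dt.to_timestamp())
    .groupby(["location_id", "product_id", "month"], as_index=False)
    .agg(quantity=("quantity", "sum"), revenue=("revenue", "sum"))
)
print(monthly)
```

Each (location, product) pair in `monthly` is one time series of the panel. Switching to weekly aggregation would only require a different period code in `to_period`.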

But is this all the data you need for demand forecasting? Yes and no. Of course, you can work with this data and make some predictions, and if the relations between the series are not complex, a simple model might work. But if you are reading this tutorial, you are probably interested in predicting demand when the data is not as simple. In this case, there is additional information that can be a game changer if you have access to it:

- Historical stock data: It is crucial to be aware of when stockouts occur, as the demand could still be high when sales data doesn’t reflect it.

- Promotions data: Discounts and promotions can also affect demand as they affect the customers’ shopping behavior.

- Events data: As discussed later, one can extract time features from the date index. However, holiday data or special dates can also condition consumption.

- Other domain data: Any other data that could affect the demand for the products you are working with can be relevant to the task.

Let’s code!

For this tutorial, we will work with monthly sales data aggregated by product and sale location. This example dataset is from the Stallion Kaggle Competition and records beer products (SKU) distributed to retailers through wholesalers (Agencies). The first step is to format the dataset and select the columns that we want to use for training the models. As you can see in the code snippet, we are combining all the events columns into one called ‘Special_days’ for simplicity. As previously mentioned, this dataset lacks stock data, so if stockouts occurred we could be misinterpreting the real demand.

# Load data with pandas
import numpy as np
import pandas as pd

# local_path is the folder that contains the competition CSV files
sales_data = pd.read_csv(f'{local_path}/price_sales_promotion.csv')
volume_data = pd.read_csv(f'{local_path}/historical_volume.csv')
events_data = pd.read_csv(f'{local_path}/event_calendar.csv')

# Merge all data
dataset = pd.merge(volume_data, sales_data, on=['Agency','SKU','YearMonth'], how='left')
dataset = pd.merge(dataset, events_data, on='YearMonth', how='left')

# Datetime
dataset.rename(columns={'YearMonth': 'Date', 'SKU': 'Product'}, inplace=True)
dataset['Date'] = pd.to_datetime(dataset['Date'], format='%Y%m')

# Format discounts
dataset['Discount'] = dataset['Promotions']/dataset['Price']
dataset = dataset.drop(columns=['Promotions','Sales'])

# Format events: a month is flagged if any of the event columns is set
special_days_columns = ['Easter Day','Good Friday','New Year','Christmas','Labor Day','Independence Day','Revolution Day Memorial','Regional Games ','FIFA U-17 World Cup','Football Gold Cup','Beer Capital','Music Fest']
dataset['Special_days'] = dataset[special_days_columns].max(axis=1)
dataset = dataset.drop(columns=special_days_columns)

Have you checked for wrong values?

While this part is more obvious, it’s still worth mentioning, as it can avoid feeding wrong data into our models. In transactional data, look for zero-price transactions, sales volume larger than the remaining stock, transactions of discontinued products, and similar.

Are you forecasting sales or demand?

This is a key distinction we should make when forecasting demand, as the goal is to foresee the demand for products to optimize re-stocking. If we look at sales without observing the stock values, we could be underestimating demand when stockouts occur, thus introducing bias into our models. In this case, we can ignore transactions after a stockout or try to fill those values correctly, for example, with a moving average of the demand.
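As an illustration of the second option, the sketch below replaces the sales observed during a stockout with a moving average of the previous known values. The `sales` and `stock` columns and the three-period window are assumptions made for this example; the dataset used in this tutorial has no stock information.

```python
import pandas as pd

# Hypothetical single series: monthly sales and the stock level of each period.
df = pd.DataFrame({
    "sales": [10.0, 12.0, 11.0, 0.0, 13.0],
    "stock": [5, 4, 6, 0, 7],
})

# Treat sales observed during a stockout as unknown demand, not as zero demand.
stockout = df["stock"] == 0
demand = df["sales"].mask(stockout)

# Average of up to 3 previous known values.
# shift(1) keeps the current period out of its own estimate.
estimate = demand.rolling(window=3, min_periods=1).mean().shift(1)

# Only the stockout periods are replaced by the estimate.
df["demand"] = demand.fillna(estimate)
print(df)
```

With panel data the same logic would be applied per series, for example inside a `groupby`. Long runs of consecutive stockouts would still leave gaps and need a different strategy.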

Let’s code!

In the case of the dataset selected for this tutorial, the preprocessing is quite simple as we don’t have stock data. We need to correct zero-price transactions by filling them with the correct value and fill the missing values for the discount column.

# Sort first, so that forward and backward fills follow chronological order within each series
dataset = dataset.sort_values(by=['Agency','Product','Date']).reset_index(drop=True)

# Fill prices: treat zero prices as missing, then fill them from neighbouring months
dataset.Price = np.where(dataset.Price==0, np.nan, dataset.Price)
dataset.Price = dataset.groupby(['Agency', 'Product'])['Price'].ffill()
dataset.Price = dataset.groupby(['Agency', 'Product'])['Price'].bfill()

# Fill discounts
dataset.Discount = dataset.Discount.fillna(0)

Do you need to forecast all products?

Depending on some conditions such as budget, cost savings and the models you are using, you might not want to forecast the whole catalog of products. Let’s say after experimenting, you decide to work with neural networks. These are usually costly to train, and take more time and lots of resources. If you choose to train and forecast the complete set of products, the costs of your solution will increase, maybe even making it not worth investing in for your company. In this case, a good alternative is to segment the products based on specific criteria, for example using your model to forecast just the products that produce the highest volume of income. The demand for the remaining products could be predicted using a simpler and cheaper model.

Can you extract any more relevant information?

Feature extraction can be applied in any time series task, as you can extract some interesting variables from the date index. Particularly, in demand forecasting tasks, these features are interesting as some consumer habits could be seasonal. Extracting the day of the week, the week of the month, or the month of the year could be interesting to help your model identify these patterns. It is key to encode these features correctly, and I advise you to read about cyclical encoding as it could be more suitable in some situations for time features.
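For reference, this is a minimal sketch of cyclical encoding for the month of the year, using a sine and cosine pair. The date range and column names are illustrative. The point is that December and January end up as neighbours, which a plain 1 to 12 integer does not capture.

```python
import numpy as np
import pandas as pd

# One row per month of an example year.
dates = pd.date_range("2024-01-01", periods=12, freq="MS")
features = pd.DataFrame({"month": dates.month.to_numpy()}, index=dates)

# Map month 1..12 onto a circle: each month becomes a point (sin, cos).
angle = 2 * np.pi * (features["month"] - 1) / 12
features["month_sin"] = np.sin(angle)
features["month_cos"] = np.cos(angle)
print(features.round(3))
```

The same transformation works for the day of the week (period 7) or the hour of the day (period 24) by changing the divisor.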

Let’s code!

The first thing we are doing in this tutorial is to segment our products and keep only those that are high-rotation. Doing this step before performing feature extraction can help reduce performance costs when you have too many low-rotation series that you are not going to use. For computing rotation, we are only going to use train data. For that, we define the splits of the data beforehand. Notice that we have two dates for the validation set: VAL_DATE_IN indicates those dates that also belong to the training set but can be used as input of the validation set, and VAL_DATE_OUT indicates from which point the timesteps will be used to evaluate the output of the models. In this case, we tag as high-rotation all series that have sales 75% of the year, but you can play around with the implemented function in the source code. After that, we perform a second segmentation, to ensure that we have enough historical data to train the models.

# Split dates
TEST_DATE = pd.Timestamp('2017-07-01')
VAL_DATE_OUT = pd.Timestamp('2017-01-01')
VAL_DATE_IN = pd.Timestamp('2016-01-01')
MIN_TRAIN_DATE = pd.Timestamp('2015-06-01')

# Rotation (rotation_tags is the function implemented in the source code), computed on train data only
rotation_values = rotation_tags(dataset[dataset.Date<VAL_DATE_OUT], interval_length_list=[365], threshold_list=[0.75])
dataset = dataset.merge(rotation_values, on=['Agency','Product'], how='left')
dataset = dataset[dataset.Rotation=='high'].reset_index(drop=True)
dataset = dataset.drop(columns=['Rotation'])

# History: drop the months before the first sale and keep series that start early enough
first_transactions = dataset[dataset.Volume!=0].groupby(['Agency','Product'], as_index=False).agg(
    First_transaction = ('Date', 'min'),
)
dataset = dataset.merge(first_transactions, on=['Agency','Product'], how='left')
dataset = dataset[dataset.Date>=dataset.First_transaction]
dataset = dataset[MIN_TRAIN_DATE>=dataset.First_transaction].reset_index(drop=True)
dataset = dataset.drop(columns=['First_transaction'])
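The rotation function itself lives in the source code and is not reproduced here. To give an idea of what such a tag could look like, below is a simplified stand-in. `tag_rotation` is a hypothetical helper, not the tutorial’s `rotation_tags`, and it assumes that “high rotation” means the share of months with non-zero volume reaches a threshold.

```python
import pandas as pd

def tag_rotation(df, threshold=0.75):
    """Tag each (Agency, Product) series as 'high' or 'low' rotation.

    Hypothetical helper: a series is 'high' when the share of rows
    (months) with non-zero Volume is at least `threshold`.
    """
    share = (
        df.assign(has_sales=df["Volume"] > 0)
        .groupby(["Agency", "Product"], as_index=False)["has_sales"]
        .mean()
    )
    share["Rotation"] = share["has_sales"].map(
        lambda s: "high" if s >= threshold else "low"
    )
    return share[["Agency", "Product", "Rotation"]]

# Toy data: A1 sells in 3 of 4 months, A2 in 1 of 4 months.
toy = pd.DataFrame({
    "Agency": ["A1"] * 4 + ["A2"] * 4,
    "Product": ["S1"] * 8,
    "Volume": [5, 0, 7, 3, 0, 0, 2, 0],
})
tags = tag_rotation(toy)
print(tags)
```

The result has one row per series, so it can be merged back on `['Agency','Product']` in the same way as in the snippet above.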

As we are working with monthly aggregated data, there aren’t many time features to be extracted. In this case, we include the position, which is just a numerical index of the order of the series. Time features can be computed at train time by specifying them to Darts via encoders. Moreover, we also compute the moving average and exponential moving average of the previous four months.

dataset['EMA_4'] = dataset.groupby(['Agency','Product'], group_keys=False).apply(lambda group: group.Volume.ewm(span=4, adjust=False).mean())
dataset['MA_4'] = dataset.groupby(['Agency','Product'], group_keys=False).apply(lambda group: group.Volume.rolling(window=4, min_periods=1).mean())

# Darts' encoders
from darts.dataprocessing.transformers import Scaler

encoders = {
    "position": {"past": ["relative"], "future": ["relative"]},
    "transformer": Scaler(),
}

Have you defined a baseline set of predictions?

As in other use cases, before training any fancy models, you need to establish a baseline that you want to overcome. Usually, when choosing a baseline model, you should aim for something simple that barely has any costs. A common practice in this field is using the moving average of demand over a time window as a baseline. This baseline can be computed without requiring any models, but for code simplicity, in this tutorial, we will use the Darts’ baseline model, NaiveMovingAverage.
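To show that this baseline really needs no model, here is a minimal sketch in plain Python. `moving_average_forecast` is a hypothetical helper written for this example. It assumes a recursive scheme in which each prediction is appended to the window before computing the next one, which is one common way to extend a moving average over several steps.

```python
def moving_average_forecast(history, window, horizon):
    """Forecast `horizon` steps, each as the mean of the last `window` values.

    Predictions are fed back into the window, so later steps average over
    earlier predictions as well as observed values.
    """
    values = list(history)
    forecast = []
    for _ in range(horizon):
        recent = values[-window:]
        next_value = sum(recent) / len(recent)
        forecast.append(next_value)
        values.append(next_value)
    return forecast

# First step: mean of (10, 12, 14). Second step: mean of (12, 14, first step).
print(moving_average_forecast([10, 12, 14], window=3, horizon=2))
```

Here `window` plays the role of the input length and `horizon` the role of the number of months to predict.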

Is your model local or global?

You are working with multiple time series. Now, you can choose to train a local model for each of these time series or train just one global model for all the series. There is no ‘right’ answer; both work depending on your data. If you have data that you believe has similar behaviors when grouped by store, types of products, or other categorical features, you might benefit from a global model. Moreover, if you have a very high volume of series and you want to use models that are more costly to store once trained, you may also prefer a global model. However, if after analyzing your data you believe there are no common patterns between series, your volume of series is manageable, or you are not using complex models, choosing local models may be best.

What libraries and models did you choose?

There are many options for working with time series. In this tutorial, I suggest using Darts. Assuming you are working with Python, this forecasting library is very easy to use. It provides tools for managing time series data, splitting data, managing grouped time series, and performing different analyses. It offers a wide variety of global and local models, so you can run experiments without switching libraries. Examples of the available options are baseline models, statistical models like ARIMA or Prophet, Scikit-learn-based models, PyTorch-based models, and ensemble models. Interesting options are models like Temporal Fusion Transformer (TFT) or Time-series Dense Encoder (TiDE), which can learn patterns between grouped series, supporting categorical covariates.

Let’s code!

The first step to start using the different Darts models is to turn the Pandas DataFrames into Darts time series objects and split them correctly. To do so, I have implemented two different functions that use Darts’ functionalities to perform these operations. The features of prices, discounts, and events will be known when forecasting occurs, while for calculated features we will only know past values.

# Darts format
series_raw, series, past_cov, future_cov = to_darts_time_series_group(
    dataset=dataset,
    target='Volume',
    time_col='Date',
    group_cols=['Agency','Product'],
    past_cols=['EMA_4','MA_4'],
    future_cols=['Price','Discount','Special_days'],
    freq='MS', # first day of each month
    encode_static_cov=True, # so that the models can use the categorical variables (Agency & Product)
)

# Split
train_val, test = split_grouped_darts_time_series(
    series=series,
    split_date=TEST_DATE
)
train, _ = split_grouped_darts_time_series(
    series=train_val,
    split_date=VAL_DATE_OUT
)
_, val = split_grouped_darts_time_series(
    series=train_val,
    split_date=VAL_DATE_IN
)
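The overlap between the training and validation sets can be easier to see on a single series. The sketch below reproduces the three split dates with plain pandas. It assumes that each split date is the first timestamp of the later part, which may differ in detail from the helper functions in the source code.

```python
import pandas as pd

TEST_DATE = pd.Timestamp("2017-07-01")
VAL_DATE_OUT = pd.Timestamp("2017-01-01")
VAL_DATE_IN = pd.Timestamp("2016-01-01")

# One monthly series covering 2015 to 2017 (the values are placeholders).
months = pd.date_range("2015-01-01", "2017-12-01", freq="MS")
series = pd.Series(range(len(months)), index=months)

train_val = series[series.index < TEST_DATE]
test = series[series.index >= TEST_DATE]
train = train_val[train_val.index < VAL_DATE_OUT]
# Validation starts at VAL_DATE_IN so that its first months can serve as model
# input; only the points from VAL_DATE_OUT onwards are unseen during training.
val = train_val[train_val.index >= VAL_DATE_IN]

overlap = train.index.intersection(val.index)
print(len(train), len(val), len(test), len(overlap))
```

With these dates, the validation set shares its first 12 months with the training set, which matches an input window of 12 months, and its last 6 months are used to score the 6-month output window.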

The first model we are going to use is the NaiveMovingAverage baseline model, to which we will compare the rest of our models. This model is really fast as it doesn’t learn any patterns and just performs a moving average forecast given the input and output dimensions.

maes_baseline, time_baseline, preds_baseline = eval_local_model(train_val, test, NaiveMovingAverage, mae, prediction_horizon=6, input_chunk_length=12)

Normally, before jumping into deep learning, you would try using simpler and less costly models, but in this tutorial, I wanted to focus on two special deep learning models that have worked well for me. I used both of these models to forecast the demand for hundreds of products across multiple stores by using daily aggregated sales data and different static and continuous covariates, as well as stock data. It is important to note that these models work better than others specifically in long-term forecasting.

The first model is the Temporal Fusion Transformer. This model allows you to work with lots of time series simultaneously (i.e., it is a global model) and is very flexible when it comes to covariates. It works with static, past (the values are only known in the past), and future (the values are known in both the past and future) covariates. It manages to learn complex patterns and it supports probabilistic forecasting. The only drawback is that, while it is well-optimized, it can be costly to tune and train. In my experience, it can give very good results but the process of tuning the hyperparameters takes too much time if you are short on resources. In this tutorial, we are training the TFT with mostly the default parameters, and the same input and output windows that we used for the baseline model.

import time
from darts.models import TFTModel
from darts.utils.losses import MAELoss
from pytorch_lightning.callbacks import EarlyStopping

# PyTorch Lightning Trainer arguments
early_stopping_args = {
    "monitor": "val_loss",
    "patience": 50,
    "min_delta": 1e-3,
    "mode": "min",
}

pl_trainer_kwargs = {
    "max_epochs": 200,
    #"accelerator": "gpu", # uncomment for gpu use
    "callbacks": [EarlyStopping(**early_stopping_args)],
    "enable_progress_bar":True
}

common_model_args = {
    "output_chunk_length": 6,
    "input_chunk_length": 12,
    "pl_trainer_kwargs": pl_trainer_kwargs,
    "save_checkpoints": True, # checkpoint to retrieve the best performing model state
    "force_reset": True,
    "batch_size": 128,
    "random_state": 42,
}

# TFT params
best_hp = {
    'optimizer_kwargs': {'lr':0.0001},
    'loss_fn': MAELoss(),
    'use_reversible_instance_norm': True,
    'add_encoders':encoders,
}

# Train
start = time.time()
## COMMENT TO LOAD PRE-TRAINED MODEL
fit_mixed_covariates_model(
    model_cls = TFTModel,
    common_model_args = common_model_args,
    specific_model_args = best_hp,
    model_name = 'TFT_model',
    past_cov = past_cov,
    future_cov = future_cov,
    train_series = train,
    val_series = val,
)
time_tft = time.time() - start

# Predict
best_tft = TFTModel.load_from_checkpoint(model_name='TFT_model', best=True)
preds_tft = best_tft.predict(
    series = train_val,
    past_covariates = past_cov,
    future_covariates = future_cov,
    n = 6
)
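The early-stopping arguments passed to the trainer above (a monitored loss, a patience, and a minimum delta) can be hard to picture. The following sketch is a simplified model of that stopping rule in plain Python, not the PyTorch Lightning implementation. `early_stopping_epoch` and the loss values are made up for this example.

```python
def early_stopping_epoch(val_losses, patience, min_delta):
    """Return the 1-based epoch at which training would stop, or None.

    A loss counts as an improvement only if it beats the best loss so far
    by more than `min_delta`. Training stops after `patience` consecutive
    epochs without improvement.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None

# Illustrative validation losses: the tiny changes after epoch 2 do not count.
losses = [1.0, 0.9, 0.8995, 0.8999, 0.9]
print(early_stopping_epoch(losses, patience=2, min_delta=1e-3))
```

A larger patience gives a slowly converging model more room, at the price of extra epochs once it has stopped improving.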

The second model is the Time-series Dense Encoder. This model is a little bit more recent than the TFT and is built with dense layers instead of LSTM layers, which makes the training of the model much less time-consuming. The Darts implementation also supports all types of covariates and probabilistic forecasting, as well as multiple time series. The paper on this model shows that it can match or outperform transformer-based models on forecasting benchmarks. In my case, as it was much less costly to tune, I managed to obtain better results with TiDE than I did with the TFT model in the same amount of time or less. Once again for this tutorial, we are just doing a first run with mostly default parameters. Note that for TiDE the number of epochs needed is usually smaller than for the TFT.

from darts.models import TiDEModel

# PyTorch Lightning Trainer arguments
early_stopping_args = {
    "monitor": "val_loss",
    "patience": 10,
    "min_delta": 1e-3,
    "mode": "min",
}

pl_trainer_kwargs = {
    "max_epochs": 50,
    #"accelerator": "gpu", # uncomment for gpu use
    "callbacks": [EarlyStopping(**early_stopping_args)],
    "enable_progress_bar":True
}

common_model_args = {
    "output_chunk_length": 6,
    "input_chunk_length": 12,
    "pl_trainer_kwargs": pl_trainer_kwargs,
    "save_checkpoints": True, # checkpoint to retrieve the best performing model state
    "force_reset": True,
    "batch_size": 128,
    "random_state": 42,
}

# TiDE params
best_hp = {
    'optimizer_kwargs': {'lr':0.0001},
    'loss_fn': MAELoss(),
    'use_layer_norm': True,
    'use_reversible_instance_norm': True,
    'add_encoders':encoders,
}

# Train
start = time.time()
## COMMENT TO LOAD PRE-TRAINED MODEL
fit_mixed_covariates_model(
    model_cls = TiDEModel,
    common_model_args = common_model_args,
    specific_model_args = best_hp,
    model_name = 'TiDE_model',
    past_cov = past_cov,
    future_cov = future_cov,
    train_series = train,
    val_series = val,
)
time_tide = time.time() - start

# Predict
best_tide = TiDEModel.load_from_checkpoint(model_name='TiDE_model', best=True)
preds_tide = best_tide.predict(
    series = train_val,
    past_covariates = past_cov,
    future_covariates = future_cov,
    n = 6
)

How are you evaluating the performance of your model?

While typical time series metrics are useful for evaluating how good your model is at forecasting, it is recommended to go a step further. First, when evaluating against a test set, you should discard all series that have stockouts, as you won’t be comparing your forecast against real data. Second, it is also interesting to incorporate domain knowledge or KPIs into your evaluation. One key metric could be how much money you would be earning with your model by avoiding stockouts. Another key metric could be how much money you are saving by avoiding overstocking short shelf-life products. Depending on the stability of your prices, you could even train your models with a custom loss function, such as a price-weighted Mean Absolute Error (MAE) loss.
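As a rough illustration of a price-weighted MAE used as an evaluation metric, the sketch below multiplies each absolute error by the unit price before averaging. This particular definition (no normalization, result in money units) and the numbers are my assumptions for the example. Other weightings are possible, and a training loss would need the equivalent written for the deep learning framework.

```python
import numpy as np

def price_weighted_mae(actual, predicted, prices):
    """Mean absolute error with each error multiplied by the unit price."""
    actual, predicted, prices = map(np.asarray, (actual, predicted, prices))
    return float(np.mean(prices * np.abs(actual - predicted)))

# Two products with the same volume error but very different prices.
actual = [100, 50]
predicted = [90, 60]
prices = [2.0, 10.0]

plain_mae = float(np.mean(np.abs(np.asarray(actual) - np.asarray(predicted))))
weighted_mae = price_weighted_mae(actual, predicted, prices)
print(plain_mae, weighted_mae)
```

Both products contribute the same error to the plain MAE, while the weighted version is dominated by the expensive product. This is why the ranking of models can change between the two metrics.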

Will your model’s predictions deteriorate with time?

Dividing your data into a train, validation, and test split is not enough for evaluating the performance of a model that could go into production. By just evaluating a short window of time with the test set, your model choice is biased by how well your model performs in a very specific predictive window. Darts provides an easy-to-use implementation of backtesting, allowing you to simulate how your model would perform over time by forecasting moving windows of time. With backtesting you can also simulate the retraining of the model every N steps.
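The idea behind backtesting can be shown without any library. The sketch below moves a forecast origin through a series and scores a flat moving-average forecast at each position. `rolling_origin_mae`, its parameters, and the toy series are hypothetical and only meant to illustrate the mechanics.

```python
def rolling_origin_mae(values, start, horizon, stride, window):
    """Score a flat moving-average forecast at successive forecast origins.

    At each origin the forecaster sees values[:origin] and predicts the next
    `horizon` points with the mean of the last `window` observations.
    Returns one MAE per origin.
    """
    errors = []
    origin = start
    while origin + horizon <= len(values):
        recent = values[:origin][-window:]
        level = sum(recent) / len(recent)
        actual = values[origin:origin + horizon]
        errors.append(sum(abs(a - level) for a in actual) / horizon)
        origin += stride
    return errors

toy_series = [10, 12, 11, 13, 12, 14, 13, 15]
maes_per_origin = rolling_origin_mae(toy_series, start=4, horizon=2, stride=2, window=2)
print(maes_per_origin)
```

Looking at the error per origin, rather than at a single test window, shows whether a model is consistently good or just lucky on one period.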

Let’s code!

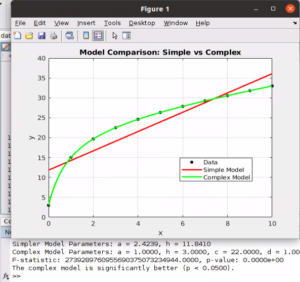

If we look at our models’ results in terms of MAE across all series we can see that the clear winner is TiDE, as it manages to reduce the baseline’s error the most while keeping the time cost fairly low. However, let’s say that our beer company’s best interest is to reduce the monetary cost of stockouts and overstocking equally. In that case, we can evaluate the predictions using a price-weighted MAE.

After computing the price-weighted MAE for all series, TiDE is still the best model, although it could have been different. If we compute the improvement of using TiDE with respect to the baseline model, in terms of MAE it is 6.11%, but in terms of monetary costs the improvement increases a little bit. Conversely, when looking at the improvement when using TFT, the improvement is greater when looking at just sales volume rather than when taking prices into the calculation.

For this dataset, we aren’t using backtesting to compare predictions because of the limited amount of data due to it being monthly aggregated. However, I encourage you to perform backtesting with your projects if possible. In the source code, I include this function to easily perform backtesting with Darts:
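For clarity, the improvement over the baseline can be read as a relative error reduction. The helper below is a small sketch of that arithmetic. The numbers in the example are placeholders and not the results of this tutorial.

```python
def improvement_pct(baseline_error, model_error):
    """Relative error reduction with respect to the baseline, in percent."""
    return 100.0 * (baseline_error - model_error) / baseline_error

# Placeholder errors: a model that lowers the baseline error from 200 to 180.
print(improvement_pct(200.0, 180.0))
```

The same function applies to the plain MAE and to the price-weighted MAE, which makes the two improvements directly comparable.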

import numpy as np
from darts.metrics import mae

def backtesting(model, series, past_cov, future_cov, start_date, horizon, stride):
    historical_backtest = model.historical_forecasts(
        series, past_cov, future_cov,
        start=start_date,
        forecast_horizon=horizon,
        stride=stride, # Predict every N months
        retrain=False, # Keep the model fixed (no retraining)
        overlap_end=False,
        last_points_only=False
    )
    maes = model.backtest(series, historical_forecasts=historical_backtest, metric=mae)
    return np.mean(maes)

How will you provide the predictions?

In this tutorial, it is assumed that you are already working with a predefined forecasting horizon and frequency. If these were not provided, defining them is a separate use case on its own, where delivery or supplier lead times should also be taken into account. Knowing how often your model’s forecast is needed is important as it could require a different level of automation. If your company needs predictions every two months, maybe investing time, money, and resources in the automation of this task isn’t necessary. However, if your company needs predictions twice a week and your model takes longer to make these predictions, automating the process can save future efforts.

Will you deploy the model in the company’s cloud services?

Following the previous advice, if you and your company decide to deploy the model and put it into production, it is a good idea to follow MLOps principles. This would allow anyone to easily make changes in the future, without disrupting the whole system. Moreover, it is also important to monitor the model’s performance once in production, as concept drift or data drift could happen. Nowadays numerous cloud services offer tools that manage the development, deployment, and monitoring of machine learning models. Examples of these are Azure Machine Learning and Amazon Web Services.