Integrate foundation models into your code with Amazon Bedrock

The rise of large language models (LLMs) and foundation models (FMs) has revolutionized the field of natural language processing (NLP) and artificial intelligence (AI). These powerful models, trained on vast amounts of data, can generate human-like text, answer questions, and even engage in creative writing tasks. However, training and deploying such models from scratch is a complex and resource-intensive process, often requiring specialized expertise and significant computational resources.

Enter Amazon Bedrock, a fully managed service that provides developers with seamless access to cutting-edge FMs through simple APIs. Amazon Bedrock streamlines the integration of state-of-the-art generative AI capabilities, offering pre-trained models that can be customized and deployed without the need for extensive model training from scratch. The service retains the flexibility for model customization while simplifying the process, making it easy for developers to use cutting-edge generative AI technologies in their applications. With Amazon Bedrock, you can integrate advanced NLP features, such as language understanding, text generation, and question answering, into your applications.

In this post, we explore how to integrate Amazon Bedrock FMs into your code base, enabling you to build powerful AI-driven applications with ease. We guide you through the process of setting up the environment, creating the Amazon Bedrock client, prompting and wrapping code, invoking the models, and using various models and streaming invocations. By the end of this post, you’ll have the knowledge and tools to harness the power of Amazon Bedrock FMs, accelerating your product development timelines and empowering your applications with advanced AI capabilities.

Solution overview

Amazon Bedrock provides a simple and efficient way to use powerful FMs through APIs, without the need for training custom models. For this post, we run the code in a Jupyter notebook within VS Code and use Python. The process of integrating Amazon Bedrock into your code base involves the following steps:

- Set up your development environment by importing the necessary dependencies and creating an Amazon Bedrock client. This client will serve as the entry point for interacting with Amazon Bedrock FMs.

- After the Amazon Bedrock client is set up, you can define prompts or code snippets that will be used to interact with the FMs. These prompts can include natural language instructions or code snippets that the model will process and generate output based on.

- With the prompts defined, you can invoke the Amazon Bedrock FM by passing the prompts to the client. Amazon Bedrock supports various models, each with its own strengths and capabilities, allowing you to choose the most suitable model for your use case.

- Depending on the model and the prompts provided, Amazon Bedrock will generate output, which can include natural language text, code snippets, or a combination of both. You can then process and integrate this output into your application as needed.

- For certain models and use cases, Amazon Bedrock supports streaming invocations, which let you receive the model’s output in real time as it is generated. This can be particularly useful for conversational AI or interactive applications where you need to exchange multiple prompts and responses with the model.

Throughout this post, we provide detailed code examples and explanations for each step, helping you seamlessly integrate Amazon Bedrock FMs into your code base. By using these powerful models, you can enhance your applications with advanced NLP capabilities, accelerate your development process, and deliver innovative solutions to your users.

Prerequisites

Before you dive into the integration process, make sure you have the following prerequisites in place:

- AWS account – You’ll need an AWS account to access and use Amazon Bedrock. If you don’t have one, you can create a new account.

- Development environment – Set up an integrated development environment (IDE) with your preferred coding language and tools. You can interact with Amazon Bedrock using AWS SDKs available in Python, Java, Node.js, and more.

- AWS credentials – Configure your AWS credentials in your development environment to authenticate with AWS services. You can find instructions on how to do this in the AWS documentation for your chosen SDK. We walk through a Python example in this post.

With these prerequisites in place, you’re ready to start integrating Amazon Bedrock FMs into your code.

In your IDE, create a new file. For this example, we use a Jupyter notebook (Kernel: Python 3.12.0).

In the following sections, we demonstrate how to implement the solution in a Jupyter notebook.

Set up the environment

To begin, import the necessary dependencies for interacting with Amazon Bedrock. The following is an example of how you can do this in Python.

The first step is to import boto3 and json:

Next, create an instance of the Amazon Bedrock client. This client will serve as the entry point for interacting with the FMs. The following is a code example of how to create the client:
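The original code cells are not reproduced here, so the following is a minimal sketch of these two steps. It assumes the `bedrock-runtime` service name and the `us-east-1` Region; the Region is an assumption, so use one where Amazon Bedrock is enabled for your account. The helper name `create_bedrock_client` is illustrative only.

```python
import json  # used later to build request bodies and parse responses


def create_bedrock_client(region_name="us-east-1"):
    """Return a boto3 client for the Amazon Bedrock runtime API.

    The default Region is an assumption made for illustration.
    """
    # Imported here so the helper can be defined before boto3 is installed;
    # a top-level `import boto3` works just as well in a notebook.
    import boto3

    return boto3.client(service_name="bedrock-runtime", region_name=region_name)
```

In a notebook cell you would then call `bedrock_client = create_bedrock_client()`. Credentials are resolved from the AWS profile or environment variables you configured as part of the prerequisites.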

Define prompts and code snippets

With the Amazon Bedrock client set up, define prompts and code snippets that will be used to interact with the FMs. These prompts can include natural language instructions or code snippets that the model will process and generate output based on.

In this example, we ask the model, “Hello, who are you?”

To send the prompt to the API endpoint, you need some keyword arguments to pass in. You can get these arguments from the Amazon Bedrock console.

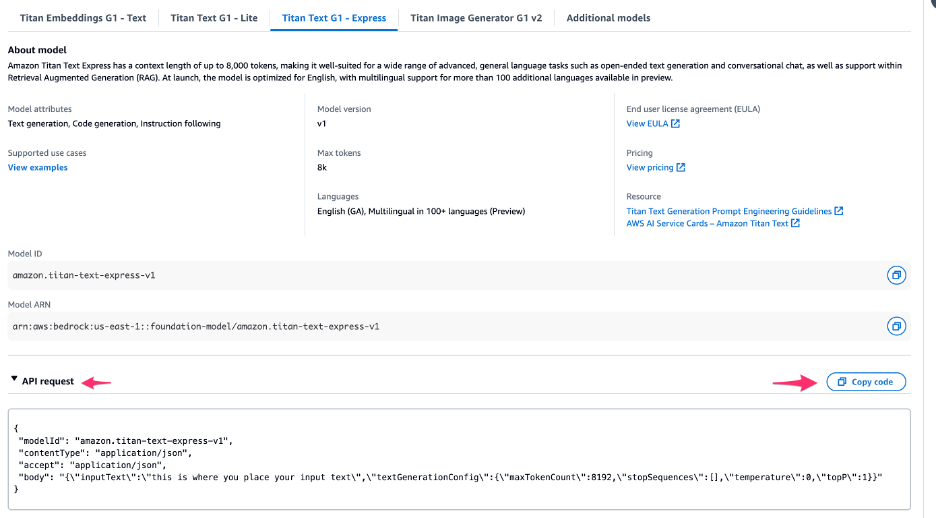

- On the Amazon Bedrock console, choose Base models in the navigation pane.

- Select Titan Text G1 – Express.

- Choose the model name (Titan Text G1 – Express) and go to the API request.

- Copy the API request:

- Insert this code in the Jupyter notebook with the following minor changes:

  - We assign the API request to keyword arguments (kwargs).

  - The next change is to the prompt. We replace “this is where you place your input text” with “Hello, who are you?”

- Print the keyword arguments:

This should give you the following output:

{'modelId': 'amazon.titan-text-express-v1', 'contentType': 'application/json', 'accept': 'application/json', 'body': '{"inputText":"Hello, who are you?","textGenerationConfig":{"maxTokenCount":8192,"stopSequences":[],"temperature":0,"topP":1}}'}
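The keyword arguments printed above can be built as in the following sketch. The field values are taken from that output; using `json.dumps` to serialize the body (rather than pasting the console's string verbatim) is a choice made here for readability, so the printed spacing may differ slightly.

```python
import json

prompt = "Hello, who are you?"

kwargs = {
    "modelId": "amazon.titan-text-express-v1",
    "contentType": "application/json",
    "accept": "application/json",
    # The body must be a JSON string, so the request payload is serialized here.
    "body": json.dumps(
        {
            "inputText": prompt,
            "textGenerationConfig": {
                "maxTokenCount": 8192,
                "stopSequences": [],
                "temperature": 0,
                "topP": 1,
            },
        }
    ),
}

print(kwargs)
```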

Invoke the model

With the prompt defined, you can now invoke the Amazon Bedrock FM.

- Pass the prompt to the client:

This invokes the Amazon Bedrock model with the provided prompt and prints the response, which contains a streaming body object.

{'ResponseMetadata': {'RequestId': '3cfe2718-b018-4a50-94e3-59e2080c75a3',
'HTTPStatusCode': 200,
'HTTPHeaders': {'date': 'Fri, 18 Oct 2024 11:30:14 GMT',
'content-type': 'application/json',
'content-length': '255',
'connection': 'keep-alive',
'x-amzn-requestid': '3cfe2718-b018-4a50-94e3-59e2080c75a3',
'x-amzn-bedrock-invocation-latency': '1980',
'x-amzn-bedrock-output-token-count': '37',
'x-amzn-bedrock-input-token-count': '6'},
'RetryAttempts': 0},
'contentType': 'application/json',
'body': <botocore.response.StreamingBody at 0x105e8e7a0>}

The preceding Amazon Bedrock runtime invoke_model call works for any FM you choose to invoke.
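As a sketch of the invocation step: the keyword arguments are unpacked into `invoke_model` on the Bedrock runtime client. The wrapper name `invoke` and its parameter names are illustrative; in a notebook you could equally call `bedrock_client.invoke_model(**kwargs)` directly.

```python
def invoke(bedrock_client, request_kwargs):
    """Call invoke_model with the keyword arguments and return the raw response."""
    # The returned dict holds response metadata plus a 'body' entry that is a
    # botocore StreamingBody; its contents still have to be read and decoded.
    return bedrock_client.invoke_model(**request_kwargs)


# Typical notebook usage (requires AWS credentials and model access):
# response = invoke(bedrock_client, kwargs)
# print(response)
```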

- Unpack the JSON string as follows:

You should get a response like the following (this is the response we got from the Titan Text G1 – Express model for the prompt we supplied).

{'inputTextTokenCount': 6, 'results': [{'tokenCount': 37, 'outputText': '\nI am Amazon Titan, a large language model built by AWS. It is designed to assist you with tasks and answer any questions you may have. How may I help you?', 'completionReason': 'FINISH'}]}
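A sketch of the unpacking step: the streaming body is read once and parsed with `json.loads`. The `sample_response` below is a stand-in with abbreviated text so the example runs without calling AWS; with a real response you would pass the dict returned by `invoke_model`. The helper name `read_response_body` is illustrative.

```python
import io
import json


def read_response_body(response):
    """Read the streaming body once and parse it as JSON."""
    # StreamingBody.read() returns bytes and can only be consumed once.
    return json.loads(response["body"].read())


# Stand-in for a real response (assumed shape, abbreviated output text).
sample_payload = {
    "inputTextTokenCount": 6,
    "results": [
        {
            "tokenCount": 37,
            "outputText": "\nI am Amazon Titan.",
            "completionReason": "FINISH",
        }
    ],
}
sample_response = {"body": io.BytesIO(json.dumps(sample_payload).encode("utf-8"))}

response_body = read_response_body(sample_response)
print(response_body["results"][0]["outputText"])
```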

Experiment with different models

Amazon Bedrock offers various FMs, each with its own strengths and capabilities. You specify which model you want to use through the modelId parameter in the request you pass to invoke_model.

- Similar to the previous Titan Text G1 – Express example, get the API request from the Amazon Bedrock console. This time, we use Anthropic’s Claude on Amazon Bedrock.

{"modelId": "anthropic.claude-v2","contentType": "application/json","accept": "*/*","body": "{\"prompt\":\"\\n\\nHuman: Hello world\\n\\nAssistant:\",\"max_tokens_to_sample\":300,\"temperature\":0.5,\"top_k\":250,\"top_p\":1,\"stop_sequences\":[\"\\n\\nHuman:\"],\"anthropic_version\":\"bedrock-2023-05-31\"}"}

Anthropic’s Claude accepts the prompt in a different way (\n\nHuman:), so the API request on the Amazon Bedrock console provides the prompt in the form that Anthropic’s Claude can accept.

- Edit the API request and put it in the keyword argument:

You should get the following response:

{'modelId': 'anthropic.claude-v2', 'contentType': 'application/json', 'accept': '*/*', 'body': '{"prompt":"\\n\\nHuman: we have received some text without any context.\\nWe will need to label the text with a title so that others can quickly see what the text is about \\n\\nHere is the text between these <text></text> XML tags\\n\\n<text>\\nToday I went to the beach and saw a whale. I ate an ice-cream and swam in the sea\\n</text>\\n\\nProvide title between <title></title> XML tags\\n\\nAssistant:","max_tokens_to_sample":300,"temperature":0.5,"top_k":250,"top_p":1,"stop_sequences":["\\n\\nHuman:"],"anthropic_version":"bedrock-2023-05-31"}'}
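The request printed above can be assembled as in the following sketch. The prompt text and parameter values come from that output; building the body with `json.dumps` is a choice made here so that the newlines in the prompt are escaped correctly, and the printed spacing may differ slightly.

```python
import json

# Claude's text-completion format expects the "\n\nHuman:" and
# "\n\nAssistant:" turn markers around the instruction.
prompt = (
    "\n\nHuman: we have received some text without any context.\n"
    "We will need to label the text with a title so that others can quickly "
    "see what the text is about \n\n"
    "Here is the text between these <text></text> XML tags\n\n"
    "<text>\nToday I went to the beach and saw a whale. "
    "I ate an ice-cream and swam in the sea\n</text>\n\n"
    "Provide title between <title></title> XML tags\n\n"
    "Assistant:"
)

kwargs = {
    "modelId": "anthropic.claude-v2",
    "contentType": "application/json",
    "accept": "*/*",
    "body": json.dumps(
        {
            "prompt": prompt,
            "max_tokens_to_sample": 300,
            "temperature": 0.5,
            "top_k": 250,
            "top_p": 1,
            "stop_sequences": ["\n\nHuman:"],
            "anthropic_version": "bedrock-2023-05-31",
        }
    ),
}

print(kwargs)
```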

- With the prompt defined, you can now invoke the Amazon Bedrock FM by passing the prompt to the client:

You should get the following output:

{'ResponseMetadata': {'RequestId': '72d2b1c7-cbc8-42ed-9098-2b4eb41cd14e', 'HTTPStatusCode': 200, 'HTTPHeaders': {'date': 'Thu, 17 Oct 2024 15:07:23 GMT', 'content-type': 'application/json', 'content-length': '121', 'connection': 'keep-alive', 'x-amzn-requestid': '72d2b1c7-cbc8-42ed-9098-2b4eb41cd14e', 'x-amzn-bedrock-invocation-latency': '538', 'x-amzn-bedrock-output-token-count': '15', 'x-amzn-bedrock-input-token-count': '100'}, 'RetryAttempts': 0}, 'contentType': 'application/json', 'body': <botocore.response.StreamingBody at 0x1200b5990>}

- Unpack the JSON string as follows:

This results in the following output, which contains the title for the given text.

{'type': 'completion',
'completion': ' <title>A Day at the Beach</title>',
'stop_reason': 'stop_sequence',
'stop': '\n\nHuman:'}

- Print the completion:

Because the response is returned in the XML tags as you defined, you can consume the response and display it to the client.

' <title>A Day at the Beach</title>'
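One way to consume the tagged completion is sketched below. The helper `extract_title` is hypothetical and not part of any AWS SDK, and `sample_response` is a stand-in that mirrors the response shown above so the example runs without AWS access.

```python
import io
import json
import re


def extract_title(completion):
    """Return the text between <title> tags, or None if the tags are absent."""
    match = re.search(r"<title>(.*?)</title>", completion, flags=re.DOTALL)
    return match.group(1).strip() if match else None


# Stand-in for the Claude response body (assumed shape, taken from the
# output shown above).
sample_payload = {
    "type": "completion",
    "completion": " <title>A Day at the Beach</title>",
    "stop_reason": "stop_sequence",
    "stop": "\n\nHuman:",
}
sample_response = {"body": io.BytesIO(json.dumps(sample_payload).encode("utf-8"))}

response_body = json.loads(sample_response["body"].read())
completion = response_body["completion"]
print(completion)
print(extract_title(completion))
```

Asking the model to wrap its answer in tags keeps the parsing step simple: the application only needs the text between the tags and can ignore any surrounding whitespace or preamble.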

Invoke model with streaming code

For certain models and use cases, Amazon Bedrock supports streaming invocations, which let you receive the model’s output in real time as it is generated. This can be particularly useful for conversational AI or interactive applications where you need to exchange multiple prompts and responses with the model. For example, if you’re asking the FM for an article or story, you might want to stream the output of the generated content.

- Import the dependencies and create the Amazon Bedrock client:

- Define the prompt as follows:

- Edit the API request and put it in the keyword argument as before:

We use the API request of the claude-v2 model.

- You can now invoke the Amazon Bedrock FM by passing the prompt to the client:

We use invoke_model_with_response_stream instead of invoke_model.
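A minimal sketch of consuming the stream, assuming the claude-v2 text-completion response format in which each chunk is a JSON document with a `completion` field. The generator name `stream_completion` and its parameter names are illustrative.

```python
import json


def stream_completion(bedrock_client, request_kwargs):
    """Yield text fragments from a claude-v2 streaming invocation."""
    response = bedrock_client.invoke_model_with_response_stream(**request_kwargs)
    # The body is an event stream; each event that carries model output has a
    # 'chunk' entry whose 'bytes' value is a JSON document.
    for event in response["body"]:
        chunk = event.get("chunk")
        if chunk:
            payload = json.loads(chunk["bytes"])
            # For Claude text completions the generated text is under 'completion'.
            yield payload.get("completion", "")


# Typical notebook usage (requires AWS credentials and model access):
# for text in stream_completion(bedrock_client, kwargs):
#     print(text, end="")
```

Printing each fragment with `end=""` lets the text appear incrementally in the notebook output instead of after the full response has been generated.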

You get a response like the following as streaming output:

Here is a draft article about the fictional planet Foobar: Exploring the Mysteries of Planet Foobar Far off in a distant solar system lies the mysterious planet Foobar. This strange world has confounded scientists and explorers for centuries with its bizarre environments and alien lifeforms. Foobar is slightly larger than Earth and orbits a small, dim red star. From space, the planet appears rusty orange due to its sandy deserts and red rock formations. While the planet looks barren and dry at first glance, it actually contains a diverse array of ecosystems. The poles of Foobar are covered in icy tundra, home to resilient lichen-like plants and furry, six-legged mammals. Moving towards the equator, the tundra slowly gives way to rocky badlands dotted with scrubby vegetation. This arid zone contains ancient dried up riverbeds that point to a once lush environment. The heart of Foobar is dominated by expansive deserts of fine, deep red sand. These deserts experience scorching heat during the day but drop to freezing temperatures at night. Hardy cactus-like plants manage to thrive in this harsh landscape alongside tough reptilian creatures. Oases rich with palm-like trees can occasionally be found tucked away in hidden canyons. Scattered throughout Foobar are pockets of tropical jungles thriving along rivers and wetlands.

Conclusion

In this post, we showed how to integrate Amazon Bedrock FMs into your code base. With Amazon Bedrock, you can use state-of-the-art generative AI capabilities without the need for training custom models, accelerating your development process and enabling you to build powerful applications with advanced NLP features.

Whether you’re building a conversational AI assistant, a code generation tool, or another application that requires NLP capabilities, Amazon Bedrock provides a simple and efficient solution. By using the power of FMs through Amazon Bedrock APIs, you can focus on building innovative solutions and delivering value to your users, without worrying about the underlying complexities of language models.

As you continue to explore and integrate Amazon Bedrock into your projects, remember to stay up to date with the latest updates and features offered by the service. Additionally, consider exploring other AWS services and tools that can complement and enhance your AI-driven applications, such as Amazon SageMaker for machine learning model training and deployment, or Amazon Lex for building conversational interfaces.

To further explore the capabilities of Amazon Bedrock, refer to the following resources:

Share and learn with our generative AI community at community.aws.

Happy coding and building with Amazon Bedrock!

About the Authors

Rajakumar Sampathkumar is a Principal Technical Account Manager at AWS, providing customer guidance on business-technology alignment and supporting the reinvention of their cloud operation models and processes. He is passionate about cloud and machine learning. Raj is also a machine learning specialist and works with AWS customers to design, deploy, and manage their AWS workloads and architectures.


YaduKishore Tatavarthi is a Senior Partner Solutions Architect at Amazon Web Services, supporting customers and partners worldwide. For the past 20 years, he has been helping customers build enterprise data strategies, advising them on generative AI, cloud implementations, migrations, reference architecture creation, data modeling best practices, and data lake/warehouse architectures.
