Understanding RAG Part II: How Traditional RAG Works


Image by Editor | Midjourney & Canva

In the first post in this series, we introduced retrieval augmented generation (RAG), explaining why it became necessary to expand the capabilities of conventional large language models (LLMs). We also briefly outlined the key idea underpinning RAG: retrieving contextually relevant information from external knowledge bases so that LLMs produce accurate and up-to-date information, with fewer hallucinations and without the need to constantly re-train the model.

The second article in this series demystifies the mechanisms by which a traditional RAG system operates. While enhanced and more sophisticated versions of RAG keep appearing almost daily amid today's rapid AI progress, the first step to understanding the latest state-of-the-art RAG approaches is to understand the classic RAG workflow.

The Traditional RAG Workflow

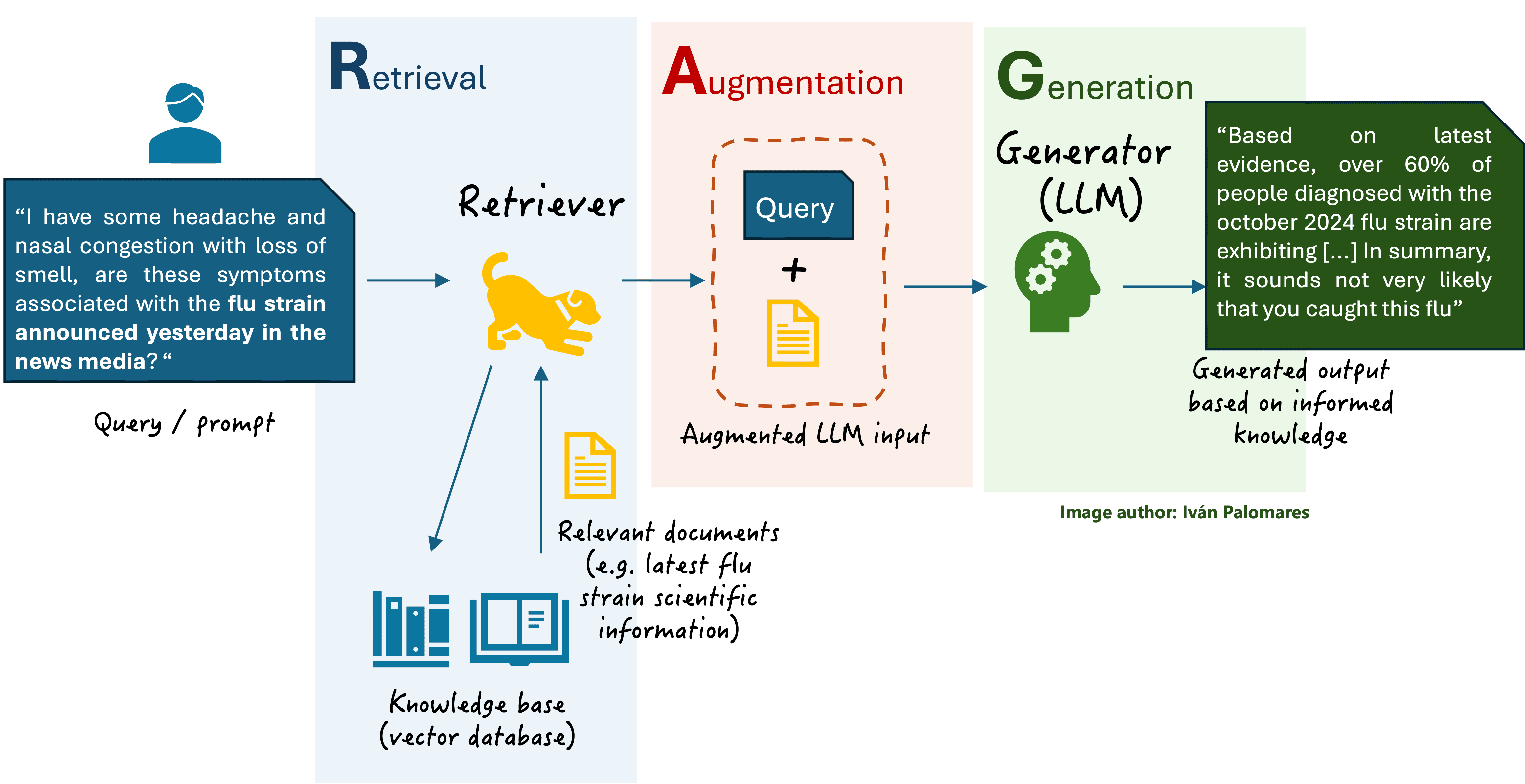

A typical RAG system, as depicted in the diagram, handles three key data-related components:

- An LLM that has acquired knowledge from the data it was trained on, typically millions to billions of text documents.

- A vector database, also called a knowledge base, that stores text documents. But why the name vector database? In RAG, and in natural language processing (NLP) systems as a whole, text is transformed into numerical representations called vectors that capture its semantic meaning. Vectors can represent words, sentences, or entire documents, and they preserve key properties of the original text: two similar vectors correspond to words, sentences, or pieces of text with similar meanings. Storing text as numerical vectors makes the system more efficient, because relevant documents can be found and retrieved quickly.

- Queries or prompts formulated by the user in natural language.

General scheme of a basic RAG system
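To make the idea of storing text as vectors concrete, here is a minimal sketch of a tiny in-memory "vector database". It rests on a loudly labeled assumption: the hypothetical `embed` function just counts words from a fixed toy vocabulary (`VOCAB`) and normalizes the result. A real RAG system would use a learned embedding model whose vectors capture meaning, not just word overlap.

```python
import math
from collections import Counter

# Hypothetical toy vocabulary. Real systems use a learned embedding model
# whose vectors capture meaning; these word-count vectors only capture overlap.
VOCAB = ["cat", "dog", "pet", "stock", "market", "price", "food"]


def embed(text: str) -> list[float]:
    """Map text to a unit-length vector of vocabulary word counts."""
    counts = Counter(text.lower().split())
    vec = [float(counts[word]) for word in VOCAB]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a: list[float], b: list[float]) -> float:
    """Dot product; equals cosine similarity for the non-zero unit vectors embed() returns."""
    return sum(x * y for x, y in zip(a, b))


# A tiny in-memory "vector database": each document is stored with its vector.
documents = [
    "the cat is a pet",
    "the dog needs pet food",
    "the stock market price fell",
]
vector_db = [(doc, embed(doc)) for doc in documents]

query_vec = embed("my pet cat")
for doc, vec in vector_db:
    print(f"{cosine(query_vec, vec):.2f}  {doc}")
```

The loop prints scores of about 1.00, 0.41 and 0.00: the document that shares the most content with the query gets the most similar vector, which is the property a vector database relies on.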

In a nutshell, when the user asks a question in natural language to an LLM-based assistant equipped with a RAG engine, three stages take place between sending the question and receiving the answer:

- Retrieval: a component called the retriever accesses the vector database to find and retrieve documents relevant to the user query.

- Augmentation: the original user query is augmented by incorporating contextual knowledge from the retrieved documents.

- Generation: the LLM, also known as the generator from a RAG viewpoint, receives the user query augmented with relevant contextual information and generates a more precise and truthful text response.
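The three stages above can be sketched as a chain of three small functions. This is a minimal, non-authoritative sketch: `KNOWLEDGE_BASE`, `retrieve`, `augment`, `generate` and `rag_answer` are hypothetical names, retrieval uses simple word overlap as a stand-in for vector similarity search, and `generate` is a placeholder where a real system would call an LLM.

```python
import re

# Hypothetical knowledge base; in a real system these would be text chunks
# stored as vectors in a vector database.
KNOWLEDGE_BASE = [
    "RAG retrieves documents from an external knowledge base.",
    "The retriever compares the query vector with stored vectors.",
    "Paris is the capital of France.",
]


def words(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[str]:
    """Retrieval: rank documents by words shared with the query.
    Word overlap is a stand-in for vector similarity search."""
    q = words(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: len(q & words(doc)), reverse=True)
    return ranked[:k]


def augment(query: str, docs: list[str]) -> str:
    """Augmentation: attach the retrieved context to the original query."""
    context = "\n".join(f"- {doc}" for doc in docs)
    return f"Answer using this context:\n{context}\nQuestion: {query}"


def generate(prompt: str) -> str:
    """Generation: hypothetical placeholder for a call to an LLM."""
    return f"<LLM answer for a {len(prompt)}-character prompt>"


def rag_answer(query: str) -> str:
    """Run the three stages in order."""
    docs = retrieve(query)
    prompt = augment(query, docs)
    return generate(prompt)


question = "What is the capital of France?"
print(augment(question, retrieve(question)))
print(rag_answer(question))
```

Keeping each stage in its own function mirrors the workflow: the retriever or the generator can be swapped out (for example, for a real vector search or a real LLM client) without touching the other stages.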

Inside the Retriever

The retriever is the component of a RAG system that finds relevant information to enhance the final output later generated by the LLM. You can picture it as an enhanced search engine that doesn't just match keywords in the user query to stored documents but understands the meaning behind the query.

The retriever scans a large body of domain knowledge related to the query, stored in vectorized format (numerical representations of text), and pulls out the most relevant pieces of text to build a context that is attached to the original user query. A common technique for identifying relevant knowledge is similarity search: the user query is encoded into a vector representation, and this vector is compared with the stored vectors. Finding the pieces of knowledge most relevant to the user query thus boils down to computing a similarity score, such as cosine similarity, between the query vector and the stored vectors, and keeping the closest (most similar) ones. This is how the retriever pulls context-aware information both efficiently and accurately.
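The similarity search described above can be sketched as a brute-force scan that scores every stored vector against the query vector with cosine similarity and keeps the top k. This is a minimal sketch under stated assumptions: the three-dimensional vectors and the texts in `store` are made up for illustration, and `top_k` is a hypothetical helper, not a vector database API.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the k (score, text) pairs whose vectors are closest to query_vec."""
    scored = ((cosine_similarity(query_vec, vec), text) for text, vec in store)
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


# Made-up stored documents with pretend (precomputed) embeddings.
store = [
    ("How to return an item for a refund.", [0.9, 0.1, 0.0]),
    ("Shipping options and delivery times.", [0.1, 0.9, 0.1]),
    ("Office opening hours on holidays.", [0.0, 0.2, 0.9]),
]
query_vec = [0.8, 0.2, 0.1]  # pretend embedding of "Can I get my money back?"

for score, text in top_k(query_vec, store):
    print(f"{score:.3f}  {text}")
```

This linear scan compares the query with every stored vector, which is fine for a handful of documents. Production vector databases typically rely on approximate nearest-neighbor indexes so that search stays fast over millions of vectors.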

Inside the Generator

The generator in RAG is typically a sophisticated language model, usually an LLM based on the transformer architecture, which takes the augmented input from the retriever and produces an accurate, context-aware, and usually truthful response. Because it incorporates relevant external information, this output often surpasses the quality of a standalone LLM.

Inside the model, the generation process involves both understanding and producing text, handled by components that encode the augmented input and generate output text word by word (more precisely, token by token). Each word is predicted from the preceding ones. This task, performed as the last stage inside the LLM, is known as next-word prediction: predicting the most likely next word so that the generated message stays coherent and relevant.

This post further elaborates on the language generation process carried out by the generator.
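Next-word prediction can be illustrated with a toy greedy decoder. This is a deliberately simplified sketch: the hypothetical table `NEXT_WORD_PROBS` conditions only on the previous word, whereas a real LLM computes a probability distribution over its whole vocabulary with a transformer conditioned on the entire augmented prompt and everything generated so far. Picking the single most likely word at each step is called greedy decoding. It is one decoding strategy among several, since many systems sample from the distribution instead.

```python
# Hypothetical next-word probabilities, conditioned only on the previous word.
# A real LLM derives such a distribution from the full preceding context.
NEXT_WORD_PROBS = {
    "<start>": {"paris": 0.7, "the": 0.3},
    "paris": {"is": 0.9, "has": 0.1},
    "is": {"the": 0.8, "a": 0.2},
    "the": {"capital": 0.6, "city": 0.4},
    "capital": {"of": 0.95, "<end>": 0.05},
    "of": {"france": 0.9, "europe": 0.1},
    "france": {"<end>": 1.0},
}


def generate_greedy(max_words: int = 10) -> list[str]:
    """Repeatedly append the most likely next word until <end> or max_words."""
    words = []
    current = "<start>"
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS.get(current)
        if not candidates:
            break
        # Greedy decoding: choose the word with the highest probability.
        next_word = max(candidates, key=candidates.get)
        if next_word == "<end>":
            break
        words.append(next_word)
        current = next_word
    return words


print(" ".join(generate_greedy()))
```

Running it prints `paris is the capital of france`: each word is chosen only after the previous one has been produced, which is the word-by-word loop described above.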

Looking Ahead

In the next post in this series on understanding RAG, we will explore fusion methods for RAG, which use specialized approaches to combine information from multiple retrieved documents, thereby enriching the context used to generate a response.

One common example of fusion methods in RAG is reranking, which involves scoring and prioritizing multiple retrieved documents by their relevance to the user query before passing the most relevant ones to the generator. This further improves the quality of the augmented context and, in turn, of the responses produced by the language model.
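As a preview, reranking can be sketched as a second scoring pass over the retriever's candidates, keeping only the best few for the generator. In this minimal sketch, `rerank_score` and `rerank` are hypothetical helpers, and the relevance score (the fraction of query words found in a document) is a simple stand-in. Real rerankers often use a dedicated model, such as a cross-encoder, that reads the query and each document together.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))


def rerank_score(query: str, doc: str) -> float:
    """Hypothetical relevance score: fraction of query words present in doc."""
    q = tokens(query)
    return len(q & tokens(doc)) / len(q) if q else 0.0


def rerank(query: str, candidates: list[str], top_n: int = 2) -> list[str]:
    """Re-score first-stage candidates and keep only the top_n for the generator."""
    ranked = sorted(candidates, key=lambda doc: rerank_score(query, doc), reverse=True)
    return ranked[:top_n]


query = "How do I reset my password?"
# Candidates in the (made-up) order a first-stage retriever returned them.
candidates = [
    "Password rules: use at least 12 characters.",
    "Office hours are 9 to 5.",
    'To reset your password, click "Forgot password" on the login page.',
]
for doc in rerank(query, candidates):
    print(doc)
```

The reset instructions move to the top and the irrelevant document is dropped, so the generator receives a shorter, more focused context.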